Watermelon Book (西瓜书) Exercise 7.3: Naive Bayes Classifier + Laplacian Correction

Naive Bayes Classifier with Laplacian correction

The data and code are on my git (the code is my own original work):

https://github.com/qdbszsj/NBC

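A naive Bayes classifier uses Bayes' theorem (obviously). Put plainly, with an example: the probability that a good melon is green, P(green | good), is the number of green melons among the good ones divided by the number of good melons. If a melon is green and also crisp, its score for being a good melon is P(green | good) * P(crisp | good), multiplied by the prior weight of good melons, P(good). Compute the corresponding value for bad melons in the same way, compare the two, and pick the larger one (Watermelon Book p. 151, formula 7.15).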
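Written out, the decision rule of formula 7.15 and its two-attribute instance look roughly like this. The labels good, bad, green and crisp are only names for this example, and "score" is an illustrative name, not notation from the book.

```latex
h_{nb}(\boldsymbol{x}) = \arg\max_{c \in \mathcal{Y}} \; P(c) \prod_{i=1}^{d} P(x_i \mid c)

% two-attribute example: compute one score per class, then take the larger
\mathrm{score}(\text{good}) = P(\text{good}) \, P(\text{green} \mid \text{good}) \, P(\text{crisp} \mid \text{good})
\mathrm{score}(\text{bad}) = P(\text{bad}) \, P(\text{green} \mid \text{bad}) \, P(\text{crisp} \mid \text{bad})
```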

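The function P is time-consuming and is often asked for the same value repeatedly, so I added memoization: results are stored in a map to avoid recomputation. kindsOfAttribute stores the number of possible values of the i-th attribute, which is the amount added to the denominator in the Laplacian correction. continuousPara stores the mean and std of the continuous attributes so that they are not recomputed.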
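A minimal sketch of the two ideas together, on made-up toy data. It uses `functools.lru_cache` from the standard library in place of a hand-written map, and the names `colours_in_class`, `n_values` and `laplace_prob` are hypothetical, chosen only for this illustration.

```python
from functools import lru_cache

# Hypothetical toy data: colour of five melons that all belong to one class.
colours_in_class = ("green", "green", "black", "green", "green")
n_values = 3  # the attribute can take three values: green, black, white

calls = {"count": 0}  # counts how often the function body actually runs


@lru_cache(maxsize=None)
def laplace_prob(value):
    """Laplace-corrected estimate: (count + 1) / (class size + number of values)."""
    calls["count"] += 1
    matches = sum(1 for c in colours_in_class if c == value)
    return (matches + 1) / (len(colours_in_class) + n_values)


p_first = laplace_prob("green")   # computed: (4 + 1) / (5 + 3)
p_again = laplace_prob("green")   # served from the cache, body is not re-run
p_unseen = laplace_prob("white")  # never observed in this class: (0 + 1) / (5 + 3)

print(p_first, p_again, p_unseen, calls["count"])
```

The value that never occurs in the class still gets a non-zero estimate, so one unseen attribute value does not wipe out the whole product.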

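My numbers came out different from the book's. After checking carefully, I found an error in the book: on p. 152 it gives P(凹陷|是) = 6/8 = 0.750, that is, P(navel = sunken | good melon). According to the original table it should be 5/8 = 0.625. This error makes the book's subsequent numbers somewhat off as well.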
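The count is easy to check by hand. The list below is the 脐部 (navel) column for the eight good melons, transcribed here from the watermelon 3.0 table, so treat it as an assumption and verify it against watermelon_3.csv. The variable names are illustrative only.

```python
from fractions import Fraction

# Assumed transcription of the 脐部 column for melons 1-8 (the good ones).
navel_of_good = ["凹陷", "凹陷", "凹陷", "凹陷", "凹陷", "稍凹", "稍凹", "稍凹"]

sunken = navel_of_good.count("凹陷")

# Plain frequency estimate, as used in the worked example on p. 152.
plain_estimate = Fraction(sunken, len(navel_of_good))

# Laplace-corrected estimate; 脐部 takes three values: 凹陷, 稍凹, 平坦.
n_values = 3
laplace_estimate = Fraction(sunken + 1, len(navel_of_good) + n_values)

print(plain_estimate, float(plain_estimate))
print(laplace_estimate)
```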

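When computing the product I sum logarithms instead. The reason is simple: multiplying many small probabilities can underflow and lose precision, so I won't say more about it here.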
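A small sketch of why the log form matters, using made-up probabilities (400 factors of 0.01 is an exaggeration chosen to force the underflow; it needs Python 3.8+ for `math.prod`):

```python
import math

probs = [0.01] * 400                  # hypothetical conditional probabilities
probs_other = [0.01] * 399 + [0.005]  # a second, slightly less likely class

# Direct products underflow to exactly 0.0, so the two classes tie.
product = math.prod(probs)
product_other = math.prod(probs_other)

# Sums of logs stay finite and still rank the classes correctly.
log_sum = sum(math.log2(p) for p in probs)
log_sum_other = sum(math.log2(p) for p in probs_other)

print(product, product_other)
print(log_sum, log_sum_other)
```

Because the logarithm is monotonic, comparing the sums picks the same class as comparing the products would, if the products could be represented.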

import numpy as np
import pandas as pd

dataset = pd.read_csv('/home/parker/watermelonData/watermelon_3.csv', delimiter=",")
del dataset['编号']  # drop the ID column
print(dataset)

X = dataset.values[:, :-1]  # every column except the label
m, n = np.shape(X)
for i in range(m):
    # the last two features (density, sugar content) are continuous; round to 3 decimals
    X[i, n - 1] = round(X[i, n - 1], 3)
    X[i, n - 2] = round(X[i, n - 2], 3)
y = dataset.values[:, -1]  # labels: '是' (good melon) or '否' (bad melon)

columnName = dataset.columns
colIndex = {}
for i in range(len(columnName)):
    colIndex[columnName[i]] = i

Pmap = {}  # memoizes P to avoid repeated computation
kindsOfAttribute = {}  # used for the Laplacian correction; kindsOfAttribute[0] == 3 because '色泽' has 3 distinct values
for i in range(n):
    kindsOfAttribute[i] = len(set(X[:, i]))
continuousPara = {}  # caches (mean, std) of the continuous attributes to avoid recomputation

goodList = []  # row indices of good melons
badList = []  # row indices of bad melons
for i in range(len(y)):
    if y[i] == '是':
        goodList.append(i)
    else:
        badList.append(i)

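import math

def P(colID, attribute, C):
    """Return P(column colID = attribute | class C), e.g. P(色泽=青绿 | 是)."""
    if (colID, attribute, C) in Pmap:
        return Pmap[(colID, attribute, C)]
    curJudgeList = goodList if C == '是' else badList
    ans = 0
    if colID >= 6:  # density or sugar content, which are continuous (float) attributes
        if (colID, C) in continuousPara:
            mean, std = continuousPara[(colID, C)]
        else:
            # X has dtype object, so cast to float before taking statistics
            curData = X[curJudgeList, colID].astype(float)
            mean = curData.mean()
            # NumPy's default is ddof=0 (population std); the book's figures appear to use ddof=1
            std = curData.std()
            continuousPara[(colID, C)] = (mean, std)
        # Gaussian density with the class-conditional mean and std
        ans = 1 / (math.sqrt(math.pi * 2) * std) * math.exp((-(attribute - mean) ** 2) / (2 * std * std))
    else:
        # discrete attribute: count the matching rows within the class
        for i in curJudgeList:
            if X[i, colID] == attribute:
                ans += 1
        # Laplacian correction: +1 in the numerator, + number of possible values in the denominator
        ans = (ans + 1) / (len(curJudgeList) + kindsOfAttribute[colID])
    Pmap[(colID, attribute, C)] = ans
    return ans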

def predictOne(single):
    # start from the log of the Laplace-corrected class priors
    ansYes = math.log2((len(goodList) + 1) / (len(y) + 2))
    ansNo = math.log2((len(badList) + 1) / (len(y) + 2))
    # add the log conditional probability of every attribute value
    for i in range(len(single)):
        ansYes += math.log2(P(i, single[i], '是'))
        ansNo += math.log2(P(i, single[i], '否'))
    if ansYes > ansNo:
        return '是'
    else:
        return '否'

def predictAll(iX):
    predictY = []
    for i in range(len(iX)):  # iterate over the rows passed in rather than the global m
        predictY.append(predictOne(iX[i]))
    return predictY

predictY=predictAll(X)

print(y)

print(np.array(predictAll(X)))

confusionMatrix=np.zeros((2,2))

for i in range(len(y)):

if predictY[i]==y[i]:

if y[i] == '否':

confusionMatrix[0, 0] += 1

else:

confusionMatrix[1, 1] += 1

else:

if y[i] == '否':

confusionMatrix[0, 1] += 1

else:

confusionMatrix[1, 0] += 1

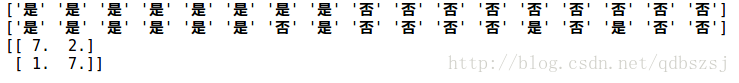

print(confusionMatrix)最后分类结果如下