Word2vec:skip-gram模型+Negative Sampling(负采样)代码实现

算法原理:

算法原理可以参考该链接

超参数

# Training Parameters

learning_rate = 0.1

batch_size = 128

num_steps = 3000000

display_step = 10000

eval_step = 200000

# Evaluation Parameters

valid_size = 20

valid_window = 100

#从词典的前100个词中随机选取20个词来验证模型

eval_words = np.random.choice(valid_window, valid_size, replace=False)

# Word2Vec Parameters

embedding_size = 200

max_vocabulary_size = 50000

min_occurrence = 10 # 词典中词出现的最低次数

skip_window = 3 # 窗口大小

num_skips = 2 # 每个输入中心词在其上下文区间中选取num_skips个词来生成样本

num_sampled = 64 # Number of negative examples

解释:

- 我们模型的验证是:计算于eval_words数组中的词最近似的几个词

词典生成模块

def make_vocabulary(data):

""" data:是一个一维的list,每个元素可以是单个字也可以是切词后的词 data是我们将句子切词后再拼接生成的(如果以字为单位不用切词直接拼接) """

word2count = [('UNK', -1)]

#统计语言库词的次数

word2count.extend(collections.Counter("".join(data)).most_common(max_vocabulary_size - 1))

#去掉出现次数比较少的词

for i in range(len(word2count) - 1, -1, -1):

if word2count[i][1] < min_occurrence:

word2count.pop(i)

else:

break

vocabulary_size = len(word2count)

word2id = dict()

for i, (word, _) in enumerate(word2count):

word2id[word] = i

#将data中的词转化为其对应索引ID

data_id = list()

unk_count = 0

for word in data:

index = word2id.get(word, 0)

if index == 0:

unk_count += 1

data_id.append(index)

word2count[0] = ('UNK', unk_count)#UNK用来代替词典里面有的词

id2word = dict(zip(word2id.values(), word2id.keys()))

return data_id,word2id,id2word,word2count,vocabulary_size

batch生成模块

data_index = 0 #上面模块生成的data_id的跟踪索引

# Generate training batch for the skip-gram model

def next_batch(batch_size, num_skips, skip_window,data):

#data就是上一个模块生成的data_id

global data_index

assert batch_size % num_skips == 0

assert num_skips <= 2 * skip_window

#num_skips是每个中心词生成的样本数所以要求batch_size是它的倍数

# 确定了中心词后其上下文词个数为左skip_window个右 skip_window个

#对每个中心生成的样本的label是从这2 * skip_windo上下文中随机抽取的。

#所以样本数num_skips 要小于窗口大小

batch = np.ndarray(shape=(batch_size), dtype=np.int32)

labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)

# get window size (words left and right + current one)

span = 2 * skip_window + 1 # 7

buffer = collections.deque(maxlen=span) # 一个双向队列

if data_index + span > len(data): # 超出范围重新开始

data_index = 0

buffer.extend(data[data_index:data_index + span])

data_index += span

for i in range(batch_size // num_skips): # 整除向下取整 是每个batch中中心词个数

context_words = [w for w in range(span) if w != skip_window] # [0,1,2,4,5,6]

words_to_use = random.sample(context_words, num_skips) # 从一个list中随机选取batch_size个元素(2)[a,b]

for j, context_word in enumerate(words_to_use):

batch[i * num_skips + j] = buffer[skip_window]

labels[i * num_skips + j, 0] = buffer[context_word]

#向前滑动窗口

if data_index == len(data):#重新开始

buffer.extend(data[0:span])

data_index = span

else:#向前滑动一个

buffer.append(data[data_index])

data_index += 1

# Backtrack a little bit to avoid skipping words in the end of a batch

data_index = (data_index + len(data) - span) % len(data)

return batch, labels

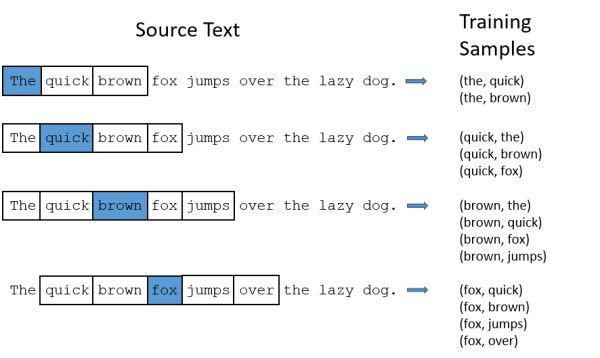

样本生成图:

其中skip_window=2;num_skips=整个窗口大小,和上面代码有一点差异。训练模型模块

def train():

s=time.time()

print("加载数据....")

data=read_file("./序列标注文件.txt") #这个函数需要根据自己的数据重写

print("cost:%s"%str(time.time()-s))

s = time.time()

print("创建字典...")

data_id, word2id, id2word, word2count, vocabulary_size=make_vocabulary(data)

print("cost:%s" % str(time.time() - s))

print("保存词汇表...")

print("词汇表大小: %d"%vocabulary_size)

with codecs.open("./vocabulary_text", "w", "utf8") as fout:

L = sorted(list(word2id.items()), key=lambda x: int(x[1]))

for word,id in L:

fout.write("%s\t%s\n"%(word,str(id)))

# Input data

X = tf.placeholder(tf.int32, shape=[None])

# Input label

Y = tf.placeholder(tf.int32, shape=[None, 1])

# Create the embedding variable (each row represent a word embedding vector)

embedding = tf.Variable(tf.random_normal([vocabulary_size, embedding_size]))

# Lookup the corresponding embedding vectors for each sample in X

X_embed = tf.nn.embedding_lookup(embedding, X)

# Construct the variables for the NCE loss

nce_weights = tf.Variable(tf.random_normal([vocabulary_size, embedding_size]))

nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

#这就是tensorflow中Negative Sampling(负采样)的实现

#可以直接看作一个softmax层的近似

loss_op = tf.reduce_mean(

tf.nn.nce_loss(weights=nce_weights,

biases=nce_biases,

labels=Y,

inputs=X_embed,

num_sampled=num_sampled,

num_classes=vocabulary_size))

# Define the optimizer

optimizer = tf.train.GradientDescentOptimizer(learning_rate)

train_op = optimizer.minimize(loss_op)

# Evaluation

# Compute the cosine similarity between input data embedding and every embedding vectors

X_embed_norm = X_embed / tf.sqrt(tf.reduce_sum(tf.square(X_embed)))

embedding_norm = embedding / tf.sqrt(tf.reduce_sum(tf.square(embedding), 1, keepdims=True))

cosine_sim_op = tf.matmul(X_embed_norm, embedding_norm, transpose_b=True)

# Initialize the variables (i.e. assign their default value)

init = tf.global_variables_initializer()

config = tf.ConfigProto()

config.allow_soft_placement=True

#可以获取到 operations 和 Tensor 被指派到哪个设备(几号CPU或几号GPU)上运行,

# 会在终端打印出各项操作是在哪个设备上运行的。

config.allow_soft_placement=True

#如果将这个选项设置成True,那么当运行设备不满足要求时,会自动分配GPU或者CPU。

config.gpu_options.per_process_gpu_memory_fraction = 0.5

#当使用GPU时,设置GPU内存使用最大比例

with tf.Session(config=config) as sess:

# Run the initializer

sess.run(init)

# Testing data

x_test = eval_words

print(x_test)

average_loss = 0

s=time.time()

for step in xrange(1, num_steps + 1):

# Get a new batch of data

batch_x, batch_y = next_batch(batch_size, num_skips, skip_window,data_id)

# Run training op

_, loss = sess.run([train_op, loss_op], feed_dict={X: batch_x, Y: batch_y})

average_loss += loss

if step % display_step == 0 or step == 1:

if step > 1:

average_loss /= display_step

print("Step " + str(step) + ", Average Loss= " + \

"{:.4f}".format(average_loss)+" cost: %s"%str(time.time()-s))

average_loss = 0

s = time.time()

# Evaluation

if step % eval_step == 0 or step == 1:

print("Evaluation...")

sim = sess.run(cosine_sim_op, feed_dict={X: x_test})

for i in xrange(len(eval_words)):

top_k = 8 # number of nearest neighbors

nearest = (-sim[i, :]).argsort()[1:top_k + 1]

log_str = '"%s" nearest neighbors:' % id2word[eval_words[i]]

for k in xrange(top_k):

log_str = '%s %s,' % (log_str, id2word[nearest[k]])

print(log_str)

print("保存词向量...")

embedding_array=np.array(sess.run(embedding),dtype=np.float32)

np.save("./vocabulary_vec", embedding_array) #二进制保存

np.savetxt("./vocabulary_vec.txt", embedding_array)#文本形式保存

完整代码

完整代码GitHub链接