Logstash: Importing Apache Logs into Elasticsearch

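In the previous article, "Logstash: Hands-on Practice - Loading CSV Documents into Elasticsearch", we described how to load the data of a CSV file into Elasticsearch. Today we describe how to load the contents of an Apache log into Elasticsearch.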

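Before starting this exercise, you should already have installed Logstash by following my earlier article "How to Install Logstash in the Elastic Stack", and you should have your own Elasticsearch and Kibana installed and running. In Elasticsearch deployments, Logstash and Beats are usually used together.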

In today's example, we use Filebeat to read the log and send the data to Logstash. The data is processed by Logstash's filters and finally sent to Elasticsearch for analysis.

Download the sample Apache log

Method 1:

You can download the file from https://github.com/elastic/examples/blob/master/Common%20Data%20Formats/apache_logs/apache_logs and save it in a local directory. In my case, I saved it in a data directory under my home directory.

localhost:data liuxg$ pwd

/Users/liuxg/data

localhost:data liuxg$ ls apache_logs.txt

apache_logs.txt

Method 2:

Alternatively, run one of the following commands in a local directory:

Debian:

wget https://raw.githubusercontent.com/elastic/examples/master/Common%20Data%20Formats/apache_logs/apache_logs

Mac:

curl -L -O https://raw.githubusercontent.com/elastic/examples/master/Common%20Data%20Formats/apache_logs/apache_logs

In my case, I saved the apache_logs file in the data folder under my home directory.

Each record in the log has the following format:

83.149.9.216 - - [17/May/2015:10:05:03 +0000] "GET /presentations/logstash-monitorama-2013/images/kibana-search.png HTTP/1.1" 200 203023 "http://semicomplete.com/presentations/logstash-monitorama-2013/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.77 Safari/537.36"

83.149.9.216 - - [17/May/2015:10:05:43 +0000] "GET /presentations/logstash-monitorama-2013/images/kibana-dashboard3.png HTTP/1.1" 200 171717 "http://semicomplete.com/presentations/logstash-monitorama-2013/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.77 Safari/537.36"

For the example to run correctly, we also need to download a template file named apache_template.json and place it in the same directory as the log file. This file is used in the Logstash configuration shown later. It can be downloaded from:

https://github.com/elastic/examples/blob/master/Common%20Data%20Formats/apache_logs/logstash/apache_template.json

Install Filebeat

In this example, we use Filebeat to read the log and send it to Logstash, which then forwards the events to Elasticsearch.

deb:

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.3.1-amd64.deb

sudo dpkg -i filebeat-7.3.1-amd64.deb

rpm:

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.3.1-x86_64.rpm

sudo rpm -vi filebeat-7.3.1-x86_64.rpm

mac:

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.3.1-darwin-x86_64.tar.gz

tar xzvf filebeat-7.3.1-darwin-x86_64.tar.gz

brew:

brew tap elastic/tap

brew install elastic/tap/filebeat-full

linux:

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.3.1-linux-x86_64.tar.gz

tar xzvf filebeat-7.3.1-linux-x86_64.tar.gz

Filebeat is now installed. Note: because the Elastic Stack is released frequently, replace the version 7.3.1 above with the version you need. Do not run Filebeat yet.

Configure Filebeat

In the Filebeat installation directory, create a file named filebeat_apache.yml with the following content:

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /Users/liuxg/data/apache_logs

output.logstash:
  hosts: ["localhost:5044"]

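Configure Logstash

We create a Logstash configuration file in our data directory. Keeping the file in the data directory rather than under the Logstash installation directory means we can run it with different Logstash versions, and we do not lose it when a Logstash installation directory is deleted. We name the file apache_logstash.conf. Its content is as follows: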

input {
  # Receive events from Beats (here Filebeat) on port 5044
  beats {
    port => "5044"
  }
}

filter {
  # Split each log line into named fields
  grok {
    match => {
      "message" => '%{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:response:int} (?:-|%{NUMBER:bytes:int}) %{QS:referrer} %{QS:agent}'
    }
  }

  # Use the time recorded in the log as @timestamp
  date {
    match => [ "timestamp", "dd/MMM/YYYY:HH:mm:ss Z" ]
    locale => en
  }

  # Look up location information for the client IP
  geoip {
    source => "clientip"
  }

  # Parse the user agent string into a useragent field
  useragent {
    source => "agent"
    target => "useragent"
  }
}

output {
  # Print one dot per event as a progress indicator
  stdout {
    codec => dots {}
  }

  elasticsearch {
    index => "apache_elastic_example"
    template => "/Users/liuxg/data/apache_template.json"
    template_name => "apache_elastic_example"
    template_overwrite => true
  }
}

Notes:

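- The input section uses beats and listens on port 5044. Many Beats publish their data through a port like this. In our example, Filebeat uses this port to ship the collected data to Logstash.

- The filter section. All filters are executed in order, from top to bottom.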

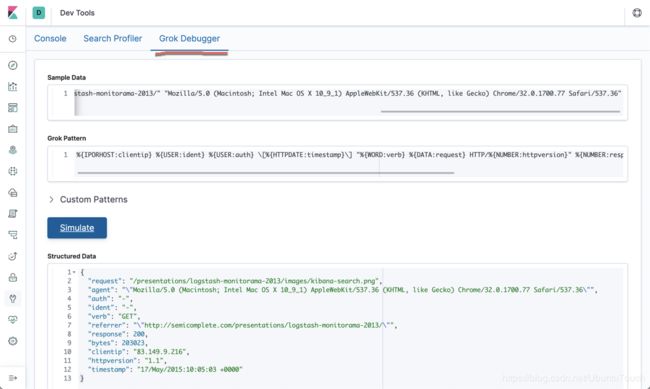

grok

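The grok filter matches each log record against a regular expression and turns the unstructured message into structured fields. More grok patterns can be found at the grok pattern link.

Consider the following record: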

83.149.9.216 - - [17/May/2015:10:05:03 +0000] "GET /presentations/logstash-monitorama-2013/images/kibana-search.png HTTP/1.1" 200 203023 "http://semicomplete.com/presentations/logstash-monitorama-2013/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.77 Safari/537.36"

grok matches each log record and assigns the matched values to the corresponding fields. Filebeat also passes along a number of other fields:

{
    "request" => "/presentations/logstash-monitorama-2013/images/Dreamhost_logo.svg",
    "log" => {
        "file" => {
            "path" => "/Users/liuxg/data/apache_logs"
        },
        "offset" => 3260
    },
    "agent" => {
        "ephemeral_id" => "cb749ecc-852a-420a-b97a-4fe5d1b3ffb9",
        "hostname" => "localhost",
        "id" => "c88813ba-fdea-4a98-a0be-468fb53566f3",
        "type" => "filebeat",
        "version" => "7.3.0"
    },
    "ident" => "-",
    "input" => {
        "type" => "log"
    },
    "message" => "83.149.9.216 - - [17/May/2015:10:05:46 +0000] \"GET /presentations/logstash-monitorama-2013/images/Dreamhost_logo.svg HTTP/1.1\" 200 2126 \"http://semicomplete.com/presentations/logstash-monitorama-2013/\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.77 Safari/537.36\"",
    "ecs" => {
        "version" => "1.0.1"
    },
    "clientip" => "83.149.9.216",
    "bytes" => 2126,
    "verb" => "GET",
    "auth" => "-",
    "@version" => "1",
    "@timestamp" => 2019-09-11T06:31:36.098Z,
    "referrer" => "\"http://semicomplete.com/presentations/logstash-monitorama-2013/\"",
    "host" => {
        "name" => "localhost"
    },
    "timestamp" => "17/May/2015:10:05:46 +0000",
    "httpversion" => "1.1",
    "tags" => [
        [0] "beats_input_codec_plain_applied"
    ],
    "response" => 200
}

If you are not yet familiar with grok patterns, you can test them with the Grok Debugger, which is built into Kibana.

You can also test them at https://grokdebug.herokuapp.com/.
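To get a feel for what the grok pattern does, the same extraction can be approximated outside Logstash with a plain regular expression. The following is a minimal Python sketch, not the grok implementation itself: the regex is a hand-written approximation (real grok patterns such as IPORHOST and HTTPDATE are stricter), and the names APACHE_LINE and parse_line are made up for this illustration.

```python
import re

# Hand-written approximation of the grok pattern used in apache_logstash.conf.
# Assumption: looser than the real IPORHOST/USER/HTTPDATE grok patterns.
APACHE_LINE = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\w+) (?P<request>.*?) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d+) (?:-|(?P<bytes>\d+)) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")'
)


def parse_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    m = APACHE_LINE.match(line)
    if m is None:
        return None
    fields = m.groupdict()
    # Mirror the :int casts in the grok pattern.
    fields["response"] = int(fields["response"])
    if fields["bytes"] is not None:
        fields["bytes"] = int(fields["bytes"])
    return fields


sample = (
    '83.149.9.216 - - [17/May/2015:10:05:03 +0000] '
    '"GET /presentations/logstash-monitorama-2013/images/kibana-search.png HTTP/1.1" '
    '200 203023 "http://semicomplete.com/presentations/logstash-monitorama-2013/" '
    '"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_1) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/32.0.1700.77 Safari/537.36"'
)

event = parse_line(sample)
print(event["clientip"], event["verb"], event["response"], event["bytes"])
```

As with the QS pattern in grok, the referrer and agent values keep their surrounding double quotes in this sketch.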

date filter

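The date filter converts the timestamp field produced by the previous filter into the @timestamp field. This is very important. In the output above there are two time fields: timestamp and @timestamp. There, @timestamp is the time at which the event was processed. We want @timestamp to come from the time recorded in the log, that is, the time held in timestamp.

After the date filter above has run, the fields become: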

{
    "auth" => "-",
    "log" => {
        "file" => {
            "path" => "/Users/liuxg/data/apache_logs"
        },
        "offset" => 983
    },
    "tags" => [
        [0] "beats_input_codec_plain_applied"
    ],
    "timestamp" => "17/May/2015:10:05:12 +0000",
    "request" => "/presentations/logstash-monitorama-2013/plugin/zoom-js/zoom.js",
    "ecs" => {
        "version" => "1.0.1"
    },
    "message" => "83.149.9.216 - - [17/May/2015:10:05:12 +0000] \"GET /presentations/logstash-monitorama-2013/plugin/zoom-js/zoom.js HTTP/1.1\" 200 7697 \"http://semicomplete.com/presentations/logstash-monitorama-2013/\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.77 Safari/537.36\"",
    "bytes" => 7697,
    "response" => 200,
    "verb" => "GET",
    "ident" => "-",
    "input" => {
        "type" => "log"
    },
    "agent" => {
        "hostname" => "localhost",
        "id" => "c88813ba-fdea-4a98-a0be-468fb53566f3",
        "ephemeral_id" => "fa8fe907-c89f-4410-8877-00faebefe76e",
        "type" => "filebeat",
        "version" => "7.3.0"
    },
    "@version" => "1",
    "host" => {
        "name" => "localhost"
    },
    "httpversion" => "1.1",
    "referrer" => "\"http://semicomplete.com/presentations/logstash-monitorama-2013/\"",
    "@timestamp" => 2015-05-17T10:05:12.000Z,
    "clientip" => "83.149.9.216"
}

We can clearly see that @timestamp and timestamp now represent exactly the same time.
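The conversion performed by the date filter can be reproduced for a single value with Python's standard library. This is only an illustrative sketch: the function name apache_time_to_utc is made up here, and the strptime format string is a Python counterpart of the "dd/MMM/YYYY:HH:mm:ss Z" pattern, not the filter's own syntax. It assumes English month abbreviations, which corresponds to locale => en in the configuration.

```python
from datetime import datetime, timezone


def apache_time_to_utc(ts):
    """Parse an Apache access-log time string and return it as a UTC datetime."""
    # %b expects an English month abbreviation under the default C locale.
    parsed = datetime.strptime(ts, "%d/%b/%Y:%H:%M:%S %z")
    return parsed.astimezone(timezone.utc)


utc = apache_time_to_utc("17/May/2015:10:05:12 +0000")
print(utc.strftime("%Y-%m-%dT%H:%M:%S.000Z"))  # prints 2015-05-17T10:05:12.000Z
```

The printed value has the same form as the @timestamp field in the output above.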

geoip filter

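The geoip filter resolves an IP address to a geographic location and adds information such as the city, country, and latitude and longitude. After geoip processing, we see the following: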

{
    "verb" => "GET",
    "httpversion" => "1.1",
    "@version" => "1",
    "host" => {
        "name" => "localhost"
    },
    "message" => "83.149.9.216 - - [17/May/2015:10:05:25 +0000] \"GET /presentations/logstash-monitorama-2013/images/elasticsearch.png HTTP/1.1\" 200 8026 \"http://semicomplete.com/presentations/logstash-monitorama-2013/\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.77 Safari/537.36\"",
    "log" => {
        "file" => {
            "path" => "/Users/liuxg/data/apache_logs"
        },
        "offset" => 4872
    },
    "input" => {
        "type" => "log"
    },
    "request" => "/presentations/logstash-monitorama-2013/images/elasticsearch.png",
    "ident" => "-",
    "@timestamp" => 2015-05-17T10:05:25.000Z,
    "agent" => {
        "id" => "c88813ba-fdea-4a98-a0be-468fb53566f3",
        "ephemeral_id" => "9afaba31-7cd0-4202-9ca8-4501742fc7a3",
        "hostname" => "localhost",
        "type" => "filebeat",
        "version" => "7.3.0"
    },
    "bytes" => 8026,
    "geoip" => {
        "city_name" => "Moscow",
        "location" => {
            "lon" => 37.6172,
            "lat" => 55.7527
        },
        "longitude" => 37.6172,
        "latitude" => 55.7527,
        "postal_code" => "102325",
        "region_name" => "Moscow",
        "country_code3" => "RU",
        "country_name" => "Russia",
        "timezone" => "Europe/Moscow",
        "country_code2" => "RU",
        "region_code" => "MOW",
        "continent_code" => "EU",
        "ip" => "83.149.9.216"
    },
    "auth" => "-",
    "response" => 200,
    "clientip" => "83.149.9.216",
    "tags" => [
        [0] "beats_input_codec_plain_applied"
    ],
    "referrer" => "\"http://semicomplete.com/presentations/logstash-monitorama-2013/\"",
    "ecs" => {
        "version" => "1.0.1"
    },
    "timestamp" => "17/May/2015:10:05:25 +0000"
}

useragent filter

The useragent filter adds information about the user agent, such as family, operating system, version, and device. After the useragent filter, we see the following. Note that in this sample output the agent field holds Filebeat's own metadata, which shares its name with the agent field captured by grok, and no useragent field appears:

{
    "input" => {
        "type" => "log"
    },
    "clientip" => "199.16.156.124",
    "message" => "199.16.156.124 - - [18/May/2015:14:05:13 +0000] \"GET /files/lumberjack/lumberjack-0.3.0.exe HTTP/1.1\" 200 4378624 \"-\" \"Twitterbot/1.0\"",
    "verb" => "GET",
    "ecs" => {
        "version" => "1.0.1"
    },
    "httpversion" => "1.1",
    "response" => 200,
    "timestamp" => "18/May/2015:14:05:13 +0000",
    "referrer" => "\"-\"",
    "@timestamp" => 2015-05-18T14:05:13.000Z,
    "log" => {
        "offset" => 780262,
        "file" => {
            "path" => "/Users/liuxg/data/apache_logs"
        }
    },
    "@version" => "1",
    "geoip" => {
        "timezone" => "America/Chicago",
        "country_code2" => "US",
        "ip" => "199.16.156.124",
        "country_code3" => "US",
        "longitude" => -97.822,
        "country_name" => "United States",
        "latitude" => 37.751,
        "continent_code" => "NA",
        "location" => {
            "lon" => -97.822,
            "lat" => 37.751
        }
    },
    "ident" => "-",
    "tags" => [
        [0] "beats_input_codec_plain_applied"
    ],
    "bytes" => 4378624,
    "host" => {
        "name" => "localhost"
    },
    "agent" => {
        "hostname" => "localhost",
        "id" => "c88813ba-fdea-4a98-a0be-468fb53566f3",
        "ephemeral_id" => "e146fbc1-8073-404e-bc6f-d692cf9303d2",
        "type" => "filebeat",
        "version" => "7.3.0"
    },
    "request" => "/files/lumberjack/lumberjack-0.3.0.exe",
    "auth" => "-"
}

Run Logstash and Filebeat

From the Logstash installation directory, we can run Logstash as follows:

$ ./bin/logstash -f ~/data/apache_logstash.conf

The -f option specifies the Logstash configuration file we defined.
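Before Filebeat is started, it can be useful to confirm that Logstash is already listening on the beats port. The following Python sketch simply attempts a TCP connection. The helper name port_open is made up for this illustration, and it assumes Logstash runs on localhost with the port 5044 configured above.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds, else False."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Assumption: Logstash runs locally with the beats input on port 5044.
    print("Logstash beats input reachable:", port_open("localhost", 5044))
```

A successful connection only shows that something is listening on the port; it does not verify that the listener is Logstash.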

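Next, change to the Filebeat installation directory and run the following command so that Filebeat collects the data and sends it to Logstash:

$ ./filebeat -c filebeat_apache.yml

The -c option specifies the Filebeat configuration file.

If we want to rerun Filebeat and have Logstash process the data again, we can delete the registry directory.

The Filebeat registry stores the state and position information that Filebeat uses to track where it last read. Its location depends on how Filebeat was installed:

- data/registry for .tar.gz and .tgz archive installs

- /var/lib/filebeat/registry for DEB and RPM packages

- c:\ProgramData\filebeat\registry for the Windows zip file

To process the data again, go to the corresponding directory and delete the directory named registry. For a .tar.gz install, we can delete it directly: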

localhost:data liuxg$ pwd

/Users/liuxg/elastic/filebeat-7.3.0-darwin-x86_64/data

localhost:data liuxg$ rm -rf registry/

After the registry directory has been deleted, run the filebeat command again.
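The same reset can be scripted. The sketch below removes the registry directory under a given Filebeat data directory. The function name reset_registry is made up for this illustration, and the path in the usage comment is the one from the author's machine, so adjust it to your own installation. It is assumed here that Filebeat is stopped before the directory is removed.

```python
import shutil
from pathlib import Path


def reset_registry(filebeat_data_dir):
    """Delete <filebeat_data_dir>/registry; return True if it was removed."""
    registry = Path(filebeat_data_dir) / "registry"
    if not registry.is_dir():
        # Nothing to delete, for example on a fresh install.
        return False
    shutil.rmtree(registry)
    return True


# Usage (path taken from the example above, adjust as needed):
# reset_registry("/Users/liuxg/elastic/filebeat-7.3.0-darwin-x86_64/data")
```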

- output

We print dots to the screen to indicate progress, and at the same time send the events to Elasticsearch.

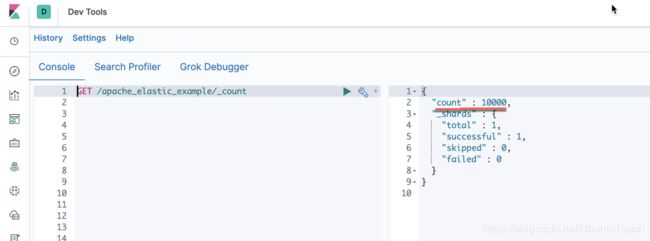

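View the index in Kibana

If everything went well, the apache_logs data has now been imported into Elasticsearch. According to the configuration file above, the index is named apache_elastic_example. We can inspect it in Kibana:

From the display above we can see that there are already 1000 documents. We can also search them:

We can create an index pattern and analyze the data:

Once the data is in Elasticsearch, we can analyze it. A future article will describe how to analyze the data with Kibana.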
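As a quick check outside Kibana, the number of documents in the index can also be read from Elasticsearch's _count API. The following Python sketch uses only the standard library. The helper names are made up for this illustration, and it assumes Elasticsearch is reachable at http://localhost:9200 without authentication, which may not match your setup.

```python
import json
from urllib.request import urlopen


def count_url(index, host="http://localhost:9200"):
    """Build the URL of the _count API for an index."""
    return f"{host}/{index}/_count"


def parse_count(body):
    """Extract the document count from a _count API response body."""
    return json.loads(body)["count"]


def fetch_count(index, host="http://localhost:9200"):
    """Query a running Elasticsearch node and return the document count."""
    with urlopen(count_url(index, host)) as resp:
        return parse_count(resp.read().decode("utf-8"))


# Usage, with Elasticsearch running locally (assumption):
# print(fetch_count("apache_elastic_example"))
```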