随机森林算法实现

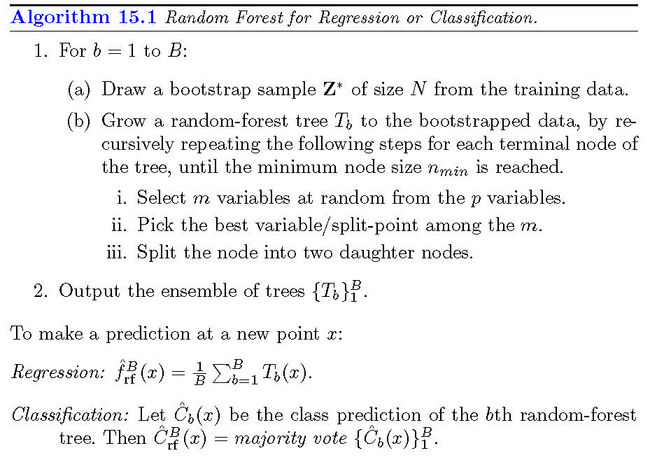

随机森林是一个包含多个决策树的分类器,该分类器的输出结果是由所有决策树输出结果的众数而定,

每个决策树都生长于一个被称为bootstrap样本的训练数据集之上,所谓“bootstrap样本”的构造原理为:

对于容量为n原始训练数据,采取重复抽样的方式抽样n次,形成一组新的训练数据,被称为原始数据

的一个bootstrap样本。在bootstrap样本上构造决策树的原理与传统的构造决策树的方法大致相同,但也

存在一些差别,不同之处在于:在对非叶节点分裂时,需要从所有的m个属性中随机地选取p个属性

(一般建议取p的值为m的平方根),再从这p个属性中选取最佳分裂属性以及相应的最佳分裂阀值,在该

bootstrap样本上最终生成的决策树不需要剪枝。在原始训练数据上生成多个bootstrap样本,利用上述

方法就会得到多颗完全生长的决策树,因为取样过程以及变量选取过程都是随机的,因此被形象地称为

随机森林。

算法的为代码如下:

本文利用Python语言实现随机森林算法,构建的每颗决策树都基于CART算法,所有代码都位于一个文件

randomforests.py中,

from __future__ import division

import numpy as np

import math

class node:

def __init__(self, col=-1, value=None, results=None, trueBranch=None, falseBranch=None):

self.col = col

self.value = value

self.results = results

self.trueBranch = trueBranch

self.falseBranch = falseBranch

def getLabel(self):

if self.results == None:

return None

else:

max_counts = 0

for key in self.results.keys():

if self.results[key] > max_counts:

label = key

max_counts = self.results[key]

return label

class RandomForestsClassifier:

def __init__(self, n_bootstrapSamples=20):

self.n_bootstrapSamples = n_bootstrapSamples

self.list_tree = []

def divideSet(self, samples, column, value):

splitFunction = None

if isinstance(value,int) or isinstance(value,float):

splitFunction = lambda row: row[column] >= value

else:

splitFunction = lambda row: row[column] == value

set1 = [row for row in samples if splitFunction(row)]

set2 = [row for row in samples if not splitFunction(row)]

return (set1,set2)

def uniqueCounts(self, samples):

results = {}

for row in samples:

r = row[len(row)-1]

if r not in results:

results[r] = 0

results[r] = results[r]+1

return results

def giniEstimate(self, samples):

if len(samples)==0: return 0

total = len(samples)

counts = self.uniqueCounts(samples)

gini = 0

for target in counts:

gini = gini + pow(counts[target],2)

gini = 1 - gini / pow(total,2)

return gini

def buildTree(self, samples):#构造CART决策树

if len(samples) == 0:

return node()

currentGini = self.giniEstimate(samples)

bestGain = 0

bestCriteria = None

bestSets = None

colCount = len(samples[0]) - 1

colRange = range(0,colCount)

np.random.shuffle(colRange)

for col in colRange[0:int(math.ceil(math.sqrt(colCount)))]:

colValues = {}

for row in samples:

colValues[row[col]] = 1

for value in colValues.keys():

(set1,set2) = self.divideSet(samples,col,value)

gain = currentGini - (len(set1)*self.giniEstimate(set1) + len(set2)*self.giniEstimate(set2)) / len(samples)

if gain > bestGain and len(set1) > 0 and len(set2) > 0:

bestGain = gain

bestCriteria = (col,value)

bestSets = (set1,set2)

if bestGain > 0:

trueBranch = self.buildTree(bestSets[0])

falseBranch = self.buildTree(bestSets[1])

return node(col=bestCriteria[0],value=bestCriteria[1],trueBranch=trueBranch,falseBranch=falseBranch)

else:

return node(results=self.uniqueCounts(samples))

def printTree(self, tree,indent=' '):#以文本形式显示决策树

if tree.results != None:

print str(tree.results)

else:

print str(tree.col)+':'+str(tree.value)+'?'

print indent+'T->',

self.printTree(tree.trueBranch,indent+' ')

print indent+'F->',

self.printTree(tree.falseBranch,indent+' ')

def predict_tree(self, observation, tree):#利用决策树进行分类

if tree.results != None:

return tree.getLabel()

else:

v = observation[tree.col]

branch = None

if isinstance(v,int) or isinstance(v,float):

if v >= tree.value: branch = tree.trueBranch

else: branch = tree.falseBranch

else:

if v == tree.value: branch = tree.trueBranch

else: branch = tree.falseBranch

return self.predict_tree(observation,branch)

def generateBootstrapSamples(self, data):#构造bootstrap样本

samples = []

for i in range(len(data)):

samples.append(data[np.random.randint(len(data))])

return samples

def fit(self, data):#构造随机森林

for i in range(self.n_bootstrapSamples):

samples = self.generateBootstrapSamples(data)

currentTree = self.buildTree(samples)

self.list_tree.append(currentTree)

def predict_randomForests(self, observation):#利用随机森林对给定观测数据进行分类

results = {}

for i in range(len(self.list_tree)):

currentResult = self.predict_tree(observation, self.list_tree[i])

if currentResult not in results:

results[currentResult] = 0

results[currentResult] = results[currentResult] + 1

max_counts = 0

for key in results.keys():

if results[key] > max_counts:

finalResult = key

max_counts = results[key]

return finalResultfrom sklearn.datasets import load_iris

iris = load_iris()

X = iris.data

y = iris.target

temp_data = np.concatenate([X, y.reshape((150,1))], axis=1)

#由于上述代码要求输入的观测数据存储在二维列表中,需将numpy二维数组转换成列表

data = []

for i in range(temp_data.shape[0]):

temp = []

for j in range(temp_data.shape[1]):

temp.append(temp_data[i][j])

data.append(temp)

rowRange = range(150)

np.random.shuffle(rowRange)

#从鸢尾花数据集(容量为150)按照随机均匀抽样的原则选取70%的数据作为训练数据

training_data = [data[i] for i in rowRange[0:105]]

#按照随机均匀抽样的原则选取30%的数据作为检验数据

testing_data = [data[i] for i in rowRange[105:150]]

classifier = randomforest.RandomForestsClassifier(n_bootstrapSamples=10)#初始化随机森林

classifier.fit(training_data)#利用训练数据进行拟合

finalResults = []

for row in testing_data:

finalResult = classifier3.predict_randomForests(row[0:4])#对检验数据集进行分类

finalResults.append(finalResult)

errorVector = np.zeros((45,1))

errorVector[np.array(finalResults) != (np.array(testing_data))[:,4]] =1

errorVector.sum()/45#计算错判率

实际上如果将随机森林中决策树的个数设置为20(n_bootstrapSamples=10),再重复运行上述代码,会发现错判率

几乎接近0,这些都表明随机森林作为分类器的效果是非常好的。