PyTorch transforms examples


A demonstration of what each transform in torchvision.transforms does, offered as a reference.

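First, prepare the image we will use, together with a helper function show() that applies a transform and plots the results: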

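```python
import matplotlib.pyplot as plt
import torchvision.transforms as tf
from PIL import Image
import PIL
import numpy as np
import torch
import imageio

def show(img, transform=None):
    """Plot img, or the results of applying transform to it."""
    if transform:
        if isinstance(transform, (tf.FiveCrop, tf.TenCrop)):
            # FiveCrop/TenCrop return a tuple of crops: plot them in rows of 5
            t = transform(img)
            for i in range(len(t)):
                plt.subplot(len(t) // 5, 5, 1 + i)  # the row count must be an int
                plt.axis('off')
                plt.imshow(t[i])
        else:
            # random transforms: apply 9 times and plot the outcomes in a 3x3 grid
            for i in range(9):
                plt.subplot(331 + i)
                plt.axis('off')
                t = transform(img)
                plt.imshow(t)
        print(str(transform))
        print(t)  # the last result (the tuple of crops for FiveCrop/TenCrop)
    else:
        plt.imshow(img)
        plt.axis('off')
    plt.show()

# newer imageio versions may warn about imageio.imread; imageio.v2.imread keeps the old behaviour
img = imageio.imread('http://ww1.sinaimg.cn/large/7ec23716gy1g6rx3xt8x1j203d02j3ye.jpg')
img = Image.fromarray(img)  # numpy array -> PIL image
print(img)
show(img)
```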

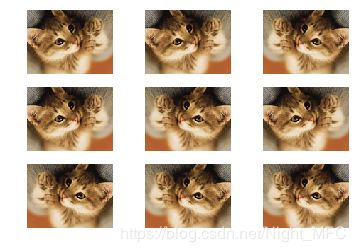

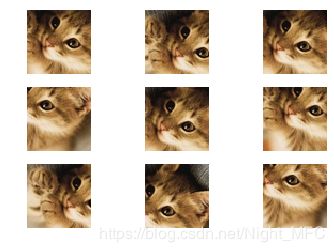

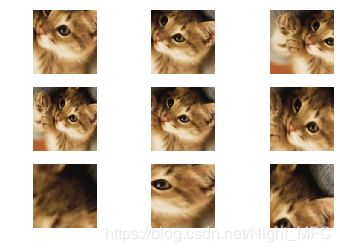

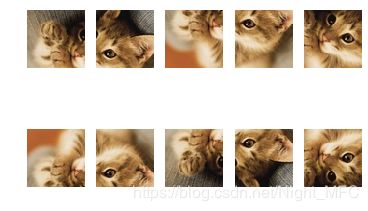

RandomHorizontalFlip, RandomVerticalFlip - flip horizontally or vertically, each with probability p (default 0.5)

```python
# horizontal flip: show() applies it 9 times to display several random outcomes
t = tf.RandomHorizontalFlip()
show(img, t)

# vertical flip
show(img, tf.RandomVerticalFlip())
```

```text
RandomHorizontalFlip(p=0.5)
RandomVerticalFlip(p=0.5)
```
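A flip only reverses one pixel axis. Below is a minimal NumPy sketch of the random version, assuming an H x W (x C) array. random_flip is a hypothetical helper, not a torchvision function, and torchvision draws its random number with torch rather than NumPy:

```python
import numpy as np

def random_flip(arr, p=0.5, axis=1, rng=None):
    """Hypothetical helper: flip an H x W (x C) array with probability p.

    axis=1 reverses the columns (horizontal flip, like RandomHorizontalFlip);
    axis=0 reverses the rows (vertical flip, like RandomVerticalFlip).
    """
    rng = np.random.default_rng() if rng is None else rng
    if rng.random() < p:  # flip when a uniform draw in [0, 1) falls below p
        return np.flip(arr, axis=axis)
    return arr

a = np.array([[1, 2, 3],
              [4, 5, 6]])
h = random_flip(a, p=1.0)          # -> [[3, 2, 1], [6, 5, 4]]
v = random_flip(a, p=1.0, axis=0)  # -> [[4, 5, 6], [1, 2, 3]]
```

With p=0.5, calling it 9 times (as show() does) gives a mix of flipped and unflipped results.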

CenterCrop - center crop: cut out a region of the given size (in pixels), centered on the image center

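```python
show(img, tf.CenterCrop(60))   # smaller than the image: keep the central 60x60 region (no scaling)
show(img, tf.CenterCrop(150))  # larger than the 121x91 image: the image is not shrunk, the border is filled with 0
```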

```text
CenterCrop(size=(60, 60))
CenterCrop(size=(150, 150))
```

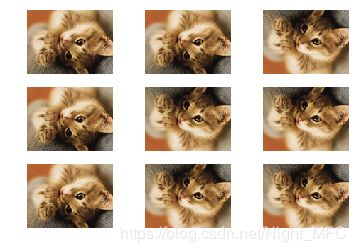

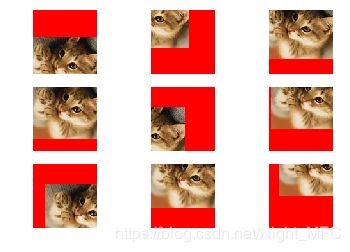

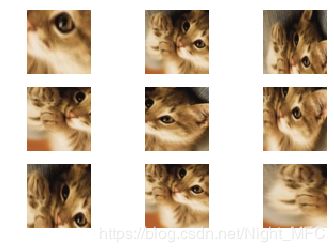

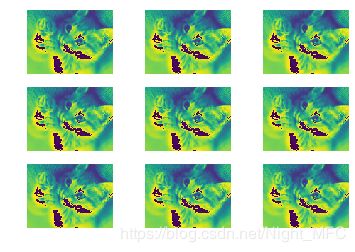
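The crop window is positioned by rounding half the size difference between the image and the crop. Below is a NumPy sketch of that arithmetic, including the zero padding seen with CenterCrop(150). center_crop is a hypothetical helper, and torchvision may split an odd amount of padding slightly differently:

```python
import numpy as np

def center_crop(arr, size):
    """Hypothetical helper: center-crop an H x W (x C) array to size=(crop_h, crop_w)."""
    crop_h, crop_w = size
    h, w = arr.shape[:2]
    # If the crop is larger than the image, zero-pad around it first (image is not scaled)
    pad_h, pad_w = max(crop_h - h, 0), max(crop_w - w, 0)
    if pad_h or pad_w:
        widths = [(pad_h // 2, pad_h - pad_h // 2), (pad_w // 2, pad_w - pad_w // 2)]
        widths += [(0, 0)] * (arr.ndim - 2)  # never pad the channel axis
        arr = np.pad(arr, widths, mode="constant", constant_values=0)
        h, w = arr.shape[:2]
    # top-left corner of the centered window
    top = int(round((h - crop_h) / 2.0))
    left = int(round((w - crop_w) / 2.0))
    return arr[top:top + crop_h, left:left + crop_w]

x = np.arange(16).reshape(4, 4)
middle = center_crop(x, (2, 2))  # -> [[5, 6], [9, 10]]
```

Applied to the 91 x 121 x 3 array behind img, size (60, 60) returns a 60 x 60 x 3 window. Size (150, 150) returns a 150 x 150 x 3 array with the picture in the middle and black borders.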

RandomCrop - crop a region of the given size at a random position

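```python
show(img, tf.RandomCrop(60))
# padding=50: pad every side by 50 px before cropping
# pad_if_needed=True: pad further if the image is still smaller than size (not triggered here: the padded image is 191x221)
show(img, tf.RandomCrop(100, padding=50, pad_if_needed=True))
# fill=255: fill the padding with 255 instead of the default 0
show(img, tf.RandomCrop(100, padding=50, pad_if_needed=True, fill=255))
```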

```text
RandomCrop(size=(60, 60), padding=None)
RandomCrop(size=(100, 100), padding=50)
RandomCrop(size=(100, 100), padding=50)
```

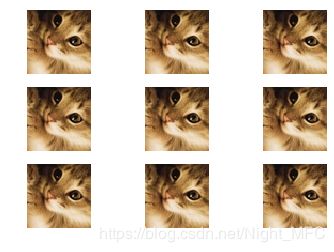

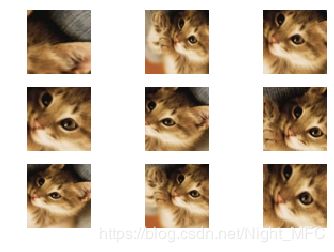

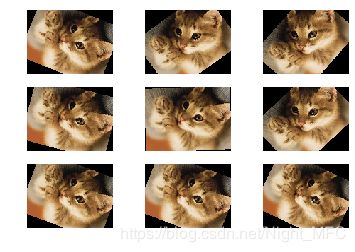
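RandomCrop works in three steps: optional constant padding, optional extra padding up to the crop size, then a uniformly random top-left corner. Below is a NumPy sketch of those steps. random_crop is a hypothetical helper, and torchvision's exact split of the pad_if_needed padding may differ:

```python
import numpy as np

def random_crop(arr, size, padding=0, pad_if_needed=False, fill=0, rng=None):
    """Hypothetical helper: crop an H x W (x C) array to size=(th, tw) at a random position."""
    rng = np.random.default_rng() if rng is None else rng
    th, tw = size
    extra = [(0, 0)] * (arr.ndim - 2)  # never pad the channel axis
    if padding:
        # pad every side by `padding` pixels before cropping
        arr = np.pad(arr, [(padding, padding), (padding, padding)] + extra,
                     mode="constant", constant_values=fill)
    h, w = arr.shape[:2]
    if pad_if_needed and (h < th or w < tw):
        # pad just enough to reach the crop size
        ph, pw = max(th - h, 0), max(tw - w, 0)
        arr = np.pad(arr, [(ph // 2, ph - ph // 2), (pw // 2, pw - pw // 2)] + extra,
                     mode="constant", constant_values=fill)
        h, w = arr.shape[:2]
    if h < th or w < tw:
        raise ValueError("crop size is larger than the (padded) image")
    # every top-left corner that keeps the crop inside the image is equally likely
    top = int(rng.integers(0, h - th + 1))
    left = int(rng.integers(0, w - tw + 1))
    return arr[top:top + th, left:left + tw]

img = np.zeros((91, 121, 3), dtype=np.uint8)
crop = random_crop(img, (100, 100), padding=50, fill=255, rng=np.random.default_rng(0))
```

Because the padded image is 191 x 221, a 100 x 100 window can land partly or entirely in the padding. That is why some results above show a wide border.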

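RandomResizedCrop - crop first, then resize: crop a region of random size and aspect ratio from the image, then resize that crop to the specified size

- size: output size after resizing
- scale: range of the crop's area, as a fraction of the original image's area
- ratio: range of the crop's aspect ratio (width / height)
- interpolation: interpolation mode used for the resize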

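```python
show(img, tf.RandomResizedCrop(60))
show(img, tf.RandomResizedCrop(150))
# area fraction range; draws whose crop does not fit the image are retried (up to 10 times, then a center crop is used)
show(img, tf.RandomResizedCrop(60, scale=(0.5, 2.0)))
# aspect ratio range
show(img, tf.RandomResizedCrop(60, ratio=(1/2, 2/1)))
```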

```text
RandomResizedCrop(size=(60, 60), scale=(0.08, 1.0), ratio=(0.75, 1.3333), interpolation=PIL.Image.BILINEAR)
RandomResizedCrop(size=(150, 150), scale=(0.08, 1.0), ratio=(0.75, 1.3333), interpolation=PIL.Image.BILINEAR)
RandomResizedCrop(size=(60, 60), scale=(0.5, 2.0), ratio=(0.75, 1.3333), interpolation=PIL.Image.BILINEAR)
RandomResizedCrop(size=(60, 60), scale=(0.08, 1.0), ratio=(0.5, 2.0), interpolation=PIL.Image.BILINEAR)
```

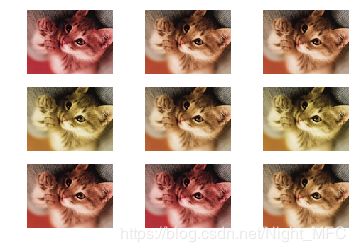
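RandomResizedCrop picks a crop box first and only then resizes. The box sampling can be sketched in plain Python as below. random_resized_crop_box is a hypothetical helper that follows the described behaviour rather than torchvision's exact code:

- the area fraction is drawn from scale
- the aspect ratio is drawn log-uniformly from ratio, as recent torchvision versions do
- it makes up to 10 attempts, then falls back to a center crop

```python
import math
import random

def random_resized_crop_box(height, width, scale=(0.08, 1.0), ratio=(3 / 4, 4 / 3), rng=random):
    """Hypothetical helper: return (top, left, h, w) of a random crop box."""
    area = height * width
    log_lo, log_hi = math.log(ratio[0]), math.log(ratio[1])
    for _ in range(10):
        target_area = area * rng.uniform(scale[0], scale[1])
        aspect = math.exp(rng.uniform(log_lo, log_hi))  # log-uniform aspect ratio
        w = int(round(math.sqrt(target_area * aspect)))
        h = int(round(math.sqrt(target_area / aspect)))
        if 0 < w <= width and 0 < h <= height:  # box fits: place it uniformly at random
            top = rng.randint(0, height - h)
            left = rng.randint(0, width - w)
            return top, left, h, w
    # fallback: central crop with the aspect ratio clamped into `ratio`
    in_ratio = width / height
    if in_ratio < min(ratio):
        w = width
        h = int(round(w / min(ratio)))
    elif in_ratio > max(ratio):
        h = height
        w = int(round(h * max(ratio)))
    else:
        w, h = width, height
    return (height - h) // 2, (width - w) // 2, h, w

top, left, h, w = random_resized_crop_box(91, 121, scale=(0.5, 2.0), rng=random.Random(0))
```

To finish the transform, crop the PIL image with img.crop((left, top, left + w, top + h)) and resize it to size using the chosen interpolation.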

ColorJitter - randomly change brightness, contrast, saturation and hue (hue being the H of the HSV color space)

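```python
show(img, tf.ColorJitter(brightness=0.5))  # brightness factor drawn from [0.5, 1.5]
show(img, tf.ColorJitter(contrast=0.5))    # contrast factor drawn from [0.5, 1.5]
show(img, tf.ColorJitter(saturation=0.5))  # saturation factor drawn from [0.5, 1.5]
# hue shift drawn from [-0.1, 0.1]; it must lie within [-0.5, 0.5],
# i.e. at most half a turn (180 degrees) of the hue wheel in either direction
show(img, tf.ColorJitter(hue=0.1))
```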

```text
ColorJitter(brightness=[0.5, 1.5], contrast=None, saturation=None, hue=None)
ColorJitter(brightness=None, contrast=[0.5, 1.5], saturation=None, hue=None)
ColorJitter(brightness=None, contrast=None, saturation=[0.5, 1.5], hue=None)
ColorJitter(brightness=None, contrast=None, saturation=None, hue=[-0.1, 0.1])
```

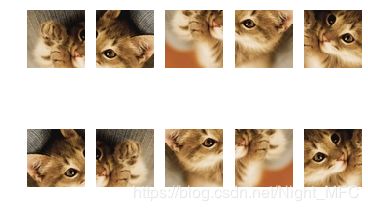
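ColorJitter turns each argument into a factor range and then blends the image with a reference:

- brightness blends with black
- contrast blends with the mean gray level
- saturation blends with the grayscale version
- hue is a rotation in HSV space

Below is a NumPy/colorsys sketch of those ideas on float RGB arrays in [0, 1]. All helper names are hypothetical. It simplifies torchvision: for example, torchvision raises an error for a hue outside [-0.5, 0.5] instead of clipping, and it shifts the hue of whole images rather than single pixels.

```python
import colorsys
import numpy as np

def jitter_range(value, center=1.0, bound=(0.0, float("inf"))):
    """Hypothetical helper: a single number v becomes [center - v, center + v], clipped to bound."""
    return max(center - value, bound[0]), min(center + value, bound[1])

def to_gray(rgb):
    # ITU-R 601-2 luma weights (the ones PIL uses for mode "L")
    return rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114

def blend(img, ref, factor):
    # factor 1 keeps img, factor 0 gives ref; results are clipped to [0, 1]
    return np.clip(factor * img + (1.0 - factor) * ref, 0.0, 1.0)

def adjust_brightness(rgb, f):
    return blend(rgb, np.zeros_like(rgb), f)

def adjust_contrast(rgb, f):
    return blend(rgb, np.full_like(rgb, to_gray(rgb).mean()), f)

def adjust_saturation(rgb, f):
    return blend(rgb, np.repeat(to_gray(rgb)[..., None], 3, axis=-1), f)

def adjust_hue(pixel, shift):
    # rotate the hue of one RGB pixel; shift = +-0.5 is half a turn (180 degrees)
    h, s, v = colorsys.rgb_to_hsv(*pixel)
    return colorsys.hsv_to_rgb((h + shift) % 1.0, s, v)

lo, hi = jitter_range(0.5)                 # -> (0.5, 1.5), as printed for brightness=0.5
cyan = adjust_hue((1.0, 0.0, 0.0), 0.5)   # pure red shifted by half a turn -> (0.0, 1.0, 1.0)
```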

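FiveCrop, TenCrop - FiveCrop cuts 5 crops from one image: the four corners and the center. TenCrop returns those 5 plus the same 5 taken from a flipped copy (horizontal flip by default, vertical with vertical_flip=True). If the crops of each image are stacked into one tensor, a batch changes shape as follows:

- input (batch_size, channel, height, width)
- output (batch_size, ncrops, channel, height, width)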

```python
show(img, tf.FiveCrop(60))
# show(img, tf.FiveCrop(121))  # raises an error: 121 exceeds the image height of 91
```

```text
FiveCrop(size=(60, 60))
```

The second printed line is the tuple of five PIL images returned by FiveCrop.

```python
show(img, tf.TenCrop(60))
show(img, tf.TenCrop(60, vertical_flip=True))  # flip the copy vertically instead of horizontally
```

```text
TenCrop(size=(60, 60), vertical_flip=False)
TenCrop(size=(60, 60), vertical_flip=True)
```

Each of these lines is followed by the printed tuple of ten PIL images.

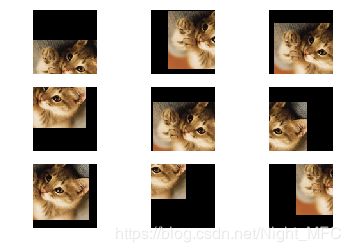

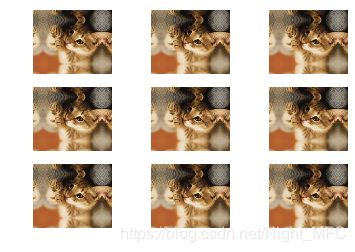

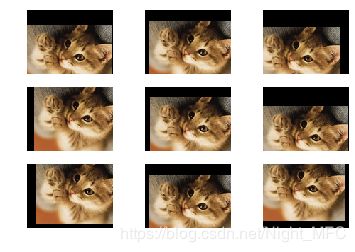
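The crop positions are fixed: four corners plus the center. Below is a NumPy sketch of FiveCrop/TenCrop on a C x H x W array, followed by the batch shape bookkeeping described above.

The torchvision documentation suggests the same idea with tf.Lambda and torch.stack: feed input.view(-1, c, h, w) to the model, then average the results over the crops. five_crop, ten_crop and the mean-based "model" below are hypothetical stand-ins.

```python
import numpy as np

def five_crop(chw, size):
    """Hypothetical helper: corner and center crops of a C x H x W array.

    Order: top-left, top-right, bottom-left, bottom-right, center.
    """
    ch, cw = size
    _, h, w = chw.shape
    if ch > h or cw > w:
        raise ValueError("crop size is bigger than the image")
    top_c = int(round((h - ch) / 2.0))
    left_c = int(round((w - cw) / 2.0))
    corners = [(0, 0), (0, w - cw), (h - ch, 0), (h - ch, w - cw), (top_c, left_c)]
    return [chw[:, t:t + ch, l:l + cw] for t, l in corners]

def ten_crop(chw, size, vertical_flip=False):
    """Five crops of the image plus five crops of a flipped copy."""
    flip_axis = 1 if vertical_flip else 2  # axis 1 = rows (vertical), axis 2 = columns (horizontal)
    return five_crop(chw, size) + five_crop(np.flip(chw, axis=flip_axis), size)

# A hypothetical batch of two 3 x 91 x 121 images
images = [np.random.rand(3, 91, 121) for _ in range(2)]
batch = np.stack([np.stack(five_crop(im, (60, 60))) for im in images])
bs, ncrops, c, h, w = batch.shape                    # (2, 5, 3, 60, 60)
flat = batch.reshape(-1, c, h, w)                    # (10, 3, 60, 60): what a model would receive
scores = flat.mean(axis=(1, 2, 3))                   # stand-in for a model: one score per crop
per_image = scores.reshape(bs, ncrops).mean(axis=1)  # average over the crops of each image
```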

Pad - pad the image borders

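```python
show(img, tf.Pad(20))                          # constant: fill the border with `fill` (default 0)
show(img, tf.Pad(20, padding_mode='edge'))     # edge: repeat the outermost pixels
show(img, tf.Pad(20, padding_mode='reflect'))  # reflect: mirror the image without repeating the edge pixel
```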

```text
Pad(padding=20, fill=0, padding_mode=constant)
Pad(padding=20, fill=0, padding_mode=edge)
Pad(padding=20, fill=0, padding_mode=reflect)
```

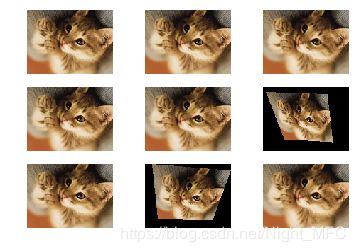
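The padding modes are easiest to compare on a 1-D row. np.pad accepts modes with the same names as Pad's padding_mode: constant, edge, reflect and symmetric (symmetric is not used above). The hypothetical pad_image helper pads an H x W x C array on every side:

```python
import numpy as np

row = np.array([1, 2, 3])
print(np.pad(row, 2, mode="constant"))   # [0 0 1 2 3 0 0]  fill value (0 here)
print(np.pad(row, 2, mode="edge"))       # [1 1 1 2 3 3 3]  repeat the border pixel
print(np.pad(row, 2, mode="reflect"))    # [3 2 1 2 3 2 1]  mirror, border pixel not repeated
print(np.pad(row, 2, mode="symmetric"))  # [2 1 1 2 3 3 2]  mirror, border pixel repeated

def pad_image(hwc, padding, fill=0, padding_mode="constant"):
    """Hypothetical helper: pad an H x W x C array by `padding` pixels on every side."""
    widths = [(padding, padding), (padding, padding), (0, 0)]  # leave the channel axis alone
    if padding_mode == "constant":
        return np.pad(hwc, widths, mode="constant", constant_values=fill)
    return np.pad(hwc, widths, mode=padding_mode)
```

For the 121 x 91 image, Pad(20) therefore yields a 161 x 131 result.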

RandomPerspective - random perspective transform

```python
show(img, tf.RandomPerspective())
```

```text
C:\ProgramData\Anaconda3\lib\site-packages\torchvision\transforms\functional.py:440: UserWarning: torch.gels is deprecated in favour of torch.lstsq and will be removed in the next release. Please use torch.lstsq instead.
  res = torch.gels(B, A)[0]
RandomPerspective(p=0.5)
```

The UserWarning is only a deprecation notice from the PyTorch/torchvision versions used here and does not affect the result.

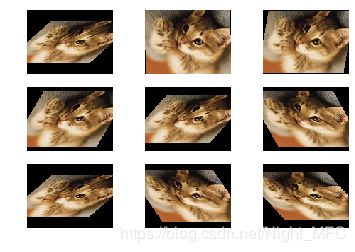
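The torch.gels warning in this section comes from a linear solve. That solve turns four point correspondences into the eight coefficients of a perspective transform. PIL's Image.transform with Image.PERSPECTIVE takes coefficients of this form.

Below is a NumPy sketch of that system with hypothetical helper names. It uses np.linalg.solve because the 8 x 8 system is square, rather than a least-squares routine:

```python
import numpy as np

def perspective_coeffs(src, dst):
    """Hypothetical helper: coefficients (a, b, c, d, e, f, g, h) such that
    u = (a*x + b*y + c) / (g*x + h*y + 1),  v = (d*x + e*y + f) / (g*x + h*y + 1)
    maps each src point (x, y) onto the matching dst point (u, v)."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # multiply out the denominators: two linear equations per point pair
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.extend([u, v])
    return np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))

def apply_perspective(coeffs, x, y):
    a, b, c, d, e, f, g, h = coeffs
    den = g * x + h * y + 1
    return (a * x + b * y + c) / den, (d * x + e * y + f) / den

src = [(0, 0), (10, 0), (10, 10), (0, 10)]  # corners of a square
dst = [(1, 2), (9, 0), (10, 11), (0, 9)]    # hypothetical randomly displaced corners
coeffs = perspective_coeffs(src, dst)
```

Mapping src onto itself gives the identity coefficients (1, 0, 0, 0, 1, 0, 0, 0).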

RandomAffine - random affine transform: rotation, translation, scaling and shear

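```python
show(img, tf.RandomAffine(50))                       # rotation angle drawn from [-50, 50] degrees
show(img, tf.RandomAffine(0, translate=(0.1, 0.3)))  # shift up to 10% of the width horizontally, 30% of the height vertically
show(img, tf.RandomAffine(0, scale=(0.5, 1)))        # scale factor drawn from [0.5, 1]
show(img, tf.RandomAffine(0, shear=50))              # shear angle drawn from [-50, 50] degrees
# bilinear resampling; newer torchvision versions use interpolation=tf.InterpolationMode.BILINEAR instead of resample
show(img, tf.RandomAffine(50, resample=PIL.Image.BILINEAR))
```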

```text
RandomAffine(degrees=(-50, 50))
RandomAffine(degrees=(0, 0), translate=(0.1, 0.3))
RandomAffine(degrees=(0, 0), scale=(0.5, 1))
RandomAffine(degrees=(0, 0), shear=(-50, 50))
RandomAffine(degrees=(-50, 50), resample=PIL.Image.BILINEAR)
```

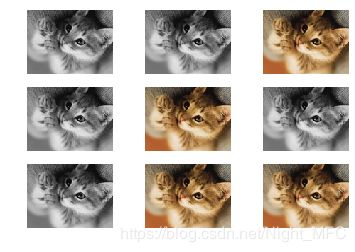
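RandomAffine first samples its parameters, then applies one affine map about the image center. Below is a simplified plain-Python sketch with hypothetical helpers:

- parameter sampling follows the ranges printed above
- the point mapping covers rotation, scaling and translation only (shear is sampled but not applied)
- it uses the standard math orientation (x right, y up), which may not match torchvision's sign conventions for image coordinates

```python
import math
import random

def sample_affine_params(degrees, translate=None, scale=None, shear=None,
                         img_size=(121, 91), rng=random):
    """Hypothetical helper mirroring the ranges RandomAffine prints.

    img_size is (width, height). Returns (angle, (tx, ty), scale, shear)."""
    angle = rng.uniform(-degrees, degrees)
    tx = ty = 0
    if translate is not None:
        max_dx = translate[0] * img_size[0]  # fraction of the width
        max_dy = translate[1] * img_size[1]  # fraction of the height
        tx = int(round(rng.uniform(-max_dx, max_dx)))
        ty = int(round(rng.uniform(-max_dy, max_dy)))
    s = rng.uniform(scale[0], scale[1]) if scale is not None else 1.0
    sh = rng.uniform(-shear, shear) if shear is not None else 0.0
    return angle, (tx, ty), s, sh

def affine_point(x, y, angle, translate, scale, center):
    """Rotate (counter-clockwise, x right / y up) and scale (x, y) about center, then translate.

    Shear is left out to keep the sketch short."""
    a = math.radians(angle)
    cx, cy = center
    dx, dy = x - cx, y - cy
    xr = scale * (dx * math.cos(a) - dy * math.sin(a))
    yr = scale * (dx * math.sin(a) + dy * math.cos(a))
    return xr + cx + translate[0], yr + cy + translate[1]

params = sample_affine_params(0, translate=(0.1, 0.3), rng=random.Random(0))
```

With translate=(0.1, 0.3) on the 121 x 91 image, the shift is at most 12 pixels horizontally and 27 pixels vertically.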

RandomGrayscale - randomly convert the image to grayscale

```python
show(img, tf.RandomGrayscale(0.5))  # convert to grayscale with probability 0.5
```

```text
RandomGrayscale(p=0.5)
```

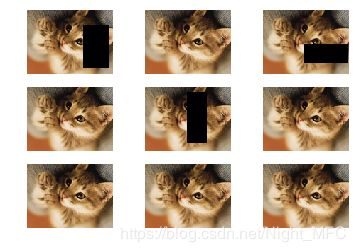
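RandomGrayscale (default p=0.1) keeps the number of channels, so an RGB image stays 3-channel, with three identical channels. Below is a NumPy sketch with a hypothetical helper. It uses the ITU-R 601-2 luma weights that PIL applies for its "L" conversion:

```python
import numpy as np

def random_grayscale(rgb, p=0.1, rng=None):
    """Hypothetical helper: with probability p, replace an H x W x 3 float image
    by its luma, copied into all 3 channels."""
    rng = np.random.default_rng() if rng is None else rng
    if rng.random() < p:
        gray = rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114
        return np.repeat(gray[..., None], 3, axis=-1)  # keep 3 channels
    return rgb

x = np.random.default_rng(0).random((4, 5, 3))
g = random_grayscale(x, p=1.0)  # always converted: the three channels are equal
```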

RandomErasing - randomly erase a rectangular region

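```python
# RandomErasing works on tensors rather than PIL images,
# so it has to be combined with ToTensor before it and ToPILImage after it
t = tf.Compose([tf.ToTensor(), tf.RandomErasing(), tf.ToPILImage()])
show(img, t)
```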

```text
Compose(
    ToTensor()
    ToPILImage()
)
```
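Below is a NumPy sketch of what RandomErasing does to a C x H x W tensor, using torchvision's default parameters: p=0.5, scale=(0.02, 0.33), ratio=(0.3, 3.3), value=0. random_erasing is a hypothetical helper. Its sampling follows recent torchvision versions only approximately: the area fraction is uniform in scale, the aspect ratio is log-uniform in ratio, and it makes up to 10 attempts.

```python
import math
import numpy as np

def random_erasing(chw, p=0.5, scale=(0.02, 0.33), ratio=(0.3, 3.3), value=0.0, rng=None):
    """Hypothetical helper: erase one random rectangle of a C x H x W array with probability p."""
    rng = np.random.default_rng() if rng is None else rng
    if rng.random() >= p:
        return chw  # not erased this time
    _, h, w = chw.shape
    area = h * w
    log_lo, log_hi = math.log(ratio[0]), math.log(ratio[1])
    for _ in range(10):  # retry when the sampled box does not fit
        erase_area = area * rng.uniform(scale[0], scale[1])
        aspect = math.exp(rng.uniform(log_lo, log_hi))  # log-uniform aspect ratio
        eh = int(round(math.sqrt(erase_area * aspect)))
        ew = int(round(math.sqrt(erase_area / aspect)))
        if not (eh < h and ew < w):
            continue
        top = int(rng.integers(0, h - eh + 1))
        left = int(rng.integers(0, w - ew + 1))
        out = chw.copy()
        out[:, top:top + eh, left:left + ew] = value  # same box in every channel
        return out
    return chw  # no box fitted: leave the image unchanged

x = np.ones((3, 100, 100))
erased = random_erasing(x, p=1.0, scale=(0.02, 0.1), rng=np.random.default_rng(0))
```

Working on a C x H x W array is also why the pipeline above needs ToTensor before RandomErasing.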

Lambda - use a custom function as a transform

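```python
def foo(x):
    # x is a C x H x W float tensor in [0, 1]: keep channel 0 and brighten it by 0.1
    r = x[0, ...] + 0.1
    print(x.shape, x.max(), x.min(), r.shape, r.max(), r.min())
    # values can now exceed 1.0, which may overflow when ToPILImage converts to 8 bits;
    # r.clamp(0, 1) would avoid that
    return r

t = tf.Compose([tf.ToTensor(), tf.Lambda(foo), tf.ToPILImage()])
show(img, t)
```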

```text
torch.Size([3, 91, 121]) tensor(0.9686) tensor(0.) torch.Size([91, 121]) tensor(1.0686) tensor(0.1275)
```

This line is printed 9 times, once for each of the 9 calls show() makes to the transform.

```text
Compose(
    ToTensor()
    Lambda()
    ToPILImage()
)
```
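Compose calls each transform in order, and Lambda wraps a plain function so it can sit in that chain. Below is a NumPy sketch of both ideas, with hypothetical names.

Channel 0 plus 0.1 can exceed 1.0 (the maximum printed above is 1.0686). So this version clips to [0, 1] before scaling by 255 and truncating to 8 bits, similar to what ToPILImage does with float tensors:

```python
import numpy as np

def compose(*fns):
    """Hypothetical stand-in for tf.Compose: call each function on the result of the previous one."""
    def run(x):
        for fn in fns:
            x = fn(x)
        return x
    return run

def brighten_first_channel(chw):
    # like foo above, but clipped so the result stays a valid [0, 1] image
    return np.clip(chw[0, ...] + 0.1, 0.0, 1.0)

def to_uint8(gray):
    # scale [0, 1] floats to 0..255 and truncate to 8-bit integers
    return (gray * 255).astype(np.uint8)

pipeline = compose(brighten_first_channel, to_uint8)
out = pipeline(np.random.default_rng(0).random((3, 91, 121)))  # a 91 x 121 uint8 array
```

Clipping trades the brightest detail for a guaranteed valid image. Without it, pixels above 1.0 would not fit in 8 bits.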