Convolutional Neural Network Visualization: Grad CAM in Python

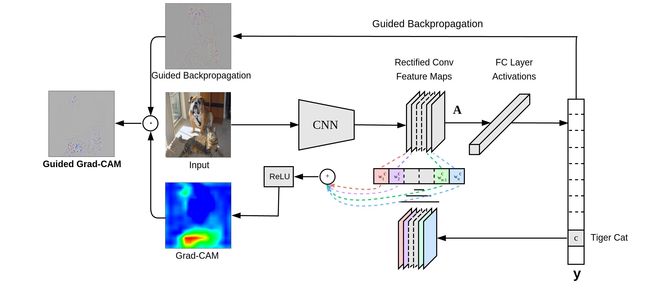

Grad CAM

This implementation mainly follows this blog post.

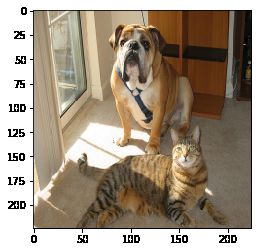

Preparing the input image for the network

```python
# Prepare the data
from PIL import Image
from torchvision import transforms
import matplotlib.pyplot as plt
import numpy as np
%matplotlib inline

image_path = 'cat_dog.png'
# PIL image; convert to RGB in case the PNG carries an alpha channel
image_as_pil = Image.open(image_path).convert('RGB')

# Show the original image
print('Original image:')
plt.imshow(image_as_pil)
plt.show()

# Image transform: resize, convert to a tensor in [0, 1], normalize with ImageNet statistics
transform = transforms.Compose([
    transforms.Resize(size=(224, 224)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])

# Image as tensor, shape (3, 224, 224)
image_as_tensor = transform(image_as_pil)

# Show the transformed image. After Normalize the values fall outside [0, 1],
# so imshow clips them and emits the warning seen in the output.
print('Transformed image:')
plt.imshow(np.transpose(image_as_tensor.data.numpy(), (1, 2, 0)))
plt.show()

# Add a batch dimension: (3, 224, 224) -> (1, 3, 224, 224)
input_image = image_as_tensor.unsqueeze(0)
print('input images shape: {}'.format(input_image.shape))
```

```
Original image:
Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).
Transformed image:
input images shape: torch.Size([1, 3, 224, 224])
```

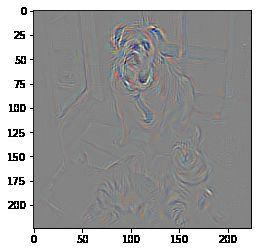
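The clipping warning above appears because `Normalize` maps each channel to `(x - mean) / std`, which leaves values outside [0, 1]. Below is a minimal sketch of undoing that transform before display. It assumes a `(3, H, W)` NumPy array and the same ImageNet mean/std used in `transform`. The helper name `denormalize_for_display` is hypothetical.

```python
import numpy as np

# ImageNet statistics, same values as transforms.Normalize in the preprocessing step
MEAN = np.array([0.485, 0.456, 0.406]).reshape(3, 1, 1)
STD = np.array([0.229, 0.224, 0.225]).reshape(3, 1, 1)


def denormalize_for_display(chw):
    """Invert (x - mean) / std and return an (H, W, 3) array in [0, 1].

    Hypothetical helper, not part of torchvision.
    """
    restored = chw * STD + MEAN               # undo Normalize channel by channel
    restored = np.clip(restored, 0.0, 1.0)    # guard against rounding just outside [0, 1]
    return np.transpose(restored, (1, 2, 0))  # CHW -> HWC for plt.imshow


# Example: a 2x2 image that was mid-gray (0.5) in every channel before Normalize
normalized = (np.full((3, 2, 2), 0.5) - MEAN) / STD
shown = denormalize_for_display(normalized)
print(shown.shape)  # (2, 2, 3)
```

With the tensor from above, this would be called as `plt.imshow(denormalize_for_display(image_as_tensor.numpy()))`.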

Guided Backpropagation Implementation

```python
import torch
import torch.nn as nn


class GuidedPropo():
    def __init__(self, model):
        self.model = model
        self.model.eval()
        self.gradients = None          # gradient w.r.t. the input image: the heat map we output
        self.relu_forward_output = []  # ReLU outputs recorded during the forward pass
        self._hook_layers()

    def _hook_layers(self):
        def relu_forward_hook_function(module, ten_in, ten_out):
            """Save each ReLU layer's output during the forward pass."""
            self.relu_forward_output.append(ten_out)

        def relu_backward_hook_function(module, grad_in, grad_out):
            last_relu_output = self.relu_forward_output[-1]
            # Mask from the forward pass: 1 where the ReLU output was > 0, otherwise 0.
            # In the backward pass it is applied to the incoming gradient, as guided backpropagation requires.
            mask = (last_relu_output > 0).float()
            # Zero out negative incoming gradients
            modified_grad_in = torch.clamp(grad_in[0], min=0.0)
            # Gradient passed on to the previous layer
            modified_grad_out = modified_grad_in * mask
            # Pop this ReLU's output so the next backward hook (one layer earlier) reads its own output
            del self.relu_forward_output[-1]
            # The return value replaces grad_in, so it must also be a tuple
            return (modified_grad_out,)

        def conv_backward_hook_function(module, grad_in, grad_out):
            """When backprop reaches the first conv layer, the gradient w.r.t. its input
            (the image) is the heat map we want."""
            self.gradients = grad_in[0]

        for index, module in enumerate(list(self.model.features), 1):
            if isinstance(module, nn.Conv2d) and index == 1:  # first convolutional layer
                module.register_backward_hook(conv_backward_hook_function)
            elif isinstance(module, nn.ReLU):
                module.register_forward_hook(relu_forward_hook_function)
                module.register_backward_hook(relu_backward_hook_function)

    def generate_cam(self, input_image, target_class):
        # Forward pass.
        model_out = self.model(input_image)  # shape [1, 1000]
        # Target gradient: one-hot vector selecting the target class.
        one_hot_output = torch.zeros(size=(1, model_out.size(1)), dtype=torch.float32)  # shape [1, 1000]
        one_hot_output[0][target_class] = 1
        # Backward pass.
        model_out.backward(gradient=one_hot_output)
        # self.gradients.shape = [1, 3, 224, 224]
        image_as_array = self.gradients.data.numpy()[0]
        return image_as_array


from torchvision import models
from torch.autograd import Variable

# Pretrained AlexNet (newer torchvision versions prefer weights=models.AlexNet_Weights.IMAGENET1K_V1)
model = models.alexnet(pretrained=True)
guided_cam = GuidedPropo(model)
input_image_var = Variable(input_image, requires_grad=True)
# Target ImageNet class 243 (bull mastiff)
image_as_array = guided_cam.generate_cam(input_image_var, 243)

# Min-max normalize to [0, 1] for display
image_for_show = (image_as_array - np.min(image_as_array)) / (np.max(image_as_array) - np.min(image_as_array))
plt.imshow(np.transpose(image_for_show, (1, 2, 0)))
plt.show()
```
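The ReLU backward hook above implements the guided backpropagation rule. A gradient passes through a ReLU only where the forward activation was positive *and* the incoming gradient is positive. Below is a NumPy sketch of that rule on plain arrays, contrasted with the ordinary ReLU backward pass. The function names are hypothetical.

```python
import numpy as np


def relu_backward(forward_input, grad_output):
    """Ordinary ReLU backward: keep the gradient where the forward input was > 0."""
    return grad_output * (forward_input > 0)


def guided_relu_backward(forward_input, grad_output):
    """Guided backprop: additionally drop negative gradients (hypothetical helper)."""
    forward_mask = forward_input > 0              # same mask as the forward ReLU
    positive_grad = np.maximum(grad_output, 0.0)  # like torch.clamp(grad, min=0.0)
    return positive_grad * forward_mask


x = np.array([-1.0, 2.0, 3.0, -4.0])  # ReLU inputs in the forward pass
g = np.array([5.0, -6.0, 7.0, 8.0])   # gradient arriving from the layer above
print(relu_backward(x, g))         # values: 0, -6, 7, 0
print(guided_relu_backward(x, g))  # values: 0, 0, 7, 0
```

Inside the PyTorch hook, `grad_in[0]` should already have passed through the ReLU's own backward step. The extra forward mask there therefore acts as a safeguard rather than a second filter.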

Grad CAM Implementation
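For reference, here are the two quantities that `GradCam.generate_cam` computes. $A^k$ is the $k$-th feature map of the target convolutional layer, $y^c$ is the score of class $c$, and $Z$ is the number of spatial positions ($13 \times 13 = 169$ for AlexNet's last convolutional layer):

$$
\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A_{ij}^k},
\qquad
L^c_{\text{Grad-CAM}} = \mathrm{ReLU}\Big(\sum_k \alpha_k^c A^k\Big)
$$

In the code, `weights = np.mean(guided_gradients, axis=(1, 2))` corresponds to $\alpha_k^c$. The loop accumulates the weighted sum, and `np.maximum(cam, 0)` is the ReLU.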

```python
# Extract the output of the target (convolutional) layer, the model output,
# and the gradient at the target layer during backpropagation
class GradExtractor():
    def __init__(self, model, target_layer):
        self.model = model.eval()
        self.target_layer = target_layer  # 1-based index into model.features
        self.gradients = None

    def _save_grad(self, grad):
        self.gradients = grad

    def _forward_pass_on_convolution_layer(self, x):
        conv_output = None
        for index, module in enumerate(self.model.features, 1):
            x = module(x)
            if index == self.target_layer:
                # The function registered with register_hook is called during backpropagation
                x.register_hook(self._save_grad)
                conv_output = x
        return conv_output, x  # output of the target conv layer and of model.features

    def forward_pass(self, x):
        conv_output, output = self._forward_pass_on_convolution_layer(x)
        output = self.model.avgpool(output)       # identity for 224x224 inputs, kept for other sizes
        output = output.view(output.size(0), -1)  # Flatten
        model_output = self.model.classifier(output)
        return model_output, conv_output


# Load a fresh AlexNet: the guided-backprop hooks registered on `model` above
# would otherwise clamp the gradients that Grad CAM needs.
model = models.alexnet(pretrained=True)
# Index 11 of alexnet.features is its last Conv2d layer
grad_extractor = GradExtractor(model, 11)
input_image_var = Variable(input_image, requires_grad=True)
model_output, conv_output = grad_extractor.forward_pass(input_image_var)
# One-hot target: zeros everywhere except the target class
one_hot_output = torch.zeros(size=(1, model_output.size(1)), dtype=torch.float32)
one_hot_output[0][243] = 1.
model_output.backward(gradient=one_hot_output)
conv_grad = grad_extractor.gradients
print(model_output.shape, conv_output.shape, conv_grad.shape)
```

```
torch.Size([1, 1000]) torch.Size([1, 256, 13, 13]) torch.Size([1, 256, 13, 13])
```
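`model_output.backward(gradient=one_hot_output)` computes a vector-Jacobian product. Seeding it with a one-hot vector for class $c$ therefore yields the gradient of the single score $y_c$, so the seed should be zeros everywhere except the target index. A `torch.ones` seed would instead back-propagate the sum of all 1000 scores. Below is a NumPy sketch for a toy linear model $y = Wx$. The matrix `W` is made up for illustration.

```python
import numpy as np

# Toy "model": 4 input features -> 3 class scores, y = W @ x
W = np.array([[1.0, 2.0, 3.0, 4.0],
              [5.0, 6.0, 7.0, 8.0],
              [9.0, 10.0, 11.0, 12.0]])


def vjp(seed):
    """For y = W @ x the Jacobian dy/dx is W, so backward(gradient=seed) gives seed @ W."""
    return seed @ W


one_hot = np.zeros(3)
one_hot[1] = 1.0       # target class c = 1
all_ones = np.ones(3)  # what a torch.ones(...) seed would correspond to

print(vjp(one_hot))   # gradient of y_1 only: [5. 6. 7. 8.]
print(vjp(all_ones))  # gradient of y_0 + y_1 + y_2: [15. 18. 21. 24.]
```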

```python
# Grad CAM implementation
class GradCam():
    def __init__(self, model, target_layer):
        self.model = model.eval()
        self.extractor = GradExtractor(model, target_layer)

    def generate_cam(self, input_image, target_class=None):
        model_output, conv_output = self.extractor.forward_pass(input_image)
        if target_class is None:
            # Default to the predicted class
            target_class = int(torch.argmax(model_output, dim=1).item())
        # Target for backprop
        one_hot_output = torch.zeros(size=(1, model_output.size(1)))
        one_hot_output[0][target_class] = 1.
        # Zero grad.
        self.model.zero_grad()
        # Backward pass with specified target
        model_output.backward(gradient=one_hot_output, retain_graph=True)
        # Get hooked gradient (plain gradients at the target layer, despite the variable name)
        guided_gradients = self.extractor.gradients.data.numpy()[0]
        # Get convolution outputs
        target = conv_output.data.numpy()[0]  # output of the target conv layer, shape (256, 13, 13)
        # Get weights from gradients: the mean of each channel's gradient is its weight
        weights = np.mean(guided_gradients, axis=(1, 2))
        # Start the CAM from zeros
        cam = np.zeros(target.shape[1:], dtype=np.float32)
        # Multiply each weight with its conv output and then, sum
        for i, w in enumerate(weights):
            cam += w * target[i, :, :]
        cam = np.maximum(cam, 0)  # equivalent to ReLU: negative values become 0
        cam = (cam - np.min(cam)) / (np.max(cam) - np.min(cam))  # Normalize between 0-1
        cam = np.uint8(cam * 255)  # Scale between 0-255 to visualize
        # Upsample to the input image size; PIL's resize takes (width, height)
        cam = np.uint8(Image.fromarray(cam).resize((input_image.shape[3], input_image.shape[2]), Image.BILINEAR))
        return cam
```
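The weighting loop in `generate_cam` can also be written without a Python loop. Below is a minimal NumPy sketch using the same shapes: `activations` stands in for `target` with shape `(K, H, W)`, and `gradients` stands in for the hooked gradient. The weighted sum starts from zero, as in the Grad-CAM formula. The function name `grad_cam_from_arrays` and the random inputs are hypothetical.

```python
import numpy as np


def grad_cam_from_arrays(activations, gradients, eps=1e-8):
    """Return a Grad-CAM map in [0, 1] from (K, H, W) activations and gradients.

    Hypothetical helper: mirrors GradCam.generate_cam before upsampling.
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: spatially averaged gradients, shape (K,)
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A^k, shape (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps only positive evidence
    cam = cam - cam.min()
    return cam / (cam.max() + eps)                    # min-max normalize; eps avoids 0/0


rng = np.random.default_rng(0)
A = rng.random((256, 13, 13))            # fake activations, shaped like AlexNet's last conv layer
G = rng.standard_normal((256, 13, 13))   # fake gradients
print(grad_cam_from_arrays(A, G).shape)  # (13, 13)
```

`np.tensordot(..., axes=1)` contracts the channel axis in one call. This matches the explicit loop and avoids iterating over all 256 channels in Python.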

```python
grad_cam = GradCam(model, 11)
# Use the same target class (243) as the guided backpropagation map
cam = grad_cam.generate_cam(input_image_var, 243)
plt.imshow(cam)
plt.show()

# Guided Grad CAM: scale the CAM to [0, 1] and multiply it with the guided backprop map
cam_gb = np.multiply(cam / 255.0, image_as_array)  # (224, 224) broadcast against (3, 224, 224)
print(cam_gb.shape)
# Min-max normalize to [0, 1] for display
cam_gb = (cam_gb - np.min(cam_gb)) / (np.max(cam_gb) - np.min(cam_gb))
plt.imshow(np.transpose(cam_gb, (1, 2, 0)))
plt.show()
```

```
(3, 224, 224)
```
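The last step is Guided Grad CAM, the element-wise product of the upsampled class map and the guided backpropagation map. `cam` comes back as `uint8` in [0, 255], so it is scaled to [0, 1] first. The product is then min-max normalized to keep it in the range `plt.imshow` expects. Below is a NumPy sketch with random stand-in arrays. The name `guided_grad_cam` is hypothetical.

```python
import numpy as np


def guided_grad_cam(cam_uint8, guided_grads, eps=1e-8):
    """Combine an (H, W) uint8 CAM with a (3, H, W) guided-backprop map.

    Hypothetical helper; returns an (H, W, 3) float array in [0, 1] for plt.imshow.
    """
    cam = cam_uint8.astype(np.float32) / 255.0          # back to [0, 1]
    combined = cam[np.newaxis, :, :] * guided_grads     # broadcast the map over the 3 channels
    combined = combined - combined.min()
    combined = combined / (combined.max() + eps)        # min-max normalize; eps avoids 0/0
    return np.transpose(combined, (1, 2, 0))            # CHW -> HWC


rng = np.random.default_rng(0)
cam_demo = rng.integers(0, 256, size=(224, 224)).astype(np.uint8)
grads_demo = rng.standard_normal((3, 224, 224)).astype(np.float32)
result = guided_grad_cam(cam_demo, grads_demo)
print(result.shape)  # (224, 224, 3)
```

The sketch returns HWC directly, so the result can be passed straight to `plt.imshow` without a separate transpose.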