Spark Storage: Disk Storage

When Spark needs to write data to disk, two classes do most of the work: DiskBlockManager and DiskStore.

DiskBlockManager

Creates and maintains the logical mapping between logical blocks and physical on-disk locations. One block is mapped to one file with a name given by its BlockId. Block files are hashed among the directories listed in spark.local.dir (or in SPARK_LOCAL_DIRS, if it’s set)

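In other words, this class creates and maintains the mapping from logical blocks to physical files on disk. Each block maps to exactly one file, and the file name is derived from the block's BlockId.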

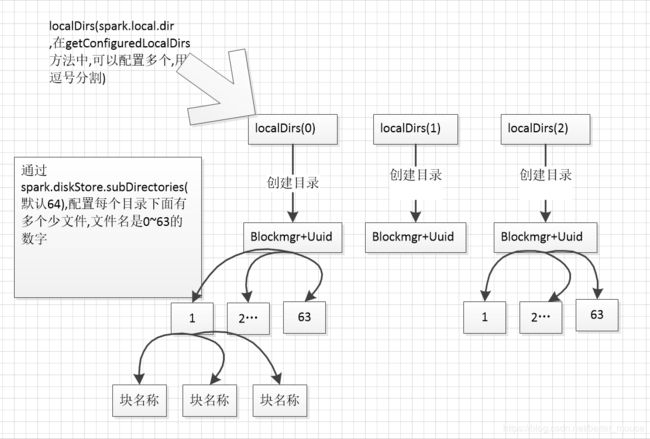

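Given a block, Spark first computes hash(block name) % localDirs.length to pick one of the local root directories, then computes (hash / localDirs.length) % subDirsPerLocalDir to pick the subdirectory inside that root where the block's file will live.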

    /** Looks up a file by hashing it into one of our local subdirectories. */
    // This method should be kept in sync with
    // org.apache.spark.network.shuffle.ExternalShuffleBlockResolver#getFile().
    def getFile(filename: String): File = {
      // Figure out which local directory it hashes to, and which subdirectory in that
      // (the hash is non-negative, so both indices below are valid)
      val hash = Utils.nonNegativeHash(filename)
      val dirId = hash % localDirs.length
      val subDirId = (hash / localDirs.length) % subDirsPerLocalDir

      // Create the subdirectory if it doesn't already exist
      val subDir = subDirs(dirId).synchronized {
        val old = subDirs(dirId)(subDirId)
        if (old != null) {
          old
        } else {
          val newDir = new File(localDirs(dirId), "%02x".format(subDirId))
          if (!newDir.exists() && !newDir.mkdir()) {
            throw new IOException(s"Failed to create local dir in $newDir.")
          }
          subDirs(dirId)(subDirId) = newDir
          newDir
        }
      }

      new File(subDir, filename)
    }

Run one of the test cases:

    test("basic block creation") {
      val blockId = new TestBlockId("test")
      val newFile = diskBlockManager.getFile(blockId)
      writeToFile(newFile, 10)
      assert(diskBlockManager.containsBlock(blockId))
      newFile.delete()
      assert(!diskBlockManager.containsBlock(blockId))
    }

The file ends up at /tmp/spark-22c22eb5-1afd-4245-8af9-2b81c894ecb4/blockmgr-e4bde4de-c8db-437d-90d8-1414876d738c/30/test_test
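The `30` component of that path can be reproduced outside Spark. The following is a minimal standalone sketch, not Spark's own code: `BlockPathSketch` and `locate` are hypothetical names, and `nonNegativeHash` is re-implemented here on the assumption that it returns the absolute value of `hashCode` (with `Int.MinValue` mapped to 0). It also assumes the test suite configures two local directories and uses 64 subdirectories per directory; the post does not state either value.

```scala
// Standalone sketch of the directory selection done by getFile.
object BlockPathSketch {
  // Assumed to match Utils.nonNegativeHash: abs(hashCode), Int.MinValue -> 0.
  def nonNegativeHash(s: String): Int = {
    val h = s.hashCode
    if (h == Int.MinValue) 0 else math.abs(h)
  }

  // Returns (index of the local root directory, two-digit hex subdirectory name).
  def locate(filename: String, numLocalDirs: Int, subDirsPerLocalDir: Int): (Int, String) = {
    val hash = nonNegativeHash(filename)
    val dirId = hash % numLocalDirs
    val subDirId = (hash / numLocalDirs) % subDirsPerLocalDir
    (dirId, "%02x".format(subDirId))
  }
}
```

Under those assumptions, `BlockPathSketch.locate("test_test", 2, 64)` selects the second root directory and the subdirectory named `30`, which matches the path printed by the test.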

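If you come across a directory named like blockmgr-<uuid> on a machine, you know it is a directory Spark uses for disk storage. The main job of DiskBlockManager is to provide path lookup for DiskStore.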

DiskStore

DiskStore relies on DiskBlockManager to resolve the file for each block.

    private[spark] class DiskStore(
        conf: SparkConf,
        diskManager: DiskBlockManager,
        securityManager: SecurityManager)

Its Scaladoc is brief: "Stores BlockManager blocks on disk."

    /**
     * Invokes the provided callback function to write the specific block.
     *
     * @throws IllegalStateException if the block already exists in the disk store.
     */
    def put(blockId: BlockId)(writeFunc: WritableByteChannel => Unit): Unit = {
      if (contains(blockId)) {
        throw new IllegalStateException(s"Block $blockId is already present in the disk store")
      }
      logDebug(s"Attempting to put block $blockId")
      val startTime = System.currentTimeMillis
      val file = diskManager.getFile(blockId)
      val out = new CountingWritableChannel(openForWrite(file))
      var threwException: Boolean = true
      try {
        writeFunc(out)
        // Record the size of the block
        blockSizes.put(blockId, out.getCount)
        threwException = false
      } finally {
        try {
          out.close()
        } catch {
          case ioe: IOException =>
            if (!threwException) {
              threwException = true
              throw ioe
            }
        } finally {
          // Remove the partially written block if anything went wrong
          if (threwException) {
            remove(blockId)
          }
        }
      }
      val finishTime = System.currentTimeMillis
      logDebug("Block %s stored as %s file on disk in %d ms".format(
        file.getName,
        Utils.bytesToString(file.length()),
        finishTime - startTime))
    }

    def putBytes(blockId: BlockId, bytes: ChunkedByteBuffer): Unit = {
      put(blockId) { channel =>
        bytes.writeFully(channel)
      }
    }
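The write path above combines two ideas: a channel wrapper that counts the bytes passing through it, and cleanup of the partially written file if either the write or the close fails. The sketch below illustrates that pattern using only the JDK. It is not Spark's implementation: `DiskPutSketch`, `CountingChannel`, and this `put` are hypothetical names, the sketch writes to a plain `File` instead of a block, and it skips the encryption handling that `openForWrite` may perform.

```scala
import java.io.{File, FileOutputStream, IOException}
import java.nio.ByteBuffer
import java.nio.channels.WritableByteChannel

object DiskPutSketch {
  // Hypothetical stand-in for CountingWritableChannel: counts bytes written through it.
  final class CountingChannel(sink: WritableByteChannel) extends WritableByteChannel {
    private var count = 0L
    def getCount: Long = count
    override def write(src: ByteBuffer): Int = {
      val written = sink.write(src)
      if (written > 0) count += written
      written
    }
    override def isOpen(): Boolean = sink.isOpen()
    override def close(): Unit = sink.close()
  }

  // Writes `file` via `writeFunc` and returns the byte count.
  // The file is deleted if writing or closing fails.
  def put(file: File)(writeFunc: WritableByteChannel => Unit): Long = {
    if (file.exists()) throw new IllegalStateException(s"$file already exists")
    val out = new CountingChannel(new FileOutputStream(file).getChannel)
    var succeeded = false
    try {
      writeFunc(out)
      succeeded = true
      out.getCount
    } finally {
      try {
        out.close()
      } catch {
        // Surface a close failure only if the write itself had succeeded.
        case e: IOException => if (succeeded) { succeeded = false; throw e }
      } finally {
        if (!succeeded) file.delete()
      }
    }
  }
}
```

A caller passes the write logic as a closure, for example `DiskPutSketch.put(file) { ch => ch.write(buffer); () }`, which mirrors how `putBytes` delegates to `put`.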

Let's look at how the test suite exercises it:

    test("reads of memory-mapped and non memory-mapped files are equivalent") {
      val conf = new SparkConf()
      val securityManager = new SecurityManager(conf)

      // It will cause error when we tried to re-open the filestore and the
      // memory-mapped byte buffer to the file has not been GC on Windows.
      assume(!Utils.isWindows)
      val confKey = "spark.storage.memoryMapThreshold"

      // Create a non-trivial (not all zeros) byte array
      val bytes = Array.tabulate[Byte](1000)(_.toByte)
      val byteBuffer = new ChunkedByteBuffer(ByteBuffer.wrap(bytes))

      val blockId = BlockId("rdd_1_2")
      val diskBlockManager = new DiskBlockManager(conf, deleteFilesOnStop = true)

      val diskStoreMapped = new DiskStore(conf.clone().set(confKey, "0"), diskBlockManager,
        securityManager)
      // Write the block's data
      diskStoreMapped.putBytes(blockId, byteBuffer)
      // Read the block's data back
      val mapped = diskStoreMapped.getBytes(blockId).toByteBuffer()
      assert(diskStoreMapped.remove(blockId))

      val diskStoreNotMapped = new DiskStore(conf.clone().set(confKey, "1m"), diskBlockManager,
        securityManager)
      diskStoreNotMapped.putBytes(blockId, byteBuffer)
      val notMapped = diskStoreNotMapped.getBytes(blockId).toByteBuffer()

      // Not possible to do isInstanceOf due to visibility of HeapByteBuffer
      assert(notMapped.getClass.getName.endsWith("HeapByteBuffer"),
        "Expected HeapByteBuffer for un-mapped read")
      assert(mapped.isInstanceOf[MappedByteBuffer],
        "Expected MappedByteBuffer for mapped read")

      def arrayFromByteBuffer(in: ByteBuffer): Array[Byte] = {
        val array = new Array[Byte](in.remaining())
        in.get(array)
        array
      }

      assert(Arrays.equals(new ChunkedByteBuffer(mapped).toArray, bytes))
      assert(Arrays.equals(new ChunkedByteBuffer(notMapped).toArray, bytes))
    }

In short, DiskStore mainly writes the contents of a byte buffer to the file that corresponds to the block's name.
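The test above shows that the value of spark.storage.memoryMapThreshold decides whether a block is read back as a heap buffer or as a memory-mapped buffer. The sketch below shows one way such a switch can be written with plain JDK calls. It is an illustration, not DiskStore's actual read path: `DiskReadSketch` and `read` are hypothetical names, and the rule "files smaller than the threshold go to the heap, everything else is mapped" is an assumption inferred from the test, where a threshold of 0 gives a mapped buffer and a threshold of 1m gives a heap buffer for a 1000-byte block.

```scala
import java.io.File
import java.nio.ByteBuffer
import java.nio.channels.FileChannel
import java.nio.file.StandardOpenOption

object DiskReadSketch {
  // Reads the whole file: into a heap buffer when it is smaller than the
  // threshold, otherwise as a read-only memory-mapped buffer.
  def read(file: File, memoryMapThreshold: Long): ByteBuffer = {
    val channel = FileChannel.open(file.toPath, StandardOpenOption.READ)
    try {
      val size = channel.size()
      if (size < memoryMapThreshold) {
        val buf = ByteBuffer.allocate(size.toInt)
        // Keep reading until the buffer is full or end of file is reached.
        while (buf.hasRemaining() && channel.read(buf) >= 0) {}
        buf.flip()
        buf
      } else {
        // The mapping stays valid after the channel is closed.
        channel.map(FileChannel.MapMode.READ_ONLY, 0, size)
      }
    } finally {
      channel.close()
    }
  }
}
```

Both branches return a `ByteBuffer` positioned at the start of the data, so callers can treat the two cases the same way, which is the equivalence the test checks.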