DPDK Series, Part 13: pktgen and testpmd packet tests in containers over OVS-DPDK dpdkvhostuser ports

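1. Introduction

A container running a DPDK PMD application can attach directly to a dpdkvhostuser port (socket) created by OVS-DPDK. With this setup, the DPDK PMD applications in two containers exchange east-west traffic at high speed, entirely in userspace, through the DPDK PMD driver. This article walks through the configuration.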

Reposted from https://blog.csdn.net/cloudvtech

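2. Environment and configuration

2.1 System environment

OS:

cat /etc/redhat-release

CentOS Linux release 7.3.1611 (Core)

8 vCPU

8 GB memory

DPDK:

dpdk-stable-17.08.1

OVS-DPDK:

openvswitch-2.8.1

2.2 Building DPDK

See the article "DPDK Series, Part 1: Installing DPDK 17.08.1 on CentOS 7.2.1511".

2.3 Building OVS-DPDK

See the article "DPDK Series, Part 7: Installing and testing OVS-DPDK on CentOS".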


3. Building the DPDK, pktgen, and testpmd container images

3.1 Building the base image

cat Dockerfile_build_tool_container

FROM centos:7.3.1611

RUN rm -rf /etc/yum.repos.d

ADD ./resource/yum.repos.d /etc/yum.repos.d

COPY ./resource/kernel-header.3.10.0-693.11.6.el7.x86_64.tgz /root

WORKDIR /root

RUN tar -zxf kernel-header.3.10.0-693.11.6.el7.x86_64.tgz && mv 3.10.0-693.11.6.el7.x86_64 /lib/modules

RUN \

yum makecache && \

yum install -y make gcc git build-essential kernel-devel kernel-headers net-tools && \

yum install -y numactl-devel.x86_64 numactl-libs.x86_64 && \

yum install -y libpcap.x86_64 libpcap-devel.x86_64 && \

yum install -y pciutils wget xz && \

yum install -y patch

CMD ["/bin/bash"]

The resource directory:

[root@container-host container]# ls resource/ -l

total 131104

-rwxr--r--. 1 root root 308 Jan 23 16:08 build_dpdk.sh

-rw-r--r--. 1 root root 16719 May 28 04:33 common_base

-rw-r--r--. 1 root root 2087 May 28 04:35 compat.h

drwxrwxr-x. 16 root root 4096 May 28 06:56 dpdk-17.05

-rw-r--r--. 1 root root 42178560 May 28 04:27 dpdk-17.05.tar

-rw-r--r--. 1 root root 44554240 Dec 7 09:02 dpdk-17.08.1.tar

-rw-r--r--. 1 root root 13994 Jan 23 16:05 igb_uio.c

-rw-r--r--. 1 root root 32662324 Jan 23 17:07 kernel-header.3.10.0-693.11.6.el7.x86_64.tgz

-rw-r--r--. 1 root root 7542744 May 28 04:29 pktgen-3.4.2.tar.gz

drwxrwxr-x. 13 root root 4096 Jan 11 08:59 pktgen-3.4.8

-rw-r--r--. 1 root root 7233352 Jan 24 07:56 pktgen-3.4.8.tar.gz

-rw-r--r--. 1 root root 10810 Jan 24 08:37 pktgen-latency.c

drwxr-xr-x. 2 root root 4096 Jan 21 06:54 yum.repos.d

3.2 Building the DPDK base image

cat Dockerfile_dpdk_base

FROM centos_build:7.3.1611

COPY ./resource/dpdk-17.08.1.tar /root

WORKDIR /root/

RUN tar -xf dpdk-17.08.1.tar

WORKDIR /root/dpdk-stable-17.08.1

# the two lines below may be needed to replace igb_uio.c with a patched copy

#RUN rm ./lib/librte_eal/linuxapp/igb_uio/igb_uio.c

#ADD ./resource/igb_uio.c ./lib/librte_eal/linuxapp/igb_uio/igb_uio.c

ENV RTE_SDK=/root/dpdk-stable-17.08.1

ENV RTE_TARGET=x86_64-native-linuxapp-gcc

COPY ./resource/build_dpdk.sh /root

RUN /root/build_dpdk.sh

CMD ["/bin/bash"]

cat resource/build_dpdk.sh

export RTE_SDK=/root/dpdk-stable-17.08.1

export RTE_TARGET=x86_64-native-linuxapp-gcc

make config T=x86_64-native-linuxapp-gcc

sed -ri 's,(PMD_PCAP=).*,\1y,' build/.config

make

cd $RTE_SDK

make install T=x86_64-native-linuxapp-gcc

make -C examples RTE_SDK=$(pwd) RTE_TARGET=build O=$(pwd)/build/examples
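The sed line in build_dpdk.sh switches the pcap PMD on in the generated build/.config. As a minimal sketch of what that substitution does, the snippet below applies the same expression to sample text instead of the real config file. The helper name enable_pcap and the sample lines are illustrative assumptions; -E is used here as the portable spelling of GNU sed's -r.

```shell
#!/bin/sh
# enable_pcap: apply the same substitution as build_dpdk.sh to config text on stdin.
# Any line containing "PMD_PCAP=" gets its value replaced with "y".
enable_pcap() {
    sed -E 's,(PMD_PCAP=).*,\1y,'
}

# Sample input (illustrative lines, not the full DPDK config).
printf 'CONFIG_RTE_LIBRTE_PMD_PCAP=n\nCONFIG_RTE_LIBRTE_PMD_NULL=y\n' | enable_pcap
```

Only the PMD_PCAP line changes; every other line passes through untouched.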

3.3 Building the pktgen image

cat Dockerfile_pktgen

FROM dpdk_base:latest

COPY ./resource/pktgen-3.4.8.tar.gz /root

WORKDIR /root/

RUN tar -zxf pktgen-3.4.8.tar.gz

WORKDIR /root/pktgen-3.4.8

ENV RTE_SDK=/root/dpdk-stable-17.08.1

ENV RTE_TARGET=x86_64-native-linuxapp-gcc

RUN yum install -y numactl libpcap-devel

RUN rm -f ./app/pktgen-latency.c

COPY ./resource/pktgen-latency.c ./app

RUN make

CMD ["/bin/bash"]

3.4 Building the testpmd image

cat Dockerfile_testpmd

FROM centos_build:7.3.1611

COPY ./resource/dpdk-17.08.1.tar /root

WORKDIR /root/

RUN tar -xf dpdk-17.08.1.tar

WORKDIR /root/dpdk-stable-17.08.1

RUN rm ./lib/librte_eal/linuxapp/igb_uio/igb_uio.c

ADD ./resource/igb_uio.c ./lib/librte_eal/linuxapp/igb_uio/igb_uio.c

ENV RTE_SDK=/root/dpdk-stable-17.08.1

ENV RTE_TARGET=x86_64-native-linuxapp-gcc

COPY ./resource/build_dpdk.sh /root

RUN /root/build_dpdk.sh

CMD ["/bin/bash"]

3.5 all-in-one

cat build.sh

echo "build build tool container"

docker build -t centos_build:7.3.1611 . -f Dockerfile_build_tool_container

echo "build dpdk base container"

docker build -t dpdk_base . -f Dockerfile_dpdk_base

echo "build dpdk pktgen container"

docker build -t dpdk_pktgen . -f Dockerfile_pktgen

echo "build dpdk testpmd container"

docker build -t dpdk_testpmd . -f Dockerfile_testpmd

echo "remove unused images"

docker rmi $(docker images | awk '/^<none>/ { print $3 }')
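The last step of build.sh is meant to clean up images left untagged by the rebuilds. Docker lists such images with <none> in the REPOSITORY column, so the cleanup comes down to filtering on that column. The sketch below shows the filter on sample text; the helper name untagged_image_ids and the <none> row are made up for illustration.

```shell
#!/bin/sh
# untagged_image_ids: read `docker images` style text on stdin and print the
# third column (the image ID) for rows whose REPOSITORY column is <none>.
untagged_image_ids() {
    awk '$1 == "<none>" { print $3 }'
}

# Sample listing; the <none> row is a made-up example.
sample='REPOSITORY TAG IMAGE ID CREATED SIZE
dpdk_base latest 102357908366 4 months ago 1.659 GB
<none> <none> 0123456789ab 5 months ago 1.2 GB'

printf '%s\n' "$sample" | untagged_image_ids
```

On a real host the input would come from `docker images`. Docker can also list dangling image IDs directly with `docker images -f dangling=true -q`, which avoids parsing the table.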

3.6 The built images

[root@container-host container]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

dpdk_pktgen latest 958fa59981ba 21 hours ago 1.824 GB

dpdk_base latest 102357908366 4 months ago 1.659 GB

dpdk_testpmd latest 102357908366 4 months ago 1.659 GB

centos_build 7.3.1611 c7b1c9560914 4 months ago 817.5 MB

docker.io/centos 7.3.1611 66ee80d59a68 6 months ago 191.8 MB

4. Running OVS and creating the dpdkvhostuser ports

4.1 Configuring hugepages

cat 1_configure.sh

echo 2048 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

mkdir /mnt/huge

chmod 777 /mnt/huge

mount -t hugetlbfs nodev /mnt/huge

cat /proc/meminfo | grep Huge

4.2 Loading the DPDK kernel modules

cat 2_setup_nic.sh

export RTE_SDK=/root/dpdk-stable-17.08.1

export RTE_TARGET=x86_64-native-linuxapp-gcc

modprobe uio_pci_generic

modprobe uio

modprobe vfio-pci

insmod /root/dpdk-stable-17.08.1/build/kmod/igb_uio.ko

/root/dpdk-stable-17.08.1/usertools/dpdk-devbind.py --status

4.3 Configuring OVS

cat 3_setup_ovs.sh

rm -rf /usr/local/etc/openvswitch/conf.db

/root/openvswitch-2.8.1/ovsdb/ovsdb-tool create /usr/local/etc/openvswitch/conf.db /root/openvswitch-2.8.1/vswitchd/vswitch.ovsschema

/root/openvswitch-2.8.1/ovsdb/ovsdb-server --remote=punix:/usr/local/var/run/openvswitch/db.sock --remote=db:Open_vSwitch,Open_vSwitch,manager_options --pidfile --detach

ps -ef | grep ovsdb-server

/root/openvswitch-2.8.1/utilities/ovs-vsctl --no-wait init

/root/openvswitch-2.8.1/utilities/ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true

/root/openvswitch-2.8.1/utilities/ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=0x2

/root/openvswitch-2.8.1/utilities/ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x4

/root/openvswitch-2.8.1/utilities/ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem=512

export DB_SOCK=/usr/local/var/run/openvswitch/db.sock

Here dpdk-lcore-mask=0x2 assigns core 1 to the DPDK lcore threads and pmd-cpu-mask=0x4 assigns core 2 to the PMD thread (cores are numbered from 0).
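Each mask is a bitmap in which bit N selects core N. A minimal sketch for decoding one, useful for double-checking the values before starting OVS, is shown below; the helper name mask_to_cores is an illustrative assumption.

```shell
#!/bin/sh
# mask_to_cores: print the zero-based core numbers selected by a hex CPU mask.
mask_to_cores() {
    mask=$(( $1 ))          # accepts hex input such as 0x4
    core=0
    out=""
    while [ "$mask" -gt 0 ]; do
        if [ $(( mask & 1 )) -eq 1 ]; then
            out="${out:+$out }$core"   # append, space-separated
        fi
        mask=$(( mask >> 1 ))
        core=$(( core + 1 ))
    done
    echo "$out"
}

mask_to_cores 0x2   # dpdk-lcore-mask -> 1
mask_to_cores 0x4   # pmd-cpu-mask    -> 2
```

The same helper decodes the EAL masks used later in this article: 0xE0 gives cores 5 6 7 and 0x19 gives cores 0 3 4.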

4.4 Starting OVS and creating bridge br0

cat 4_start_ovs.sh

export DB_SOCK=/usr/local/var/run/openvswitch/db.sock

/root/openvswitch-2.8.1/utilities/ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start

/root/openvswitch-2.8.1/utilities/ovs-vsctl show

/root/openvswitch-2.8.1/utilities/ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev

#/root/openvswitch-2.8.1/utilities/ovs-vsctl show

4.5 Adding ports vhost-user[0,1,2,3]

cat 5_add_port.sh

/root/openvswitch-2.8.1/utilities/ovs-vsctl add-port br0 vhost-user0 -- set Interface vhost-user0 type=dpdkvhostuser

/root/openvswitch-2.8.1/utilities/ovs-vsctl add-port br0 vhost-user1 -- set Interface vhost-user1 type=dpdkvhostuser

/root/openvswitch-2.8.1/utilities/ovs-vsctl add-port br0 vhost-user2 -- set Interface vhost-user2 type=dpdkvhostuser

/root/openvswitch-2.8.1/utilities/ovs-vsctl add-port br0 vhost-user3 -- set Interface vhost-user3 type=dpdkvhostuser

/root/openvswitch-2.8.1/utilities/ovs-vsctl show

Because the traffic here is purely east-west, no physical NIC needs to be added to the bridge as a port.
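The four add-port commands differ only in the port index, so they can be generated in a loop. The sketch below prints the commands as a dry run by default. The function name add_vhost_ports and the RUN switch are illustrative assumptions; the ovs-vsctl path and arguments are the ones used in 5_add_port.sh.

```shell
#!/bin/sh
# Path taken from the scripts in this article.
OVS_VSCTL=/root/openvswitch-2.8.1/utilities/ovs-vsctl

# add_vhost_ports BRIDGE COUNT: emit one add-port command per port index.
# Prints the commands by default; set RUN=1 to execute them instead.
add_vhost_ports() {
    bridge=$1
    count=$2
    i=0
    while [ "$i" -lt "$count" ]; do
        cmd="$OVS_VSCTL add-port $bridge vhost-user$i -- set Interface vhost-user$i type=dpdkvhostuser"
        if [ "${RUN:-0}" = "1" ]; then
            $cmd            # word splitting is intended: no argument contains spaces
        else
            echo "$cmd"
        fi
        i=$(( i + 1 ))
    done
}

add_vhost_ports br0 4
```

Printing first makes it easy to review the commands before running them against a live bridge.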

4.6 Adding OVS flow rules

cat 6_add_ovs_flow.sh

/root/openvswitch-2.8.1/utilities/ovs-ofctl del-flows br0

/root/openvswitch-2.8.1/utilities/ovs-ofctl add-flow br0 in_port=2,dl_type=0x800,idle_timeout=0,action=output:3

/root/openvswitch-2.8.1/utilities/ovs-ofctl add-flow br0 in_port=3,dl_type=0x800,idle_timeout=0,action=output:2

/root/openvswitch-2.8.1/utilities/ovs-ofctl add-flow br0 in_port=1,dl_type=0x800,idle_timeout=0,action=output:4

/root/openvswitch-2.8.1/utilities/ovs-ofctl add-flow br0 in_port=4,dl_type=0x800,idle_timeout=0,action=output:1

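/root/openvswitch-2.8.1/utilities/ovs-ofctl show br0

Packets arriving on port 1 are forwarded to port 4 and packets arriving on port 2 are forwarded to port 3; the reverse directions (4 to 1, 3 to 2) are installed as well.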
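Each pair of ports needs two rules, one per direction. A small sketch that generates both rules for a pair is shown below; the function name cross_connect is an illustrative assumption, and the match fields and actions are copied from 6_add_ovs_flow.sh. The commands are only printed, not executed.

```shell
#!/bin/sh
# Path taken from the scripts in this article.
OVS_OFCTL=/root/openvswitch-2.8.1/utilities/ovs-ofctl

# cross_connect BRIDGE A B: print the two add-flow commands that forward IPv4
# traffic between OpenFlow ports A and B, one command per direction.
cross_connect() {
    br=$1
    a=$2
    b=$3
    echo "$OVS_OFCTL add-flow $br in_port=$a,dl_type=0x800,idle_timeout=0,action=output:$b"
    echo "$OVS_OFCTL add-flow $br in_port=$b,dl_type=0x800,idle_timeout=0,action=output:$a"
}

cross_connect br0 2 3
cross_connect br0 1 4
```

Piping the output to sh would apply the rules, and `ovs-ofctl dump-flows br0` can then be used to confirm what was installed.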


5. Running the testpmd container

docker run -ti --privileged -v /mnt/huge:/mnt/huge -v /usr/local/var/run/openvswitch:/var/run/openvswitch dpdk_testpmd

export DPDK_PARAMS="-c 0xE0 -n 1 --socket-mem 1024 \

--file-prefix testpmd --no-pci \

--vdev=virtio_user2,mac=00:00:00:00:00:02,path=/var/run/openvswitch/vhost-user2 \

--vdev=virtio_user3,mac=00:00:00:00:00:03,path=/var/run/openvswitch/vhost-user3"

export TESTPMD_PARAMS="--burst=64 -i --disable-hw-vlan --txd=2048 --rxd=2048 -a --coremask=0xc0"

./build/app/testpmd $DPDK_PARAMS -- $TESTPMD_PARAMS

EAL: Detected 8 lcore(s)

EAL: No free hugepages reported in hugepages-1048576kB

Interactive-mode selected

Auto-start selected

previous number of forwarding cores 1 - changed to number of configured cores 2

Warning: NUMA should be configured manually by using --port-numa-config and --ring-numa-config parameters along with --numa.

USER1: create a new mbuf pool : n=163456, size=2176, socket=0

Configuring Port 0 (socket 0)

Port 0: 00:00:00:00:00:02

Configuring Port 1 (socket 0)

Port 1: 00:00:00:00:00:03

Checking link statuses...

Done

Start automatic packet forwarding

io packet forwarding - ports=2 - cores=2 - streams=2 - NUMA support enabled, MP over anonymous pages disabled

Logical Core 6 (socket 0) forwards packets on 1 streams:

RX P=0/Q=0 (socket 0) -> TX P=1/Q=0 (socket 0) peer=02:00:00:00:00:01

Logical Core 7 (socket 0) forwards packets on 1 streams:

RX P=1/Q=0 (socket 0) -> TX P=0/Q=0 (socket 0) peer=02:00:00:00:00:00

io packet forwarding - CRC stripping enabled - packets/burst=64

nb forwarding cores=2 - nb forwarding ports=2

RX queues=1 - RX desc=2048 - RX free threshold=0

RX threshold registers: pthresh=0 hthresh=0 wthresh=0

TX queues=1 - TX desc=2048 - TX free threshold=0

TX threshold registers: pthresh=0 hthresh=0 wthresh=0

TX RS bit threshold=0 - TXQ flags=0xf00

Cores 6 and 7 forward packets for testpmd; core 5, the remaining core in -c 0xE0, serves as the master lcore for the interactive prompt.
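Hugepage memory is a shared budget here: OVS reserves 512 MB (dpdk-socket-mem), testpmd 1024 MB (--socket-mem), and the pktgen container in the next section another 1024 MB, all out of the 2048 pages of 2048 kB configured in 1_configure.sh. The sketch below does that arithmetic. It assumes all three processes draw from the same pool on one NUMA socket, and the helper names are illustrative.

```shell
#!/bin/sh
# hugepage_budget_mb PAGES PAGE_KB: total hugepage memory in MB.
hugepage_budget_mb() {
    echo $(( $1 * $2 / 1024 ))
}

# sum_mb MB...: add up per-process reservations given in MB.
sum_mb() {
    total=0
    for mb in "$@"; do
        total=$(( total + mb ))
    done
    echo "$total"
}

budget=$(hugepage_budget_mb 2048 2048)   # nr_hugepages=2048, 2048 kB per page
needed=$(sum_mb 512 1024 1024)           # OVS, testpmd, pktgen
echo "budget=${budget}MB needed=${needed}MB"
if [ "$needed" -le "$budget" ]; then
    echo "fits"
else
    echo "over budget"
fi
```

With the values from this article the reservations total 2560 MB against a 4096 MB pool, so the three processes fit with room to spare.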


6. Running the pktgen container

docker run -ti --privileged -v /mnt/huge:/mnt/huge -v /usr/local/var/run/openvswitch:/var/run/openvswitch dpdk_pktgen

export DPDK_PARAMS="-c 0x19 --master-lcore 3 -n 1 --socket-mem 1024 \

--file-prefix pktgen --no-pci \

--vdev=virtio_user0,mac=00:00:00:00:00:00,path=/var/run/openvswitch/vhost-user0 \

--vdev=virtio_user1,mac=00:00:00:00:00:01,path=/var/run/openvswitch/vhost-user1 "

export PKTGEN_PARAMS='-T -P -m "0.0,4.1"'

./app/x86_64-native-linuxapp-gcc/pktgen $DPDK_PARAMS -- $PKTGEN_PARAMS

Log:

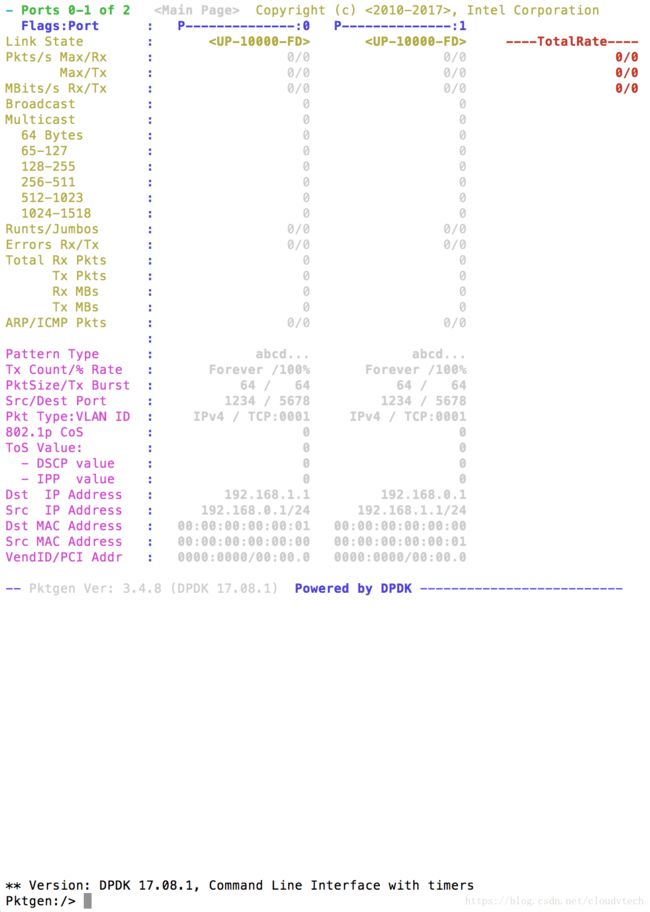

Copyright (c) <2010-2017>, Intel Corporation. All rights reserved. Powered by DPDK

EAL: Detected 8 lcore(s)

EAL: No free hugepages reported in hugepages-1048576kB

Lua 5.3.4 Copyright (C) 1994-2017 Lua.org, PUC-Rio

Copyright (c) <2010-2017>, Intel Corporation. All rights reserved.

Pktgen created by: Keith Wiles -- >>> Powered by DPDK <<<

>>> Packet Burst 64, RX Desc 1024, TX Desc 2048, mbufs/port 16384, mbuf cache 2048

=== port to lcore mapping table (# lcores 2) ===

lcore: 3 4 Total

port 0: ( D: T) ( 0: 0) = ( 1: 1)

port 1: ( D: T) ( 1: 1) = ( 1: 1)

Total : ( 0: 0) ( 1: 1)

Display and Timer on lcore 3, rx:tx counts per port/lcore

Configuring 2 ports, MBUF Size 2176, MBUF Cache Size 2048

Lcore:

0, RX-TX

RX_cnt( 1): (pid= 0:qid= 0)

TX_cnt( 1): (pid= 0:qid= 0)

4, RX-TX

RX_cnt( 1): (pid= 1:qid= 0)

TX_cnt( 1): (pid= 1:qid= 0)

Port :

0, nb_lcores 1, private 0xa52740, lcores: 0

1, nb_lcores 1, private 0xa552a8, lcores: 4

** Default Info (virtio_user0, if_index:0) **

max_rx_queues : 1, max_tx_queues : 1

max_mac_addrs : 64, max_hash_mac_addrs: 0, max_vmdq_pools: 0

rx_offload_capa: 28, tx_offload_capa : 0, reta_size : 0, flow_type_rss_offloads:0000000000000000

vmdq_queue_base: 0, vmdq_queue_num : 0, vmdq_pool_base: 0

** RX Conf **

pthresh : 0, hthresh : 0, wthresh : 0

Free Thresh : 0, Drop Enable : 0, Deferred Start : 0

** TX Conf **

pthresh : 0, hthresh : 0, wthresh : 0

Free Thresh : 0, RS Thresh : 0, Deferred Start : 0, TXQ Flags:00000f00

Create: Default RX 0:0 - Memory used (MBUFs 16384 x (size 2176 + Hdr 128)) + 192 = 36865 KB headroom 128 2176

Set RX queue stats mapping pid 0, q 0, lcore 0

Create: Default TX 0:0 - Memory used (MBUFs 16384 x (size 2176 + Hdr 128)) + 192 = 36865 KB headroom 128 2176

Create: Range TX 0:0 - Memory used (MBUFs 16384 x (size 2176 + Hdr 128)) + 192 = 36865 KB headroom 128 2176

Create: Sequence TX 0:0 - Memory used (MBUFs 16384 x (size 2176 + Hdr 128)) + 192 = 36865 KB headroom 128 2176

Create: Special TX 0:0 - Memory used (MBUFs 64 x (size 2176 + Hdr 128)) + 192 = 145 KB headroom 128 2176

Port memory used = 147601 KB

Initialize Port 0 -- TxQ 1, RxQ 1, Src MAC 00:00:00:00:00:00

** Default Info (virtio_user1, if_index:0) **

max_rx_queues : 1, max_tx_queues : 1

max_mac_addrs : 64, max_hash_mac_addrs: 0, max_vmdq_pools: 0

rx_offload_capa: 28, tx_offload_capa : 0, reta_size : 0, flow_type_rss_offloads:0000000000000000

vmdq_queue_base: 0, vmdq_queue_num : 0, vmdq_pool_base: 0

** RX Conf **

pthresh : 0, hthresh : 0, wthresh : 0

Free Thresh : 0, Drop Enable : 0, Deferred Start : 0

** TX Conf **

pthresh : 0, hthresh : 0, wthresh : 0

Free Thresh : 0, RS Thresh : 0, Deferred Start : 0, TXQ Flags:00000f00

Create: Default RX 1:0 - Memory used (MBUFs 16384 x (size 2176 + Hdr 128)) + 192 = 36865 KB headroom 128 2176

Set RX queue stats mapping pid 1, q 0, lcore 4

Create: Default TX 1:0 - Memory used (MBUFs 16384 x (size 2176 + Hdr 128)) + 192 = 36865 KB headroom 128 2176

Create: Range TX 1:0 - Memory used (MBUFs 16384 x (size 2176 + Hdr 128)) + 192 = 36865 KB headroom 128 2176

Create: Sequence TX 1:0 - Memory used (MBUFs 16384 x (size 2176 + Hdr 128)) + 192 = 36865 KB headroom 128 2176

Create: Special TX 1:0 - Memory used (MBUFs 64 x (size 2176 + Hdr 128)) + 192 = 145 KB headroom 128 2176

Port memory used = 147601 KB

Initialize Port 1 -- TxQ 1, RxQ 1, Src MAC 00:00:00:00:00:01

Total memory used = 295202 KB

Port 0: Link Up - speed 10000 Mbps - full-duplex

!ERROR!: Could not read enough random data for PRNG seed

Port 1: Link Up - speed 10000 Mbps - full-duplex

!ERROR!: Could not read enough random data for PRNG seed

=== Display processing on lcore 3

RX/TX processing lcore: 0 rx: 1 tx: 1

For RX found 1 port(s) for lcore 0

For TX found 1 port(s) for lcore 0

RX/TX processing lcore: 4 rx: 1 tx: 1

For RX found 1 port(s) for lcore 4

For TX found 1 port(s) for lcore 4

Interactive window:

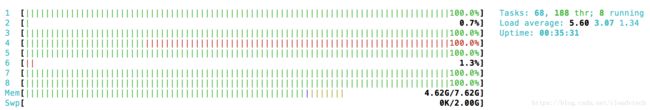

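pktgen runs on cores 0, 3, and 4: cores 0 and 4 handle packet RX/TX for ports 0 and 1, and core 3 (the master lcore) runs the display and timers.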
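With OVS (0x2 and 0x4), testpmd (0xE0), and pktgen (0x19) all pinned on the same 8-core host, it is worth confirming that no two masks claim the same core. A minimal sketch of that check follows; the function name masks_overlap is an illustrative assumption.

```shell
#!/bin/sh
# masks_overlap MASK...: exit 0 if any two of the given CPU masks share a core,
# exit 1 if they are all disjoint.
masks_overlap() {
    seen=0
    for m in "$@"; do
        if [ $(( seen & $m )) -ne 0 ]; then
            return 0
        fi
        seen=$(( seen | $m ))
    done
    return 1
}

# Masks used in this article: OVS lcore, OVS PMD, testpmd, pktgen.
if masks_overlap 0x2 0x4 0xE0 0x19; then
    echo "overlap"
else
    echo "no overlap"
fi
```

For these four masks the check reports no overlap, and together they cover all eight cores (0xFF).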
