Integrating Kafka and ZooKeeper with Spring MVC to produce and consume queue messages

Integration steps

- Install and start ZooKeeper

- Install and start Kafka

- Create the message producer

- Create the message consumer

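1. Install and start ZooKeeper

Configure zoo.cfg. The Windows paths are written with forward slashes because zoo.cfg is read as a Java properties file, where a single backslash is an escape character:

    dataDir=D:/Sofeware/apache-zookeeper-3.5.5-bin/tmp
    dataLogDir=D:/Sofeware/apache-zookeeper-3.5.5-bin/logs

Double-click zkServer.cmd to start ZooKeeper.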
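To see what happens to a Windows path in a properties-style file, here is a minimal sketch using only `java.util.Properties`. It assumes ZooKeeper loads zoo.cfg through that class (which is my understanding of its config parser); the class name `ZooCfgPathDemo` is made up for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

final class ZooCfgPathDemo {

    /** Parses properties-file text and returns the value stored under dataDir. */
    static String parseDataDir(String cfgText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(cfgText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty("dataDir");
    }

    public static void main(String[] args) {
        // The Java literal below stands for single backslashes in the file on disk.
        String backslashes = "dataDir=D:\\Sofeware\\apache-zookeeper-3.5.5-bin\\tmp";
        // Forward slashes are passed through unchanged.
        String forward = "dataDir=D:/Sofeware/apache-zookeeper-3.5.5-bin/tmp";

        // With backslashes, the separators are dropped and "\t" becomes a tab character.
        System.out.println("[" + parseDataDir(backslashes) + "]");
        System.out.println("[" + parseDataDir(forward) + "]");
    }
}
```

Doubling each backslash in the file would also preserve the path; forward slashes are simply easier to read.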

2. Install and start Kafka

Download and unpack Kafka.

In server.properties, configure the ZooKeeper connection address:

    zookeeper.connect=localhost:2181
    zookeeper.connection.timeout.ms=6000

Start Kafka: cd to the installation directory and run the start script with the server configuration file:

    D:\Sofeware\kafka_2.11-2.3.0>.\bin\windows\kafka-server-start.bat .\config\server.properties
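Before moving on to the producer, it can help to confirm that both servers are actually listening. The sketch below is a plain TCP check, not a Kafka or ZooKeeper client; it assumes ZooKeeper is on port 2181 (from zookeeper.connect above) and the broker on port 9092 (the address used in kafka.properties later in this article). `PortCheck` is a hypothetical helper name.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused or timed out: nothing is accepting connections there.
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("ZooKeeper (2181): " + isListening("localhost", 2181, 1000));
        System.out.println("Kafka (9092): " + isListening("localhost", 9092, 1000));
    }
}
```

An open port only shows that a process is accepting connections; it does not prove the broker has finished registering with ZooKeeper.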

3. Create the message producer

applicationContext.xml configuration:

Load the kafka.properties configuration file.

Import the producer-kafka.xml configuration file.

    <bean id="propertyConfigurer" class="org.springframework.beans.factory.config.PropertyPlaceholderConfigurer">
        <property name="systemPropertiesModeName" value="SYSTEM_PROPERTIES_MODE_OVERRIDE" />
        <property name="ignoreResourceNotFound" value="true" />
        <property name="locations">
            <list>
                <value>classpath:conf/kafka.properties</value>
            </list>
        </property>
    </bean>

    <import resource="producer-kafka.xml" />

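kafka.properties configuration:

    ################# kafka producer ##################

    # Broker list (host:port, comma-separated for a cluster)
    kafka.producer.bootstrap.servers = localhost:9092

    # Wait for all in-sync replicas to acknowledge each record
    kafka.producer.acks = all

    # Number of retries when a send fails
    kafka.producer.retries = 3

    # Time in milliseconds to wait for more records before sending a batch
    kafka.producer.linger.ms = 10

    # Total memory in bytes for buffering records that are waiting to be sent.
    # Kafka's default is 33554432 (32 MB); this example uses 40960 bytes (40 KB).
    kafka.producer.buffer.memory = 40960

    # Batch size in bytes (not a record count). When several records go to the
    # same partition, the producer tries to combine them into fewer requests,
    # which helps both client and server performance.
    kafka.producer.batch.size = 4096

    # Topic used by KafkaTemplate.sendDefault
    kafka.producer.defaultTopic = alarm

    kafka.producer.key.serializer = org.apache.kafka.common.serialization.StringSerializer
    kafka.producer.value.serializer = org.apache.kafka.common.serialization.StringSerializer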
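Note that the `kafka.producer.` prefix is this project's own naming; the Kafka client only understands the bare names such as `acks` or `batch.size`. In this article the translation is done entry by entry in producer-kafka.xml. As a rough illustration of the same mapping in code, here is a sketch that strips the prefix; `ProducerConfigMapper` is a hypothetical helper, not part of spring-kafka.

```java
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

final class ProducerConfigMapper {

    static final String PREFIX = "kafka.producer.";

    /** Copies every "kafka.producer.*" entry into a map keyed by the bare client property name. */
    static Map<String, String> toClientConfig(Properties source) {
        Map<String, String> config = new TreeMap<>();
        for (String name : source.stringPropertyNames()) {
            // defaultTopic configures KafkaTemplate, not the Kafka client, so it is skipped.
            if (name.startsWith(PREFIX) && !name.equals(PREFIX + "defaultTopic")) {
                config.put(name.substring(PREFIX.length()), source.getProperty(name).trim());
            }
        }
        return config;
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("kafka.producer.bootstrap.servers", "localhost:9092");
        props.setProperty("kafka.producer.acks", "all");
        props.setProperty("kafka.producer.batch.size", "4096");
        props.setProperty("kafka.producer.defaultTopic", "alarm");
        System.out.println(toClientConfig(props));
    }
}
```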

producer-kafka.xml configuration:

    <beans xmlns="http://www.springframework.org/schema/beans"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xsi:schemaLocation="http://www.springframework.org/schema/beans
           http://www.springframework.org/schema/beans/spring-beans.xsd">

        <!-- Producer settings, resolved from kafka.properties -->
        <bean id="producerProperties" class="java.util.HashMap">
            <constructor-arg>
                <map>
                    <entry key="bootstrap.servers" value="${kafka.producer.bootstrap.servers}" />
                    <entry key="retries" value="${kafka.producer.retries}" />
                    <entry key="batch.size" value="${kafka.producer.batch.size}" />
                    <entry key="linger.ms" value="${kafka.producer.linger.ms}" />
                    <entry key="buffer.memory" value="${kafka.producer.buffer.memory}" />
                    <entry key="acks" value="${kafka.producer.acks}" />
                    <entry key="key.serializer"
                           value="${kafka.producer.key.serializer}" />
                    <entry key="value.serializer"
                           value="${kafka.producer.value.serializer}" />
                </map>
            </constructor-arg>
        </bean>

        <bean id="producerFactory"
              class="org.springframework.kafka.core.DefaultKafkaProducerFactory">
            <constructor-arg>
                <ref bean="producerProperties" />
            </constructor-arg>
        </bean>

        <bean id="kafkaProducerListener" class="com.bdxh.rpc.service.KafkaProducerListener" />

        <bean id="kafkaTemplate" class="org.springframework.kafka.core.KafkaTemplate">
            <constructor-arg ref="producerFactory" />
            <constructor-arg name="autoFlush" value="true" />
            <property name="defaultTopic" value="${kafka.producer.defaultTopic}" />
            <!-- Attach the listener; without this the bean above is never called -->
            <property name="producerListener" ref="kafkaProducerListener" />
        </bean>

    </beans>

Create the KafkaProducerListener.java listener class referenced in producer-kafka.xml.

    package com.bdxh.rpc.service;

    import org.apache.kafka.clients.producer.RecordMetadata;
    import org.apache.log4j.Logger;
    import org.springframework.kafka.support.ProducerListener;

    public class KafkaProducerListener implements ProducerListener<Object, Object> {

        protected final Logger logger = Logger.getLogger(KafkaProducerListener.class.getName());

        @Override
        public void onSuccess(String topic, Integer partition, Object key, Object value, RecordMetadata recordMetadata) {
            logger.info("-----------------kafka send succeeded");
            logger.info("----------topic:" + topic);
            logger.info("----------partition:" + partition);
            logger.info("----------key:" + key);
            logger.info("----------value:" + value);
            logger.info("----------RecordMetadata:" + recordMetadata);
            logger.info("-----------------kafka send finished");
        }

        @Override
        public void onError(String topic, Integer partition, Object key, Object value, Exception e) {
            logger.error("-----------------kafka send failed");
            logger.error("----------topic:" + topic);
            logger.error("----------partition:" + partition);
            logger.error("----------key:" + key);
            logger.error("----------value:" + value);
            // Log the stack trace through the logger instead of e.printStackTrace()
            logger.error("-----------------kafka send failure details", e);
        }

        /**
         * Whether this listener wants success callbacks. Returning false means
         * onSuccess is skipped and only onError is invoked.
         */
        @Override
        public boolean isInterestedInSuccess() {
            return false;
        }
    }
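Because isInterestedInSuccess() returns false in the listener above, the success log lines will not appear. The sketch below is a simplified model of that gating, written with plain Java and no Kafka dependency. The `SendListener` interface and `dispatch` method are hypothetical stand-ins, modeled on how I understand KafkaTemplate in spring-kafka 1.x to treat its listener; they are not the library's code.

```java
import java.util.ArrayList;
import java.util.List;

final class ListenerGatingDemo {

    /** Hypothetical stand-in for the three listener methods used in this article. */
    interface SendListener {
        void onSuccess(String topic, String value);
        void onError(String topic, String value, Exception e);
        boolean isInterestedInSuccess();
    }

    /** Simplified dispatch: success callbacks are gated by the flag, error callbacks are not. */
    static void dispatch(SendListener listener, String topic, String value, Exception failure) {
        if (failure == null) {
            if (listener.isInterestedInSuccess()) {
                listener.onSuccess(topic, value);
            }
        } else {
            listener.onError(topic, value, failure);
        }
    }

    /** Simulates one successful and one failed send, recording which callbacks fired. */
    static List<String> run(boolean interestedInSuccess) {
        List<String> calls = new ArrayList<>();
        SendListener listener = new SendListener() {
            @Override public void onSuccess(String topic, String value) { calls.add("onSuccess"); }
            @Override public void onError(String topic, String value, Exception e) { calls.add("onError"); }
            @Override public boolean isInterestedInSuccess() { return interestedInSuccess; }
        };
        dispatch(listener, "alarm", "ok", null);
        dispatch(listener, "alarm", "bad", new RuntimeException("send failed"));
        return calls;
    }

    public static void main(String[] args) {
        System.out.println(run(false)); // prints [onError]
        System.out.println(run(true));  // prints [onSuccess, onError]
    }
}
```

If you want to see the success log while testing, returning true from isInterestedInSuccess() is the switch to flip.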

Finally, add the POM dependencies:

    <dependency>
        <groupId>com.101tec</groupId>
        <artifactId>zkclient</artifactId>
        <version>0.3</version>
    </dependency>

    <dependency>
        <groupId>org.apache.zookeeper</groupId>
        <artifactId>zookeeper</artifactId>
        <version>3.4.5</version>
    </dependency>

    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>dubbo</artifactId>
        <version>2.5.3</version>
        <scope>compile</scope>
        <exclusions>
            <exclusion>
                <artifactId>spring</artifactId>
                <groupId>org.springframework</groupId>
            </exclusion>
        </exclusions>
    </dependency>

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>1.0.1</version>
    </dependency>

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
        <version>1.3.5.RELEASE</version>
        <exclusions>
            <exclusion>
                <groupId>org.apache.kafka</groupId>
                <artifactId>kafka-clients</artifactId>
            </exclusion>
        </exclusions>
    </dependency>

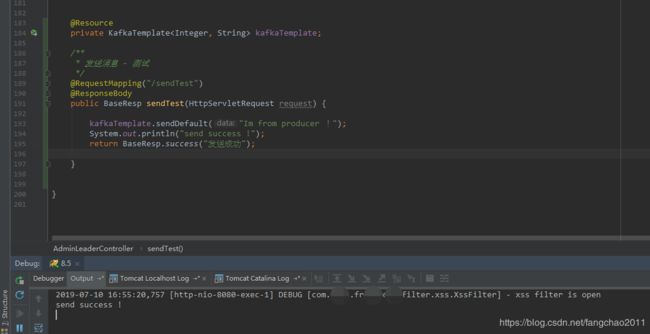

Write a controller method to test it:

    @Resource
    private KafkaTemplate<String, String> kafkaTemplate;

    /**
     * Send a message (test).
     */
    @RequestMapping("/sendTest")
    @ResponseBody
    public BaseResp sendTest(HttpServletRequest request) {
        // sendDefault publishes to the template's defaultTopic (alarm)
        kafkaTemplate.sendDefault("Im from producer !");
        System.out.println("send success !");
        return BaseResp.success("Sent successfully");
    }

4. Create the message consumer

applicationContext.xml configuration:

Load the kafka.properties configuration file.

Import the consumer-kafka.xml configuration file.

    <bean id="propertyConfigurer" class="org.springframework.beans.factory.config.PropertyPlaceholderConfigurer">
        <property name="systemPropertiesModeName" value="SYSTEM_PROPERTIES_MODE_OVERRIDE" />
        <property name="ignoreResourceNotFound" value="true" />
        <property name="locations">
            <list>
                <value>classpath:conf/kafka.properties</value>
            </list>
        </property>
    </bean>

    <import resource="consumer-kafka.xml" />

kafka.properties configuration file:

    ################# kafka consumer ##################

    kafka.consumer.bootstrap.servers = localhost:9092

    # If true, the consumer's offsets are committed periodically in the background
    kafka.consumer.enable.auto.commit = true

    # Commit interval in milliseconds, used when enable.auto.commit=true
    kafka.consumer.auto.commit.interval.ms=1000

    # Consumer group ID. In publish-subscribe mode, if several consumers must each
    # receive a producer's messages, give each its own group; within one group,
    # only one consumer receives a given message.
    kafka.consumer.group.id = ebike-alarm

    # Alarm topic
    kafka.alarm.topic = alarm

    # Timeout used to detect consumer failures when using Kafka's group management
    kafka.consumer.session.timeout.ms = 30000

    kafka.consumer.key.deserializer = org.apache.kafka.common.serialization.StringDeserializer
    kafka.consumer.value.deserializer = org.apache.kafka.common.serialization.StringDeserializer
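The group.id comment is easier to picture with a toy model. Within a group, each partition of a topic is read by exactly one member, so a message is delivered once per group; a second group gets its own full copy. The sketch below hands out partitions round-robin, which is a deliberate simplification and not Kafka's actual assignment strategy. The consumer names and the `audit` group are made up for illustration.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class GroupDeliveryDemo {

    /** Assigns partitions 0..partitionCount-1 to the members of one group, round-robin. */
    static Map<Integer, String> assign(int partitionCount, List<String> members) {
        Map<Integer, String> owners = new LinkedHashMap<>();
        for (int p = 0; p < partitionCount; p++) {
            // Each partition gets exactly one owner inside the group.
            owners.put(p, members.get(p % members.size()));
        }
        return owners;
    }

    public static void main(String[] args) {
        // Two members share the group from this article.
        System.out.println("ebike-alarm: " + assign(3, Arrays.asList("consumer-a", "consumer-b")));
        // A second, hypothetical group reads every partition independently.
        System.out.println("audit: " + assign(3, Arrays.asList("consumer-c")));
    }
}
```

So if two services both need every alarm message, they should use different group IDs rather than share `ebike-alarm`.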

consumer-kafka.xml configuration file:

    <beans xmlns="http://www.springframework.org/schema/beans"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xsi:schemaLocation="http://www.springframework.org/schema/beans
           http://www.springframework.org/schema/beans/spring-beans.xsd">

        <!-- Consumer settings, resolved from kafka.properties -->
        <bean id="consumerProperties" class="java.util.HashMap">
            <constructor-arg>
                <map>
                    <entry key="bootstrap.servers" value="${kafka.consumer.bootstrap.servers}" />
                    <entry key="group.id" value="${kafka.consumer.group.id}" />
                    <entry key="enable.auto.commit" value="${kafka.consumer.enable.auto.commit}" />
                    <entry key="session.timeout.ms" value="${kafka.consumer.session.timeout.ms}" />
                    <entry key="auto.commit.interval.ms" value="${kafka.consumer.auto.commit.interval.ms}" />
                    <entry key="retry.backoff.ms" value="100" />
                    <entry key="key.deserializer"
                           value="${kafka.consumer.key.deserializer}" />
                    <entry key="value.deserializer"
                           value="${kafka.consumer.value.deserializer}" />
                </map>
            </constructor-arg>
        </bean>

        <bean id="consumerFactory"
              class="org.springframework.kafka.core.DefaultKafkaConsumerFactory">
            <constructor-arg>
                <ref bean="consumerProperties" />
            </constructor-arg>
        </bean>

        <bean id="kafkaConsumerService" class="com.bdxh.ebike.api.controller.KafkaConsumerMessageListener" />

        <bean id="containerProperties" class="org.springframework.kafka.listener.config.ContainerProperties">
            <constructor-arg name="topics">
                <list>
                    <value>${kafka.alarm.topic}</value>
                </list>
            </constructor-arg>
            <property name="messageListener" ref="kafkaConsumerService" />
        </bean>

        <bean id="messageListenerContainer" class="org.springframework.kafka.listener.ConcurrentMessageListenerContainer" init-method="doStart">
            <constructor-arg ref="consumerFactory" />
            <constructor-arg ref="containerProperties" />
        </bean>

    </beans>

Create the KafkaConsumerMessageListener.java listener class referenced in consumer-kafka.xml.

    package com.bdxh.ebike.api.controller;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.log4j.Logger;
    import org.springframework.kafka.listener.MessageListener;

    public class KafkaConsumerMessageListener implements MessageListener<String, Object> {

        private final Logger logger = Logger.getLogger(KafkaConsumerMessageListener.class.getName());

        /**
         * Receives a message and logs its contents.
         *
         * @param record the consumed record
         */
        @Override
        public void onMessage(ConsumerRecord<String, Object> record) {
            logger.info("=============kafka message received=============");
            String topic = record.topic();
            String key = record.key();
            Object value = record.value();
            long offset = record.offset();
            int partition = record.partition();

            /*if (ConstantKafka.KAFKA_TOPIC1.equals(topic)){
                doSaveLogs(value.toString());
            }*/

            logger.info("-------------topic:" + topic);
            logger.info("-------------value:" + value);
            logger.info("-------------key:" + key);
            logger.info("-------------offset:" + offset);
            logger.info("-------------partition:" + partition);
            logger.info("=============kafka message received=============");
        }
    }
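The commented-out branch in the listener hints at the next step: doing something different depending on the topic. One way to keep that out of a growing if/else chain is a small lookup table from topic name to handler. This is only a sketch in plain Java; `TopicDispatcher` and the handler shown are hypothetical, and the listener would call `dispatch(record.topic(), String.valueOf(record.value()))` or similar.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

final class TopicDispatcher {

    private final Map<String, Consumer<String>> handlers = new HashMap<>();

    /** Registers the handler to run for messages from the given topic. */
    void register(String topic, Consumer<String> handler) {
        handlers.put(topic, handler);
    }

    /** Runs the topic's handler; returns false if none is registered or the value is null. */
    boolean dispatch(String topic, String value) {
        Consumer<String> handler = handlers.get(topic);
        if (handler == null || value == null) {
            return false;
        }
        handler.accept(value);
        return true;
    }

    public static void main(String[] args) {
        List<String> savedLogs = new ArrayList<>();
        TopicDispatcher dispatcher = new TopicDispatcher();
        // "alarm" is the topic from kafka.properties; the handler just collects values here.
        dispatcher.register("alarm", savedLogs::add);

        System.out.println(dispatcher.dispatch("alarm", "Im from producer !"));
        System.out.println(dispatcher.dispatch("unknown-topic", "ignored"));
        System.out.println(savedLogs);
    }
}
```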

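Consumer POM dependencies (the same as the producer's, including kafka-clients and spring-kafka):

    <dependency>
        <groupId>com.101tec</groupId>
        <artifactId>zkclient</artifactId>
        <version>0.3</version>
    </dependency>

    <dependency>
        <groupId>org.apache.zookeeper</groupId>
        <artifactId>zookeeper</artifactId>
        <version>3.4.5</version>
    </dependency>

    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>dubbo</artifactId>
        <version>2.5.3</version>
        <scope>compile</scope>
        <exclusions>
            <exclusion>
                <artifactId>spring</artifactId>
                <groupId>org.springframework</groupId>
            </exclusion>
        </exclusions>
    </dependency>

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>1.0.1</version>
    </dependency>

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
        <version>1.3.5.RELEASE</version>
        <exclusions>
            <exclusion>
                <groupId>org.apache.kafka</groupId>
                <artifactId>kafka-clients</artifactId>
            </exclusion>
        </exclusions>
    </dependency>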

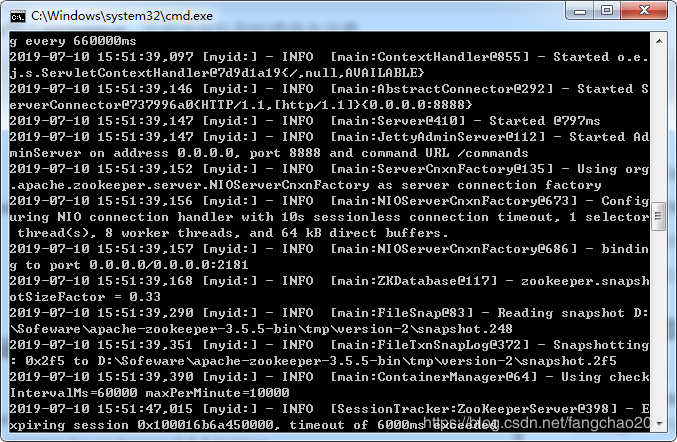

Everything is now configured. To test:

- Start ZooKeeper

- Start Kafka

- Start the producer project

- Start the consumer project

- Call the test controller endpoint in the producer: /sendTest
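The last step can be done from a browser, but a few lines of Java work too. This is a minimal sketch using only the JDK; the base URL `http://localhost:8080` is an assumption, since the article does not say which host, port, or context path the producer project is deployed under, so pass the real one as the first argument.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;

final class SendTestClient {

    /** Joins a base URL and the /sendTest path from the controller, tolerating a trailing slash. */
    static URI endpoint(String baseUrl) {
        String base = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return URI.create(base + "/sendTest");
    }

    /** Issues a GET and returns the HTTP status code, or -1 if the request could not be made. */
    static int call(URI uri) {
        try {
            HttpURLConnection conn = (HttpURLConnection) uri.toURL().openConnection();
            conn.setRequestMethod("GET");
            conn.setConnectTimeout(2000);
            conn.setReadTimeout(5000);
            try {
                return conn.getResponseCode();
            } finally {
                conn.disconnect();
            }
        } catch (IOException e) {
            return -1;
        }
    }

    public static void main(String[] args) {
        // Hypothetical default; replace with the producer project's actual base URL.
        String base = args.length > 0 ? args[0] : "http://localhost:8080";
        System.out.println("HTTP status: " + call(endpoint(base)));
    }
}
```

A 200 status only confirms that the controller ran; whether the message arrived is visible in the consumer's log.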

Result:

producer:

consumer:

The message was produced and consumed successfully.