spring-boot ElasticSearch-5.6.12 windows 安装,mysql,csv,pdf,word导入到ES

系统查询速度慢,就想用elasticsearch增加查询速度。并且能把pdf,csv 导入到elasticsearch.

系统使用了springboot版本号是.2.0.6.RELEASE。那么首先要确认elasticsearch的版本号

........

org.springframework.boot

spring-boot-starter-parent

2.0.6.RELEASE

org.springframework.data

spring-data-elasticsearch

org.springframework.boot

spring-boot-starter-web

......

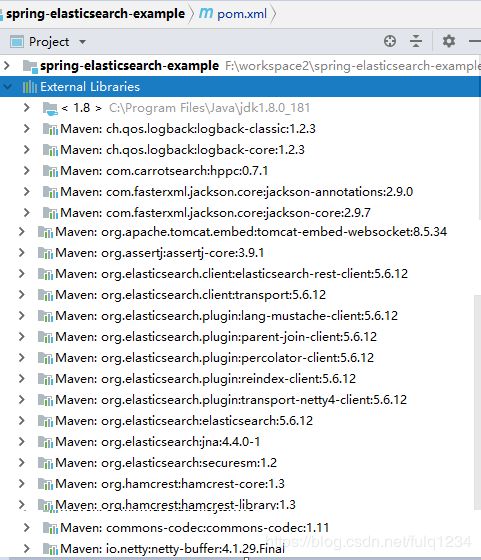

在intellij idea 的maven包里面可以看到版本号

SpringBoot 2.0.6版本对应的是es5.6.12的版本

那么就是

1.spring boot 2.0.6

2.jdk8

3.elastic search 版本号:5.6.12

4.elasticsearch-head(可视化插件,为了不出错,要和elastic search版本号一致),版本号:5.6.12

5.logstash-5.6.12。能导入mysql(或其他数据库),csv到elastic search

6.fscrawler-es5-2.6。可以导入pdf,word文件。

参考网站

1.参考代码(稍微修改):https://github.com/fonxian/spring-elasticsearch-example

2.elastic search,logstash都是在这里下载:https://www.elastic.co/cn/downloads/past-releases

3.elastic search下载:https://www.elastic.co/cn/downloads/past-releases/elasticsearch-5-6-12

4.logstash下载:https://www.elastic.co/cn/downloads/past-releases/logstash-5-6-12

5.安装elastic search header(先要安装nodejs)的参考https://www.cnblogs.com/asker009/p/10045125.html

6.fscrawler下载安装说明的网站:https://fscrawler.readthedocs.io/en/fscrawler-2.6/

7.安装包放在百度网盘一份

链接:https://pan.baidu.com/s/1CzHfg0lvPI81kxgWpkLHxg

提取码:aake

安装说明省略。

启动这些服务

1.启动elastic search

直接双击:E:\soft\elasticsearch-5.6.12\bin\elasticsearch.bat

访问http://localhost:9200/

网页内容:

name "3cgkZDo"

cluster_name "elasticsearch"

cluster_uuid "7Np7tHwwSpy_T-khLB7utA"

version

number "5.6.12"

build_hash "cfe3d9f"

build_date "2018-09-10T20:12:43.732Z"

build_snapshot false

lucene_version "6.6.1"

tagline "You Know, for Search"这个时候,intellij idea 的application.properties的才能生效。否则报错

spring.data.elasticsearch.cluster-nodes = localhost:93002。启动elastic search header

doc命令:

Microsoft Windows [版本 10.0.17763.615]

(c) 2018 Microsoft Corporation。保留所有权利。

C:\Users\lunmei>cd E:\soft\elasticsearch-head-master

C:\Users\lunmei>e:

E:\soft\elasticsearch-head-master>grunt server

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://localhost:9100

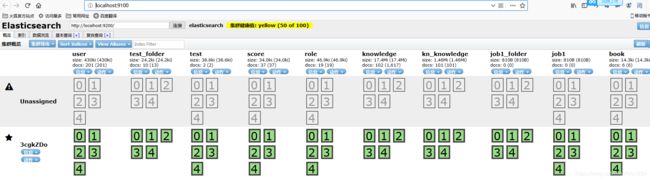

http://localhost:9100/

3.用logstash导入csv,mysql

新增文件E:\soft\logstash-5.6.12\bin\logstash-csv.conf。用来把文件C:\Users\lunmei\Desktop\sys_role.csv导入到elasticsearch

input {

file {

path => ["C:\Users\lunmei\Desktop\sys_role.csv"]

start_position => "beginning"

}

}

filter {

csv {

separator => ","

columns => ["id","name","value","tips","status","create_time","update_time"]

}

mutate {

convert => {

"id" => "string"

}

}

}

output {

elasticsearch {

hosts => ["127.0.0.1:9200"]

index => "role"

document_id => "%{id}"

document_type => "role"

}

stdout{

codec => rubydebug

}

}

新增文件:E:\soft\logstash-5.6.12\bin\logstash-mysql.conf。用来把sql数据导入到elastic search

input {

stdin {

}

jdbc {

jdbc_connection_string => "jdbc:mysql://192.168.0.100:3306/zillion-wfm?characterEncoding=UTF-8&useSSL=false&autoReconnect=true"

jdbc_user => "root"

jdbc_password => "root"

jdbc_driver_library => "mysql-connector-java-5.1.47.jar"

jdbc_driver_class => "com.mysql.jdbc.Driver"

jdbc_paging_enabled => "true"

jdbc_page_size => "50000"

jdbc_default_timezone => "Asia/Shanghai"

record_last_run => true

use_column_value => true

tracking_column => "price"

last_run_metadata_path => "my_info_last"

#statement_filepath => "jdbc-sql.sql"

statement => "SELECT * FROM kn_knowledge"

schedule => "* * * * *"

type => "knowledge"

}

}

filter {

json {

source => "message"

remove_field => ["message"]

}

}

output {

elasticsearch {

hosts => "127.0.0.1:9200"

index => "knowledge"

document_id => "%{id}"

}

stdout {

codec => json_lines

}

}命令

Microsoft Windows [版本 10.0.17763.615]

(c) 2018 Microsoft Corporation。保留所有权利。

C:\Users\lunmei>e:

E:\>logstash -f logstash-csv.conf

'logstash' 不是内部或外部命令,也不是可运行的程序

或批处理文件。

E:\>cd E:\soft\logstash-5.6.12\bin

E:\soft\logstash-5.6.12\bin>logstash -f logstash-csv.conf

Sending Logstash's logs to E:/soft/logstash-5.6.12/logs which is now configured via log4j2.properties

[2019-07-19T18:02:22,722][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"E:/soft/logstash-5.6.12/modules/fb_apache....

E:\soft\logstash-5.6.12\bin>logstash -f logstash-mysql.conf

Sending Logstash's logs to E:/soft/logstash-5.6.12/logs which is now configured via log4j2.properties

..........

[2019-07-19T18:02:48,618][FATAL][logstash.runner ] SIGINT received. Terminating immediately..

终止批处理操作吗(Y/N)?

^C系统无法打开指定的设备或文件。

E:\soft\logstash-5.6.12\bin>文件或者mysql 导入成功

4.

详细看网页的说明

第一步要创建一个fscrawlerRunner.bat。创建完后双击。

启动fscrawler

E:\soft\fscrawler-es5-2.6\bin>fscrawler test

17:00:23,187 WARN [f.p.e.c.f.c.FsCrawlerCli] job [test] does not exist

17:00:23,189 INFO [f.p.e.c.f.c.FsCrawlerCli] Do you want to create it (Y/N)?

y

17:00:30,241 INFO [f.p.e.c.f.c.FsCrawlerCli] Settings have been created in [C:\Users\lunmei\.fscrawler\test\_settings.json]. Please review and edit before relaunch

这里注意test是个变量,代表job name。第一次启动这个job会创建一个相关的_setting.json用来配置文件和es相关的信息。而我们这个很明显在“C:\Users\lunmei\.fscrawler\test\_settings.json“。

那么在看网页资料的时候,所有涉及_settings.json,就找到地方了。这个是自动自动创建的。我一开始在这里浪费很多时间啊。

改好此文件后,再运行命令”fscrawler test“。把文件导入到elastic search中。

我有一个word文档,内容是“你好啊,你好啊”,但是想按照字段导入到elastic search。这个还得研究研究。加油。