Downloading, Installing and Testing the IK Analyzer

Works on both Windows 10 (x86) and Ubuntu~

1. Download

Why use the IK analyzer at all? There is a comparison at the very end~

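Go to https://github.com/medcl/elasticsearch-analysis-ik/releases and find the release whose version matches your ES version exactly.

You can either download the source code and compile it yourself, or download the prebuilt zip directly. I am using version 5.4.0 here.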

If you choose to build from source, compile it with Maven.

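In the project directory, first run `mvn compile`, which creates a `target` directory. Then run `mvn package`, which creates a `releases` directory under `target` containing a zip file. That zip is the compiled plugin and is identical to the prebuilt download~ (`mvn package` also runs the compile phase as part of the Maven lifecycle, so running it on its own works too.)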

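Alternatively, open the project in IntelliJ IDEA and run Maven's clean, compile and package goals from IDEA's Maven tool window. The result is the same, although building from the command line seems a little faster~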
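The plugin version must match your ES version exactly, so it can help to check the build output before copying it anywhere. Below is a minimal sketch. It assumes the zip under `target/releases` is named `elasticsearch-analysis-ik-<version>.zip`; that artifact name is an assumption. `find_ik_zip` is a hypothetical helper, not part of the plugin.

```python
from pathlib import Path


def find_ik_zip(releases_dir, es_version):
    """Return the IK zip built for `es_version`, or raise if it is missing.

    Assumes the artifact is named elasticsearch-analysis-ik-<version>.zip.
    """
    releases = Path(releases_dir)
    candidate = releases / f"elasticsearch-analysis-ik-{es_version}.zip"
    if candidate.is_file():
        return candidate
    # List whatever IK zips were actually built, to make a version mismatch obvious.
    built = sorted(p.name for p in releases.glob("elasticsearch-analysis-ik-*.zip"))
    raise FileNotFoundError(
        f"no IK zip for ES {es_version} in {releases}; built: {built or 'none'}"
    )
```

For example, `find_ik_zip("target/releases", "5.4.0")` returns the path of the 5.4.0 zip. If that zip does not exist, it raises an error that lists the zips that were actually built.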

2. Installation

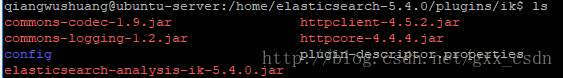

Inside the plugins directory of your ES installation, create a new folder named ik, then extract the contents of the zip from step 1 into it.

For example, on Ubuntu, put the extracted files into es/plugins/ik.

Then restart ES so that it loads the plugin.
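The copy step can also be scripted. Below is a minimal sketch using Python's `zipfile` module. `install_ik_plugin` and the `es_home` argument are hypothetical. The helper assumes the zip may wrap its files in a top-level `elasticsearch/` folder (some plugin releases are packaged that way) and strips that prefix so the files land directly in `plugins/ik`.

```python
import zipfile
from pathlib import Path


def install_ik_plugin(zip_path, es_home, wrapper="elasticsearch/"):
    """Extract the IK plugin zip into <es_home>/plugins/ik.

    Any entry under a top-level `wrapper` folder has that prefix stripped,
    so the plugin files end up directly inside plugins/ik.
    """
    target = Path(es_home) / "plugins" / "ik"
    target.mkdir(parents=True, exist_ok=True)
    root = target.resolve()
    with zipfile.ZipFile(zip_path) as zf:
        for info in zf.infolist():
            if info.is_dir():
                continue  # directories are created on demand below
            name = info.filename
            if name.startswith(wrapper):
                name = name[len(wrapper):]
            dest = (target / name).resolve()
            # Refuse entries that would escape plugins/ik (e.g. "../x").
            if root not in dest.parents:
                raise ValueError(f"unsafe path in zip: {info.filename}")
            dest.parent.mkdir(parents=True, exist_ok=True)
            dest.write_bytes(zf.read(info))
    return target
```

ES still has to be restarted after the files are in place.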

3. Testing
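Every step below is a console-style request: an HTTP method, a path, and an optional JSON body. If you prefer to send the requests from a script, here is a minimal sketch that uses only Python's standard library. The address `http://localhost:9200` and the helper names `build_request` and `send` are assumptions; they are not part of ES or the IK plugin.

```python
import json
import urllib.request

# Assumed address of the ES node; change it to match your setup.
ES_URL = "http://localhost:9200"


def build_request(method, path, body=None, base_url=ES_URL):
    """Turn a console-style request (e.g. `PUT index` plus a JSON body) into a urllib Request."""
    data = None
    headers = {}
    if body is not None:
        # Keep Chinese text as-is in the payload; ES reads the body as UTF-8 JSON.
        data = json.dumps(body, ensure_ascii=False).encode("utf-8")
        headers["Content-Type"] = "application/json"
    url = f"{base_url}/{path.lstrip('/')}"
    return urllib.request.Request(url, data=data, headers=headers, method=method)


def send(request):
    """Send the request and decode the JSON reply (requires a running ES node)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage, with ES running:
#   send(build_request("GET", "index/_search", {"query": {"match": {"content": "教练"}}}))
```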

1). Create an index whose analyzer uses the “ik_max_word” tokenizer

```
PUT index
{
  "settings": {
    "number_of_shards": 3,
    "number_of_replicas": 1,
    "analysis": {
      "analyzer": {
        "ik": {
          "tokenizer": "ik_max_word"
        }
      }
    }
  },
  "mappings": {
    "test1": {
      "properties": {
        "content": {
          "type": "text",
          "analyzer": "ik",
          "search_analyzer": "ik_max_word"
        }
      }
    }
  }
}
```

2). Write data into the index

```
POST index/test1/1
{
  "content": "里皮是一位牌足够大、支持率足够高的教练"
}

POST index/test1/2
{
  "content": "他不仅在意大利国家队取得过成功"
}

POST index/test1/3
{
  "content": "教练还带领广州恒大称霸中超并首次夺得亚冠联赛"
}
```

3). Run a search, for example for documents matching “教练” (“coach”)

```
GET index/_search
{
  "query": {
    "match": {
      "content": "教练"
    }
  },
  "highlight": {
    "pre_tags": [""],
    "post_tags": [""],
    "fields": {"content": {}}
  }
}
```

4). Search results

```json
{
  "took": 8,
  "timed_out": false,
  "_shards": {
    "total": 3,
    "successful": 3,
    "failed": 0
  },
  "hits": {
    "total": 2,
    "max_score": 0.18232156,
    "hits": [
      {
        "_index": "index",
        "_type": "test1",
        "_id": "1",
        "_score": 0.18232156,
        "_source": {
          "content": "里皮是一位牌足够大、支持率足够高的教练"
        },
        "highlight": {
          "content": [
            "里皮是一位牌足够大、支持率足够高的教练"
          ]
        }
      },
      {
        "_index": "index",
        "_type": "test1",
        "_id": "3",
        "_score": 0.16203022,
        "_source": {
          "content": "教练还带领广州恒大称霸中超并首次夺得亚冠联赛"
        },
        "highlight": {
          "content": [
            "教练还带领广州恒大称霸中超并首次夺得亚冠联赛"
          ]
        }
      }
    ]
  }
}
```

Documents 1 and 3, which contain “教练”, are returned and document 2 is not. Works as expected~
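If you run the search from a script instead of the console, a small helper can pull the useful parts out of a response like the one above. Below is a minimal sketch. It assumes the response has already been decoded into a Python dict (for example with `json.loads`), and `summarize_hits` is a hypothetical name. It accepts both the plain integer `hits.total` shown here and the `{"value": ..., "relation": ...}` object that ES 7 and later return.

```python
def summarize_hits(response):
    """Return (total, [(id, score, content), ...]) from a decoded _search response."""
    hits = response["hits"]
    total = hits["total"]
    if isinstance(total, dict):
        # ES 7+ reports the total as {"value": n, "relation": "eq" | "gte"}.
        total = total["value"]
    rows = [(hit["_id"], hit["_score"], hit["_source"]["content"]) for hit in hits["hits"]]
    return total, rows
```

Applied to the response above, it returns a total of 2 and the ids "1" and "3".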

4. Notes on the IK analyzer

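IK is an analyzer with good support for Chinese. Compared with the analyzers built into ES, it copes much better with the richness and subtlety of the Chinese language.

IK also provides two analyzers, ik_max_word and ik_smart. What is the difference between them?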

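ik_max_word splits text at the finest granularity, emitting every word it can find, including overlapping ones;

ik_smart splits text at the coarsest granularity.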
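The difference in granularity can be sketched with a toy dictionary segmenter. This is not IK's actual algorithm, which uses its own dictionaries, special handling for numerals and quantifiers, and its own ambiguity resolution. The toy only contrasts two ideas: "all dictionary words, overlaps included" versus a single non-overlapping segmentation found by backward maximum matching. `TOY_DICT`, `finest` and `coarsest` are hypothetical names, and the dictionary is hand-picked for the sentence 这是一个对分词器的测试.

```python
# Toy dictionary (an assumption for this example); IK ships a far larger one.
TOY_DICT = {"这是", "一个", "对分", "分词", "分词器", "测试"}


def finest(text, vocab=TOY_DICT):
    """Fine-grained: every dictionary word found anywhere in text, overlaps included."""
    max_len = max(map(len, vocab))
    return [
        text[i:j]
        for i in range(len(text))
        for j in range(i + 1, min(len(text), i + max_len) + 1)
        if text[i:j] in vocab
    ]


def coarsest(text, vocab=TOY_DICT):
    """Coarse-grained: backward maximum matching, no overlapping words.

    Characters at which no dictionary word ends are dropped (a simplification).
    """
    max_len = max(map(len, vocab))
    tokens, end = [], len(text)
    while end > 0:
        for size in range(min(max_len, end), 0, -1):
            if text[end - size:end] in vocab:
                tokens.append(text[end - size:end])
                break
        else:
            size = 1  # nothing in the dictionary ends here: skip one character
        end -= size
    return tokens[::-1]


sentence = "这是一个对分词器的测试"
print("/".join(finest(sentence)))    # 这是/一个/对分/分词/分词器/测试
print("/".join(coarsest(sentence)))  # 这是/一个/分词器/测试
```

With this hand-picked dictionary, the coarse result happens to match the ik_smart output in the test that follows. The fine result still differs from ik_max_word because it has no 一/个/词/器 pieces. This shows how much the real output depends on IK's own dictionary and rules.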

You can see the difference for yourself with the following test on the sentence “这是一个对分词器的测试” (“This is a test of the analyzer”):

1). ik_max_word

```
GET index/_analyze
{
  "analyzer": "ik_max_word",
  "text": "这是一个对分词器的测试"
}
```

Tokenization result:

```json
{
  "tokens": [
    { "token": "这是", "start_offset": 0, "end_offset": 2, "type": "CN_WORD", "position": 0 },
    { "token": "一个", "start_offset": 2, "end_offset": 4, "type": "CN_WORD", "position": 1 },
    { "token": "一", "start_offset": 2, "end_offset": 3, "type": "TYPE_CNUM", "position": 2 },
    { "token": "个", "start_offset": 3, "end_offset": 4, "type": "COUNT", "position": 3 },
    { "token": "对分", "start_offset": 4, "end_offset": 6, "type": "CN_WORD", "position": 4 },
    { "token": "分词器", "start_offset": 5, "end_offset": 8, "type": "CN_WORD", "position": 5 },
    { "token": "分词", "start_offset": 5, "end_offset": 7, "type": "CN_WORD", "position": 6 },
    { "token": "词", "start_offset": 6, "end_offset": 7, "type": "CN_WORD", "position": 7 },
    { "token": "器", "start_offset": 7, "end_offset": 8, "type": "CN_CHAR", "position": 8 },
    { "token": "测试", "start_offset": 9, "end_offset": 11, "type": "CN_WORD", "position": 9 }
  ]
}
```

2). ik_smart

```
GET index/_analyze
{
  "analyzer": "ik_smart",
  "text": "这是一个对分词器的测试"
}
```

Tokenization result:

```json
{
  "tokens": [
    { "token": "这是", "start_offset": 0, "end_offset": 2, "type": "CN_WORD", "position": 0 },
    { "token": "一个", "start_offset": 2, "end_offset": 4, "type": "CN_WORD", "position": 1 },
    { "token": "分词器", "start_offset": 5, "end_offset": 8, "type": "CN_WORD", "position": 2 },
    { "token": "测试", "start_offset": 9, "end_offset": 11, "type": "CN_WORD", "position": 3 }
  ]
}
```

3). ES's built-in analyzer (standard)

```
GET index/_analyze
{
  "analyzer": "standard",
  "text": "这是一个对分词器的测试"
}
```

Tokenization result:

```json
{
  "tokens": [
    { "token": "这", "start_offset": 0, "end_offset": 1, "type": "<IDEOGRAPHIC>", "position": 0 },
    { "token": "是", "start_offset": 1, "end_offset": 2, "type": "<IDEOGRAPHIC>", "position": 1 },
    { "token": "一", "start_offset": 2, "end_offset": 3, "type": "<IDEOGRAPHIC>", "position": 2 },
    { "token": "个", "start_offset": 3, "end_offset": 4, "type": "<IDEOGRAPHIC>", "position": 3 },
    { "token": "对", "start_offset": 4, "end_offset": 5, "type": "<IDEOGRAPHIC>", "position": 4 },
    { "token": "分", "start_offset": 5, "end_offset": 6, "type": "<IDEOGRAPHIC>", "position": 5 },
    { "token": "词", "start_offset": 6, "end_offset": 7, "type": "<IDEOGRAPHIC>", "position": 6 },
    { "token": "器", "start_offset": 7, "end_offset": 8, "type": "<IDEOGRAPHIC>", "position": 7 },
    { "token": "的", "start_offset": 8, "end_offset": 9, "type": "<IDEOGRAPHIC>", "position": 8 },
    { "token": "测", "start_offset": 9, "end_offset": 10, "type": "<IDEOGRAPHIC>", "position": 9 },
    { "token": "试", "start_offset": 10, "end_offset": 11, "type": "<IDEOGRAPHIC>", "position": 10 }
  ]
}
```

In summary, the same sentence “这是一个对分词器的测试” is split differently by each analyzer:

ik_max_word:这是/一个/一/个/对分/分词器/分词/词/器/测试

ik_smart:这是/一个/分词器/测试

standard:这/是/一/个/对/分/词/器/的/测/试

See the difference for yourself, hehe~
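The one-line summaries above are just the `token` fields joined with "/". Below is a minimal sketch that produces them from a decoded `_analyze` response. `summarize` is a hypothetical helper. `standard_like` is a toy that only mimics what the standard analyzer did to this all-Chinese sentence (one token per character); it is not a reimplementation of the standard tokenizer.

```python
def standard_like(text):
    """Toy stand-in for the standard analyzer on pure Han text: one token per character.

    Only meant for strings made entirely of Chinese characters; the real tokenizer
    also handles Latin letters, digits and punctuation.
    """
    return [
        {"token": ch, "start_offset": i, "end_offset": i + 1,
         "type": "<IDEOGRAPHIC>", "position": i}
        for i, ch in enumerate(text)
    ]


def summarize(name, analyze_response):
    """Render an _analyze response as `name:tok1/tok2/...`, ordered by position."""
    tokens = sorted(analyze_response["tokens"], key=lambda t: t["position"])
    return f"{name}:" + "/".join(t["token"] for t in tokens)


print(summarize("standard", {"tokens": standard_like("这是一个对分词器的测试")}))
# standard:这/是/一/个/对/分/词/器/的/测/试
```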