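Building a Hadoop 2.7 Cluster on CentOS 7 from Scratch

- Preface

- File Preparation

- Permission Changes

- Configuring the System Environment

- Configuring the Hadoop Cluster

- Configuring Passwordless Login

- Starting Hadoop

- Default Example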

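Preface

- Download the software and tools

  - pscp.exe: transfers files from the local machine to the target machines

  - hadoop-2.7.3.tar.gz: the Hadoop 2.7 package

  - JDK 1.8: the Java runtime environment

- Prepare four machines with CentOS Minimal installed and networking already configured. (Only the four IP addresses need to be noted for now; the hostnames are set later.)

  - Machine 1: hostname node, IP: 192.168.169.131

  - Machine 2: hostname node1, IP: 192.168.169.133

  - Machine 3: hostname node2, IP: 192.168.169.132

  - Machine 4: hostname node3, IP: 192.168.169.134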

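File Preparation

Add the user group and user

    groupadd hadoop
    useradd -d /home/hadoop -g hadoop hadoop

Copy the local files to the target machine

    pscp.exe -pw 12345678 hadoop-2.7.3.tar.gz root@192.168.169.131:/usr/local
    pscp.exe -pw 12345678 spark-2.0.0-bin-hadoop2.7.tgz root@192.168.169.131:/usr/local

The JDK archive extracted in the next step (jdk-8u101-linux-x64.tar.gz) must also be present in /usr/local; it can be copied the same way.

Extract and move the files

    # -C extracts into /usr/local regardless of the current directory
    tar -zxvf /usr/local/jdk-8u101-linux-x64.tar.gz -C /usr/local
    # rename
    mv /usr/local/jdk1.8.0_101 /usr/local/jdk1.8
    tar -zxvf /usr/local/hadoop-2.7.3.tar.gz -C /usr/local
    mv /usr/local/hadoop-2.7.3 /home/hadoop/hadoop2.7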
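The extract-then-rename step above is repeated for each archive, so it can be wrapped in a small function. The following is only a sketch: `extract_and_rename` is a hypothetical helper name, not part of Hadoop or of the original guide, and it assumes the archive unpacks into a single top-level directory whose name is known in advance.

```shell
# Hypothetical helper (not from the original guide): extract a .tar.gz archive
# into a chosen parent directory, then rename the extracted top-level folder.
extract_and_rename() {
    local archive="$1"     # path to the .tar.gz file
    local parent="$2"      # directory to extract into
    local extracted="$3"   # top-level folder name inside the archive
    local target="$4"      # final path of the renamed folder

    # Refuse to overwrite an existing installation.
    if [ -e "$target" ]; then
        echo "target already exists: $target" >&2
        return 1
    fi
    # -C makes tar unpack into $parent regardless of the current directory.
    tar -zxf "$archive" -C "$parent" || return 1
    mv "$parent/$extracted" "$target"
}
```

With the paths used in this guide, a call might look like `extract_and_rename /usr/local/hadoop-2.7.3.tar.gz /usr/local hadoop-2.7.3 /home/hadoop/hadoop2.7`.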

Permission Changes

Change the folder owner

    chown -R hadoop:hadoop /home/hadoop/hadoop2.7

Grant the group read, write and execute permissions

    chmod -R g=rwx /home/hadoop/hadoop2.7
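After changing the owner and group permissions it can be useful to confirm the result. The sketch below parses `ls -ld` output; the function names `show_owner_and_mode` and `group_has_rwx` are hypothetical and only illustrate one way to check.

```shell
# Hypothetical check: print "owner:group mode" for a path, e.g.
# "hadoop:hadoop drwxrwx---".
show_owner_and_mode() {
    # ls -ld columns: mode, link count, owner, group, ...
    ls -ld "$1" | awk '{print $3 ":" $4, substr($1, 1, 10)}'
}

# Succeeds only when the group permission bits of the path are exactly rwx.
group_has_rwx() {
    local bits
    # Characters 5-7 of the mode string are the group permissions.
    bits=$(ls -ld "$1" | cut -c5-7)
    [ "$bits" = "rwx" ]
}
```

For example, `show_owner_and_mode /home/hadoop/hadoop2.7` prints the owner, group and mode of the top-level folder, and `group_has_rwx /home/hadoop/hadoop2.7 || echo "group bits not set"` reports a problem.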

Configuring the System Environment

Configure the system variables

    echo 'export JAVA_HOME=/usr/local/jdk1.8' >> /etc/profile
    echo 'export JRE_HOME=$JAVA_HOME/jre' >> /etc/profile
    echo 'export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar' >> /etc/profile
    echo 'export HADOOP_HOME=/home/hadoop/hadoop2.7' >> /etc/profile
    echo 'export PATH=$HADOOP_HOME/bin:$PATH' >> /etc/profile
    source /etc/profile

Configure the hostname and host entries

    hostname node    # name of the current machine
    echo NETWORKING=yes >> /etc/sysconfig/network
    echo HOSTNAME=node >> /etc/sysconfig/network    # name of the current machine, so the hostname survives a reboot
    echo '192.168.169.131 node' >> /etc/hosts
    echo '192.168.169.133 node1' >> /etc/hosts
    echo '192.168.169.132 node2' >> /etc/hosts
    echo '192.168.169.134 node3' >> /etc/hosts

On CentOS 7 the persistent hostname is normally stored in /etc/hostname; if the name is lost after a reboot, `hostnamectl set-hostname node` sets it there.

Disable the firewall

    systemctl stop firewalld.service
    systemctl disable firewalld.service
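The `echo ... >> file` commands used for /etc/profile and /etc/hosts in this section append a new copy of each line every time they are run. A minimal sketch of an idempotent alternative follows; `append_once` is a hypothetical helper name, not an existing tool.

```shell
# Hypothetical helper: append a line to a file only if that exact line is not
# already present, so re-running the setup does not create duplicates.
append_once() {
    local line="$1"
    local file="$2"
    # -x: match the whole line, -F: fixed string (no regex), -q: no output.
    # If the file does not exist yet, grep fails and the line is appended.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

It would be called as, for example, `append_once '192.168.169.131 node' /etc/hosts` or `append_once 'export JAVA_HOME=/usr/local/jdk1.8' /etc/profile`.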

Configuring the Hadoop Cluster

Modify the configuration files

    sed -i 's/\${JAVA_HOME}/\/usr\/local\/jdk1.8\//' $HADOOP_HOME/etc/hadoop/hadoop-env.sh
    sed -i 's/# export JAVA_HOME=\/home\/y\/libexec\/jdk1.6.0\//export JAVA_HOME=\/usr\/local\/jdk1.8\//' $HADOOP_HOME/etc/hadoop/yarn-env.sh
    sed -i 's/# export JAVA_HOME=\/home\/y\/libexec\/jdk1.6.0\//export JAVA_HOME=\/usr\/local\/jdk1.8\//' $HADOOP_HOME/etc/hadoop/mapred-env.sh

Configure the slave node hostnames

    echo node1 > $HADOOP_HOME/etc/hadoop/slaves
    echo node2 >> $HADOOP_HOME/etc/hadoop/slaves
    echo node3 >> $HADOOP_HOME/etc/hadoop/slaves

Copy the following files into place, overwriting the existing ones

- /home/hadoop/hadoop2.7/etc/hadoop/core-site.xml

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://node:9000</value>
        <description>namenode settings</description>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/home/hadoop/tmp/hadoop-${user.name}</value>
        <description>temp folder</description>
      </property>
      <property>
        <name>hadoop.proxyuser.hadoop.hosts</name>
        <value>*</value>
      </property>
      <property>
        <name>hadoop.proxyuser.hadoop.groups</name>
        <value>*</value>
      </property>
    </configuration>

- /home/hadoop/hadoop2.7/etc/hadoop/hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.namenode.http-address</name>
        <value>node:50070</value>
        <description>fetch NameNode images and edits. Note the hostname.</description>
      </property>
      <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>node1:50090</value>
        <description>fetch SecondaryNameNode fsimage</description>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
        <description>replica count</description>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///home/hadoop/hadoop2.7/hdfs/name</value>
        <description>namenode</description>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///home/hadoop/hadoop2.7/hdfs/data</value>
        <description>DataNode</description>
      </property>
      <property>
        <name>dfs.namenode.checkpoint.dir</name>
        <value>file:///home/hadoop/hadoop2.7/hdfs/namesecondary</value>
        <description>check point</description>
      </property>
      <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>dfs.stream-buffer-size</name>
        <value>131072</value>
        <description>buffer</description>
      </property>
      <property>
        <name>dfs.namenode.checkpoint.period</name>
        <value>3600</value>
        <description>duration</description>
      </property>
    </configuration>

- /home/hadoop/hadoop2.7/etc/hadoop/mapred-site.xml

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <!-- "trucy" is not one of the hosts defined in this guide; this MRv1-era
           setting is not expected to be used when the framework is yarn. -->
      <property>
        <name>mapreduce.jobtracker.address</name>
        <value>hdfs://trucy:9001</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>node:10020</value>
        <description>MapReduce JobHistory Server host:port, default port is 10020.</description>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>node:19888</value>
        <description>MapReduce JobHistory Server Web UI host:port, default port is 19888.</description>
      </property>
    </configuration>

- /home/hadoop/hadoop2.7/etc/hadoop/yarn-site.xml

    <configuration>
      <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>node</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
      <property>
        <name>yarn.resourcemanager.address</name>
        <value>node:8032</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>node:8030</value>
      </property>
      <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>node:8031</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>node:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>node:8088</value>
      </property>
    </configuration>
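All four site files share the same `<configuration>` / `<property>` layout, so for scripted installs they can be generated rather than copied by hand. The following is a rough sketch under stated assumptions: `hadoop_property` and `hadoop_site_xml` are hypothetical helper names, `<description>` elements are omitted, and XML special characters in names or values are not escaped.

```shell
# Hypothetical helpers for generating a Hadoop *-site.xml file from the shell.
# They do not escape XML special characters (&, <, >) in names or values.

# Print one <property> element for the given name and value.
hadoop_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Usage: hadoop_site_xml name1 value1 [name2 value2 ...]
# Prints a complete <configuration> document to standard output.
hadoop_site_xml() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    while [ "$#" -ge 2 ]; do
        hadoop_property "$1" "$2"
        shift 2
    done
    printf '</configuration>\n'
}
```

As an illustration, `hadoop_site_xml fs.defaultFS hdfs://node:9000 hadoop.tmp.dir '/home/hadoop/tmp/hadoop-${user.name}' > core-site.xml` would write the first two properties of the core-site.xml shown above; the single quotes keep the shell from expanding `${user.name}`.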

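Configuring Passwordless Login

Create the directory on all hosts and set its permissions

    mkdir /home/hadoop/.ssh
    chmod 700 /home/hadoop/.ssh

Generate the RSA key pair on host node

    ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

Create the authorized_keys file and copy it to the other hosts

    cp /home/hadoop/.ssh/id_rsa.pub /home/hadoop/.ssh/authorized_keys
    scp /home/hadoop/.ssh/authorized_keys node1:/home/hadoop/.ssh
    scp /home/hadoop/.ssh/authorized_keys node2:/home/hadoop/.ssh
    scp /home/hadoop/.ssh/authorized_keys node3:/home/hadoop/.ssh

Change the owner and permissions on all hosts

    chmod 600 /home/hadoop/.ssh/authorized_keys
    chown -R hadoop:hadoop /home/hadoop/.ssh

Edit the ssh configuration file

In /etc/ssh/sshd_config, make sure the following line is active (remove the leading # if it is commented out):

    AuthorizedKeysFile .ssh/authorized_keys

Restart ssh

    service sshd restart

Note: the first connection still asks for a password.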
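The directory, key and permission steps above can be collected into one repeatable function. This is a sketch only: `install_public_key` is a hypothetical helper, it takes the home directory as an argument so it can be tried on a scratch directory first, and it assumes the 700/600 modes from this section are what sshd requires on the target system. It does not change ownership, so the `chown` step above still applies when it is run as root.

```shell
# Hypothetical helper: add the keys from a public key file to
# <home>/.ssh/authorized_keys, creating the directory and file with the
# permissions used in this guide (700 for .ssh, 600 for authorized_keys).
install_public_key() {
    local home_dir="$1"
    local pub_key_file="$2"
    local ssh_dir="$home_dir/.ssh"
    local auth="$ssh_dir/authorized_keys"
    local key

    mkdir -p "$ssh_dir" || return 1
    chmod 700 "$ssh_dir" || return 1
    touch "$auth" || return 1
    chmod 600 "$auth" || return 1

    # Append each key only if it is not already listed. The extra test keeps
    # the last line even when the file has no trailing newline.
    while IFS= read -r key || [ -n "$key" ]; do
        [ -n "$key" ] || continue
        grep -qxF -- "$key" "$auth" || printf '%s\n' "$key" >> "$auth"
    done < "$pub_key_file"
}
```

On each host this might be run as `install_public_key /home/hadoop /path/to/id_rsa.pub`, where the path to the copied public key is whatever location was used for the transfer.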

启动Hadoop

进入Node 主机,并切换到hadoop账号

su hadoop格式化 namenode

/home/hadoop/hadoop2.7/bin/hdfs namenode -format启动 hdfs

/home/hadoop/hadoop2.7/sbin/start-dfs.sh启动 yarn

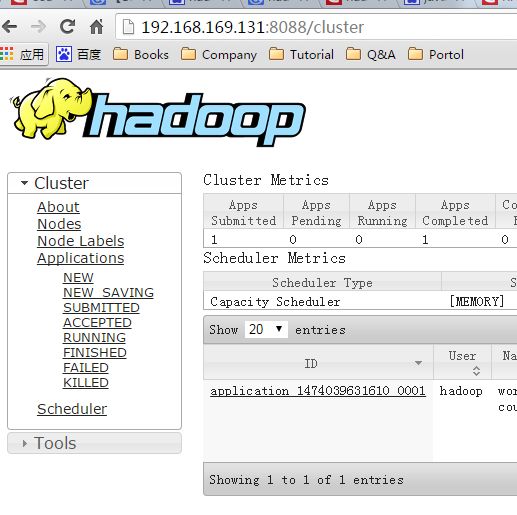

/home/hadoop/hadoop2.7/sbin/start-yarn.sh- 验证 yarn 状态
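One common way to verify that the daemons came up is the JDK's `jps` tool, which lists running Java processes as `<pid> <ClassName>` lines. The sketch below checks such a listing for expected daemon names; `missing_daemons` is a hypothetical helper, and the daemon names per host are an assumption derived from the configuration in this guide.

```shell
# Hypothetical check: read `jps` output on standard input and report every
# named daemon that is not listed. Returns non-zero if any is missing.
missing_daemons() {
    local output
    local name
    local rc=0
    output=$(cat)
    for name in "$@"; do
        # jps prints one "<pid> <ClassName>" pair per line; compare the
        # second field exactly so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$output" |
                awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            echo "missing: $name"
            rc=1
        fi
    done
    return "$rc"
}
```

On host node this could be used as `/usr/local/jdk1.8/bin/jps | missing_daemons NameNode ResourceManager`. With the slaves file and hdfs-site.xml above, node1 would be expected to show SecondaryNameNode, DataNode and NodeManager, and node2 and node3 DataNode and NodeManager.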

Default Example

Create the folders

    /home/hadoop/hadoop2.7/bin/hadoop fs -mkdir -p /data/wordcount
    /home/hadoop/hadoop2.7/bin/hadoop fs -mkdir -p /output/

Upload the files

    hadoop fs -put /home/hadoop/hadoop2.7/etc/hadoop/*.xml /data/wordcount/
    hadoop fs -ls /data/wordcount

Run Map-Reduce

    hadoop jar /home/hadoop/hadoop2.7/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount /data/wordcount /output/wordcount

Check the status

    http://192.168.169.131:8088/cluster

Browse the results

    hadoop fs -cat /output/wordcount/part-r-00000 | more
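To sanity-check the job output on a small input, the same count can be approximated locally. The sketch below assumes the example job splits its input on whitespace and writes `word<TAB>count` lines; `local_wordcount` is a hypothetical helper, and its sort order may not match Hadoop's exactly.

```shell
# Local cross-check for small inputs (an assumption-based sketch, not part of
# Hadoop): count whitespace-separated tokens and print "word<TAB>count".
# Reads the files given as arguments, or standard input if there are none.
local_wordcount() {
    awk '{ for (i = 1; i <= NF; i++) count[$i]++ }
         END { for (w in count) printf "%s\t%d\n", w, count[w] }' "$@" |
        LC_ALL=C sort
}
```

For instance, `local_wordcount /home/hadoop/hadoop2.7/etc/hadoop/*.xml | head` gives a listing that can be compared against the first lines of `part-r-00000`.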

References:

http://www.cnblogs.com/liuling/archive/2013/06/16/2013-6-16-01.html