OpenCV4Android Development Notes (2): Face Detection with the OpenCV 3.4.1 Library

OpenCV4Android Development Notes (2): Face Detection with the OpenCV 3.3.0 Library

Please credit the source when reposting: http://write.blog.csdn.net/postedit/78992490

OpenCV4Android series:

1. OpenCV4Android Development Notes (1): Porting the OpenCV 3.3.0 Library to Android Studio

2. OpenCV4Android Development Notes (2): Face Detection with the OpenCV 3.3.0 Library

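The previous article, OpenCV4Android Development Notes (1): Porting the OpenCV 3.3.0 Library to Android Studio, gave an overview of the OpenCV library and described the steps for porting it into an Android Studio project. Building on that, this article introduces the face detection module in the OpenCV framework and then implements face detection.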

I. Porting the face detection module

1. Copy the opencv-3.3.0-android-sdk\OpenCV-android-sdk\samples\face-detection\jni directory into the main directory of the project's app module.

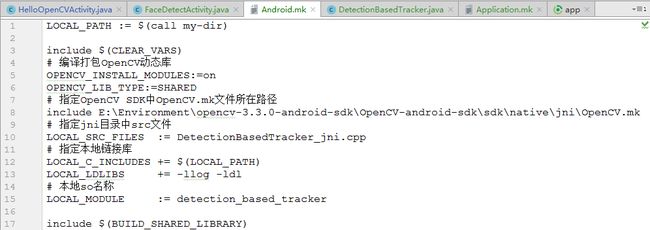

2. Edit Android.mk in the jni directory

(1) Change

#OPENCV_INSTALL_MODULES:=off

#OPENCV_LIB_TYPE:=SHARED

to

OPENCV_INSTALL_MODULES:=on

OPENCV_LIB_TYPE:=SHARED

(2) Change

ifdef OPENCV_ANDROID_SDK

ifneq ("","$(wildcard $(OPENCV_ANDROID_SDK)/OpenCV.mk)")

include ${OPENCV_ANDROID_SDK}/OpenCV.mk

else

include ${OPENCV_ANDROID_SDK}/sdk/native/jni/OpenCV.mk

endif

else

include ../../sdk/native/jni/OpenCV.mk

endif

to

include E:\Environment\opencv-3.3.0-android-sdk\OpenCV-android-sdk\sdk\native\jni\OpenCV.mk

Here, include takes the absolute path of the OpenCV.mk file in the OpenCV SDK.

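3. Edit Application.mk in the jni directory. Because only the armeabi, armeabi-v7a, and arm64-v8a OpenCV libs were copied when importing the library, specify those same three ABIs as build targets, and set APP_PLATFORM to android-16 (adjust to your own needs):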

APP_STL := gnustl_static

APP_CPPFLAGS := -frtti -fexceptions

# Target ABIs

APP_ABI := armeabi armeabi-v7a arm64-v8a

# Target Android platform

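APP_PLATFORM := android-16

4. Edit DetectionBasedTracker_jni.h and DetectionBasedTracker_jni.cpp, replacing every occurrence of the prefix "Java_org_opencv_samples_facedetect_" with "Java_com_jiangdg_opencv4android_natives_". Here com.jiangdg.opencv4android.natives is the package of the Java class DetectionBasedTracker.java, which declares the native methods for face detection. Without this change, calls into the self-built .so library fail with an error that the native function cannot be found. Taking DetectionBasedTracker_jni.h as an example: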

/* DO NOT EDIT THIS FILE - it is machine generated */

#include <jni.h>

/* Header for class org_opencv_samples_fd_DetectionBasedTracker */

#ifndef _Included_org_opencv_samples_fd_DetectionBasedTracker

#define _Included_org_opencv_samples_fd_DetectionBasedTracker

#ifdef __cplusplus

extern "C" {

#endif

/*

* Class: org_opencv_samples_fd_DetectionBasedTracker

* Method: nativeCreateObject

* Signature: (Ljava/lang/String;I)J

*/

JNIEXPORT jlong JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeCreateObject

(JNIEnv *, jclass, jstring, jint);

/*

* Class: org_opencv_samples_fd_DetectionBasedTracker

* Method: nativeDestroyObject

* Signature: (J)V

*/

JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeDestroyObject

(JNIEnv *, jclass, jlong);

/*

* Class: org_opencv_samples_fd_DetectionBasedTracker

* Method: nativeStart

* Signature: (J)V

*/

JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeStart

(JNIEnv *, jclass, jlong);

/*

* Class: org_opencv_samples_fd_DetectionBasedTracker

* Method: nativeStop

* Signature: (J)V

*/

JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeStop

(JNIEnv *, jclass, jlong);

/*

* Class: org_opencv_samples_fd_DetectionBasedTracker

* Method: nativeSetFaceSize

* Signature: (JI)V

*/

JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeSetFaceSize

(JNIEnv *, jclass, jlong, jint);

/*

* Class: org_opencv_samples_fd_DetectionBasedTracker

* Method: nativeDetect

* Signature: (JJJ)V

*/

JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeDetect

(JNIEnv *, jclass, jlong, jlong, jlong);

#ifdef __cplusplus

}

#endif

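#endif

5. Open the Terminal window in Android Studio, cd to the project's jni directory, and run ndk-build. This generates libs and obj directories under app/src/main; the libs directory holds the target shared library libdetection_based_tracker.so.

Note: if ndk-build fails with a "command not found" error, the NDK environment variable is not configured correctly.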
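A side note on the prefix replacement in step 4: the symbol name that JNI looks up is derived mechanically from the Java package, class, and method names. The sketch below, in plain Java, reproduces that derivation following the JNI name-mangling rules and also builds the method descriptor for a (String, int) -> long native method. The class JniNames and its methods are hypothetical helpers written for this illustration; they are not part of OpenCV or the NDK.

```java
import java.lang.invoke.MethodType;

/** Illustrative helper (hypothetical): derives the names JNI expects for a native method. */
final class JniNames {

    /** Escapes a Java name following the JNI mangling rules. */
    static String mangle(String name) {
        StringBuilder sb = new StringBuilder();
        for (char c : name.toCharArray()) {
            if (c == '.' || c == '/') {
                sb.append('_');                               // package separator
            } else if (c == '_') {
                sb.append("_1");                              // literal underscore
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z')
                    || (c >= '0' && c <= '9')) {
                sb.append(c);                                 // plain ASCII letter or digit
            } else {
                sb.append(String.format("_0%04x", (int) c));  // any other character
            }
        }
        return sb.toString();
    }

    /** Builds the C symbol name for a native method of the given class. */
    static String symbolFor(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }

    /** Builds the JNI method descriptor, e.g. (Ljava/lang/String;I)J. */
    static String descriptor(Class<?> returnType, Class<?>... params) {
        return MethodType.methodType(returnType, params).toMethodDescriptorString();
    }

    public static void main(String[] args) {
        System.out.println(symbolFor(
                "com.jiangdg.opencv4android.natives.DetectionBasedTracker",
                "nativeCreateObject"));
        System.out.println(descriptor(long.class, String.class, int.class));
    }
}
```

Running main prints Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeCreateObject and (Ljava/lang/String;I)J, which match the function name and the parameter list (jstring, jint) declared in the header above.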

6. Edit the sourceSets block in the app module's build.gradle to disable the automatic ndk-build invocation and to set the directory that holds the target .so files:

android {

compileSdkVersion 25

defaultConfig {

applicationId "com.jiangdg.opencv4android"

minSdkVersion 15

targetSdkVersion 25

versionCode 1

versionName "1.0"

}

... // code omitted

sourceSets {

main {

jni.srcDirs = [] // disable the automatic ndk-build invocation

jniLibs.srcDir 'src/main/libs' // directory that holds the target .so files

}

}

... // code omitted

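}

Here, jni.srcDirs = [] disables Gradle's default ndk-build, which stops Android Studio from generating its own Android.mk to build the jni project. jniLibs.srcDir 'src/main/libs' sets the directory of the target .so files so that the self-built libraries are packaged into the APK.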

II. Source code walkthrough

Implementing face detection with the OpenCV 3.3.0 library involves four main steps:

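(1) Initialize and load the OpenCV library engine;

(2) Start Camera rendering through the OpenCV library;

(3) Load the face detection model;

(4) Call the native face detection library to detect faces.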

1. Initialize and load the OpenCV library engine

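There are two ways to load the OpenCV library. The first is dynamic loading through OpenCV Manager, the officially recommended approach; it requires installing OpenCV Manager separately and initializes via OpenCVLoader.initAsync(). The second is static loading, which this article uses: copy the .so files for the relevant architectures into the project's libs directory and initialize via OpenCVLoader.initDebug(), which is similar to calling System.loadLibrary("opencv_java3").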

if (!OpenCVLoader.initDebug()) {

// Static loading failed; initialize through OpenCV Manager instead

// Arguments: OpenCV version; context; loader result callback

OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_3_3_0,

this, mLoaderCallback);

} else {

// Static loading succeeded; call onManagerConnected directly

mLoaderCallback.onManagerConnected(LoaderCallbackInterface.SUCCESS);

}

private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {

@Override

public void onManagerConnected(int status) {

switch (status) {

case LoaderCallbackInterface.SUCCESS:

// OpenCV loaded successfully; now load the native .so library

System.loadLibrary("detection_based_tracker");

// Load the face detection model

…..

// Initialize the face detection engine

…..

// Start Camera rendering

mCameraView.enableView();

break;

default:

super.onManagerConnected(status);

break;

}

}

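};

2. Start Camera rendering through the OpenCV library

Two OpenCV classes are closely tied to the Camera: CameraBridgeViewBase and JavaCameraView. CameraBridgeViewBase is the base class; it extends SurfaceView and implements the SurfaceHolder.Callback interface, and it handles the interaction between the Camera and the OpenCV library. Its main job is to control the Camera, process video frames, call internal interfaces to adjust those frames, and then render the adjusted frames to the phone screen. For example, enableView() and disableView() connect to and disconnect from the Camera: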

public void enableView() {

synchronized(mSyncObject) {

mEnabled = true;

checkCurrentState();

}

}

public void disableView() {

synchronized(mSyncObject) {

mEnabled = false;

checkCurrentState();

}

}

private void checkCurrentState() {

Log.d(TAG, "call checkCurrentState");

int targetState;

if (mEnabled && mSurfaceExist && getVisibility() == VISIBLE) {

targetState = STARTED;

} else {

targetState = STOPPED;

}

if (targetState != mState) {

/* The state change detected. Need to exit the current state and enter target state */

processExitState(mState);

mState = targetState;

processEnterState(mState);

}

}

private void processEnterState(int state) {

Log.d(TAG, "call processEnterState: " + state);

switch(state) {

case STARTED:

// Calls the abstract connectCamera() method to start the Camera

onEnterStartedState();

// Notify the listener that the camera view has started

if (mListener != null) {

mListener.onCameraViewStarted(mFrameWidth, mFrameHeight);

}

break;

case STOPPED:

// Calls the abstract disconnectCamera() method to stop the Camera

onEnterStoppedState();

// Notify the listener that the camera view has stopped

if (mListener != null) {

mListener.onCameraViewStopped();

}

break;

};

}

@Override

protected boolean connectCamera(int width, int height) {

// Initialize the Camera and connect to it

if (!initializeCamera(width, height))

return false;

mCameraFrameReady = false;

// Start the Camera worker thread, CameraWorker

Log.d(TAG, "Starting processing thread");

mStopThread = false;

mThread = new Thread(new CameraWorker());

mThread.start();

return true;

}

@Override

protected void disconnectCamera() {

// Disconnect from the Camera and release related resources

try {

mStopThread = true;

Log.d(TAG, "Notify thread");

synchronized (this) {

this.notify();

}

// Wait for the worker thread to stop

if (mThread != null)

mThread.join();

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

mThread = null;

}

/* Now release camera */

releaseCamera();

mCameraFrameReady = false;

}

private class CameraWorker implements Runnable {

@Override

public void run() {

do {

... // code omitted

if (!mStopThread && hasFrame) {

if (!mFrameChain[1 - mChainIdx].empty())

deliverAndDrawFrame(mCameraFrame[1 - mChainIdx]);

}

} while (!mStopThread);

}

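}

3. Load the face detection model

For better face detection performance, the OpenCV SDK ships several frontal-face detectors (face models) under ..\opencv-3.3.0-android-sdk\OpenCV-android-sdk\sdk\etc\. An earlier comparison of OpenCV's bundled face detection models found that the LBP model has better real-time performance. This article therefore uses the lbpcascade_frontalface.xml model from the lbpcascades directory, which was trained on 3000 positive and 1500 negative samples. We copy it into the res/raw directory of the Android Studio project and then save it into the directory returned by getDir, that is, /data/data/com.jiangdg.opencv4android/app_cascade (getDir prefixes the directory name with app_).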

InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);

File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);

mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");

FileOutputStream os = new FileOutputStream(mCascadeFile);

byte[] buffer = new byte[4096];

int bytesRead;

while ((bytesRead = is.read(buffer)) != -1) {

os.write(buffer, 0, bytesRead);

}

is.close();

os.close();

4. Face detection

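The face-detection sample project in opencv-3.3.0-android-sdk offers two ways to detect faces: CascadeClassifier and DetectionBasedTracker. CascadeClassifier is OpenCV's cascade classifier, used here for face detection; DetectionBasedTracker performs face detection natively through JNI. I tried both and found DetectionBasedTracker somewhat more stable than CascadeClassifier, which produced false detections fairly regularly.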

public class DetectionBasedTracker {

private long mNativeObj = 0;

// Constructor: initializes the face detection engine

public DetectionBasedTracker(String cascadeName, int minFaceSize) {

mNativeObj = nativeCreateObject(cascadeName, minFaceSize);

}

// Start face detection

public void start() {

nativeStart(mNativeObj);

}

// Stop face detection

public void stop() {

nativeStop(mNativeObj);

}

// Set the minimum face size

public void setMinFaceSize(int size) {

nativeSetFaceSize(mNativeObj, size);

}

// Run detection

public void detect(Mat imageGray, MatOfRect faces) {

nativeDetect(mNativeObj, imageGray.getNativeObjAddr(), faces.getNativeObjAddr());

}

// Release resources

public void release() {

nativeDestroyObject(mNativeObj);

mNativeObj = 0;

}

// Native methods

private static native long nativeCreateObject(String cascadeName, int minFaceSize);

private static native void nativeDestroyObject(long thiz);

private static native void nativeStart(long thiz);

private static native void nativeStop(long thiz);

private static native void nativeSetFaceSize(long thiz, int size);

private static native void nativeDetect(long thiz, long inputImage, long faces);

}

@Override

public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {

... // code omitted

// Collect the detected faces

MatOfRect faces = new MatOfRect();

... // code omitted

if (mNativeDetector != null) {

mNativeDetector.detect(mGray, faces);

}

// Draw the detection rectangles

Rect[] facesArray = faces.toArray();

for (int i = 0; i < facesArray.length; i++) {

Imgproc.rectangle(mRgba, facesArray[i].tl(), facesArray[i].br(), FACE_RECT_COLOR, 3);

}

return mRgba;

}

Note: for reasons of length, the C/C++ implementation of face detection (and the principles behind it) will be discussed in a later article.
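To make the enableView()/disableView() logic from subsection 2 more concrete, here is a minimal plain-Java model of the state check. It is only a sketch: ViewStateModel, setSurfaceExists, and the events list are hypothetical names introduced for illustration, the visibility flag is fixed to true, and the model records transitions instead of touching a real Camera. It is not the OpenCV class itself.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java model (hypothetical) of the STARTED/STOPPED check described above. */
final class ViewStateModel {
    static final int STOPPED = 0;
    static final int STARTED = 1;

    private boolean enabled;
    private boolean surfaceExists;
    private final boolean visible = true;          // assumed always visible in this sketch
    private int state = STOPPED;

    /** Records each state transition so the behaviour can be inspected. */
    final List<String> events = new ArrayList<>();

    void enableView() {
        enabled = true;
        checkCurrentState();
    }

    void disableView() {
        enabled = false;
        checkCurrentState();
    }

    /** Stands in for the surface created/destroyed callbacks. */
    void setSurfaceExists(boolean exists) {
        surfaceExists = exists;
        checkCurrentState();
    }

    int state() {
        return state;
    }

    /** The view runs only when it is enabled, has a surface, and is visible. */
    private void checkCurrentState() {
        int target = (enabled && surfaceExists && visible) ? STARTED : STOPPED;
        if (target != state) {
            state = target;
            events.add(target == STARTED ? "enter STARTED" : "enter STOPPED");
        }
    }
}
```

In this model, calling enableView() before the surface exists leaves the state at STOPPED; the transition to STARTED happens only once all three conditions hold, which is why enabling the view in the loader callback does not by itself guarantee that frames are flowing yet.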

III. Demo

1. FaceDetectActivity.class

/**

* Face detection

*

* Created by jiangdongguo on 2018/1/4.

*/

public class FaceDetectActivity extends AppCompatActivity implements CameraBridgeViewBase.CvCameraViewListener2 {

private static final int JAVA_DETECTOR = 0;

private static final int NATIVE_DETECTOR = 1;

private static final String TAG = "FaceDetectActivity";

@BindView(R.id.cameraView_face)

CameraBridgeViewBase mCameraView;

private Mat mGray;

private Mat mRgba;

private int mDetectorType = NATIVE_DETECTOR;

private int mAbsoluteFaceSize = 0;

private float mRelativeFaceSize = 0.2f;

private DetectionBasedTracker mNativeDetector;

private CascadeClassifier mJavaDetector;

private static final Scalar FACE_RECT_COLOR = new Scalar(0, 255, 0, 255);

private File mCascadeFile;

private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {

@Override

public void onManagerConnected(int status) {

switch (status) {

case LoaderCallbackInterface.SUCCESS:

// OpenCV loaded successfully; now load the native .so library

System.loadLibrary("detection_based_tracker");

try {

// Load the face detection model file

InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);

File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);

mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");

FileOutputStream os = new FileOutputStream(mCascadeFile);

byte[] buffer = new byte[4096];

int bytesRead;

while ((bytesRead = is.read(buffer)) != -1) {

os.write(buffer, 0, bytesRead);

}

is.close();

os.close();

// Initialize the face detection engine from the model file

mJavaDetector = new CascadeClassifier(mCascadeFile.getAbsolutePath());

if (mJavaDetector.empty()) {

Log.e(TAG, "Failed to load cascade classifier");

mJavaDetector = null;

} else {

Log.d(TAG, "Loaded cascade classifier from " + mCascadeFile.getAbsolutePath());

}

mNativeDetector = new DetectionBasedTracker(mCascadeFile.getAbsolutePath(), 0);

cascadeDir.delete();

} catch (FileNotFoundException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

}

// Start Camera rendering

mCameraView.enableView();

break;

default:

super.onManagerConnected(status);

break;

}

}

};

@Override

protected void onCreate(@Nullable Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

getWindow().addFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN);

setContentView(R.layout.activity_facedetect);

// Bind views

ButterKnife.bind(this);

mCameraView.setVisibility(CameraBridgeViewBase.VISIBLE);

// Register the Camera rendering listener

mCameraView.setCvCameraViewListener(this);

}

@Override

protected void onResume() {

super.onResume();

// Initialize OpenCV statically

if (!OpenCVLoader.initDebug()) {

Log.d(TAG, "Could not load the OpenCV native library; initializing through OpenCV Manager");

OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_3_3_0, this, mLoaderCallback);

} else {

Log.d(TAG, "OpenCV native library loaded successfully");

mLoaderCallback.onManagerConnected(LoaderCallbackInterface.SUCCESS);

}

}

@Override

protected void onPause() {

super.onPause();

// Stop Camera rendering

if (mCameraView != null) {

mCameraView.disableView();

}

}

@Override

protected void onDestroy() {

super.onDestroy();

// Stop Camera rendering

if (mCameraView != null) {

mCameraView.disableView();

}

}

@Override

public void onCameraViewStarted(int width, int height) {

// Grayscale image

mGray = new Mat();

// RGBA color image

mRgba = new Mat();

}

@Override

public void onCameraViewStopped() {

mGray.release();

mRgba.release();

}

@Override

public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {

mRgba = inputFrame.rgba();

mGray = inputFrame.gray();

// Set the minimum face size

if (mAbsoluteFaceSize == 0) {

int height = mGray.rows();

if (Math.round(height * mRelativeFaceSize) > 0) {

mAbsoluteFaceSize = Math.round(height * mRelativeFaceSize);

}

if (mNativeDetector != null) {

mNativeDetector.setMinFaceSize(mAbsoluteFaceSize);

}

}

// Collect the detected faces

MatOfRect faces = new MatOfRect();

if (mDetectorType == JAVA_DETECTOR) {

if (mJavaDetector != null) {

mJavaDetector.detectMultiScale(mGray, faces, 1.1, 2, 2,

new Size(mAbsoluteFaceSize, mAbsoluteFaceSize), new Size());

}

} else if (mDetectorType == NATIVE_DETECTOR) {

if (mNativeDetector != null) {

mNativeDetector.detect(mGray, faces);

}

} else {

Log.e(TAG, "Detection method is not selected!");

}

// Draw the detection rectangles

Rect[] facesArray = faces.toArray();

for (int i = 0; i < facesArray.length; i++) {

Imgproc.rectangle(mRgba, facesArray[i].tl(), facesArray[i].br(), FACE_RECT_COLOR, 3);

}

Log.i(TAG, "Detected " + facesArray.length + " face(s)");

return mRgba;

}

}
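One detail of the model-copy code in onManagerConnected is that it closes its streams only on the success path, so an exception in the middle of the copy would leave them open. As a hedged alternative, the sketch below performs the same copy in plain Java with try-with-resources. ModelCopy, copyToFile, and roundTrip are hypothetical names for this illustration; in the Activity, the InputStream would come from getResources().openRawResource(...) and the target File from getDir(...), and whether try-with-resources is available depends on your Android build setup.

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;

/** Hypothetical helper: copies a model stream to a file and always closes both ends. */
final class ModelCopy {

    /** Copies all bytes from in to target and returns the number of bytes written. */
    static long copyToFile(InputStream in, File target) {
        long total = 0;
        // Both resources are closed automatically, even if read() or write() throws.
        try (InputStream is = in; OutputStream os = new FileOutputStream(target)) {
            byte[] buffer = new byte[4096];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
                total += bytesRead;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    /** Demo only: writes data to a temporary file via copyToFile and reads it back. */
    static byte[] roundTrip(byte[] data) {
        try {
            File tmp = File.createTempFile("cascade", ".xml");
            try {
                copyToFile(new ByteArrayInputStream(data), tmp);
                return Files.readAllBytes(tmp.toPath());
            } finally {
                tmp.delete();   // clean up the temporary file
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Wrapping the IOException in an unchecked exception is a choice made for this sketch; in the Activity it may be more natural to keep the existing catch blocks and log the failure before deciding whether to enable the camera view.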

Source code: https://github.com/jiangdongguo/OpenCV4Android (stars and forks welcome)

Updated 2018-3-13

IV. Building with CMake and upgrading to OpenCV 3.4.1

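The project above has two annoyances: (1) ndk-build has to be run by hand every time the .so is built; (2) there is no code completion and no error or warning highlighting when writing C/C++ code. Either one is unpleasant, so today I am refactoring the project on top of OpenCV 3.4.1 and building it with CMake.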

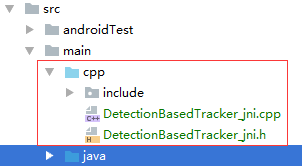

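1. Create the app/src/main/cpp directory. Copy "DetectionBasedTracker_jni.cpp" and "DetectionBasedTracker_jni.h" from the jni directory into it, and copy the entire include directory from ..\opencv-3.4.1-android-sdk\OpenCV-android-sdk\sdk\native\jni\ in the OpenCV 3.4.1 SDK into the same cpp directory. The whole app/src/main/jni directory can then be deleted.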

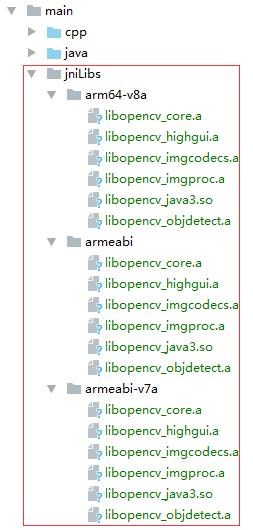

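2. Create the app/src/main/jniLibs directory and copy the shared (.so) and static (.a) libraries for the relevant ABIs from ..\opencv-3.4.1-android-sdk\OpenCV-android-sdk\sdk\native\libs and staticlibs in the OpenCV 3.4.1 SDK into it. Then delete the libs and obj directories.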

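3. Create a script file named CMakeLists.txt in the app directory; it holds the script CMake needs for the build. Note that CMakeLists.txt can only import and link the static and shared libraries that you actually placed in the jniLibs directory: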

# Sets the minimum version of CMake required to build the native

# library. You should either keep the default value or only pass a

# value of 3.4.0 or lower.

cmake_minimum_required(VERSION 3.4.1)

# Creates and names a library, sets it as either STATIC

# or SHARED, and provides the relative paths to its source code.

# You can define multiple libraries, and CMake builds it for you.

# Gradle automatically packages shared libraries with your APK.

set(CMAKE_VERBOSE_MAKEFILE on)

set(libs "${CMAKE_SOURCE_DIR}/src/main/jniLibs")

include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/include)

#--------------------------------------------------- import ---------------------------------------------------#

add_library(libopencv_java3 SHARED IMPORTED )

set_target_properties(libopencv_java3 PROPERTIES

IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_java3.so")

add_library(libopencv_core STATIC IMPORTED )

set_target_properties(libopencv_core PROPERTIES

IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_core.a")

add_library(libopencv_highgui STATIC IMPORTED )

set_target_properties(libopencv_highgui PROPERTIES

IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_highgui.a")

add_library(libopencv_imgcodecs STATIC IMPORTED )

set_target_properties(libopencv_imgcodecs PROPERTIES

IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_imgcodecs.a")

add_library(libopencv_imgproc STATIC IMPORTED )

set_target_properties(libopencv_imgproc PROPERTIES

IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_imgproc.a")

add_library(libopencv_objdetect STATIC IMPORTED )

set_target_properties(libopencv_objdetect PROPERTIES

IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_objdetect.a")

#add_library(libopencv_calib3d STATIC IMPORTED )

#set_target_properties(libopencv_calib3d PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_calib3d.a")

#

#

#add_library(libopencv_dnn STATIC IMPORTED )

#set_target_properties(libopencv_dnn PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_dnn.a")

#

#add_library(libopencv_features2d STATIC IMPORTED )

#set_target_properties(libopencv_features2d PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_features2d.a")

#

#add_library(libopencv_flann STATIC IMPORTED )

#set_target_properties(libopencv_flann PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_flann.a")

#

#add_library(libopencv_ml STATIC IMPORTED )

#set_target_properties(libopencv_ml PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_ml.a")

#

#add_library(libopencv_photo STATIC IMPORTED )

#set_target_properties(libopencv_photo PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_photo.a")

#

#add_library(libopencv_shape STATIC IMPORTED )

#set_target_properties(libopencv_shape PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_shape.a")

#

#add_library(libopencv_stitching STATIC IMPORTED )

#set_target_properties(libopencv_stitching PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_stitching.a")

#

#add_library(libopencv_superres STATIC IMPORTED )

#set_target_properties(libopencv_superres PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_superres.a")

#

#add_library(libopencv_video STATIC IMPORTED )

#set_target_properties(libopencv_video PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_video.a")

#

#add_library(libopencv_videoio STATIC IMPORTED )

#set_target_properties(libopencv_videoio PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_videoio.a")

#

#add_library(libopencv_videostab STATIC IMPORTED )

#set_target_properties(libopencv_videostab PROPERTIES

# IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_videostab.a")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=gnu++11 -fexceptions -frtti")

add_library( # Sets the name of the library.

opencv341

# Sets the library as a shared library.

SHARED

# Provides a relative path to your source file(s).

# Associated headers in the same location as their source

# file are automatically included.

src/main/cpp/DetectionBasedTracker_jni.cpp)

find_library( # Sets the name of the path variable.

log-lib

# Specifies the name of the NDK library that

# you want CMake to locate.

log)

target_link_libraries(opencv341 android log

libopencv_java3 #used for java sdk

#17 static libs in total

#libopencv_calib3d

libopencv_core

#libopencv_dnn

#libopencv_features2d

#libopencv_flann

libopencv_highgui

libopencv_imgcodecs

libopencv_imgproc

#libopencv_ml

libopencv_objdetect

#libopencv_photo

#libopencv_shape

#libopencv_stitching

#libopencv_superres

#libopencv_video

#libopencv_videoio

#libopencv_videostab

${log-lib}

)

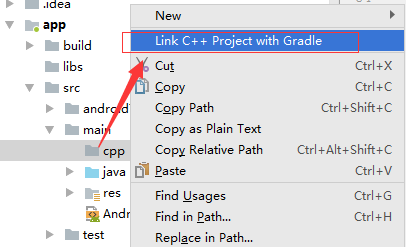

4. Right-click the app/src/main/cpp directory, choose "Link C++ Project with Gradle", and browse to this project's CMakeLists.txt to link the C++ build to Gradle.

5. Update the openCVLibrary330 module in the project to openCVLibrary341 and edit the build.gradle file in the app directory:

apply plugin: 'com.android.application'

android {

compileSdkVersion 25

defaultConfig {

// ... code omitted

externalNativeBuild {

cmake {

arguments "-DANDROID_ARM_NEON=TRUE", "-DANDROID_TOOLCHAIN=clang","-DCMAKE_BUILD_TYPE=Release"

//'-DANDROID_STL=gnustl_static'

cppFlags "-std=c++11","-frtti", "-fexceptions"

}

}

// Build .so files only for these target ABIs

ndk{

abiFilters 'armeabi-v7a','arm64-v8a','armeabi'

}

}

// ... code omitted

externalNativeBuild {

cmake {

path 'CMakeLists.txt'

}

}

}

dependencies {

// ... code omitted

implementation project(':openCVLibrary341')

}
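One consequence of the CMake setup is worth spelling out. The add_library call in CMakeLists.txt names the target opencv341, so the build should produce libopencv341.so instead of libdetection_based_tracker.so. Assuming the Activity still calls System.loadLibrary("detection_based_tracker") as in Part III, that argument would need to change to "opencv341". The sketch below uses the JDK's System.mapLibraryName to show how a library name maps to a file name; LibNames is a hypothetical helper, and on a desktop JVM the prefix and suffix depend on the operating system.

```java
/** Hypothetical helper: shows which file name a System.loadLibrary argument maps to. */
final class LibNames {

    /** Returns the platform-specific file name for the given library name. */
    static String fileNameFor(String libraryName) {
        return System.mapLibraryName(libraryName);
    }

    public static void main(String[] args) {
        // The name passed to System.loadLibrary must match the CMake target name.
        System.out.println("opencv341 -> " + fileNameFor("opencv341"));
        System.out.println("detection_based_tracker -> "
                + fileNameFor("detection_based_tracker"));
    }
}
```

On Linux and Android the mapping adds the lib prefix and the .so suffix, so the two names resolve to different files and the old name would no longer be found once the ndk-build output has been deleted.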