[Project Summary] ACM RecSys 2019

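Our deep learning course project is finished. We picked a thankless task: the ACM RecSys 2019 challenge, which requires building a recommender system based on sequence analysis. The dataset consists of sequences of online user actions, and the task is to predict which item is the target of the last action. Details are on the challenge homepage, ACM RecSys challenge 2019 | Home. The evaluation metric is MRR, computed by ranking the 25 impressed items of the last action. The top 20 teams on the leaderboard already score above 0.66. That means they rank the clicked item very close to the top, at a harmonic-mean rank of about 1.5.

In our first attempt we split the sessions into long and short sequences and modeled them separately. The result was only at the baseline level (a little over 0.20). Since 1/MRR is the harmonic mean of the ranks, this roughly means that the actual item appears among our top five predictions on average. After the semester ended we redid the project using every piece of information available. The best model reached a little over 0.28, so on average the actual item appears roughly within the top 3 to 4 predictions.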
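To make the metric concrete, here is a minimal Python sketch of how MRR scores ranked impression lists. It is a hypothetical illustration, not the official challenge evaluation script. The function names and item ids are made up.

```python
from typing import Hashable, List, Sequence


def reciprocal_rank(ranked_items: Sequence[Hashable], clicked_item: Hashable) -> float:
    """Return 1/position (1-based) of the clicked item, or 0.0 if it is not in the list."""
    for position, item in enumerate(ranked_items, start=1):
        if item == clicked_item:
            return 1.0 / position
    return 0.0


def mean_reciprocal_rank(rankings: List[Sequence[Hashable]], clicked_items: List[Hashable]) -> float:
    """Average the reciprocal ranks over all click-outs (queries)."""
    scores = [reciprocal_rank(r, c) for r, c in zip(rankings, clicked_items)]
    return sum(scores) / len(scores)


# Three hypothetical click-outs; the clicked item "a" is ranked 1st, 2nd and 4th.
rankings = [
    ["a", "b", "c", "d"],
    ["b", "a", "c", "d"],
    ["d", "c", "b", "a"],
]
clicked = ["a", "a", "a"]
print(round(mean_reciprocal_rank(rankings, clicked), 4))  # 0.5833
```

Here the score is (1 + 1/2 + 1/4) / 3 = 7/12. In the challenge, each list would hold the (up to) 25 impressed items of one click-out.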

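The literature shows that most sequence-based recommender systems use GRU networks. Limited by my hardware, I could not test many hyperparameters. The only conclusion I reached is that more GRU layers do not seem to be better. This held for both of our models: the first one, which models long and short sequences separately, and the second one, which uses all fields. In both cases the submitted results show a single GRU layer outperforming multiple GRU layers, possibly because stacked GRUs overfit more easily.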
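Counting parameters is one way to make the overfitting explanation more concrete. The sketch below does this for a stacked, unidirectional GRU, following the parameter layout of PyTorch's `torch.nn.GRU`. In that layout, each of the three gates has an input weight matrix, a recurrent weight matrix and two bias vectors. The sketch itself needs no deep-learning library. The hidden size of 100 is a hypothetical value chosen for illustration; the 157-dimensional input matches the item representation in the report.

```python
def gru_layer_params(input_size: int, hidden_size: int) -> int:
    """Trainable parameters of one unidirectional GRU layer (PyTorch layout).

    Each of the 3 gates (reset, update, candidate) has an input weight matrix
    (hidden x input), a recurrent weight matrix (hidden x hidden) and two bias
    vectors (bias_ih and bias_hh), each of length hidden.
    """
    return 3 * hidden_size * (input_size + hidden_size) + 6 * hidden_size


def stacked_gru_params(input_size: int, hidden_size: int, num_layers: int) -> int:
    """Layer 1 reads the item vectors; every further layer reads the previous hidden states."""
    total = gru_layer_params(input_size, hidden_size)
    total += (num_layers - 1) * gru_layer_params(hidden_size, hidden_size)
    return total


# Hypothetical setting: 157-dim item representation, hidden size 100.
for layers in (1, 2, 3):
    print(layers, stacked_gru_params(157, 100, layers))
```

This prints 77700, 138300 and 198900 parameters for 1, 2 and 3 layers. Each extra layer adds about 60k parameters while the amount of training data stays the same.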

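Below is the TeX source. The only external files it references are 11 images (Figure1 to Figure11). Figure6 to Figure10 are experiment plots, which are too ugly to show here. Figures 1 to 5 and 11 follow the TeX source.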

\documentclass{article}

%http://cs231n.stanford.edu/project.html

% if you need to pass options to natbib, use, e.g.:

% \PassOptionsToPackage{numbers, compress}{natbib}

% before loading neurips_2019

% ready for submission

% \usepackage{neurips_2019}

% to compile a preprint version, e.g., for submission to arXiv, add the

% [preprint] option:

% \usepackage[preprint]{neurips_2019}

% to compile a camera-ready version, add the [final] option, e.g.:

\usepackage[final]{neurips_2019}

% to avoid loading the natbib package, add option nonatbib:

% \usepackage[nonatbib]{neurips_2019}

\usepackage[utf8]{inputenc} % allow utf-8 input

\usepackage[T1]{fontenc} % use 8-bit T1 fonts

\usepackage{hyperref} % hyperlinks

\usepackage{url} % simple URL typesetting

\usepackage{booktabs} % professional-quality tables

\usepackage{amsfonts} % blackboard math symbols

\usepackage{nicefrac} % compact symbols for 1/2, etc.

\usepackage{microtype} % microtypography

\usepackage{amsmath}

\usepackage{geometry}

\usepackage{setspace}

\usepackage{algorithm}

\usepackage{algorithmicx}

\usepackage{algpseudocode}

\usepackage{graphicx} % insert images

\title{A Session-Based and Sequence-Aware Recommender System}

% The \author macro works with any number of authors. There are two commands

% used to separate the names and addresses of multiple authors: \And and \AND.

%

% Using \And between authors leaves it to LaTeX to determine where to break the

% lines. Using \AND forces a line break at that point. So, if LaTeX puts 3 of 4

% authors names on the first line, and the last on the second line, try using

% \AND instead of \And before the third author name.

\author{%

Yang Cao, Yiting Li, Jianhua Jin \\

Department of Information Management and Information Systems\\\& Finance Department \\

Shanghai University of Finance and Economics\\

% examples of more authors

% \And

% Coauthor \\

% Affiliation \\

% Address \\

% \texttt{email} \\

% \AND

% Coauthor \\

% Affiliation \\

% Address \\

% \texttt{email} \\

% \And

% Coauthor \\

% Affiliation \\

% Address \\

% \texttt{email} \\

% \And

% Coauthor \\

% Affiliation \\

% Address \\

% \texttt{email} \\

}

\begin{document}

\maketitle

%%%%abstract

\begin{abstract}

We apply different models to the challenge of session-based and sequence-aware recommendation, using the \emph{mean reciprocal rank} (MRR) as the metric. We choose GRU4Rec as our basic model and make some small changes to it. In our first \emph{Long-short GRU4Rec Model}, we experiment with different numbers of GRU layers and find that more layers give a lower MRR than a single GRU layer. We then construct a second model, the \emph{Integrated GRU4Rec Model}. It adds further information, such as the devices users used and the cities in which the items are located, and improves the leaderboard MRR from 0.206 to 0.282.

\end{abstract}

%%%%1 Introduction

\section{Introduction}

Traditional recommendation approaches such as collaborative filtering are mainly based on correlations between users and items. However, these methods assume that items are independent of each other and are therefore insufficient for dealing with sequential information. Moreover, in real-world fields such as e-commerce, raw user-behavior data is typically stored as sessions. How to capture users' long-term preferences and how to exploit session-based information have become major research questions in recommender systems.

The ACM Recommender Systems conference (RecSys) is the premier international forum for the presentation of new research results, systems and techniques in the broad field of recommender systems. The RecSys Challenge 2019, organized with trivago, asks participants to predict which accommodation a user will click on at the end of their trivago journey. The prediction draws on the trail of implicit and explicit signals that users leave behind during their visit: interactions with content, search refinements, and filter usage. In this challenge, a recommender system needs to be developed that presents travellers with accommodation information based on their interests and latent preferences. Motivated by this objective, we want to find an efficient approach to session-based and sequence-aware recommendation.

%%%%2 Related work

\section{Related work}

Many works focus on session-based neural recommendation. We studied several highly influential papers for inspiration; they are summarized in Table 1.

\begin{table}[h]

\centering

\caption{Literature Review}

\begin{spacing}{1.2}

\centering

\begin{tabular}{p{200 pt}p{40 pt}p{70 pt}}

\\[-2mm]

\toprule

\textbf{Paper} & \textbf{Venue} & \textbf{Feature} \\

\midrule

Session-based recommendations with recurrent neural networks & ICLR 2016 & GRU4Rec \\

Parallel Recurrent Neural Network Architectures for Feature-rich Session-based Recommendations & RecSys 2016 & GRU4Rec $+$\quad Item features \\

Incorporating Dwell Time in Session-Based Recommendations with Recurrent Neural Networks & RecSys 2017 & GRU4Rec $+$\quad Dwell Time \\

Personalizing Session-based Recommendations with Hierarchical Recurrent Neural Networks & RecSys 2017 & HGRU4Rec \\

\bottomrule

\end{tabular}

\end{spacing}

\end{table}

Zhang et al.~\cite{Zhang2019DeepLB} surveyed previous work on deep-learning-based recommender systems and pointed out the strengths of RNN models for session-based tasks.

In 2016, Hidasi et al.~\cite{Hidasi2016SessionbasedRW} found that modeling the whole session yields more accurate recommendations. They were the first to apply RNNs to session-based recommendation, and they studied three loss functions for the task. On the RecSys'15 dataset, their GRU4Rec model reaches a recall of around 0.6 and an MRR of 0.2693. However, items typically have rich feature representations, such as pictures and text descriptions, that can also be used to model sessions. In the same year, Hidasi et al.~\cite{Hidasi2016ParallelRN} investigated how these features can be exploited and proposed a parallel RNN model (p-RNN). On the VIDSL dataset, it improves Recall by 12.41\% and MRR by 18.72\% over their Item-KNN baseline.

Bogina and Kuflik~\cite{Bogina2017IncorporatingDT} incorporated dwell time into the existing RNN framework by boosting items whose dwell time exceeds a predefined threshold. On RecSys'15, their best model reached a recall of 0.7885 and an MRR of 0.5834. In 2017, Quadrana et al.~\cite{Quadrana2017PersonalizingSR} devised a hierarchical RNN model. It relays and evolves the latent hidden states of the RNNs across user sessions by adding a user-level GRU. Results on two industry datasets show large improvements over GRU4Rec.

Other work has compared the factors that affect the performance of DL-based models. Fang et al.~\cite{Fang2019DeepLS} (2019) tested several enhancements to GRU4Rec. They found that factors such as dwell time, the choice of loss function, and data augmentation have a significant influence on its overall performance.

%%%%3Data

\section{Data}

Our data comes from the RecSys Challenge 2019 and is available on the website:

\begin{center}

\url{https://recsys.trivago.cloud/challenge/dataset/}

\end{center}

\subsection{Data preview}

\paragraph{Problem definition}

The data provided for this challenge consists of a training set, a test set, and metadata for accommodations (items). The training set contains user actions up to a specified time (split date). It can be used to build models of user interactions. It specifies the type of action that has been performed (filter usage, search refinements, item interactions, item searches, item click-outs), as well as information about impressed items and prices at the time of a click-out. The recommendations should be provided for a test set that contains information about sessions after the split date. The test set is missing the information about the accommodations that have been clicked in the last part of the sessions. The required output is a list of at most 25 items for each click-out, ordered by preference for the specific user. The higher the actually clicked item appears on the list, the higher the score.

\subsubsection{Item metadata}

The item metadata describes the items, and we use it as features for each item. There are 927142 items in the data, and different items have different numbers of properties. The item metadata has the following fields:

\begin{itemize}

\item \textbf{item\_id:} identifier of the accommodation as used in the reference values for item related action types, e.g. clickout\_item and item interactions, and impression list.

\item \textbf{properties:} pipe-separated list of filters that are applicable for the given item.

\end{itemize}

Note that there are 157 different properties in total, and each item has 20 properties on average.

\subsubsection{Session actions data (training and test set)}

Training set (whose shape is 15932992$\times$12) and test set (whose shape is 3782335$\times$12) have the same 12 features, which are shown below:

\begin{itemize}

\item \textbf{user\_id:} identifier of the user.

\item \textbf{session\_id:} identifier of each session.

\item \textbf{timestamp:} UNIX timestamp for the time of the interaction.

\item \textbf{step:} step in the sequence of actions within the session.

\item \textbf{action\_type:} identifier of the action that has been taken by the user.

\item \textbf{reference:} reference value of the action as described for the different action types.

\item \textbf{platform:} country platform that was used for the search.

\item \textbf{city:} name of the current city of the search context.

\item \textbf{device:} device that was used for the search.

\item \textbf{current\_filters:} list of pipe-separated filters that were active at the given timestamp.

\item \textbf{impressions:} list of pipe-separated items that were displayed to the user at the time of a click-out (only when action\_type = clickout\_item).

\item \textbf{prices:} list of pipe-separated prices of the items in impressions (only when action\_type = clickout\_item).

\end{itemize}

Note that there are 10 different values of \textbf{action\_type}; the reference of each is as follows:

\begin{itemize}

\item \textbf{interaction item deals:} item\_id.

\item \textbf{interaction item image:} item\_id.

\item \textbf{search for destination:} totally 25309 destinations.

\item \textbf{filter selection:} totally 205 filters.

\item \textbf{change of sort order:} totally 8 different sort orders.

\item \textbf{search for item:} item\_id.

\item \textbf{interaction item rating:} item\_id.

\item \textbf{clickout item:} item\_id.

\item \textbf{interaction item info:} item\_id.

\item \textbf{search for poi:} totally 15118 POIs.

\end{itemize}

Only 6 of them have an item\_id as reference. The other 4 action types need special treatment.

\subsection{Data preprocess}

\subsubsection{Item metadata}

Considering the large number of items, a traditional 1-of-N representation of items would lead to a huge output dimension and a tremendous number of parameters. We therefore use the 157 properties in the item metadata to construct a 157-dimensional representation of each item. This lowers the complexity of the models and makes them easier to extend, since any item can be represented by its properties.
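
The encoding is not spelled out above. As an illustration, one natural reading of this representation (assumed here) is a multi-hot vector. Writing $p_1,\dots,p_{157}$ for the properties and $P_j$ for the set of properties of item $j$,
\[
\mathbf{x}_j\in\{0,1\}^{157},\qquad
x_{j,k}=\begin{cases}1, & p_k\in P_j,\\ 0, & \text{otherwise}.\end{cases}
\]
An item with the average of 20 properties then has 20 non-zero entries, whereas a 1-of-N encoding would need a vector of length 927142.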

\subsubsection{Analysis of action types and reference}

Many successive actions share the same timestamp (as well as the same reference and action type), so we first drop these duplicates. In the resulting statistics, \textbf{interaction item rating} and \textbf{clickout item} are the most frequent of the 10 action types, accounting for 35\% and 30\% of actions, respectively. The other 4 action types whose reference is an item\_id are relatively rare. In addition, \textbf{interaction item rating} and \textbf{clickout item} can be distinguished through the \textbf{impressions} feature, which is only present for click-outs. We therefore treat these 6 action types as a single action type.

The other 4 action types, whose references are not item\_ids, can be regarded as states. For example, a \textbf{filter selection} influences all following actions until the next \textbf{filter selection} happens. We therefore treat these 4 action types as supplementary information, so that all references within a sequence are item\_ids.

\begin{itemize}

\item \textbf{search for destination:} The destination information is almost entirely covered by the feature \textbf{city}, so we drop it.

\item \textbf{filter selection:} The information in the selected filter is almost entirely covered by the feature \textbf{current\_filters}, so we drop it.

\item \textbf{change of sort order:} There are 8 different sort orders in total. We represent them with 4 binary dimensions (distance, price, recommended, rating). This way, orders that combine two criteria share dimensions with their single-criterion counterparts:

\begin{itemize}

\item distance only: 1 0 0 0

\item price and recommended: 0 1 1 0

\item rating and recommended: 0 0 1 1

\item our recommendations: 0 0 1 0

\item interaction sort button: 0 0 0 0

\item rating only: 0 0 0 1

\item distance and recommended: 1 0 1 0

\item price only: 0 1 0 0

\end{itemize}

\item \textbf{search for poi:} There are 15118 POIs in total; we assign each an index.

\end{itemize}

\subsubsection{Features in training set except for action type and reference}

\begin{itemize}

\item \textbf{user\_id:} Only 33614 users (4.60\% of all users) appear in both the training and the test set, so user\_id carries little usable information and is dropped.

\item \textbf{session\_id:} session\_id only identifies the session an action belongs to. It is used to group actions into sequences and is dropped as an input feature.

\item \textbf{timestamp:} timestamp gives the time at which an action takes place; we take the difference between adjacent timestamps as the duration of an action.

\item \textbf{step:} The ordering given by step is fully captured by timestamp, so it is dropped.

\item \textbf{platform:} There are 55 different platforms in total; we assign each an index.

\item \textbf{city:} There are 36703 different cities in total; we assign each an index for embedding.

\item \textbf{device:} There are 3 different devices (mobile, desktop, tablet); we assign each an index.

\item \textbf{current\_filters:} There are 201 different filters in total; we assign each an index.

\item \textbf{impressions:} Since impressions contain at most 25 item\_ids, we reserve 3925 ($25 \times 157$) dimensions to represent them.

\item \textbf{prices:} Corresponding to \textbf{impressions}, we reserve 25 dimensions to represent prices.

\end{itemize}
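
For illustration, assume that the duration of an action is the time until the next action in the same session. With timestamps $\tau_1\le\tau_2\le\dots\le\tau_T$, this gives
\[
d_t=\tau_{t+1}-\tau_t,\qquad t=1,\dots,T-1,
\]
so timestamps $(10,12,20)$ yield durations $d_1=2$ and $d_2=8$. The last action has no successor, so $d_T$ has to be filled in separately (for example with 0). This choice is an assumption of the illustration.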

\subsubsection{Other points}

Sequences in the dataset are very short (Figure~\ref{fig1}). Nearly a quarter of them have length 1, which means the session only contains the final \textbf{clickout item} action, and we need to predict its item\_id. Our first \emph{Long-short GRU4Rec Model} therefore consists of a long-sequence model and a short-sequence model. Later, our second \emph{Integrated GRU4Rec Model} uses more features, so there is no longer any need to divide sequences into long and short ones.

\begin{figure}[h]

\centering

\includegraphics[width=1\linewidth]{figure/distribution.PNG}

\caption{Distribution of sequence length}

\label{fig1}

\end{figure}


%%%%4Methods

\section{Methods}

\label{others}

%4.1Long-short GRU4Rec Model

\subsection{Long-short GRU4Rec Model}

The \emph{Long-short GRU4Rec Model} consists of a model for long sequence data and a model for short sequence data. The long-sequence model uses item representations, item prices, action durations, sort orders, filters, and POIs. The short-sequence model uses the features \textbf{impressions} and \textbf{prices}. In both the training and test data, some sessions contain more than one action. Others contain only a single action, which is always \textbf{clickout item}, and sequence algorithms cannot be fitted to such single-action sessions. We therefore divide the data in both sets into \emph{\textbf{long sequence data}} (length $> 1$) and \emph{\textbf{short sequence data}} (length $= 1$), and design and try different models and training methods for each.

\subsubsection{Models for long sequence data}

In long sequence data, each session has more than one action, so this kind of data can be modeled with the GRU4Rec neural network. Figure~\ref{fig2} shows the structure of the network. The input is a sequence of items, which passes through different numbers of GRU layers followed by a fully connected layer. Finally, a softmax layer produces a 157-dimensional output in the item-representation space, which is used to rank the impressed items.
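
For reference, each GRU layer updates its hidden state $\mathbf{h}_t$ from the input $\mathbf{x}_t$ with the standard gated recurrence used in GRU4Rec~\cite{Hidasi2016SessionbasedRW} (bias terms omitted):
\begin{align*}
\mathbf{z}_t &= \sigma\left(W_z\mathbf{x}_t + U_z\mathbf{h}_{t-1}\right),\\
\mathbf{r}_t &= \sigma\left(W_r\mathbf{x}_t + U_r\mathbf{h}_{t-1}\right),\\
\hat{\mathbf{h}}_t &= \tanh\left(W\mathbf{x}_t + U\left(\mathbf{r}_t\odot\mathbf{h}_{t-1}\right)\right),\\
\mathbf{h}_t &= \left(1-\mathbf{z}_t\right)\odot\mathbf{h}_{t-1} + \mathbf{z}_t\odot\hat{\mathbf{h}}_t,
\end{align*}
where $\sigma$ is the logistic sigmoid and $\odot$ the element-wise product. When layers are stacked, the hidden states of one layer serve as the inputs $\mathbf{x}_t$ of the next, so every extra layer adds a full set of weight matrices.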


\begin{figure}[h]

\centering

\includegraphics[width=1\linewidth]{figure/figure_model_long.PNG}

\caption{GRU4Rec neural network}

\label{fig2}

\end{figure}


\subsubsection{Models for short sequence data}

Short sequence data cannot be treated as sequence data because each session has only one action, so we tried different, non-recurrent models for it.

The \textbf{impressions} feature of each action holds a list of item\_ids with a maximum length of 25, so we take these 25 items as the input of our model. Lists with fewer than 25 items are padded with zeros. Every item is represented by a 157-dimensional vector, one dimension per property in the item metadata. Moreover, we append one more dimension to each item vector, holding the corresponding \textbf{price} of the item. The output is a softmax layer of dimension \emph{number of samples $\times$ 157}.
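
The notation below is ours, introduced only for illustration. Consider a click-out with $m\le 25$ impressed items $i_1,\dots,i_m$ and prices $c_1,\dots,c_m$. Its input is the zero-padded matrix
\[
X=\begin{bmatrix}
\mathbf{x}_{i_1}^{\top} & c_1\\
\vdots & \vdots\\
\mathbf{x}_{i_m}^{\top} & c_m\\
\mathbf{0}^{\top} & 0\\
\vdots & \vdots\\
\mathbf{0}^{\top} & 0
\end{bmatrix}\in\mathbb{R}^{25\times 158},
\]
where $\mathbf{x}_i\in\mathbb{R}^{157}$ is the representation of item $i$ and the last $25-m$ rows are padding.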

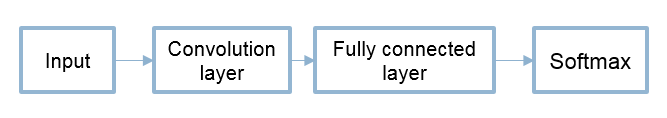

With the input data prepared, we design two simple models for the short sequence task. One is a convolutional layer followed by a fully connected layer, which we call the CNN model. The other is a single fully connected layer (Dense). Figures~\ref{fig3} and~\ref{fig4} show the structures of the two models.

\begin{figure}[h]

\centering

\includegraphics[width=1\linewidth]{figure/figure_model_short_1.PNG}

\caption{Structure of the CNN model for short sequence data}

\label{fig3}

\end{figure}


\begin{figure}[h]

\centering

\includegraphics[width=1\linewidth]{figure/figure_model_short_2.PNG}

\caption{Structure of the Dense model for short sequence data}

\label{fig4}

\end{figure}


%4.2Integrated GRU4Rec Model

\subsection{Integrated GRU4Rec Model}

Since the \emph{Long-short GRU4Rec Model} only reaches the baseline level (an MRR of around 0.2), we construct the \emph{Integrated GRU4Rec Model} by adding more features. Its input array is shown in Table 2:

\begin{table}[h]

\centering

\caption{Input of Integrated GRU4Rec Model}

\begin{spacing}{1.2}

\centering

\begin{tabular}{p{50 pt}p{100 pt}p{200 pt}}

\\[-2mm]

\toprule

\textbf{Index} & \textbf{Dimensions} & \textbf{Feature} \\

\midrule

a1-a157 & 157 & item representation \\

a158 & 1 & item price \\

a159 & 1 & action duration \\

a160-a163 & 4 & sort order \\

a164-a364 & 201 & current filters \\

a365-a367 & 3 & device \\

a368-a422 & 55 & platform \\

a423 & 1 & index of POIs(0-15116) \\

a424 & 1 & index of cities(0-36072) \\

a425-a4374 & 3950 & impressions(3925) and prices(25)\\

\bottomrule

\end{tabular}

\end{spacing}

\end{table}
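
As a consistency check, the feature blocks in Table 2 add up to the full input width. The last block consists of $25\times157=3925$ impression dimensions and 25 price dimensions:
\[
157+1+1+4+201+3+55+1+1+(3925+25)=4374.
\]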

Unlike the previous sequential model, this model builds separate GRU layers for each part of the input array. It concatenates the outputs of all GRUs and feeds the result into a Dense layer. POIs and cities have too many categories for one-hot encoding, so we first embed them into 32 and 64 dimensions, respectively. The structure of the \emph{Integrated GRU4Rec Model} is shown in Figure~\ref{fig5}.

\begin{figure}[h]

\centering

\includegraphics[width=1\linewidth]{figure/Integrated.PNG}

\caption{Structure of Integrated GRU4Rec Model}

\label{fig5}

\end{figure}

%4.3Training methods

\subsection{Training methods}

Traditional sequence-based models only consider the sequence of items, whereas our model also uses much other information, and the evaluation metric is MRR. We therefore cannot apply tricks such as session-parallel mini-batches. Since the dataset is large, we train with mini-batches of size 64. Every model outputs a 157-dimensional item representation, so we rank the impressed items by the similarity between this output and each item's representation. Following the literature, we use the cross-entropy loss, which has been reported to achieve the best results, and optimize with \emph{Adam}. Hyperparameters such as the number of GRU layers and the learning rate are discussed in the following sections.
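
The similarity measure is not fixed above. As one illustrative choice (an assumption here), write $\hat{\mathbf{y}}\in\mathbb{R}^{157}$ for the model output and $\mathbf{x}_j$ for the representation of impressed item $j$. Cosine similarity then gives
\[
s_j=\frac{\hat{\mathbf{y}}^{\top}\mathbf{x}_j}{\|\hat{\mathbf{y}}\|\,\|\mathbf{x}_j\|},
\]
and the impressed items are returned in decreasing order of $s_j$.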

%%%%5Experiments

\section{Experiments}

\subsection{Evaluation}

The \emph{mean reciprocal rank} (MRR) is used as the metric to evaluate the predictions. MRR is a widely used metric for evaluating search and ranking algorithms. For each query, the score is 1 if the first result is the relevant one, $\frac{1}{2}$ if the second is, $\frac{1}{n}$ if the $n$-th is, and 0 if no result matches. The final score is the average of these scores over all queries. In this task, each query is a click-out, the prediction is a ranked list of the impressed items, and the relevant item is the one the user actually clicked. The formula of MRR is

\[
\mathrm{MRR}=\frac{1}{|Q|}\sum_{i=1}^{|Q|}\frac{1}{\text{rank}_i},
\]

where $|Q|$ is the number of queries and $\text{rank}_i$ is the rank position of the first relevant item for the $i$-th query.
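
As a small illustration with hypothetical ranks, suppose there are three click-outs whose clicked items appear at positions 1, 2 and 4 of the predicted lists. Then
\[
\mathrm{MRR}=\frac{1}{3}\left(\frac{1}{1}+\frac{1}{2}+\frac{1}{4}\right)=\frac{7}{12}\approx 0.583.
\]
When every clicked item appears in its list, $1/\mathrm{MRR}$ is the harmonic mean of the ranks. An MRR of about $0.28$ therefore corresponds to a harmonic-mean rank of about $3.6$.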

\subsection{Performance}

\subsubsection{On valid}

As the \emph{Long-short GRU4Rec Model} does not perform well, we only evaluate it on the training set and through its leaderboard submission (Figure~\ref{fig12}). We validate the \emph{Integrated GRU4Rec Model} by holding out 30\% of the data in train.csv as a validation set. The training and validation losses of the best model we submitted to ACM RecSys 2019 are shown in Figures~\ref{fig6}--\ref{fig10}.

\begin{figure}[h]

\begin{minipage}[t]{0.3\linewidth}% side-by-side images, each in a minipage 0.3 of the line width; same below

\centering % center the inserted image

\includegraphics[width=1.4\textwidth]{figure/1.jpg}

\center

\caption{Epoch 1}% figure caption

\label{fig6}% label for cross-referencing

\end{minipage}

\hfill

\begin{minipage}[t]{0.3\linewidth}

\centering

\includegraphics[width=1.4\textwidth]{figure/2.jpg}

\center

\caption{Epoch 2}% figure caption

\label{fig7}

\end{minipage}

\end{figure}

% three images in one row (figure11,12, model3,4)

\begin{figure}[h]

\centering

\begin{minipage}[t]{0.3\linewidth}% side-by-side images, each in a minipage 0.3 of the line width; same below

\centering % center the inserted image

\includegraphics[width=1.4\textwidth]{figure/3.jpg}

\center

\caption{Epoch 3}% figure caption

\label{fig8}% label for cross-referencing

\end{minipage}

\hfill

\begin{minipage}[t]{0.3\linewidth}

\centering

\includegraphics[width=1.4\textwidth]{figure/4.jpg}

\center

\caption{Epoch 4}% figure caption

\label{fig9}

\end{minipage}

\hfill

\begin{minipage}[t]{0.3\linewidth}

\centering

\includegraphics[width=1.4\textwidth]{figure/5.jpg}

\center

\caption{Epoch 5}% figure caption

\label{fig10}

\end{minipage}

\end{figure}

Note that this model, which uses one GRU layer for each input array and one Dense layer, performs best. The other hyperparameter settings we tested produce similar curves. Puzzlingly, the validation loss first increases and then levels off, and the model already becomes stable after a single epoch.

\subsubsection{On test}

The best result we submitted to ACM RecSys 2019 with the \emph{Long-short GRU4Rec Model} is 0.206045. Curiously, this result does not change no matter how many GRU or Dense layers we use. The best result we submitted with the \emph{Integrated GRU4Rec Model} is 0.281651. We tested different numbers of GRU and Dense layers, learning rates, and some other hyperparameters. Surprisingly, a single GRU layer and a single Dense layer achieve the best result. Overall, adding more information clearly improves model performance. The submission screenshot is shown in Figure~\ref{fig12}.

\begin{figure}[h]

\centering

\includegraphics[width=1\linewidth]{figure/submit.PNG}

\caption{Submission result}

\label{fig12}

\end{figure}


%%%%6Conclusion

\section{Conclusion \& Future work}

In this paper, we apply different models to the challenge of session-based and sequence-aware recommendation. We choose GRU4Rec as our basic model and make some small changes to it. Adding more GRU layers to GRU4Rec gave a lower MRR than a single GRU layer, which may be caused by overfitting of the more complex model. We also added further information, such as the devices users used and the cities in which the items are located, and the MRR improved from 0.206 to 0.282.

Our future work will focus on incorporating more features, such as user representations and behavior time, to make recommendations more precise.

\bibliographystyle{unsrt}

\bibliography{refer}

\end{document}
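The main preprocessing and ranking steps described in the report can be sketched in plain Python. These steps are: dropping successive duplicates, computing action durations, the 4-dimensional sort-order code, the 157-dimensional property vector, and ranking impressions by similarity to the model output. This is a hypothetical illustration, not the project's actual code. All function names, the toy property vocabulary and the item ids are made up, and the last action's duration is set to 0 by assumption. Cosine similarity is also an assumed choice, since the report only says "similarity".

```python
import math
from typing import Dict, List, Sequence, Tuple

# 4-dim sort-order code (distance, price, recommended, rating) as listed in the report.
SORT_ORDER_CODE: Dict[str, Tuple[int, int, int, int]] = {
    "distance only": (1, 0, 0, 0),
    "price and recommended": (0, 1, 1, 0),
    "rating and recommended": (0, 0, 1, 1),
    "our recommendations": (0, 0, 1, 0),
    "interaction sort button": (0, 0, 0, 0),
    "rating only": (0, 0, 0, 1),
    "distance and recommended": (1, 0, 1, 0),
    "price only": (0, 1, 0, 0),
}


def drop_successive_duplicates(actions: List[dict]) -> List[dict]:
    """Drop an action that repeats the previous action's timestamp, type and reference."""
    kept: List[dict] = []
    for action in actions:
        key = (action["timestamp"], action["action_type"], action["reference"])
        if kept:
            last = kept[-1]
            if key == (last["timestamp"], last["action_type"], last["reference"]):
                continue
        kept.append(action)
    return kept


def durations(timestamps: Sequence[int]) -> List[int]:
    """Time until the next action; the last action gets 0 (an assumption)."""
    if not timestamps:
        return []
    return [nxt - cur for cur, nxt in zip(timestamps, timestamps[1:])] + [0]


def multi_hot(item_properties: Sequence[str], vocabulary: Sequence[str]) -> List[int]:
    """1 for every vocabulary property the item has (157 properties in the real data)."""
    present = set(item_properties)
    return [1 if prop in present else 0 for prop in vocabulary]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_impressions(output: Sequence[float], impressions: Dict[str, List[int]]) -> List[str]:
    """Impressed item ids sorted by similarity to the model output, best first."""
    return sorted(impressions, key=lambda item: cosine(output, impressions[item]), reverse=True)


# Toy example: 4 properties instead of 157, three impressed items.
vocab = ["wifi", "pool", "parking", "spa"]
items = {
    "101": multi_hot(["wifi", "pool"], vocab),
    "102": multi_hot(["parking"], vocab),
    "103": multi_hot(["wifi", "spa"], vocab),
}
model_output = [0.6, 0.3, 0.0, 0.1]  # stands in for the model's 157-dim softmax output
print(rank_impressions(model_output, items))  # ['101', '103', '102']
```

In the real data, the vocabulary would be the 157 item properties, and each click-out would contribute up to 25 impressed items.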

Share to learn, and let us make progress together!