datax使用之mysql2hdfs,hdfs2mysql

先看文章底部的注意事项

三、dataX案例

3.1 案例1(stream—>stream)

datax使用插件式开发,官方参考文档如下:https://github.com/alibaba/DataX/blob/master/dataxPluginDev.md

描述:streaming reader—>streaming writer (官网例子)

[root@hadoop01 home]# cd /usr/local/datax/

[root@hadoop01 datax]# vi ./job/first.json

内容如下:

{

"job": {

"content": [

{

"reader": {

"name": "streamreader",

"parameter": {

"sliceRecordCount": 10,

"column": [

{

"type": "long",

"value": "10"

},

{

"type": "string",

"value": "hello,你好,世界-DataX"

}

]

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"encoding": "UTF-8",

"print": true

}

}

}

],

"setting": {

"speed": {

"channel": 5

}

}

}

}

运行job:

[root@hadoop01 datax]# python ./bin/datax.py ./job/first.json

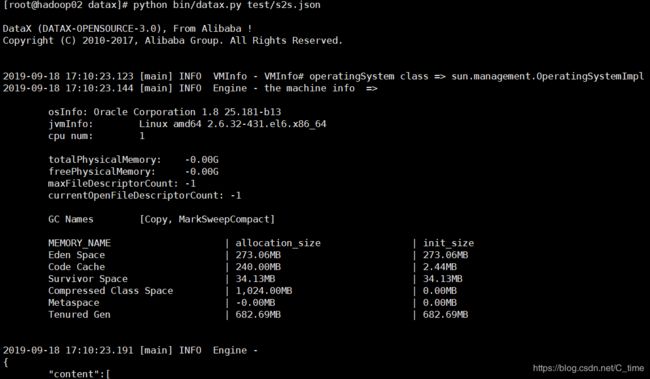

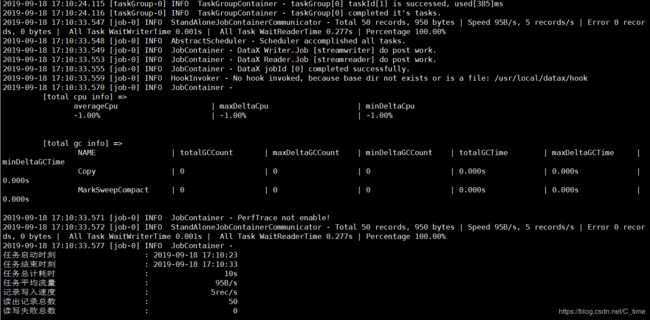

运行过程如下:

3.2 案例2(mysql—>hdfs)

描述:mysql reader----> hdfs writer

从mysql读取数据 reader

然后写入hdfs,使用writer

[root@hadoop01 datax]# vi ./job/mysql2hdfs.json

内容如下:

{

"job": {

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"column": [

"id",

"name"

],

"connection": [

{

"jdbcUrl": ["jdbc:mysql://hadoop01:3306/test"],

"table": ["userinfo"]

}

],

"password": "root",

"username": "root"

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"defaultFS": "hdfs://qf/",

"hadoopConfig":{

"dfs.nameservices": "qf",

"dfs.ha.namenodes.qf": "nn1,nn2",

"dfs.namenode.rpc-address.qf.nn1": "hadoop01:8020",

"dfs.namenode.rpc-address.qf.nn2": "hadoop02:8020",

"dfs.client.failover.proxy.provider.qf": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

},

"fileType": "orc",

"path": "/datax/mysql2hdfs/orcfull",

"fileName": "m2h01",

"column": [

{

"name": "col1",

"type": "INT"

},

{

"name": "col2",

"type": "STRING"

}

],

"writeMode": "append",

"fieldDelimiter": "\t",

"compress":"NONE"

}

}

}

],

"setting": {

"speed": {

"channel": "1"

}

}

}

}

注:

运行前,需提前创建好输出目录:

提 前 创 建 目 录 !!!! 不然报错的

[root@hadoop01 datax]# hdfs dfs -mkdir -p /datax/mysql2hdfs/orcfull

运行job:

[root@hadoop01 datax]# python ./bin/datax.py ./job/mysql2hdfs.json

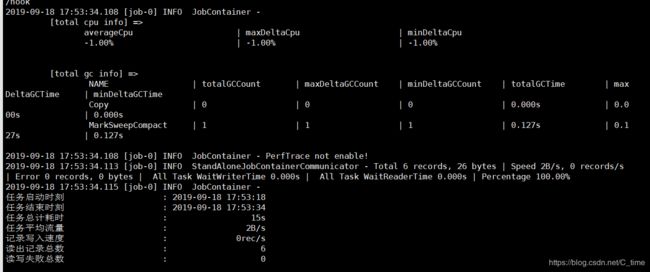

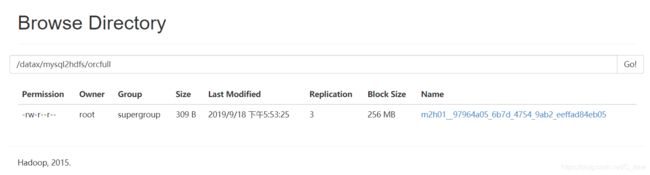

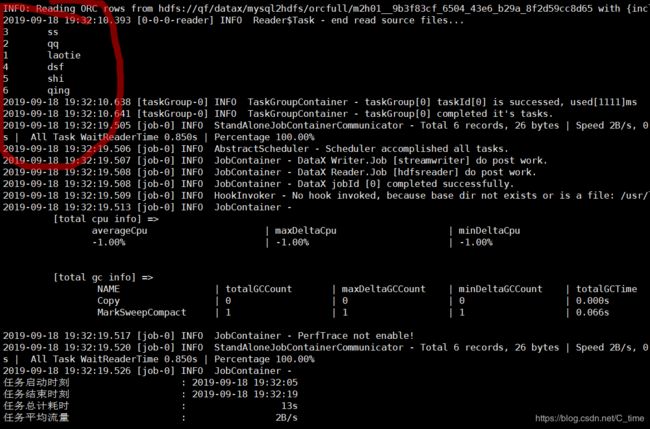

运行结果如下:

2019-09-18 17:53:23.793 [job-0] INFO HdfsWriter$Job - 由于您配置了writeMode append, 写入前不做清理工作, [/datax/mysql2hdfs/orc

2019-09-18 17:53:23.981 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2019-09-18 17:53:34.108 [job-0] INFO JobContainer - PerfTrace not enable!

2019-09-18 17:53:34.113 [job-0] INFO StandAloneJobContainerCommunicator - Total 6 records, 26 bytes | Speed 2B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2019-09-18 17:53:34.115 [job-0] INFO JobContainer -

任务启动时刻 : 2019-09-18 17:53:18

任务结束时刻 : 2019-09-18 17:53:34

任务总计耗时 : 15s

任务平均流量 : 2B/s

记录写入速度 : 0rec/s

读出记录总数 : 6

读写失败总数 : 0

然后建表看一下

"fileType": "orc"

"fieldDelimiter": "\t"

文件类型是orc

create table orcfull(

id int,

name string

)

row format delimited fields terminated by "\t"

stored as orc

location '/datax/mysql2hdfs/orcfull';

0: jdbc:hive2://hadoop01:10000> create table orcfull(

0: jdbc:hive2://hadoop01:10000> id int,

0: jdbc:hive2://hadoop01:10000> name string

0: jdbc:hive2://hadoop01:10000> )

0: jdbc:hive2://hadoop01:10000> row format delimited fields terminated by "\t"

0: jdbc:hive2://hadoop01:10000> stored as orc

0: jdbc:hive2://hadoop01:10000> location '/datax/mysql2hdfs/orcfull';

No rows affected (0.463 seconds)

0: jdbc:hive2://hadoop01:10000> select * from orcfull;

+-------------+---------------+--+

| orcfull.id | orcfull.name |

+-------------+---------------+--+

| 3 | ss |

| 2 | qq |

| 1 | laotie |

| 4 | dsf |

| 5 | shi |

| 6 | qing |

+-------------+---------------+--+

3.3 案例3(hdfs—>mysql)

描述:hdfs reader----> mysql writer

[root@hadoop01 datax]# vi ./job/hdfs2mysql.json

{

"job": {

"setting": {

"speed": {

"channel": 1

}

},

"content": [

{

"reader": {

"name": "hdfsreader",

"parameter": {

"path": "/datax/mysql2hdfs/orcfull/*",

"defaultFS": "hdfs://qf/",

"hadoopConfig":{

"dfs.nameservices": "qf",

"dfs.ha.namenodes.qf": "nn1,nn2",

"dfs.namenode.rpc-address.qf.nn1": "hadoop01:8020",

"dfs.namenode.rpc-address.qf.nn2": "hadoop02:8020",

"dfs.client.failover.proxy.provider.qf": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

},

"column": [

{

"index":0,

"type":"long"

},

{

"index":1,

"type":"string"

}

],

"fileType": "orc",

"encoding": "UTF-8",

"fieldDelimiter": "\t"

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"print": true

}

}

}

]

}

}

{

"job": {

"content": [

{

"reader": {

"name": "hdfsreader",

"parameter": {

"path": "/datax/mysql2hdfs/orcfull/*",

"defaultFS": "hdfs://qf/",

"hadoopConfig":{

"dfs.nameservices": "qf",

"dfs.ha.namenodes.qf": "nn1,nn2",

"dfs.namenode.rpc-address.qf.nn1": "hadoop01:8020",

"dfs.namenode.rpc-address.qf.nn2": "hadoop02:8020",

"dfs.client.failover.proxy.provider.qf": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

},

"column": [

{

"index":0,

"type":"long"

},

{

"index":1,

"type":"string"

}

],

"fileType": "orc",

"encoding": "UTF-8",

"fieldDelimiter": "\t"

}

},

"writer": {

"name": "mysqlwriter",

"parameter": {

"column": [

"id",

"name"

],

"connection": [

{

"jdbcUrl": "jdbc:mysql://hadoop01:3306/test",

"table": ["stu1"]

}

],

"password": "root",

"username": "root"

}

}

}

],

"setting": {

"speed": {

"channel": "1"

}

}

}

}

到hdfs的/datax/mysql2hdfs/orcfull下(上面我们通过datax从mysql导入到hdfs的) 我们将这个目录数据读出来 然后写到mysql去

注:

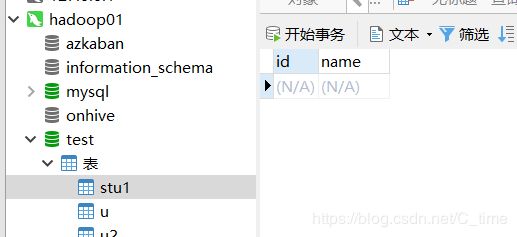

运行前,需提前创建好输出的stu1表:

CREATE TABLE `stu1` (

`id` int(11) DEFAULT NULL,

`name` varchar(32) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

运行job:

[root@hadoop01 datax]# python ./bin/datax.py ./job/hdfs2mysql.json

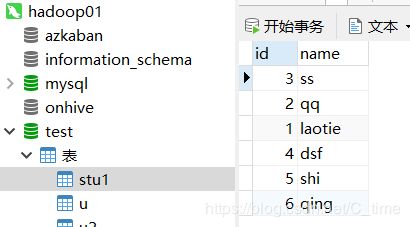

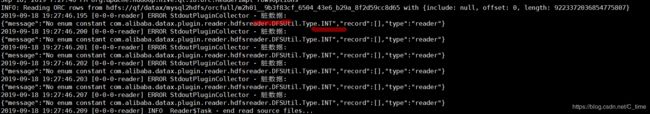

运行结果如下:

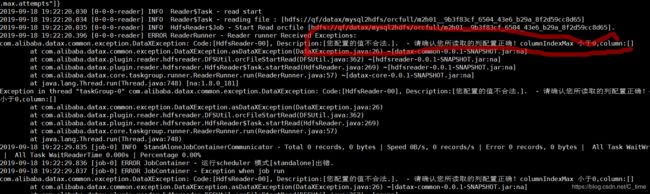

注意,列的类型,如果名称或者类型不对会有错误,我这儿采用所有列读写。

注意

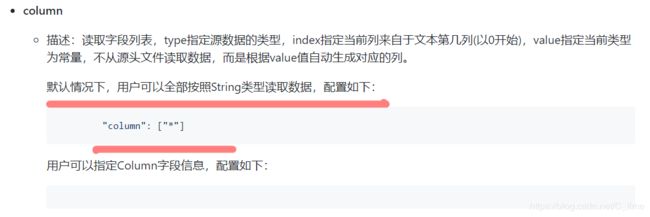

1.说"column": ["*"]的这种写法 是全部按照string类型读取

不是string类型就报错 所以如果字段中有不是string类型的需要单独写 一列一列声明 不能写*

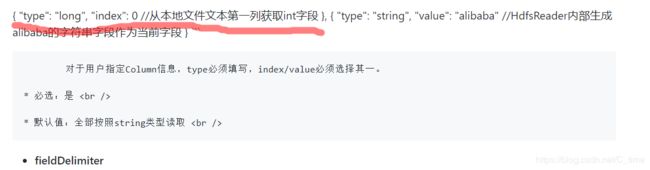

2.在表中的int类型在datax的json脚本中要写long 不然会提示你脏数据!!!

| DataX 内部类型 | HIVE 数据类型 |

|---|---|

| Long | TINYINT,SMALLINT,INT,BIGINT |

| Double | FLOAT,DOU |

| String | STRING,VARCHAR,CHAR |

| Boolean | BOOLEAN |

| Date | DATE,TIMESTAMP |

注 :这几个错误都是在阿里的文档中看到的解决办法

1.所以用到什么去什么的官方文档

2.

https://github.com/alibaba/DataX?spm=a2c4g.11186623.2.22.4eb64c07HZQR8M

进入到github 阿里将datax开源到这里了

3.fan到最下面, 比如我用到了hdfs的读写 就点进去看 有案例 案例下面还有参数解释 会有各种注意点!