Implementing Naive Bayes by Hand

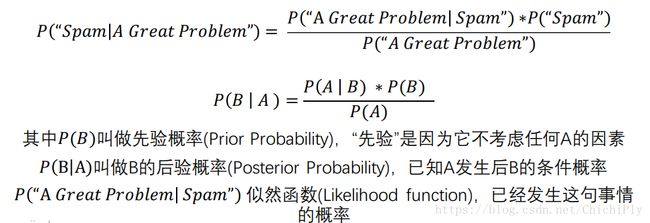

Naive Bayes computes a posterior probability. The posterior P(B|A) is defined as the probability that B occurs given that A has already occurred.

Its theoretical basis is the following formula:
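For reference, the formula meant here is presumably Bayes' theorem, written below with the same A and B as in the definition. The second expression is the form a spam filter uses; it additionally assumes the "naive" conditional independence of the words $w_1, \dots, w_n$ given the class (these symbols are introduced here for illustration and are not taken from the original figure):

$$
P(B \mid A) = \frac{P(A \mid B)\, P(B)}{P(A)}
\qquad
P(\text{spam} \mid w_1, \dots, w_n) \propto P(\text{spam}) \prod_{i=1}^{n} P(w_i \mid \text{spam})
$$

The denominator $P(A)$ is the same for spam and ham, so it can be dropped when only the larger of the two posteriors is needed.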

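A manual implementation therefore needs:

1. The words that appear in the emails and how many times each one appears

2. The total number of words in spam and in ham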
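As a quick illustration of these two quantities, here is a minimal sketch using collections.Counter. The three emails are a hypothetical toy corpus, and the names emails, word_counts and total_words are made up for this example:

```python
from collections import Counter

# Hypothetical toy corpus: (text, label) pairs
emails = [
    ("win money now", "spam"),
    ("win a prize now", "spam"),
    ("meeting at noon", "ham"),
]

# One Counter per class
word_counts = {"spam": Counter(), "ham": Counter()}
for text, label in emails:
    word_counts[label].update(text.split())

# 1. Words and how often each one occurs, per class
print(word_counts["spam"]["win"])

# 2. Total number of words per class
total_words = {label: sum(c.values()) for label, c in word_counts.items()}
print(total_words)
```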

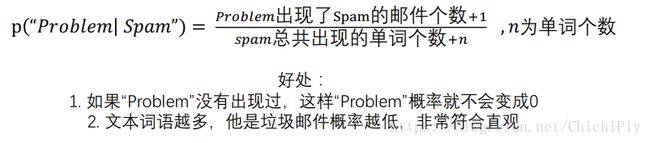

Laplace smoothing is applied here as a correction. It works as follows:
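In symbols, add-one (Laplace) smoothing estimates the probability of a word $w$ in class $c$ as shown below, where $\text{count}(w, c)$ is the number of occurrences of $w$ in class $c$, $N_c$ is the total number of words in class $c$, and $|V|$ is the vocabulary size (this notation is chosen here and may differ from the original figure):

$$
P(w \mid c) = \frac{\text{count}(w, c) + 1}{N_c + |V|}
$$

A word never seen in class $c$ then gets probability $1 / (N_c + |V|)$ instead of 0, so a single unseen word cannot zero out the whole product.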

Multinomial Naive Bayes

There are two ways to implement it: the first uses dicts, the second mimics the model in sklearn.

Using dicts:

    import math

    # Count the words, using separate dicts for spam and ham
    def getWords(X, y):
        spam = {}
        ham = {}
        total = set()  # vocabulary across both classes
        spam_word_num = 0
        ham_word_num = 0
        spam_num = 0
        ham_num = 0
        for text, label in zip(X, y):
            words = text.split()
            if label == 'spam':
                spam_word_num += len(words)
                spam_num += 1
                for word in words:
                    spam[word] = spam.get(word, 0) + 1
                    total.add(word)
            else:
                ham_word_num += len(words)
                ham_num += 1
                for word in words:
                    ham[word] = ham.get(word, 0) + 1
                    total.add(word)
        total_num = len(total)
        spam_word_num = spam_word_num + total_num  # Laplace smoothing: add the vocabulary size to the denominator
        ham_word_num = ham_word_num + total_num
        return spam, ham, spam_num, ham_num, spam_word_num, ham_word_num

    def Predict(spam, ham, spam_num, ham_num, spam_word_num, ham_word_num, X):
        words = X.split()
        # Start from the log prior of each class
        spam_pro = math.log(spam_num / (spam_num + ham_num))
        ham_pro = math.log(ham_num / (spam_num + ham_num))
        for word in words:
            # The posterior is a product of per-word probabilities, so add their logs;
            # Laplace smoothing adds 1 to every count
            spam_pro += math.log((spam.get(word, 0) + 1) / spam_word_num)
            ham_pro += math.log((ham.get(word, 0) + 1) / ham_word_num)
        if spam_pro > ham_pro:
            return 'spam'
        else:
            return 'ham'

The second approach mimics how the sklearn model works: build a word matrix and convert every document into a row of that matrix.
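To make the target representation concrete, here is a small sketch on a hypothetical three-document corpus. The names docs, vocab, column and matrix are illustrative only, and using a sorted vocabulary with a word-to-column dict is a choice made for this sketch:

```python
# Hypothetical toy corpus, for illustration only
docs = ["free money free", "meeting at noon", "free meeting"]

# A sorted vocabulary gives every word a fixed column
vocab = sorted({word for doc in docs for word in doc.split()})

# A word -> column dict makes each lookup a single hash access
column = {word: j for j, word in enumerate(vocab)}

# One row of counts per document
matrix = []
for doc in docs:
    row = [0] * len(vocab)
    for word in doc.split():
        row[column[word]] += 1
    matrix.append(row)

print(vocab)
for row in matrix:
    print(row)
```

Each row has one entry per vocabulary word, so all documents end up with the same length regardless of how many words they contain.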

    import numpy as np

    # Build the header row of the matrix: every word that appears in any document
    def GetVocabulary(data):
        vocab_set = set()
        for document in data:
            words = document.split()
            for word in words:
                vocab_set.add(word)
        return list(vocab_set)

    vocab_list = GetVocabulary(data_train)

    # Convert one document into a count vector
    def Document2Vector(vocab_list, data):
        word_vector = np.zeros(len(vocab_list))
        words = data.split()
        for word in words:
            if word in vocab_list:
                word_vector[vocab_list.index(word)] += 1  # list.index returns the position of a value
        return word_vector  # entries follow the word order of vocab_list

    # Convert every document into a vector and stack the vectors into a matrix
    train_matrix = []
    for document in data_train.values:
        word_vector = Document2Vector(vocab_list, document)
        train_matrix.append(word_vector)

    # Training: accumulate the per-word counts and total word counts for spam and ham

    def NaiveBayes_train(train_matrix, labels_train):
        labels_train = list(labels_train)  # positional indexing, also safe for a pandas Series
        num_docs = len(train_matrix)
        num_words = len(train_matrix[0])
        spam_vector_count = np.ones(num_words)  # Laplace smoothing: every count starts at 1
        ham_vector_count = np.ones(num_words)
        spam_total_count = num_words  # Laplace smoothing: add the vocabulary size to the denominator
        ham_total_count = num_words
        spam_count = 0
        ham_count = 0
        for i in range(num_docs):
            if i % 500 == 0:
                print('Train on the doc id:' + str(i))
            if labels_train[i] == 'spam':
                spam_vector_count += train_matrix[i]
                spam_total_count += sum(train_matrix[i])
                spam_count += 1
            else:
                ham_vector_count += train_matrix[i]
                ham_total_count += sum(train_matrix[i])
                ham_count += 1
        print(ham_count)
        print(spam_count)
        # Work in log space so that prediction becomes a sum instead of a product
        p_spam_vector = np.log(spam_vector_count / spam_total_count)
        p_ham_vector = np.log(ham_vector_count / ham_total_count)
        return p_spam_vector, np.log(spam_count / num_docs), p_ham_vector, np.log(ham_count / num_docs)

    # Prediction
    def Predict(test_word_vector, p_spam_vector, p_spam, p_ham_vector, p_ham):
        spam = sum(test_word_vector * p_spam_vector) + p_spam
        ham = sum(test_word_vector * p_ham_vector) + p_ham
        if spam > ham:
            return 'spam'
        else:
            return 'ham'
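One reason to work with logs, sketched below under made-up numbers: multiplying many small probabilities underflows to zero in floating point, while summing their logs stays well within range. The 400 words and the probability 1e-3 are hypothetical values chosen only to trigger the effect:

```python
import math

# Hypothetical: a 400-word email, each word with conditional probability 1e-3
probs = [1e-3] * 400

# Direct product: the true value 1e-1200 is far below the smallest float
product = 1.0
for p in probs:
    product *= p

# Sum of logs: an ordinary negative number
log_sum = sum(math.log(p) for p in probs)

print(product)
print(log_sum)
```

With the direct product both classes would score 0.0 and could no longer be compared. The log scores keep their ordering because log is monotonically increasing.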

Calling the sklearn package directly looks like this:

    from sklearn.feature_extraction.text import CountVectorizer

    vectorizer = CountVectorizer()
    data_train_count = vectorizer.fit_transform(X_train)  # fit_transform learns the vocabulary from X_train and returns a large sparse matrix
    data_test_count = vectorizer.transform(X_test)  # transform maps the words in X_test onto that same vocabulary

    from sklearn.naive_bayes import MultinomialNB  # import the Naive Bayes classifier

    clf = MultinomialNB()  # create a model
    clf.fit(data_train_count, labels_train)  # train on the data_train_count matrix from the previous step
    predictions = clf.predict(data_test_count)  # predict
    # Laplace smoothing is built in (alpha=1.0 by default), so it does not need to be implemented explicitly

CountVectorizer is used first to obtain a large sparse matrix. For example:

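    import pandas as pd
    from sklearn.feature_extraction.text import CountVectorizer

    vect = CountVectorizer()
    example = ['whats up', 'howdy', 'hello word']
    result = vect.fit_transform(example)
    print(result)
    print(vect.vocabulary_)  # vect.vocabulary_ is a dict

Output:

    (0, 2) 1
    (0, 3) 1
    (1, 1) 1
    (2, 4) 1
    (2, 0) 1
    {'whats': 3, 'up': 2, 'howdy': 1, 'hello': 0, 'word': 4}

The result returned by fit_transform is a sparse matrix whose columns correspond to the words found in the text. print(result) lists the positions and values of its nonzero entries.

vect.vocabulary_ returns a dict in which the key is a word and the value is the column index of that word in the matrix (not the number of times it occurs).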

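To get only the list of words in the vocabulary:

    vect.get_feature_names()  # removed in scikit-learn 1.2; newer versions use vect.get_feature_names_out()

    ['hello', 'howdy', 'up', 'whats', 'word']

    result.toarray()

    array([[0, 0, 1, 1, 0],
           [0, 1, 0, 0, 0],
           [1, 0, 0, 0, 1]], dtype=int64)

Note that toarray() is called on result, not on vect.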
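To relate the two outputs above: the sparse printout stores only the nonzero (row, column) value triples, while toarray() fills in the zeros. The plain-Python sketch below performs that expansion by hand on the triples shown earlier. It only illustrates the idea and is not how scipy stores or converts the matrix internally; the names entries and dense are made up here:

```python
# Nonzero entries as printed by print(result): (row, column) -> count
entries = {(0, 2): 1, (0, 3): 1, (1, 1): 1, (2, 4): 1, (2, 0): 1}
n_rows, n_cols = 3, 5  # 3 documents, 5 vocabulary words

# Start from all zeros, then write each stored value into place
dense = [[0] * n_cols for _ in range(n_rows)]
for (i, j), value in entries.items():
    dense[i][j] = value

for row in dense:
    print(row)
```

The rows match the array returned by result.toarray(): row 0 is 'whats up', with ones in the columns for 'up' (2) and 'whats' (3).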

TFIDF Bayes

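Introducing a TF-IDF model reweights each term by how rare it is across documents. With ngram_range = (1, 3) the features also include two- and three-word sequences, so some of the relationships between neighbouring words are taken into account.

The code is as follows:

    from sklearn.feature_extraction.text import TfidfVectorizer

    tfidf = TfidfVectorizer(ngram_range=(1, 3), use_idf=True, smooth_idf=True, stop_words='english')
    data_train_tf = tfidf.fit_transform(X_train)
    data_test_tf = tfidf.transform(X_test)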
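As a rough illustration of what smooth_idf does, the sketch below computes only the smoothed idf term, ln((1 + n) / (1 + df)) + 1, which is the formula the scikit-learn documentation gives for smooth_idf=True. The corpus is a hypothetical, already tokenized toy example. The real TfidfVectorizer additionally multiplies by term frequency, L2-normalizes each row by default, and with the settings above also removes English stop words and builds n-grams:

```python
import math

# Hypothetical toy corpus, already tokenized
docs = [["free", "money"], ["free", "meeting"], ["meeting", "at", "noon"]]
n_docs = len(docs)

# Document frequency: number of documents that contain each term
df = {}
for doc in docs:
    for term in set(doc):
        df[term] = df.get(term, 0) + 1

# Smoothed idf: ln((1 + n) / (1 + df)) + 1
idf = {term: math.log((1 + n_docs) / (1 + d)) + 1 for term, d in df.items()}

for term in sorted(idf):
    print(term, round(idf[term], 3))
```

Terms that occur in fewer documents ("money") get a larger weight than terms that occur in more of them ("free").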