Introduction to VGGNet and a TensorFlow Implementation

Implementing VGGNet in TensorFlow

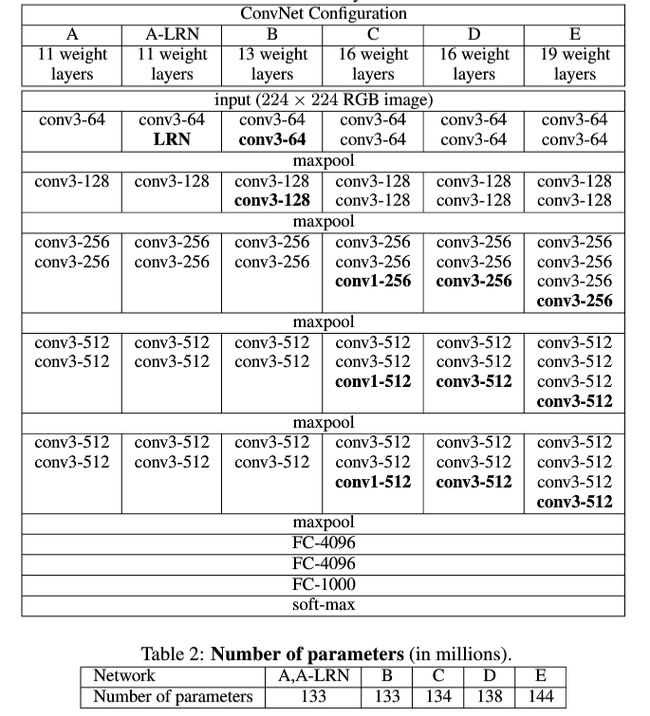

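Introduction: VGGNet is a deep convolutional neural network developed by the University of Oxford (the Visual Geometry Group, hence the name VGG) together with Google DeepMind [1]. VGGNet explored how the depth of a convolutional neural network relates to its performance. It repeatedly stacks small 3x3 convolution kernels and 2x2 max-pooling layers, and with this design it successfully built convolutional neural networks 16 to 19 layers deep.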


Related reading:

AlexNet and a TensorFlow implementation

GoogleInceptionNet_V3 and a TensorFlow implementation

ResNet_V2 and a TensorFlow implementation

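Modular approach:

This article implements VGGNet as a set of small, commented modules. The goal is to help beginners quickly understand the network's structure and how it is implemented.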

- Convolutional layer: conv_op()

- Fully connected layer: fc_op()

- Max-pooling layer: mpool_op()

- VGGNet-16 network structure: inference_op()

- Timing: time_tensorflow_run()

- Benchmark main function: run_benchmark()

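- VGGNet architecture:

Note: the network implemented in this article is configuration D (the 16-layer VGG-16) from the table of VGGNet configurations A to E in the paper [1].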

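- Receptive field and advantages of VGGNet:

VGGNet frequently stacks several identical 3x3 convolutional layers, and this turns out to be a very useful design. Two stacked 3x3 convolutional layers have the same receptive field as a single 5x5 convolutional layer: each output pixel depends on a 5x5 neighborhood of the input. Likewise, three stacked 3x3 convolutional layers have the receptive field of a single 7x7 convolutional layer. This approach has two advantages:

- Three 3x3 convolutional layers have fewer parameters than one 7x7 convolutional layer. With C input and C output channels, the stack has 3 x (3 x 3 x C x C) = 27C² weights, while the single layer has 7 x 7 x C x C = 49C² (biases ignored).

- Three 3x3 convolutional layers apply more nonlinear transformations than one 7x7 layer (three instead of one), because an activation function can follow each convolutional layer.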
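The two claims above can be checked with a few lines of arithmetic. The sketch below is only an illustration and is not part of the original code. The helper names `stacked_receptive_field` and `conv_weights` are hypothetical. It assumes stride-1 convolutions (as in every VGGNet conv layer) and C input and C output channels, and it ignores biases.

```python
def stacked_receptive_field(num_layers: int, k: int = 3) -> int:
    """Receptive field of num_layers stacked k x k convolutions with stride 1.

    Each extra stride-1 layer widens the receptive field by (k - 1) pixels.
    """
    return 1 + num_layers * (k - 1)


def conv_weights(k: int, channels: int) -> int:
    """Weights of one k x k conv layer with `channels` inputs and outputs (no bias)."""
    return k * k * channels * channels


if __name__ == "__main__":
    C = 512
    print(stacked_receptive_field(2))  # same receptive field as one 5x5 layer
    print(stacked_receptive_field(3))  # same receptive field as one 7x7 layer
    print(3 * conv_weights(3, C))      # 27 * C^2 weights for three 3x3 layers
    print(conv_weights(7, C))          # 49 * C^2 weights for one 7x7 layer
```

For any channel count, the 3x3 stack needs 27/49 (about 55%) of the weights of the single 7x7 layer.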

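Imports:

```python
# This code uses the TensorFlow 1.x graph API (tf.contrib, tf.placeholder, tf.Session).
# tf.contrib was removed in TensorFlow 2.x, and the others live only under tf.compat.v1 there.
from datetime import datetime
import math
import time

import tensorflow as tf
```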

Convolutional layer:

```python
# Create a convolutional layer and append its parameters to the parameter list p
def conv_op(input_op, name, kh, kw, n_out, dh, dw, p):
    '''
    input_op: input tensor
    name: name of this layer
    kh: kernel height    kw: kernel width
    n_out: number of kernels, i.e. number of output channels
    dh: stride along the height    dw: stride along the width
    p: parameter list
    '''
    n_in = int(input_op.get_shape()[-1])  # number of input channels

    with tf.name_scope(name) as scope:
        # Initialize the kernel with the Xavier (Glorot) initializer
        kernel = tf.get_variable(scope + "w", shape=[kh, kw, n_in, n_out],
                                 dtype=tf.float32,
                                 initializer=tf.contrib.layers.xavier_initializer())
        # Convolution with 'SAME' padding
        conv = tf.nn.conv2d(input_op, kernel, (1, dh, dw, 1), padding='SAME')
        # Initialize the bias b to zero
        bias_init_val = tf.constant(0.0, shape=[n_out], dtype=tf.float32)
        biases = tf.Variable(bias_init_val, trainable=True, name='b')
        # w*x + b
        z = tf.nn.bias_add(conv, biases)
        # ReLU activation
        activation = tf.nn.relu(z, name=scope)
        # Append this layer's parameters to p
        p += [kernel, biases]
        # Return the activation
        return activation
```
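conv_op (and mpool_op below) uses padding='SAME'. With 'SAME' padding, TensorFlow produces an output size of ceil(input / stride). It splits the required padding between the two sides and puts any odd extra pixel at the bottom/right. The following pure-Python sketch reproduces that rule so that shapes can be checked by hand. `same_padding` is a hypothetical helper, not a TensorFlow function.

```python
import math


def same_padding(in_size: int, k: int, stride: int):
    """Output size and (before, after) padding for TensorFlow-style 'SAME' padding."""
    out_size = math.ceil(in_size / stride)
    # Total padding needed so that out_size windows of size k fit into the input
    pad_total = max((out_size - 1) * stride + k - in_size, 0)
    pad_before = pad_total // 2
    pad_after = pad_total - pad_before  # the extra pixel, if any, goes after
    return out_size, (pad_before, pad_after)


if __name__ == "__main__":
    # 3x3 kernel with stride 1, as in every conv_op call of VGGNet-16
    print(same_padding(224, k=3, stride=1))
    # 2x2 window with stride 2, as in every mpool_op call
    print(same_padding(224, k=2, stride=2))
```

A 3x3 kernel with stride 1 gets one pixel of padding on each side and keeps the spatial size unchanged. As a result, only the pooling layers shrink the feature maps in VGGNet-16.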

Fully connected layer:

```python
# Create a fully connected layer and append its parameters to the parameter list p
def fc_op(input_op, name, n_out, p):
    n_in = int(input_op.get_shape()[-1])  # number of input features

    with tf.name_scope(name) as scope:
        # Initialize the weights with the Xavier (Glorot) initializer
        kernel = tf.get_variable(scope + "w", shape=[n_in, n_out],
                                 dtype=tf.float32,
                                 initializer=tf.contrib.layers.xavier_initializer())
        # Initialize the bias b to 0.1
        biases = tf.Variable(tf.constant(0.1, shape=[n_out], dtype=tf.float32),
                             name='b')
        # relu(x*w + b)
        activation = tf.nn.relu_layer(input_op, kernel, biases, name=scope)
        # Append this layer's parameters to p
        p += [kernel, biases]
        return activation
```
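`tf.nn.relu_layer(x, weights, biases)` computes relu(matmul(x, weights) + biases). The pure-Python sketch below shows that arithmetic on small nested lists, without TensorFlow. `dense_relu` is a hypothetical name used only for illustration.

```python
def dense_relu(x, weights, biases):
    """relu(x @ weights + biases) for x of shape [batch, n_in] and weights [n_in, n_out]."""
    n_in, n_out = len(weights), len(weights[0])
    out = []
    for row in x:
        if len(row) != n_in:
            raise ValueError("input width must match the number of weight rows")
        # Affine part: one dot product per output unit, plus its bias
        z = [sum(row[i] * weights[i][j] for i in range(n_in)) + biases[j]
             for j in range(n_out)]
        out.append([max(v, 0.0) for v in z])  # ReLU
    return out


if __name__ == "__main__":
    x = [[1.0, -2.0]]
    w = [[0.5, -1.0],
         [0.25, 1.0]]
    b = [0.1, 0.1]
    print(dense_relu(x, w, b))
```

The second output unit has a negative pre-activation, so the ReLU clips it to zero.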

Max-pooling layer:

```python
# Create a max-pooling layer
def mpool_op(input_op, name, kh, kw, dh, dw):
    return tf.nn.max_pool(input_op, ksize=[1, kh, kw, 1],
                          strides=[1, dh, dw, 1],
                          padding='SAME', name=name)
```
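Every conv_op uses stride 1 with 'SAME' padding, so only the five 2x2, stride-2 pooling layers change the spatial size. The sketch below assumes the 224x224x3 input used in run_benchmark later in this article. It traces the feature-map shape after each pooling layer and the flattened length that reaches fc6. `trace_shapes` and `BLOCK_CHANNELS` are hypothetical names.

```python
import math

# Output channels of the five convolutional blocks of VGGNet-16 (configuration D)
BLOCK_CHANNELS = [64, 128, 256, 512, 512]


def trace_shapes(image_size: int = 224):
    """Return (height, width, channels) after each pooling layer, assuming a square input."""
    size = image_size
    shapes = []
    for channels in BLOCK_CHANNELS:
        # 3x3 stride-1 'SAME' convolutions keep the size; the 2x2 stride-2 pool halves it
        size = math.ceil(size / 2)
        shapes.append((size, size, channels))
    return shapes


if __name__ == "__main__":
    shapes = trace_shapes(224)
    for i, shape in enumerate(shapes, start=1):
        print(f"pool{i}: {shape}")
    h, w, c = shapes[-1]
    print("flattened length fed to fc6:", h * w * c)
```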

VGGNet-16 network structure:

```python
# Build the VGGNet-16 network
def inference_op(input_op, keep_prob):
    # Initialize the parameter list
    p = []

    # Block 1: two conv layers + one max-pooling layer
    conv1_1 = conv_op(input_op, name="conv1_1", kh=3, kw=3, n_out=64, dh=1, dw=1, p=p)
    conv1_2 = conv_op(conv1_1, name="conv1_2", kh=3, kw=3, n_out=64, dh=1, dw=1, p=p)
    pool1 = mpool_op(conv1_2, name="pool1", kh=2, kw=2, dh=2, dw=2)

    # Block 2: two conv layers + one max-pooling layer
    conv2_1 = conv_op(pool1, name="conv2_1", kh=3, kw=3, n_out=128, dh=1, dw=1, p=p)
    conv2_2 = conv_op(conv2_1, name="conv2_2", kh=3, kw=3, n_out=128, dh=1, dw=1, p=p)
    pool2 = mpool_op(conv2_2, name="pool2", kh=2, kw=2, dh=2, dw=2)

    # Block 3: three conv layers + one max-pooling layer
    conv3_1 = conv_op(pool2, name="conv3_1", kh=3, kw=3, n_out=256, dh=1, dw=1, p=p)
    conv3_2 = conv_op(conv3_1, name="conv3_2", kh=3, kw=3, n_out=256, dh=1, dw=1, p=p)
    conv3_3 = conv_op(conv3_2, name="conv3_3", kh=3, kw=3, n_out=256, dh=1, dw=1, p=p)
    pool3 = mpool_op(conv3_3, name="pool3", kh=2, kw=2, dh=2, dw=2)

    # Block 4: three conv layers + one max-pooling layer
    conv4_1 = conv_op(pool3, name="conv4_1", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    conv4_2 = conv_op(conv4_1, name="conv4_2", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    conv4_3 = conv_op(conv4_2, name="conv4_3", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    pool4 = mpool_op(conv4_3, name="pool4", kh=2, kw=2, dh=2, dw=2)

    # Block 5: three conv layers + one max-pooling layer
    # Note: the number of output channels stops growing here and stays at 512
    conv5_1 = conv_op(pool4, name="conv5_1", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    conv5_2 = conv_op(conv5_1, name="conv5_2", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    conv5_3 = conv_op(conv5_2, name="conv5_3", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    pool5 = mpool_op(conv5_3, name="pool5", kh=2, kw=2, dh=2, dw=2)

    # Flatten the output of block 5
    shp = pool5.get_shape()
    flattened_shape = int(shp[1]) * int(shp[2]) * int(shp[3])
    resh1 = tf.reshape(pool5, [-1, flattened_shape], name="resh1")

    # Fully connected layer with ReLU, followed by a dropout layer
    fc6 = fc_op(resh1, name="fc6", n_out=4096, p=p)
    fc6_drop = tf.nn.dropout(fc6, keep_prob, name="fc6_drop")

    # Another fully connected layer with ReLU, followed by a dropout layer
    fc7 = fc_op(fc6_drop, name="fc7", n_out=4096, p=p)
    fc7_drop = tf.nn.dropout(fc7, keep_prob, name="fc7_drop")

    # Final fully connected layer with 1000 outputs (the ImageNet classes).
    # fc_op applies ReLU, so fc8 here is ReLU(logits); in the VGG paper the
    # last layer feeds softmax directly, without a ReLU.
    fc8 = fc_op(fc7_drop, name="fc8", n_out=1000, p=p)

    # Class probabilities and the predicted class
    softmax = tf.nn.softmax(fc8)
    prediction = tf.argmax(softmax, 1)
    return prediction, softmax, fc8, p
```
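The list p returned by inference_op holds one weight tensor and one bias tensor for each of the 13 conv layers and 3 fully connected layers. Counting those parameters gives the size of the model. The sketch below writes out the layer sizes of inference_op by hand. That table is an assumption: if you change the network, you must keep it in sync yourself. `CONV_LAYERS`, `FC_LAYERS` and `count_params` are hypothetical names.

```python
# (kernel height, kernel width, input channels, output channels) for the 13 conv layers
CONV_LAYERS = [
    (3, 3, 3, 64), (3, 3, 64, 64),
    (3, 3, 64, 128), (3, 3, 128, 128),
    (3, 3, 128, 256), (3, 3, 256, 256), (3, 3, 256, 256),
    (3, 3, 256, 512), (3, 3, 512, 512), (3, 3, 512, 512),
    (3, 3, 512, 512), (3, 3, 512, 512), (3, 3, 512, 512),
]
# (input features, output features) for fc6, fc7 and fc8; 7 * 7 * 512 comes from pool5
FC_LAYERS = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]


def count_params():
    """Return (conv parameters, fully connected parameters), weights plus biases."""
    conv = sum(kh * kw * cin * cout + cout for kh, kw, cin, cout in CONV_LAYERS)
    fc = sum(n_in * n_out + n_out for n_in, n_out in FC_LAYERS)
    return conv, fc


if __name__ == "__main__":
    conv, fc = count_params()
    print(f"conv: {conv:,}  fc: {fc:,}  total: {conv + fc:,}")
```

By this count, about 89% of the roughly 138 million parameters sit in the fully connected layers. fc6 alone, with its 25088 x 4096 weight matrix, accounts for about 74%.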

Timing:

```python
# Measure the running time of `target`.
# num_batches is a global that must be defined before run_benchmark() is called.
def time_tensorflow_run(session, target, feed, info_string):
    num_steps_burn_in = 10  # warm-up iterations, excluded from the statistics
    total_duration = 0.0  # sum of per-iteration times
    total_duration_squared = 0.0  # sum of squared per-iteration times

    for i in range(num_batches + num_steps_burn_in):
        start_time = time.time()
        _ = session.run(target, feed_dict=feed)
        duration = time.time() - start_time  # time of this iteration
        if i >= num_steps_burn_in:
            if not i % 10:
                print("%s : step %d, duration = %.3f" %
                      (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration * duration

    mn = total_duration / num_batches  # mean
    vr = total_duration_squared / num_batches - mn * mn  # variance
    sd = math.sqrt(max(vr, 0.0))  # standard deviation (clamped in case rounding makes vr slightly negative)
    print("%s : %s across %d steps, %.3f +/- %.3f sec/batch" %
          (datetime.now(), info_string, num_batches, mn, sd))
```
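time_tensorflow_run skips the first num_steps_burn_in iterations, because warm-up runs tend to be slower than steady-state runs. It then reports the mean and the standard deviation, computing the variance as Var = E[x²] - (E[x])². The same statistics can be written as a standalone function over a list of measured durations. `summarize_durations` is a hypothetical helper, and the sample durations in the usage example are made up for illustration.

```python
import math
from typing import List, Tuple


def summarize_durations(durations: List[float], burn_in: int = 10) -> Tuple[float, float]:
    """Mean and standard deviation of durations after dropping the first burn_in samples.

    Uses Var[x] = E[x^2] - E[x]^2, the same formula as time_tensorflow_run.
    """
    timed = durations[burn_in:]
    if not timed:
        raise ValueError("need more samples than burn_in")
    n = len(timed)
    mean = sum(timed) / n
    var = sum(d * d for d in timed) / n - mean * mean
    return mean, math.sqrt(max(var, 0.0))  # clamp tiny negative values caused by rounding


if __name__ == "__main__":
    # Made-up durations: two slow warm-up runs followed by steady runs
    samples = [1.5, 0.9, 0.29, 0.28, 0.29, 0.28]
    mean, sd = summarize_durations(samples, burn_in=2)
    print("%.3f +/- %.3f sec/batch" % (mean, sd))
```

If the two warm-up samples were included, the mean would more than double. The burn-in removes that distortion.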

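Benchmark main function:

Note: my GPU is far too weak to train a complete VGGNet. The original VGGNet was trained on 4 GTX TITAN GPUs in parallel, and even so each network took 2 to 3 weeks to train. So here we only measure the time of the forward and backward passes, just to get a feel for it. In a few days I will publish another post that uses transfer learning (fine-tuning) for image recognition.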

```python
def run_benchmark():
    with tf.Graph().as_default():
        image_size = 224
        # Random images stand in for real data; only the timing matters here
        images = tf.Variable(tf.random_normal([batch_size, image_size, image_size, 3],
                                              dtype=tf.float32,
                                              stddev=1e-1))
        keep_prob = tf.placeholder(tf.float32)
        predictions, softmax, fc8, p = inference_op(images, keep_prob)

        init = tf.global_variables_initializer()
        with tf.Session() as sess:
            sess.run(init)

            # Forward pass only (dropout disabled)
            time_tensorflow_run(sess, predictions, {keep_prob: 1.0}, "Forward")

            # Forward + backward: gradients of a stand-in L2 loss w.r.t. all parameters
            objective = tf.nn.l2_loss(fc8)
            grad = tf.gradients(objective, p)
            time_tensorflow_run(sess, grad, {keep_prob: 0.5}, "Forward-backward")
```

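Tip: grad = tf.gradients(objective, p) returns a list containing the gradient of objective with respect to each tensor in p. If you are not familiar with it, see this post of mine.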
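The objective above is `tf.nn.l2_loss(fc8)`, which is sum(fc8 ** 2) / 2. Its gradient with respect to its input is therefore the input itself. The pure-Python sketch below checks this with a central finite difference. `l2_loss` and `numerical_grad` are hypothetical helpers written for this example, and they do not call TensorFlow.

```python
from typing import Callable, List


def l2_loss(t: List[float]) -> float:
    """Same formula as tf.nn.l2_loss: sum(t ** 2) / 2."""
    return sum(v * v for v in t) / 2


def numerical_grad(f: Callable[[List[float]], float], x: List[float],
                   eps: float = 1e-6) -> List[float]:
    """Central-difference estimate of df/dx_i for every element of x."""
    grad = []
    for i in range(len(x)):
        plus = list(x)
        minus = list(x)
        plus[i] += eps
        minus[i] -= eps
        grad.append((f(plus) - f(minus)) / (2 * eps))
    return grad


if __name__ == "__main__":
    x = [0.5, -1.0, 2.0]
    print(numerical_grad(l2_loss, x))  # approximately equal to x itself
```

In run_benchmark, TensorFlow propagates this gradient back through fc8 and every earlier layer. The result is one gradient tensor per entry of p, which is why the "Forward-backward" timing includes a full backpropagation pass.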

Running the benchmark:

```python
batch_size = 32  # images per batch
num_batches = 100  # timed iterations (after the warm-up)
run_benchmark()
```

Output:

```
2019-01-20 12:14:16.703187 : step 0, duration = 0.290
2019-01-20 12:14:19.577814 : step 10, duration = 0.286
2019-01-20 12:14:22.442805 : step 20, duration = 0.288
2019-01-20 12:14:25.325368 : step 30, duration = 0.287
2019-01-20 12:14:28.204288 : step 40, duration = 0.290
2019-01-20 12:14:31.079623 : step 50, duration = 0.285
2019-01-20 12:14:33.962619 : step 60, duration = 0.291
2019-01-20 12:14:36.850861 : step 70, duration = 0.290
2019-01-20 12:14:39.731777 : step 80, duration = 0.289
2019-01-20 12:14:42.616316 : step 90, duration = 0.291
2019-01-20 12:14:45.213767 : Forward across 100 steps, 0.288 +/- 0.002 sec/batch
```
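The summary line can be turned into a throughput figure. The numbers below are copied from this run (batch_size = 32, 0.288 sec/batch for the forward pass). They depend on the GPU used and are not a general result.

```python
batch_size = 32        # from the benchmark settings above
sec_per_batch = 0.288  # mean forward time reported in the output above

images_per_sec = batch_size / sec_per_batch
print("forward throughput: %.1f images/sec" % images_per_sec)
```

This is consistent with the log itself: consecutive printed steps are 10 iterations apart, and their timestamps differ by roughly 2.9 s, about 10 x 0.288 s.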

[1] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition[J]. arXiv preprint arXiv:1409.1556, 2014.

[2] Huang Wenjian, Tang Yuan. TensorFlow实战 (TensorFlow in Practice)[M].

If anything here is poorly explained or wrong, please leave me a comment. Thanks for reading (a like would make me very happy)~