k8s version: kubeadm v1.13.4

metrics-server

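Starting with Kubernetes 1.8, resource usage metrics such as container CPU and memory usage are available through the Metrics API. Users can access these metrics directly, for example with the kubectl top command, and controllers in the cluster, such as the Horizontal Pod Autoscaler, can use them to make decisions.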

Deployment files:

auth-delegator.yaml metrics-apiservice.yaml metrics-server-service.yaml

auth-reader.yaml metrics-server-deployment.yaml resource-reader.yaml

for i in auth-delegator.yaml auth-reader.yaml metrics-apiservice.yaml \
         metrics-server-deployment.yaml metrics-server-service.yaml resource-reader.yaml; do
  wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/metrics-server/$i
done

The downloaded files must be modified before they can be used. The versions used here are:

auth-delegator.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

auth-reader.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

metrics-apiservice.yaml

apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100

metrics-server-deployment.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: metrics-server

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: v1

kind: ConfigMap

metadata:

name: metrics-server-config

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: EnsureExists

data:

NannyConfiguration: |-

apiVersion: nannyconfig/v1alpha1

kind: NannyConfiguration

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: metrics-server-v0.3.1

namespace: kube-system

labels:

k8s-app: metrics-server

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

version: v0.3.1

spec:

selector:

matchLabels:

k8s-app: metrics-server

version: v0.3.1

template:

metadata:

name: metrics-server

labels:

k8s-app: metrics-server

version: v0.3.1

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ''

seccomp.security.alpha.kubernetes.io/pod: 'docker/default'

spec:

priorityClassName: system-cluster-critical

serviceAccountName: metrics-server

containers:

- name: metrics-server

image: k8s.gcr.io/metrics-server-amd64:v0.3.1

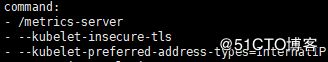

command:

- /metrics-server

- --kubelet-insecure-tls

- --kubelet-preferred-address-types=InternalIP

ports:

- containerPort: 443

name: https

protocol: TCP

- name: metrics-server-nanny

image: k8s.gcr.io/addon-resizer:1.8.4

resources:

limits:

cpu: 100m

memory: 300Mi

requests:

cpu: 5m

memory: 50Mi

env:

- name: MY_POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: MY_POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: metrics-server-config-volume

mountPath: /etc/config

command:

- /pod_nanny

- --config-dir=/etc/config

#- --cpu={{ base_metrics_server_cpu }}

- --cpu=20m

- --extra-cpu=0.5m

#- --memory={{ base_metrics_server_memory }}

#- --extra-memory={{ metrics_server_memory_per_node }}Mi

- --memory=50Mi

- --extra-memory=5Mi

- --threshold=5

- --deployment=metrics-server-v0.3.1

- --container=metrics-server

- --poll-period=300000

- --estimator=exponential

# Specifies the smallest cluster (defined in number of nodes)

# resources will be scaled to.

#- --minClusterSize={{ metrics_server_min_cluster_size }}

- --minClusterSize=1

volumes:

- name: metrics-server-config-volume

configMap:

name: metrics-server-config

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"
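The metrics-server-nanny container runs addon-resizer, which adjusts the resources of the metrics-server container as the cluster grows. The snippet below is a hypothetical sketch, not addon-resizer's own code, of the linear rule its flags describe (a base value plus a per-node increment) and of the --threshold check as we understand it. The manifest's --estimator=exponential additionally rounds the node count up in coarse steps, starting from --minClusterSize, so the pod is resized less often; that rounding is not modeled here.

```python
from typing import Tuple

# Hypothetical sketch, NOT addon-resizer's implementation: the linear sizing
# rule described by the metrics-server-nanny flags in the manifest above.
BASE_CPU_M = 20.0    # --cpu=20m          (millicores)
EXTRA_CPU_M = 0.5    # --extra-cpu=0.5m   (millicores per node)
BASE_MEM_MI = 50.0   # --memory=50Mi
EXTRA_MEM_MI = 5.0   # --extra-memory=5Mi (Mi per node)
THRESHOLD_PCT = 5.0  # --threshold=5


def estimate(nodes: int) -> Tuple[float, float]:
    """Return (cpu millicores, memory Mi) = base + per-node increment * nodes."""
    return BASE_CPU_M + EXTRA_CPU_M * nodes, BASE_MEM_MI + EXTRA_MEM_MI * nodes


def needs_resize(current: float, expected: float,
                 threshold_pct: float = THRESHOLD_PCT) -> bool:
    """Assumed rule: rewrite resources only if they deviate from the expected
    value by more than threshold_pct percent."""
    return abs(current - expected) > expected * threshold_pct / 100


for n in (1, 3, 10):
    cpu, mem = estimate(n)
    print(f"{n} nodes -> cpu {cpu}m, memory {mem}Mi")
```

The threshold keeps the nanny from restarting metrics-server over small differences: with a 5% threshold, an expected 50Mi tolerates anything from 47.5Mi to 52.5Mi.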

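Port 10250 is the kubelet's HTTPS port, and when metrics-server connects to it, it verifies the certificate the kubelet presents. In a kubeadm cluster the kubelet serving certificates are typically self-signed rather than issued by the cluster CA, so this verification fails. Adding --kubelet-insecure-tls tells metrics-server not to verify the kubelets' serving certificates. Earlier versions used the --source= parameter to disable this verification.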
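metrics-server itself is written in Go; purely as an analogy, the Python ssl sketch below shows what skipping verification means on the client side. The connection is still encrypted, but the server's certificate (here, the kubelet's) is no longer checked. The helper name kubelet_tls_context is hypothetical.

```python
import ssl


def kubelet_tls_context(insecure_skip_verify: bool) -> ssl.SSLContext:
    """Hypothetical helper: a client TLS context for talking to a kubelet on :10250."""
    # Default: verify the server's certificate chain and its hostname.
    ctx = ssl.create_default_context()
    if insecure_skip_verify:
        # Analogy to --kubelet-insecure-tls: traffic is still encrypted,
        # but the server certificate is accepted without any verification.
        ctx.check_hostname = False  # must be turned off before setting CERT_NONE
        ctx.verify_mode = ssl.CERT_NONE
    return ctx
```

This trades away protection against a spoofed kubelet in exchange for working with self-signed certificates, which is why it is usually reserved for test clusters.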

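The metrics-server container cannot resolve the nodes' hostnames through CoreDNS (10.96.0.10:53), yet by default metrics-server connects to each node by its hostname. Add the flag "--kubelet-preferred-address-types=InternalIP" so that it connects to each node's internal IP address instead.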
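The address choice can be modeled as: walk the preferred address types in order and return the first matching entry from the node's status.addresses. This is a simplified, hypothetical sketch (pick_node_address and the sample addresses are made up). As far as we know, metrics-server's default preference list starts with Hostname, which is why the flag above is needed.

```python
from typing import Dict, List, Optional, Sequence


def pick_node_address(addresses: List[Dict[str, str]],
                      preferred_types: Sequence[str]) -> Optional[str]:
    """Return the address of the first type in preferred_types that the node has."""
    for addr_type in preferred_types:          # preference order wins over list order
        for addr in addresses:
            if addr.get("type") == addr_type:
                return addr.get("address")
    return None                                # node has none of the preferred types


# Hypothetical node.status.addresses as reported by the API server.
node_addresses = [
    {"type": "InternalIP", "address": "192.168.1.21"},
    {"type": "Hostname", "address": "k8s-node1"},
]

print(pick_node_address(node_addresses, ["Hostname", "InternalIP"]))  # k8s-node1
print(pick_node_address(node_addresses, ["InternalIP"]))              # 192.168.1.21
```

With Hostname first, the returned name still has to be resolved through DNS; restricting the list to InternalIP yields an address that can be dialed directly.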

metrics-server-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "Metrics-server"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: https

resource-reader.yaml

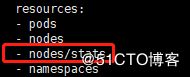

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "extensions"
  resources:
  - deployments
  verbs:
  - get
  - list
  - update
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

After the deployment completes, check its status.

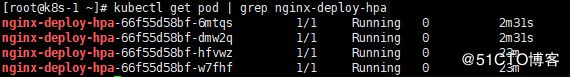

Run kubectl get pod -n kube-system to check whether the metrics-server pod is running.


Then run kubectl top pod or kubectl top node to view resource usage.
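kubectl top reads the Metrics API registered by metrics-apiservice.yaml; the raw data can also be fetched with kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes. Usage values come back as Kubernetes quantity strings, typically nanocores for CPU (for example 123456789n) and Ki for memory. The helpers below are a minimal sketch for converting those strings into cores and bytes, assuming only the suffixes listed in them appear.

```python
from decimal import Decimal

# Only the suffixes assumed to appear in Metrics API output are handled here.
CPU_SUFFIXES = {"n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3")}
MEMORY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_cores(quantity: str) -> Decimal:
    """'250m' -> 0.25 cores, '123456789n' -> 0.123456789 cores, '2' -> 2 cores."""
    suffix = quantity[-1:]
    if suffix in CPU_SUFFIXES:
        return Decimal(quantity[:-1]) * CPU_SUFFIXES[suffix]
    return Decimal(quantity)


def memory_bytes(quantity: str) -> int:
    """'12345Ki' -> 12641280 bytes, '64Mi' -> 67108864 bytes."""
    for suffix, factor in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[:-len(suffix)]) * factor
    return int(quantity)  # plain bytes
```

Decimal is used instead of float so that nanocore values convert exactly.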

If you get the following error, try restarting the kubelet on the node where the metrics-server pod is running:

Error from server (ServiceUnavailable): the server is currently unable to handle the request (get pods.metrics.k8s.io)

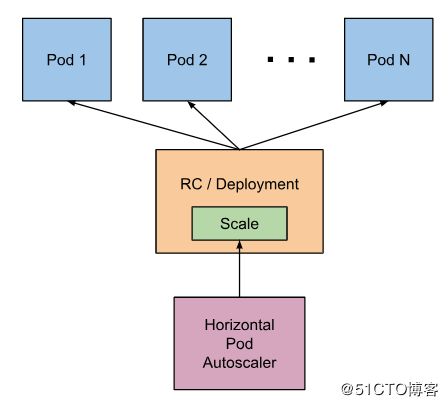

HPA

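The Horizontal Pod Autoscaler automatically scales the number of pods in a replication controller, deployment, or replica set based on observed CPU utilization (or, with custom metrics support, on some other application-provided metrics). Note that Horizontal Pod Autoscaling does not apply to objects that can't be scaled, such as DaemonSets.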

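If some of a pod's containers do not have the relevant resource request set, CPU utilization for the pod is not defined, and the autoscaler will not take any action for that metric.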
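The per-pod CPU utilization the HPA works with is the pod's CPU usage as a percentage of the sum of its containers' CPU requests. A minimal sketch (the function name is hypothetical) that returns None when any container lacks a request, matching the rule above:

```python
from typing import Optional, Sequence


def pod_cpu_utilization(usage_m: Sequence[float],
                        requests_m: Sequence[Optional[float]]) -> Optional[float]:
    """CPU utilization of one pod, in percent of its requested CPU.

    usage_m and requests_m hold per-container values in millicores. If any
    container has no CPU request, utilization is undefined and None is returned.
    """
    if any(r is None for r in requests_m):
        return None
    total_request = sum(r for r in requests_m if r is not None)
    if total_request <= 0:
        return None
    return 100.0 * sum(usage_m) / total_request
```

For the nginx-deploy-hpa pods defined below (cpu request 30m), a pod using 27m is at 90% utilization, three times the 30% target.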

The scaling algorithm is: desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]
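A simplified sketch of the formula, with two behaviors of the real controller added as labeled assumptions: the result is clamped to minReplicas/maxReplicas, and no scaling happens while the ratio stays within the default tolerance of 0.1 (kube-controller-manager's --horizontal-pod-autoscaler-tolerance). Missing metrics, not-yet-ready pods, and the scale-down stabilization window are ignored.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int, max_replicas: int, tolerance: float = 0.1) -> int:
    """desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)],
    clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:  # close enough to the target: keep current size
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 2 replicas, target 30% CPU, pods averaging 90% -> ceil(2 * 3) = 6
print(desired_replicas(2, 90, 30, min_replicas=2, max_replicas=10))  # 6
```

Using ceil rather than rounding means the autoscaler errs toward one extra pod instead of one too few.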

cat nginx-deploy-hpa.yaml

apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: nginx-deploy-hpa
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx-hpa
    spec:
      containers:
      - name: nginx-hpa
        image: nginx:1.8
        ports:
        - containerPort: 80
        resources:
          requests:
            cpu: 30m
            memory: 30Mi
          limits:
            cpu: 30m
            memory: 30Mi

Create the HPA

Option 1: with a command

kubectl autoscale deploy nginx-deploy-hpa --min=2 --max=10 --cpu-percent=30

Option 2: with a manifest file

vi nginx-hpa.yaml

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-deploy-hpa
  namespace: default
spec:
  maxReplicas: 10
  minReplicas: 2
  scaleTargetRef:
    apiVersion: extensions/v1beta1
    kind: Deployment
    name: nginx-deploy-hpa
  targetCPUUtilizationPercentage: 30

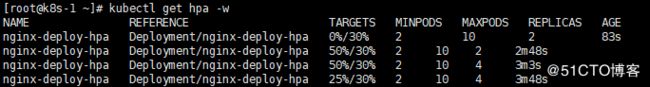

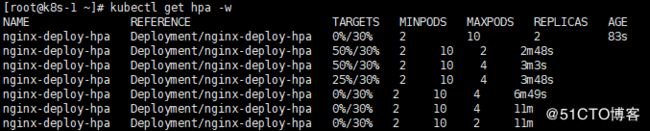

Put the pods under load: the number of pods increases automatically.
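The tool used for the load test is not specified. One option, sketched here under assumptions, is a small concurrent HTTP client built only on the Python standard library. The target URL is hypothetical: expose nginx-deploy-hpa first (for example through a Service) and point the script at it.

```python
import concurrent.futures
import http.client
import urllib.request
from typing import Tuple


def _hit(url: str, timeout: float) -> bool:
    """Send one GET request; True on a 2xx/3xx response, False on any error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()
            return 200 <= resp.status < 400
    except (OSError, http.client.HTTPException):  # URLError/HTTPError are OSError subclasses
        return False


def run_load(url: str, total: int, concurrency: int = 20,
             timeout: float = 2.0) -> Tuple[int, int]:
    """Send `total` requests from `concurrency` threads; return (succeeded, failed)."""
    with concurrent.futures.ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(lambda _: _hit(url, timeout), range(total)))
    ok = sum(results)
    return ok, total - ok
```

For example, run run_load("http://<service-ip>/", total=100000, concurrency=50) while watching kubectl get hpa in another terminal. With a 30m CPU limit per pod, even modest request rates should push utilization well past the 30% target.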

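After the load test stops, the number of pods scales back down automatically once the downscale delay has passed (five minutes by default in this Kubernetes version).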
