distribute cache purge阻塞task的bug测试。

cdh4.2.0:

测试方法:

local.cache.size=650

因为cachedir size和count是存在内存中的HashMap中的,把local.cache.size值设置的足够小,在每次job运行时都会触发delete distribute cache的操作。

又因为是private的,是同步删除,会造成task阻塞。

使用dd来构造1M个的小文件至某一个cache dir目录。观察阻塞时间,bug可以重现。

#!/bin/bash

dir=`for i in {1..11}; do find /home/xxx/hard_disk/${i}/mapred/local -name "*.jar"; done|grep cmdline-jmxclient-0.10.3.jar|head -1|xargs dirname`

for ii in {0..100}

do

mkdir -p ${dir}/dir${ii}

for i in {1..10000};do dd if=/dev/zero of=${dir}/dir${ii}/${i}.jar count=1 bs=10000;done &

done

通过inode可以看到文件数量:

通过hive运行一个简单sql(需要有mapred),观察JT和TT日志:

Jt上相关日志:

Job setup阻塞超过10min以上,从job启动到第一个map运行耗时724s,tt日志上可以观察到delete distribute cache的操作

Jt log:

2014-04-23 11:07:22,195 INFO org.apache.hadoop.mapred.JobInProgress: job_201404221851_0005: nMaps=10 nReduces=3 max=-1 2014-04-23 11:07:22,242 INFO org.apache.hadoop.mapred.JobTracker: Job job_201404221851_0005 added successfully for user 'hdfs' to queue 'default' 2014-04-23 11:07:22,242 INFO org.apache.hadoop.mapred.JobTracker: Initializing job_201404221851_0005 2014-04-23 11:07:22,242 INFO org.apache.hadoop.mapred.JobInProgress: Initializing job_201404221851_0005 2014-04-23 11:07:22,388 INFO org.apache.hadoop.mapred.JobInProgress: jobToken generated and stored with users keys in /tmp/mapred/system/job_201404221851_0005/jobToken 2014-04-23 11:07:22,396 INFO org.apache.hadoop.mapred.JobInProgress: Input size for job job_201404221851_0005 = 2454759843. Number of splits = 10 2014-04-23 11:07:22,397 INFO org.apache.hadoop.mapred.JobInProgress: tip:task_201404221851_0005_m_000000 has split on node:/default-rack/xxxxxx 2014-04-23 11:07:22,400 INFO org.apache.hadoop.mapred.JobInProgress: job_201404221851_0005 LOCALITY_WAIT_FACTOR=1.0 2014-04-23 11:07:22,400 INFO org.apache.hadoop.mapred.JobInProgress: Job job_201404221851_0005 initialized successfully with 10 map tasks and 3 reduce tasks. 2014-04-23 11:07:22,402 INFO org.apache.hadoop.mapred.JobTracker: Adding task (JOB_SETUP) 'attempt_201404221851_0005_m_000011_0' to tip task_201404221851_0005_m_000011, for tracker 'tracker_xxxxx:localhost.localdomain/127.0.0.1:14522' 2014-04-23 11:08:55,931 INFO org.apache.hadoop.mapred.JobTracker: attempt_201404221851_0005_m_000011_0 is 93529 ms debug. 2014-04-23 11:12:15,961 INFO org.apache.hadoop.mapred.JobTracker: attempt_201404221851_0005_m_000011_0 is 293559 ms debug. 2014-04-23 11:15:35,989 INFO org.apache.hadoop.mapred.JobTracker: attempt_201404221851_0005_m_000011_0 is 493587 ms debug. 2014-04-23 11:18:56,019 INFO org.apache.hadoop.mapred.JobTracker: attempt_201404221851_0005_m_000011_0 is 693617 ms debug. 2014-04-23 11:18:56,019 INFO org.apache.hadoop.mapred.JobTracker: Launching task attempt_201404221851_0005_m_000011_0 timed out. ….. 2014-04-23 11:32:03,508 INFO org.apache.hadoop.mapred.JobInProgress$JobSummary: jobId=job_201404221851_0005, submitTime=1398222442091,launchTime=1398222442400,firstMapTaskLaunchTime=1398223166995, firstReduceTaskLaunchTime=1398223895409,firstJobSetupTaskLaunchTime=1398222442401, firstJobCleanupTaskLaunchTime=1398223920769,finishTime=1398223923508,numMaps=10,numSlotsPerMap=1,numReduces=3,numSlotsPerReduce=1,user=hdfs,queue=default,status=SUCCEEDED,mapSlotSeconds=202,reduceSlotsSeconds=82,clusterMapCapacity=27,clusterReduceCapacity=15

cdh4.6.0:

由于在构造TrackerDistributedCacheManager的实例时,会实例化一个CleanupThread对象,在task运行中会启动一个线程异步删除distribute cache,不会出现阻塞状况。

测试方法:

local.cache.size= 20000000 mapreduce.tasktracker.cache.local.keep.pct=0.5 mapreduce.tasktracker.distributedcache.checkperiod=1

在对应的cache dir中构造1M个小文件,多次运行同一个job(private的distribute cache不会共享),distribute cache会一直增大(HashMap中的值)达到指定大小时观察purge行为(打开tt的debug log/-Dhadoop.root.logger=DEBUG,DRFA)

JT日志:

2014-04-23 19:27:01,459 INFO org.apache.hadoop.mapred.JobInProgress: Job job_201404231547_0036 initialized successfully with 10 map tasks and 3 reduce tasks. 2014-04-23 19:27:01,614 INFO org.apache.hadoop.mapred.JobTracker: Adding task (JOB_SETUP) 'attempt_201404231547_0036_m_000011_0' to tip task_201404231547_0036_m_000011, for tracker 'tracker_gd6g12s116-hadooptest-datanode.idc.vipshop.com:localhost.localdomain/127.0.0.1:5580' 2014-04-23 19:27:05,163 INFO org.apache.hadoop.mapred.JobInProgress: Task 'attempt_201404231547_0036_m_000011_0' has completed task_201404231547_0036_m_000011 successfully. .... 2014-04-23 19:27:37,437 INFO org.apache.hadoop.mapred.JobInProgress$JobSummary: jobId=job_201404231547_0036,submitTime=1398252421298,launchTime=1398252421458, firstMapTaskLaunchTime=1398252425316,firstReduceTaskLaunchTime=1398252445408, firstJobSetupTaskLaunchTime=1398252421609,firstJobCleanupTaskLaunchTime=1398252454770,finishTime=1398252457437,numMaps=10,numSlotsPerMap=1,numReduces=3, numSlotsPerReduce=1,user=hdfs,queue=default,status=SUCCEEDED,mapSlotSeconds=189,reduceSlotsSeconds=26,clusterMapCapacity=27,clusterReduceCapacity=15 #从launch到第一个map运行间隔4s

TT日志:

2014-04-23 19:27:00,334 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead 2014-04-23 19:27:00,335 INFO org.apache.hadoop.mapred.TaskTracker: LaunchTaskAction (registerTask): attempt_201404231547_0036_m_000011_0 task's state:UNASSIGNED 2014-04-23 19:27:00,335 INFO org.apache.hadoop.mapred.TaskTracker: Trying to launch : attempt_201404231547_0036_m_000011_0 which needs 1 slots 2014-04-23 19:27:00,335 INFO org.apache.hadoop.mapred.TaskTracker: In TaskLauncher, current free slots : 9 and trying to launch attempt_201404231547_0036_m_000011_0 which needs 1 slots 2014-04-23 19:27:00,396 INFO org.apache.hadoop.filecache.TrackerDistributedCacheManager: Creating 04b0f029-bce2-47ba-ae83-10cc837df172 in /home/vipshop/hard_disk/10/mapred/local/taskTracker/distcache/-5286275044420583325_-1383138604_240564650/bipcluster/tmp/hive-hdfs/hive_2014-04-23_19-26-56_911_7591593033694865463/-mr-10003-work-2737216842579494997 with rwxr-xr-x ..... 2014-04-23 19:27:00,777 INFO org.apache.hadoop.filecache.TrackerDistributedCacheManager: Deleted path /home/vipshop/hard_disk/11/mapred/local/taskTracker/hdfs/distcache/4862822815811779469_-33152971_235118471 as hdfs #1M个小文件异步删除,没有阻塞 2014-04-23 19:27:00,997 INFO org.apache.hadoop.mapred.JvmManager: In JvmRunner constructed JVM ID: jvm_201404231547_0036_m_611176298

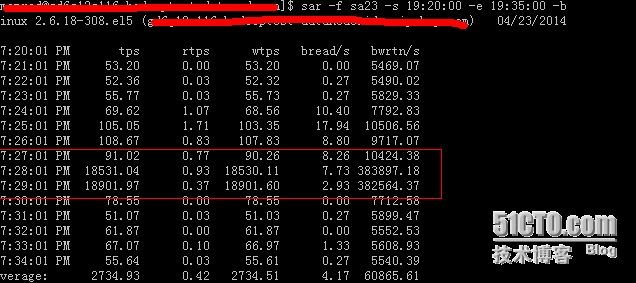

从sar的日志可以看到删除distribute cache的时间和io情况:

结论:

cdh4.2.0中在删除private distribute cache时,是使用的同步的方法,会阻塞当前进程,造成job启动异常(timed out,关于这个放在后面的blog讲)。

cdh4.6.0使用了异步的方式,不会阻塞当前进程。