
Prerequisites

[root@hadoop etc]# yum -y install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi gcc gcc-c++ krb5-devel libtidy libxml2-devel libxslt-devel openldap-devel python-devel sqlite-devel openssl-devel mysql-devel gmp-devel

Install Hue

Extract the prepared Hue tarball, then change into the extracted directory and build it:

[hadoop@hadoop hue-3.9.0-cdh5.7.0]$ make apps

When the build finishes, the compiled Hue apps are in the apps directory. Next, set the environment variables:

export HUE_HOME=/home/hadoop/app/hue-3.9.0-cdh5.7.0

export PATH=$HUE_HOME/build/env/bin:$PATH

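Go to the desktop/conf directory to edit the hue.ini configuration file and adjust the database permissions. (The hue.ini configuration file is under the desktop directory; the startup commands are in build/env/bin.)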

[hadoop@hadoop hue-3.9.0-cdh5.7.0]$ cd desktop/conf/

[hadoop@hadoop conf]$ ll

total 56

-rw-r--r--. 1 hadoop hadoop 49070 Mar 24 2016 hue.ini

-rw-r--r--. 1 hadoop hadoop 1843 Mar 24 2016 log4j.properties

-rw-r--r--. 1 hadoop hadoop 1721 Mar 24 2016 log.conf

[hadoop@hadoop conf]$ pwd

/home/hadoop/app/hue-3.9.0-cdh5.7.0/desktop/conf

[hadoop@hadoop conf]$ vim hue.ini
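The secret_key setting in the hue.ini excerpt that follows should be a long random string. If you would rather not invent one by hand, here is a minimal Python sketch that generates one with the standard `secrets` module. The helper name `make_secret_key`, the 60-character default and the letters-and-digits alphabet are assumptions for this sketch, not documented Hue requirements; the alphabet simply avoids `#` and quote characters in case hue.ini's parser treats them specially.

```python
import secrets
import string

# Assumption: letters and digits only, so no '#' or quote characters that an
# INI-style parser might read as a comment marker or delimiter.
ALPHABET = string.ascii_letters + string.digits


def make_secret_key(length: int = 60) -> str:
    """Hypothetical helper: return a cryptographically random string for secret_key."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))
```

For example, run `print("secret_key=" + make_secret_key())` and paste the printed line into desktop/conf/hue.ini.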

# Set this to a random string, the longer the better.

# This is used for secure hashing in the session store.

secret_key=jFE93j;2[290-eiw.KEiwN2s3['d;/.q[eIW^y#e=+Iei*@Mn

Hadoop integration settings

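1. hdfs-site.xml

<property>
  <name>dfs.webhdfs.enabled</name>
  <value>true</value>
</property>

<property>
  <name>dfs.permissions.enabled</name>
  <value>false</value>
</property>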

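2. core-site.xml

<property>
  <name>hadoop.proxyuser.hue.hosts</name>
  <value>*</value>
</property>

<property>
  <name>hadoop.proxyuser.hue.groups</name>
  <value>*</value>
</property>

<property>
  <name>hadoop.proxyuser.hadoop.hosts</name>
  <value>*</value>
</property>

<property>
  <name>hadoop.proxyuser.hadoop.groups</name>
  <value>*</value>
</property>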

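3. yarn-site.xml

<property>
  <name>yarn.log-aggregation-enable</name>
  <value>true</value>
</property>

<property>
  <name>yarn.log-aggregation.retain-seconds</name>
  <value>259200</value>
</property>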

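4. httpfs-site.xml

<property>
  <name>httpfs.proxyuser.hue.hosts</name>
  <value>*</value>
</property>

<property>
  <name>httpfs.proxyuser.hue.groups</name>
  <value>*</value>
</property>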
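Before copying these files to the other hosts, it can help to confirm that every property above actually landed in the right file. The following Python sketch parses each *-site.xml with `xml.etree.ElementTree` and reports anything missing or different. The expected values are exactly those from steps 1 to 4; the helper names are hypothetical, and the sketch assumes all four files sit in a single configuration directory.

```python
import os
import xml.etree.ElementTree as ET

# Properties and values from steps 1-4 above.
EXPECTED = {
    "hdfs-site.xml": {
        "dfs.webhdfs.enabled": "true",
        "dfs.permissions.enabled": "false",
    },
    "core-site.xml": {
        "hadoop.proxyuser.hue.hosts": "*",
        "hadoop.proxyuser.hue.groups": "*",
        "hadoop.proxyuser.hadoop.hosts": "*",
        "hadoop.proxyuser.hadoop.groups": "*",
    },
    "yarn-site.xml": {
        "yarn.log-aggregation-enable": "true",
        "yarn.log-aggregation.retain-seconds": "259200",
    },
    "httpfs-site.xml": {
        "httpfs.proxyuser.hue.hosts": "*",
        "httpfs.proxyuser.hue.groups": "*",
    },
}


def read_properties(path):
    """Parse a Hadoop *-site.xml file into a {name: value} dict."""
    props = {}
    for prop in ET.parse(path).getroot().findall("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def mismatches(path, expected):
    """Return {name: actual value, or None if absent} for each expected property that differs."""
    actual = read_properties(path)
    return {name: actual.get(name) for name, want in expected.items() if actual.get(name) != want}


def check_conf_dir(conf_dir):
    """Return human-readable problems; an empty list means every file and value matches."""
    problems = []
    for filename, expected in EXPECTED.items():
        path = os.path.join(conf_dir, filename)
        if not os.path.exists(path):
            problems.append(f"{path}: file not found")
            continue
        for name, actual in mismatches(path, expected).items():
            problems.append(f"{path}: {name} = {actual!r}, expected {expected[name]!r}")
    return problems
```

For example, `for problem in check_conf_dir(os.environ.get("HADOOP_CONF_DIR", "/etc/hadoop/conf")): print(problem)` prints nothing when everything matches; the `/etc/hadoop/conf` fallback mirrors the hadoop_conf_dir default mentioned in hue.ini.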

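5. Sync the configuration files

Copy the configuration files above to all other Hadoop hosts, then restart the affected services (HDFS, YARN, HttpFS) so the changes take effect.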
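One way to do the copy is a loop of rsync calls. Below is a minimal Python sketch, assuming passwordless SSH as the `hadoop` user and the same configuration directory path on every host. `build_sync_commands` and `sync` are hypothetical helpers, and `sync` only prints the commands unless `dry_run=False` is passed.

```python
import shlex
import subprocess

# The four files edited in steps 1-4.
CONF_FILES = ["hdfs-site.xml", "core-site.xml", "yarn-site.xml", "httpfs-site.xml"]


def build_sync_commands(conf_dir, hosts, user="hadoop"):
    """Return one rsync argv per host, copying the files into the same directory remotely."""
    conf_dir = conf_dir.rstrip("/")
    sources = [f"{conf_dir}/{name}" for name in CONF_FILES]
    return [["rsync", "-av", *sources, f"{user}@{host}:{conf_dir}/"] for host in hosts]


def sync(conf_dir, hosts, user="hadoop", dry_run=True):
    """Print each command; execute it only when dry_run is False (assumes passwordless SSH)."""
    for cmd in build_sync_commands(conf_dir, hosts, user):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

For example, `sync("/path/to/hadoop/etc/hadoop", ["hadoop2", "hadoop3"])` prints two rsync commands; the path and host names are placeholders.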

6. Configure the Hive, Hadoop and MySQL integration in hue.ini

$HUE_HOME/desktop/conf/hue.ini

[hadoop]

# Configuration for HDFS NameNode

# ------------------------------------------------------------------------

[[hdfs_clusters]]

# HA support by using HttpFs

# Configure HDFS

[[[default]]]

# Enter the filesystem uri

fs_defaultfs=hdfs://hadoop:8020

# NameNode logical name.

## logical_name=

# Use WebHdfs/HttpFs as the communication mechanism.

# Domain should be the NameNode or HttpFs host.

# Default port is 14000 for HttpFs.

webhdfs_url=http://hadoop:50070/webhdfs/v1

# Change this if your HDFS cluster is Kerberos-secured

## security_enabled=false

# In secure mode (HTTPS), if SSL certificates from YARN Rest APIs

# have to be verified against certificate authority

## ssl_cert_ca_verify=True

# Directory of the Hadoop configuration

## hadoop_conf_dir=$HADOOP_CONF_DIR when set or '/etc/hadoop/conf'

# Configuration for YARN (MR2)

# ------------------------------------------------------------------------

[[yarn_clusters]]

# Configure YARN

[[[default]]]

# Enter the host on which you are running the ResourceManager

resourcemanager_host=hadoop

# The port where the ResourceManager IPC listens on

resourcemanager_port=8032

# See yarn.resourcemanager.address.rm1 in yarn-site.xml

# Whether to submit jobs to this cluster

submit_to=True

# Resource Manager logical name (required for HA)

## logical_name=

# Change this if your YARN cluster is Kerberos-secured

## security_enabled=false

# URL of the ResourceManager API

resourcemanager_api_url=http://hadoop:8088

# URL of the ProxyServer API

proxy_api_url=http://hadoop:8088

# URL of the HistoryServer API

# See mapreduce.jobhistory.webapp.address in mapred-site.xml

history_server_api_url=http://hadoop:19888

# In secure mode (HTTPS), if SSL certificates from YARN Rest APIs

# have to be verified against certificate authority

## ssl_cert_ca_verify=True

[beeswax]

# Configure Hive

# Host where HiveServer2 is running.

# If Kerberos security is enabled, use fully-qualified domain name (FQDN).

hive_server_host=hadoop

# Port where HiveServer2 Thrift server runs on.

hive_server_port=10000

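# Configure MySQL. This block belongs to the existing [librdbms] > [[databases]] section of hue.ini, not to [beeswax].

# mysql, oracle, or postgresql configuration.

[[[mysql]]]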

# Name to show in the UI.

nice_name="My SQL DB"

# For MySQL and PostgreSQL, name is the name of the database.

# For Oracle, Name is instance of the Oracle server. For express edition

# this is 'xe' by default.

name=mysqldb

# Database backend to use. This can be:

# 1. mysql

# 2. postgresql

# 3. oracle

engine=mysql

# IP or hostname of the database to connect to.

host=hadoop

# Port the database server is listening to. Defaults are:

# 1. MySQL: 3306

# 2. PostgreSQL: 5432

# 3. Oracle Express Edition: 1521

port=3306

# Username to authenticate with when connecting to the database.

user=root

# Password matching the username to authenticate with when

# connecting to the database.

password=root
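Each Hadoop service configured above exposes a read-only REST endpoint that Hue relies on. Here is a small Python sketch that builds those URLs from the hue.ini values and probes them once HDFS, YARN and the JobHistory Server are running. The WebHDFS `LISTSTATUS` operation and the `/ws/v1/cluster/info` and `/ws/v1/history/info` paths are standard Hadoop REST APIs; the helper names and the default `hadoop` user are assumptions for illustration.

```python
import json
import urllib.parse
import urllib.request

# Endpoints copied from the hue.ini excerpt above.
WEBHDFS_URL = "http://hadoop:50070/webhdfs/v1"
RESOURCEMANAGER_API_URL = "http://hadoop:8088"
HISTORY_SERVER_API_URL = "http://hadoop:19888"


def rest_endpoints(hdfs_path="/", user="hadoop"):
    """Map a label to a read-only REST URL that each configured service should answer."""
    query = urllib.parse.urlencode({"op": "LISTSTATUS", "user.name": user})
    return {
        # WebHDFS directory listing; user.name is the simple-auth user (assumed "hadoop").
        "webhdfs": f"{WEBHDFS_URL}{urllib.parse.quote(hdfs_path)}?{query}",
        # ResourceManager cluster information.
        "resourcemanager": f"{RESOURCEMANAGER_API_URL}/ws/v1/cluster/info",
        # MapReduce JobHistory Server information.
        "historyserver": f"{HISTORY_SERVER_API_URL}/ws/v1/history/info",
    }


def probe_all(timeout=5.0):
    """GET every endpoint; return {label: None on success, or the error message}."""
    results = {}
    for label, url in rest_endpoints().items():
        request = urllib.request.Request(url, headers={"Accept": "application/json"})
        try:
            with urllib.request.urlopen(request, timeout=timeout) as resp:
                json.load(resp)  # all three APIs can answer with JSON
            results[label] = None
        except Exception as exc:  # keep going so every endpoint gets reported
            results[label] = str(exc)
    return results
```

`probe_all()` returns `None` for each endpoint that answered; any string is an error to investigate before starting Hue.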

7. Hive configuration

(HiveServer2, using MySQL as a standalone metastore database)

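hive-site.xml

<property>
  <name>hive.metastore.uris</name>
  <value>thrift://192.168.232.8:9083</value>
  <description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>
</property>

<property>
  <name>hive.server2.thrift.bind.host</name>
  <value>192.168.232.8</value>
  <description>Bind host on which to run the HiveServer2 Thrift service.</description>
</property>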

Start Hue

Run the following commands to initialize the Hue database:

cd $HUE_HOME/build/env/

bin/hue syncdb

bin/hue migrate

Startup order

1. Start the Hive metastore

nohup hive --service metastore &

2. Start HiveServer2

nohup hive --service hiveserver2 &

3. Start Hue

nohup supervisor &
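The order matters because HiveServer2 connects to the remote metastore and Hue's Hive editor needs HiveServer2, so it helps to wait until one service is listening before starting the next. Here is a minimal Python sketch of such a check using plain TCP connects. It assumes the hostname `hadoop` resolves to 192.168.232.8 and that Hue listens on its default http_port 8888; this article configures neither explicitly. `wait_for_port` and `check_startup` are hypothetical helpers.

```python
import socket
import time

# Startup order from above. Ports 9083 and 10000 come from hive-site.xml and hue.ini;
# 8888 is assumed to be Hue's default http_port, which this article does not change.
SERVICES = [
    ("Hive metastore", "hadoop", 9083),
    ("HiveServer2", "hadoop", 10000),
    ("Hue", "hadoop", 8888),
]


def wait_for_port(host, port, timeout=60.0, interval=1.0):
    """Return True once a TCP connection to host:port succeeds, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


def check_startup(services=SERVICES, timeout=60.0):
    """Check services in order; return None if all are up, else a message for the first failure."""
    for name, host, port in services:
        if not wait_for_port(host, port, timeout):
            return f"{name} ({host}:{port}) is not reachable"
    return None
```

For example, run `check_startup(SERVICES[:1])` right after starting the metastore; it returns `None` once port 9083 accepts connections, or a message naming the service that never came up.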

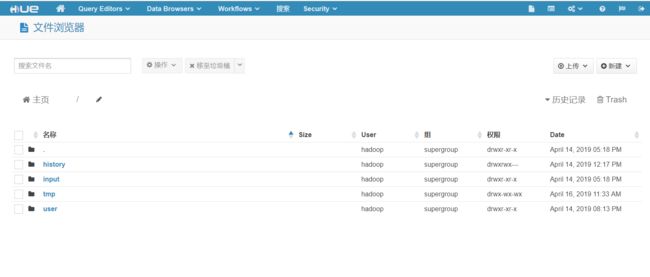

Run a Hive query: it succeeds, and the HDFS directory tree can be browsed as well.