HBase Study Notes (5) - Phoenix & Sqoop

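Contents

- Introduction to Phoenix

- Advantages of Phoenix

- Why Phoenix is fast

- Phoenix features

- Installing Phoenix

- Phoenix in practice: using Phoenix from the shell

- Phoenix in practice: using Phoenix from Java via JDBC

- Using Phoenix through MyBatis

- Introduction to Sqoop1

- Importing data with Sqoop1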

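Introduction to Phoenix

- A SQL layer built on top of Apache HBase

- Runs SQL queries against Apache HBase with strong performance

- Fairly complete query support, including secondary indexes, with high query efficiency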

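Advantages of Phoenix

- Put the SQL back in NoSQL: programmers already know SQL

- Combines the power of standard SQL and JDBC APIs with full ACID transaction capabilities

- Integrates fully with other Hadoop products such as Spark, Hive, Pig, Flume, and MapReduce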

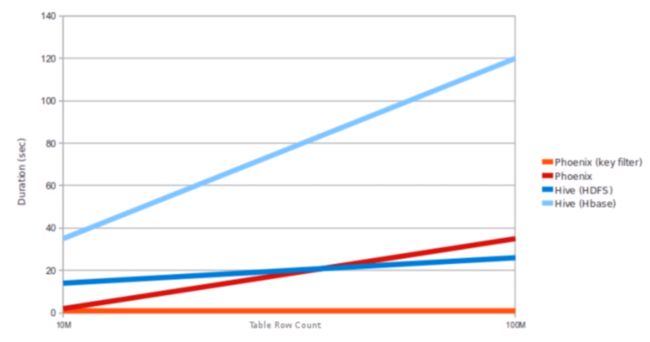

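Why Phoenix is fast

- It uses HBase coprocessors to do the work on the server side, which minimizes data transfer between client and server

- It processes data with custom filters

- It uses the native HBase API rather than the MapReduce framework, which keeps startup cost to a minimum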

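Phoenix features

- Secondary indexes: instead of indexing only by rowkey and screening data with filters, you can index any column or expression to form an alternate row key, which makes lookups easier

- Multi-tenancy: multi-tenant tables and tenant-specific connections restrict each user to their own data

- User-defined functions: implement your own UDF and use it in a select like a built-in function

- Row timestamp column: the HBase timestamp of each row can be mapped to a Phoenix column

- Paged queries: standard SQL paging syntax

- Views: standard SQL syntax (Phoenix lets multiple virtual tables share one underlying physical table)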
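Three of the features above (secondary indexes, paged queries, views) can be sketched as the SQL text a client would send. This is a minimal illustration, not the author's code: the class, the index name `IDX_USER_NAME`, and the view name `JERRY_USERS` are hypothetical, and the `USER_INFO (ID, NAME)` table is borrowed from the MyBatis section later in these notes. The statements follow the Phoenix grammar for `CREATE INDEX`, `CREATE VIEW ... AS SELECT`, and `LIMIT ... OFFSET` as I understand it.

```java
// Sketch only: builds Phoenix SQL text; nothing here talks to a cluster.
// Identifiers are concatenated directly, so pass trusted constants only.
final class PhoenixFeatureSql {

    // Secondary index on a non-rowkey column (index name is hypothetical).
    static String createIndex(String indexName, String table, String column) {
        return "CREATE INDEX " + indexName + " ON " + table + " (" + column + ")";
    }

    // View that shares the physical table underneath (view name is hypothetical).
    static String createView(String viewName, String table, String predicate) {
        return "CREATE VIEW " + viewName + " AS SELECT * FROM " + table
                + " WHERE " + predicate;
    }

    // LIMIT/OFFSET paging; pageNumber starts at 1.
    static String pageQuery(String table, String orderBy, int pageSize, int pageNumber) {
        if (pageSize <= 0 || pageNumber <= 0) {
            throw new IllegalArgumentException("pageSize and pageNumber must be positive");
        }
        long offset = (long) pageSize * (pageNumber - 1);
        return "SELECT * FROM " + table + " ORDER BY " + orderBy
                + " LIMIT " + pageSize + " OFFSET " + offset;
    }

    public static void main(String[] args) {
        System.out.println(createIndex("IDX_USER_NAME", "USER_INFO", "NAME"));
        System.out.println(createView("JERRY_USERS", "USER_INFO", "NAME = 'Jerry'"));
        System.out.println(pageQuery("USER_INFO", "ID", 10, 3));
    }
}
```

Each returned string could be passed to `Connection.prepareStatement` in the JDBC style shown later in these notes.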

Phoenix安装

- 下载对应版本的安装包

http://phoenix.apache.org/download.html - 配置与现有HBase集群集成重新

解压后如下操作

如果是分布式则应拷贝到每个reginserver下

cp phoenix-core-4.14.1-HBase-1.2.jar ../hbase-1.2.0-cdh5.14.4/lib/

cp phoenix-4.14.1-HBase-1.2-server.jar ../hbase-1.2.0-cdh5.14.4/lib/

- 启动HBase环境,并测试环境是否可以正常使用

前置条件启动HDFS

启动hbase

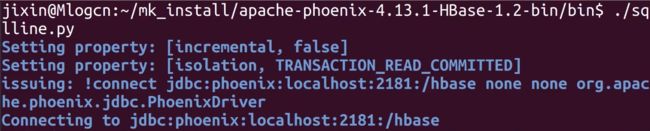

最后启动phoenix(bin/./sqlline.py)

Phoenix实战:shell命令操作Phoenix

进入shell

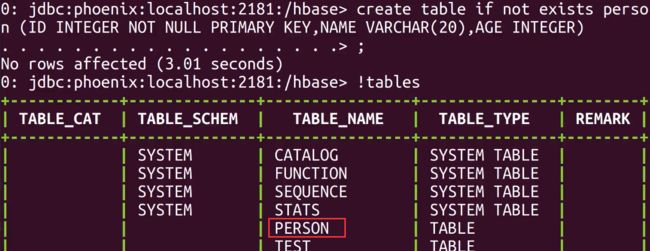

创建表

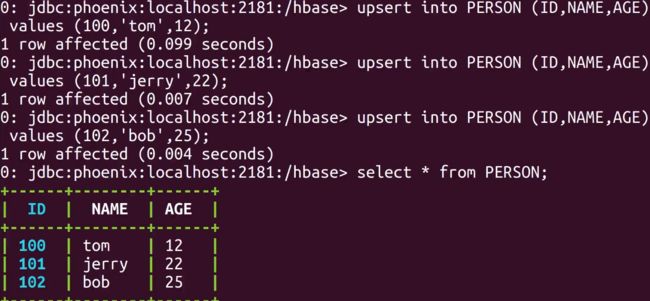

插入数据phoenix插入是upsert与普通sql不同

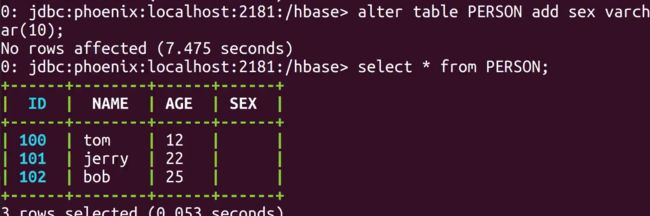

插入列

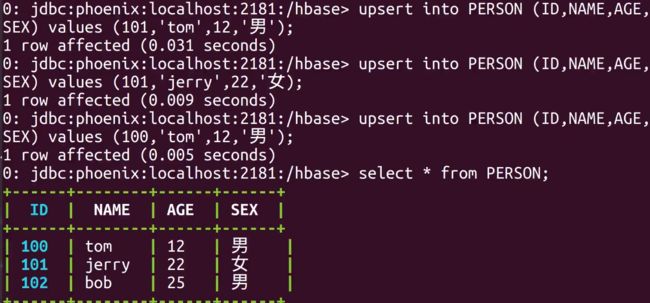

插入数据

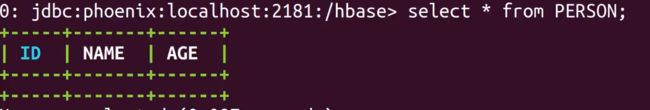

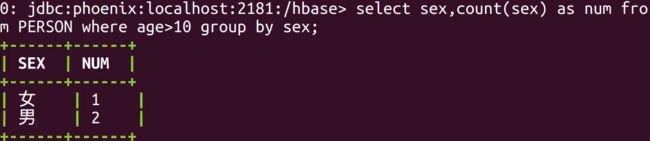

复杂sql语句

Phoenix in practice: using Phoenix from Java via JDBC

Add the dependency

    <dependencies>
        <dependency>
            <groupId>org.apache.phoenix</groupId>
            <artifactId>phoenix-core</artifactId>
            <version>4.13.1-HBase-1.2</version>
        </dependency>
    </dependencies>

    package com.kun.bigdata.phoenix.test;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;
    import java.sql.ResultSet;

    public class PhoenixTest {

        public static void main(String[] args) throws Exception {
            // Load the Phoenix JDBC driver.
            Class.forName("org.apache.phoenix.jdbc.PhoenixDriver");

            // The URL points at the ZooKeeper quorum used by HBase.
            // try-with-resources closes the result set, statement and connection even on failure.
            try (Connection connection = DriverManager.getConnection("jdbc:phoenix:localhost:2181");
                 PreparedStatement statement = connection.prepareStatement("select * from PERSON");
                 ResultSet resultSet = statement.executeQuery()) {
                while (resultSet.next()) {
                    System.out.println(resultSet.getString("NAME"));
                }
            }
        }
    }
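The example above only reads. As a complement, here is a minimal sketch of the write path, using the upsert statement mentioned in the shell section. It assumes a `USER_INFO` table with an integer `ID` primary key and a `NAME` column (the table used in the MyBatis section) and the same local ZooKeeper address; the class and method names are hypothetical. The explicit `commit()` is there because Phoenix connections do not auto-commit by default.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;

final class PhoenixUpsertSketch {

    // Phoenix has no INSERT statement; UPSERT inserts the row or overwrites it if the key exists.
    static final String UPSERT_SQL = "UPSERT INTO USER_INFO (ID, NAME) VALUES (?, ?)";

    static void upsertUser(Connection connection, int id, String name) throws SQLException {
        try (PreparedStatement statement = connection.prepareStatement(UPSERT_SQL)) {
            statement.setInt(1, id);
            statement.setString(2, name);
            statement.executeUpdate();
        }
        // Auto-commit is off by default in Phoenix, so send the buffered mutation explicitly.
        connection.commit();
    }

    public static void main(String[] args) throws SQLException {
        // Assumed URL: the same local ZooKeeper quorum as the query example.
        try (Connection connection = DriverManager.getConnection("jdbc:phoenix:localhost:2181")) {
            upsertUser(connection, 2, "Jerry");
        }
    }
}
```

Running `main` needs the Phoenix client on the classpath and a reachable cluster; without the commit, the row would likely not be visible to other connections.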

Using Phoenix through MyBatis

Add the dependencies

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
            <exclusions>
                <exclusion>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-starter-logging</artifactId>
                </exclusion>
            </exclusions>
            <version>1.4.2.RELEASE</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
            <exclusions>
                <exclusion>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-starter-tomcat</artifactId>
                </exclusion>
            </exclusions>
            <version>1.4.2.RELEASE</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-jetty</artifactId>
            <version>1.4.2.RELEASE</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
            <version>1.4.2.RELEASE</version>
        </dependency>
        <dependency>
            <groupId>com.zaxxer</groupId>
            <artifactId>HikariCP</artifactId>
            <exclusions>
                <exclusion>
                    <groupId>org.slf4j</groupId>
                    <artifactId>slf4j-api</artifactId>
                </exclusion>
            </exclusions>
            <version>2.6.0</version>
        </dependency>
        <dependency>
            <groupId>org.mybatis</groupId>
            <artifactId>mybatis</artifactId>
            <version>3.4.2</version>
        </dependency>
        <dependency>
            <groupId>org.mybatis</groupId>
            <artifactId>mybatis-spring</artifactId>
            <version>1.3.1</version>
        </dependency>
        <dependency>
            <groupId>org.mybatis.spring.boot</groupId>
            <artifactId>mybatis-spring-boot-starter</artifactId>
            <version>1.3.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.phoenix</groupId>
            <artifactId>phoenix-core</artifactId>
            <version>4.13.1-HBase-1.2</version>
        </dependency>
    </dependencies>

Add the configuration files

application.properties

    mybatis.config-location=mybatis-config.xml
    datasource.jdbcUrl=jdbc:phoenix:localhost:2181
    datasource.driverClassName=org.apache.phoenix.jdbc.PhoenixDriver
    datasource.maxPoolSize=20
    datasource.minIdle=2
    datasource.validationTimeout=300000
    datasource.idleTimeout=600000
    datasource.connectionTestQuery=select 1+1
    mybatis.mapperLocations=

log4j.properties

    # The root logger must name the appender, otherwise nothing is written to stdout.
    log4j.rootLogger=INFO, stdout
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.Target=System.out
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss} %-5p %c{1}:%L - %m%n

mybatis-config.xml

    <?xml version="1.0" encoding="UTF-8" ?>
    <!DOCTYPE configuration
            PUBLIC "-//mybatis.org//DTD Config 3.0//EN"
            "http://mybatis.org/dtd/mybatis-3-config.dtd">
    <configuration>
        <settings>
            <setting name="cacheEnabled" value="true"/>
            <setting name="lazyLoadingEnabled" value="true"/>
            <setting name="multipleResultSetsEnabled" value="true"/>
            <setting name="useColumnLabel" value="true"/>
            <setting name="useGeneratedKeys" value="false"/>
            <setting name="autoMappingBehavior" value="PARTIAL"/>
            <setting name="autoMappingUnknownColumnBehavior" value="WARNING"/>
            <setting name="defaultExecutorType" value="SIMPLE"/>
            <setting name="defaultStatementTimeout" value="25"/>
            <setting name="defaultFetchSize" value="100"/>
            <setting name="safeRowBoundsEnabled" value="false"/>
            <setting name="mapUnderscoreToCamelCase" value="false"/>
            <setting name="localCacheScope" value="SESSION"/>
            <setting name="jdbcTypeForNull" value="OTHER"/>
            <setting name="lazyLoadTriggerMethods" value="equals,clone,hashCode,toString"/>
        </settings>
    </configuration>

UserInfo entity class

    package com.kun.bigdata.phoenix.mybatis.test;

    public class UserInfo {

        private int id;
        private String name;

        public int getId() {
            return id;
        }

        public void setId(int id) {
            this.id = id;
        }

        public String getName() {
            return name;
        }

        public void setName(String name) {
            this.name = name;
        }
    }

HikariDataSourceFactory effectively configures a database connection pool

    package com.kun.bigdata.phoenix.mybatis.test.mybatis;

    import org.apache.ibatis.datasource.unpooled.UnpooledDataSourceFactory;

    import com.zaxxer.hikari.HikariDataSource;

    public class HikariDataSourceFactory extends UnpooledDataSourceFactory {

        public HikariDataSourceFactory() {
            // Swap the default unpooled data source for a HikariCP pool.
            this.dataSource = new HikariDataSource();
        }
    }

    package com.kun.bigdata.phoenix.mybatis.test.mybatis;

    import java.io.IOException;
    import java.io.InputStream;
    import java.util.Properties;
    import java.util.Set;

    import javax.sql.DataSource;

    import org.apache.ibatis.session.SqlSessionFactory;
    import org.apache.ibatis.session.SqlSessionFactoryBuilder;
    import org.mybatis.spring.SqlSessionFactoryBean;
    import org.mybatis.spring.annotation.MapperScan;
    import org.springframework.beans.factory.annotation.Qualifier;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.context.annotation.Primary;
    import org.springframework.core.io.DefaultResourceLoader;
    import org.springframework.core.io.ResourceLoader;

    @Configuration
    @MapperScan(basePackages = PhoenixDataSourceConfig.PACKAGE,
            sqlSessionFactoryRef = "PhoenixSqlSessionFactory")
    public class PhoenixDataSourceConfig {

        static final String PACKAGE = "com.kun.bigdata.phoenix.**";

        @Bean(name = "PhoenixDataSource")
        @Primary
        public DataSource phoenixDataSource() throws IOException {
            ResourceLoader loader = new DefaultResourceLoader();
            Properties properties = new Properties();
            // Load application.properties from the classpath; the stream is closed automatically.
            try (InputStream inputStream = loader.getResource("classpath:application.properties")
                    .getInputStream()) {
                properties.load(inputStream);
            }

            // Keep only the "datasource.*" entries and strip the prefix,
            // so the remaining keys can be applied to the data source as properties.
            Set<Object> keys = properties.keySet();
            Properties dsProperties = new Properties();
            for (Object key : keys) {
                if (key.toString().startsWith("datasource")) {
                    dsProperties.put(key.toString().replace("datasource.", ""), properties.get(key));
                }
            }

            HikariDataSourceFactory factory = new HikariDataSourceFactory();
            factory.setProperties(dsProperties);
            return factory.getDataSource();
        }

        @Bean(name = "PhoenixSqlSessionFactory")
        @Primary
        public SqlSessionFactory phoenixSqlSessionFactory(
                @Qualifier("PhoenixDataSource") DataSource dataSource) throws Exception {
            SqlSessionFactoryBean factoryBean = new SqlSessionFactoryBean();
            factoryBean.setDataSource(dataSource);
            // Point MyBatis at the mybatis-config.xml shown earlier.
            ResourceLoader loader = new DefaultResourceLoader();
            String resource = "classpath:mybatis-config.xml";
            factoryBean.setConfigLocation(loader.getResource(resource));
            factoryBean.setSqlSessionFactoryBuilder(new SqlSessionFactoryBuilder());
            return factoryBean.getObject();
        }
    }
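To make the property handling in `phoenixDataSource()` easier to follow, here is a standalone sketch of just the prefix-stripping step, using only the JDK. The class name is hypothetical, and it uses `substring` rather than the `replace` call in the configuration class, so it only removes the leading `datasource.`; the keys are the ones from application.properties.

```java
import java.util.Properties;

final class DataSourcePropertyFilter {

    static final String PREFIX = "datasource.";

    // Keep only keys that start with "datasource." and drop that prefix.
    static Properties extract(Properties all) {
        Properties result = new Properties();
        for (String key : all.stringPropertyNames()) {
            if (key.startsWith(PREFIX)) {
                result.setProperty(key.substring(PREFIX.length()), all.getProperty(key));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Properties all = new Properties();
        all.setProperty("mybatis.config-location", "mybatis-config.xml");
        all.setProperty("datasource.jdbcUrl", "jdbc:phoenix:localhost:2181");
        all.setProperty("datasource.maxPoolSize", "20");
        // Only jdbcUrl and maxPoolSize survive; the mybatis.* key is ignored.
        System.out.println(extract(all));
    }
}
```

The resulting keys (`jdbcUrl`, `maxPoolSize`, and so on) are what the factory then tries to set on the data source, so each one presumably has to match a settable property of the pool class.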

DAO layer

    package com.kun.bigdata.phoenix.mybatis.test.dao;

    import java.util.List;

    import org.apache.ibatis.annotations.Delete;
    import org.apache.ibatis.annotations.Insert;
    import org.apache.ibatis.annotations.Mapper;
    import org.apache.ibatis.annotations.Param;
    import org.apache.ibatis.annotations.ResultMap;
    import org.apache.ibatis.annotations.Select;

    import com.kun.bigdata.phoenix.mybatis.test.UserInfo;

    @Mapper
    public interface UserInfoMapper {

        // Phoenix writes use upsert, so it goes inside the @Insert annotation.
        @Insert("upsert into USER_INFO (ID,NAME) VALUES (#{user.id},#{user.name})")
        public void addUser(@Param("user") UserInfo userInfo);

        @Delete("delete from USER_INFO WHERE ID=#{userId}")
        public void deleteUser(@Param("userId") int userId);

        @Select("select * from USER_INFO WHERE ID=#{userId}")
        @ResultMap("userResultMap")
        public UserInfo getUserById(@Param("userId") int userId);

        @Select("select * from USER_INFO WHERE NAME=#{userName}")
        @ResultMap("userResultMap")
        public UserInfo getUserByName(@Param("userName") String userName);

        @Select("select * from USER_INFO")
        @ResultMap("userResultMap")
        public List<UserInfo> getUsers();
    }

Test

    package com.kun.bigdata.phoenix.mybatis.test;

    import java.util.List;

    import org.junit.Test;
    import org.junit.runner.RunWith;
    import org.mybatis.spring.annotation.MapperScan;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.context.annotation.ComponentScan;
    import org.springframework.context.annotation.Import;
    import org.springframework.context.annotation.PropertySource;
    import org.springframework.test.context.junit4.SpringJUnit4ClassRunner;

    import com.kun.bigdata.phoenix.mybatis.test.dao.UserInfoMapper;
    import com.kun.bigdata.phoenix.mybatis.test.mybatis.PhoenixDataSourceConfig;

    @RunWith(SpringJUnit4ClassRunner.class)
    @Import(PhoenixDataSourceConfig.class)
    @PropertySource("classpath:application.properties")
    @ComponentScan("com.kun.bigdata.**")
    @MapperScan("com.kun.bigdata.**")
    public class BaseTest {

        @Autowired
        UserInfoMapper userInfoMapper;

        @Test
        public void addUser() {
            UserInfo userInfo = new UserInfo();
            userInfo.setId(2);
            userInfo.setName("Jerry");
            userInfoMapper.addUser(userInfo);
        }

        @Test
        public void getUserById() {
            UserInfo userInfo = userInfoMapper.getUserById(1);
            System.out.println(String.format("ID=%s;NAME=%s", userInfo.getId(), userInfo.getName()));
        }

        @Test
        public void getUserByName() {
            UserInfo userInfo = userInfoMapper.getUserByName("Jerry");
            System.out.println(String.format("ID=%s;NAME=%s", userInfo.getId(), userInfo.getName()));
        }

        @Test
        public void deleteUser() {
            userInfoMapper.deleteUser(1);
            List<UserInfo> userInfos = userInfoMapper.getUsers();
            for (UserInfo userInfo : userInfos) {
                System.out.println(String.format("ID=%s;NAME=%s", userInfo.getId(), userInfo.getName()));
            }
        }
    }

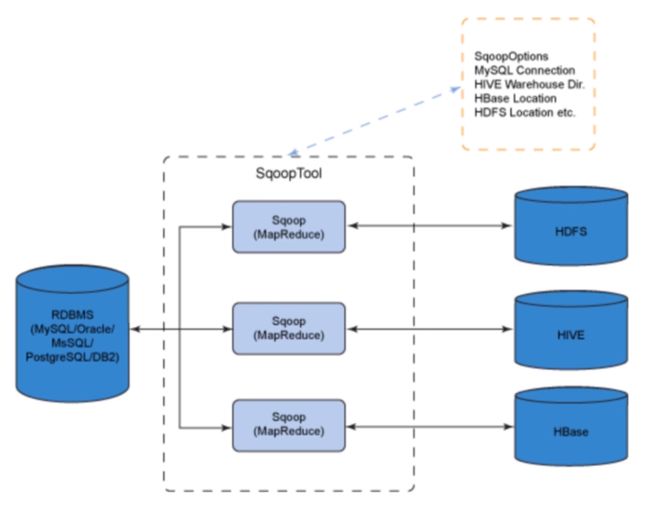

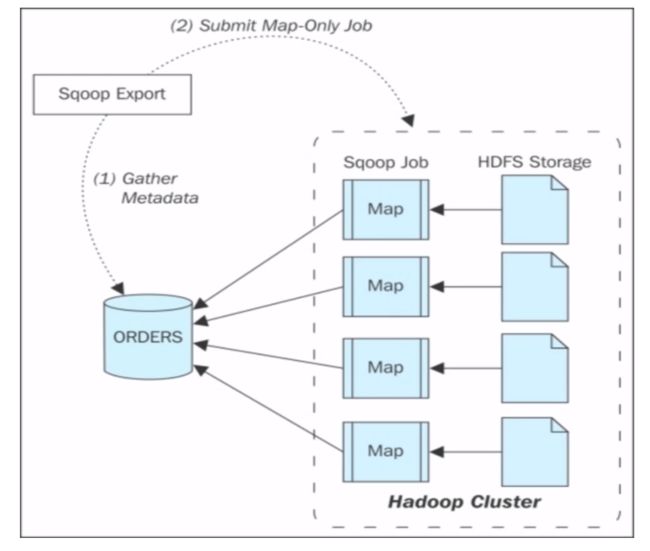

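Introduction to Sqoop1

- Transfers data between Hadoop and traditional databases (MySQL, PostgreSQL, etc.)

- Uses Hadoop MapReduce to import data from a relational database into a Hadoop cluster

- The process of transferring large volumes of structured or semi-structured data is fully automated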

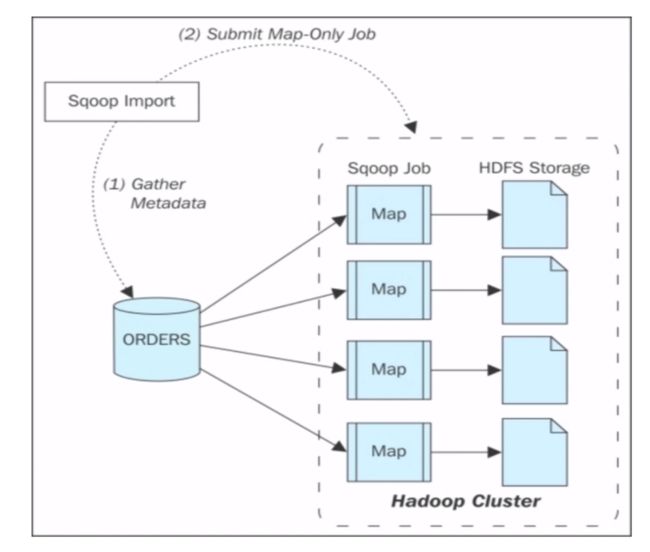

Importing data with Sqoop1

Download and unpack Sqoop1

Run it directly from the bin directory to list the databases in the local MySQL instance

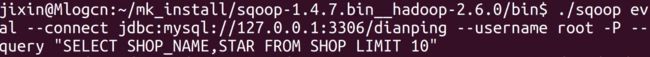

查询数据10条

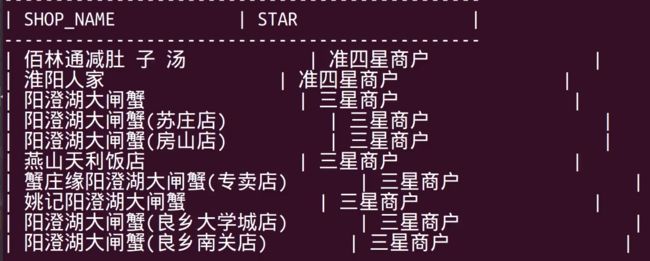

将mysql里的shop表导入hdfs

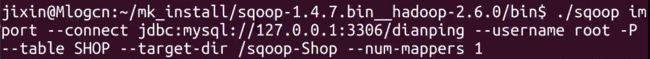

将这mysql里的shop表导入hbase

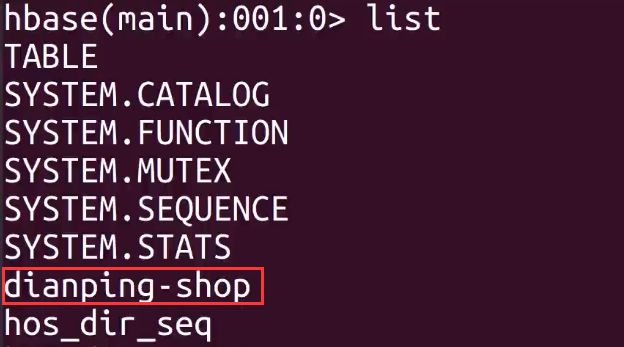

进入hbase shell查看
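The four Sqoop steps above are shown only as screenshots, so here is a rough sketch of what such command lines typically look like, assembled as argument lists in Java (suitable for `ProcessBuilder`). Everything specific is an assumption rather than the author's actual input: the JDBC URL and database name `test`, the user `root`, the target directory, the column family `info`, and the row key `id`. The tools and flags themselves (`list-databases`, `eval`, `import`, `--target-dir`, `--hbase-table`, `--column-family`, `--hbase-row-key`, `--hbase-create-table`, `-m`, `-P`) are standard Sqoop1 options.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class SqoopCommandSketch {

    // Assumed connection details; replace with your own.
    static final String JDBC_URL = "jdbc:mysql://localhost:3306/test";
    static final String USER = "root";

    // Arguments shared by every tool that connects to the database.
    // -P makes Sqoop prompt for the password instead of taking it on the command line.
    private static List<String> base(String tool) {
        return new ArrayList<>(Arrays.asList(
                "sqoop", tool, "--connect", JDBC_URL, "--username", USER, "-P"));
    }

    // List the databases on the MySQL server.
    static List<String> listDatabases() {
        return base("list-databases");
    }

    // Run an ad-hoc query and print the first 10 rows.
    static List<String> queryTenRows(String table) {
        List<String> cmd = base("eval");
        cmd.addAll(Arrays.asList("--query", "SELECT * FROM " + table + " LIMIT 10"));
        return cmd;
    }

    // Import a table into an HDFS directory with a single mapper.
    static List<String> importToHdfs(String table, String targetDir) {
        List<String> cmd = base("import");
        cmd.addAll(Arrays.asList("--table", table, "--target-dir", targetDir, "-m", "1"));
        return cmd;
    }

    // Import a table into HBase, creating the HBase table if it is missing.
    static List<String> importToHBase(String table, String hbaseTable,
                                      String family, String rowKey) {
        List<String> cmd = base("import");
        cmd.addAll(Arrays.asList("--table", table,
                "--hbase-table", hbaseTable,
                "--column-family", family,
                "--hbase-row-key", rowKey,
                "--hbase-create-table",
                "-m", "1"));
        return cmd;
    }

    public static void main(String[] args) {
        // Printed for reading only; when typing these in a shell, quote the --query value.
        System.out.println(String.join(" ", listDatabases()));
        System.out.println(String.join(" ", queryTenRows("shop")));
        System.out.println(String.join(" ", importToHdfs("shop", "/sqoop/shop")));
        System.out.println(String.join(" ", importToHBase("shop", "shop", "info", "id")));
    }
}
```

Passing one of these lists to `new ProcessBuilder(list)` would avoid shell quoting issues for the query text, though running the commands straight from Sqoop's bin directory, as the notes do, works just as well.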