Analysis of an MNIST Example Using NNI with PyTorch (Complete Code Included)

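The MNIST example used in this article comes from the official PyTorch website:

Link to the MNIST example on the official PyTorch website

Project directory

Configuration file (config.yml)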

authorName: default
experimentName: mnist_pytorch
trialConcurrency: 1
maxExecDuration: 100h
maxTrialNum: 5
#choice: local, remote, pai
trainingServicePlatform: local
searchSpacePath: search_space.json
#choice: true, false
useAnnotation: false
tuner:
  #choice: TPE, Random, Anneal, Evolution, BatchTuner
  #SMAC (SMAC should be installed through nnictl)
  builtinTunerName: TPE
  classArgs:
    #choice: maximize, minimize
    optimize_mode: maximize
trial:
  command: python main.py
  codeDir: .
  gpuNum: 1

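Configuration file parameters

- maxTrialNum: the maximum number of trials the experiment runs
- command: the command line used to launch each trial
- gpuNum: the number of GPUs used by each trial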

Search space definition file (search_space.json)

Only three parameters are set here: batch-size, epochs, and the learning rate (lr). More parameters can be added as needed.

{
  "batch-size": {"_type":"choice", "_value": [32,64]},
  "epochs": {"_type":"choice", "_value": [10]},
  "lr": {"_type":"choice","_value":[0.0001, 0.01, 0.1]}
}
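Because every entry in this search space uses the `choice` type, the candidate combinations can be listed exhaustively. The sketch below is only an illustration: `SEARCH_SPACE` mirrors the file above, and `enumerate_choices` is a hypothetical helper, not part of NNI. It shows that the space holds 6 combinations, one more than the `maxTrialNum: 5` set in config.yml, so a single experiment with this configuration cannot try all of them.

```python
import itertools
import json

# Mirrors search_space.json from the article.
SEARCH_SPACE = json.loads("""
{
  "batch-size": {"_type": "choice", "_value": [32, 64]},
  "epochs": {"_type": "choice", "_value": [10]},
  "lr": {"_type": "choice", "_value": [0.0001, 0.01, 0.1]}
}
""")


def enumerate_choices(space):
    """Return every parameter combination of a choice-only search space.

    Hypothetical helper for illustration; NNI does not expose this function.
    """
    for spec in space.values():
        if spec["_type"] != "choice":
            raise ValueError("only the 'choice' type is handled in this sketch")
    names = list(space)
    value_lists = [space[name]["_value"] for name in names]
    # Cartesian product of all value lists, one dict per combination.
    return [dict(zip(names, combo)) for combo in itertools.product(*value_lists)]


combos = enumerate_choices(SEARCH_SPACE)
print(len(combos))
for combo in combos:
    print(combo)
```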

Python code with NNI embedded

1. Parsing parameters

RCV_CONFIG = nni.get_next_parameter()

Code:

import argparse
import logging

import nni

# Assumed logger setup; the original excerpt does not show where _logger is defined.
_logger = logging.getLogger(__name__)

# Hyperparameters chosen by the tuner for this trial
RCV_CONFIG = nni.get_next_parameter()
_logger.debug(RCV_CONFIG)

parser = argparse.ArgumentParser(description='PyTorch MNIST Example')
parser.add_argument('--batch-size', type=int, default=RCV_CONFIG['batch-size'], metavar='N',
                    help='input batch size for training (default: value from NNI)')
parser.add_argument('--epochs', type=int, default=RCV_CONFIG['epochs'], metavar='N',
                    help='number of epochs to train (default: value from NNI)')
parser.add_argument('--lr', type=float, default=RCV_CONFIG['lr'], metavar='LR',
                    help='learning rate (default: value from NNI)')
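One possible variation, sketched here under assumptions, keeps the static defaults from the original PyTorch example (64, 10, and 0.01, as stated in its help strings) and overlays whatever the tuner supplies. `build_args` is a hypothetical helper. The sketch deliberately does not import `nni` so that it runs on its own; in a real trial the first argument would be the dictionary returned by `nni.get_next_parameter()`.

```python
import argparse


def build_args(tuned_params, argv=None):
    """Parse arguments, letting tuner-supplied values replace static defaults.

    tuned_params: dict such as {"batch-size": 32, "epochs": 10, "lr": 0.1},
                  or None/empty when the script runs without a tuner.
    """
    parser = argparse.ArgumentParser(description='PyTorch MNIST Example')
    parser.add_argument('--batch-size', type=int, default=64, metavar='N')
    parser.add_argument('--epochs', type=int, default=10, metavar='N')
    parser.add_argument('--lr', type=float, default=0.01, metavar='LR')
    # argparse stores '--batch-size' under the attribute name 'batch_size',
    # so the search-space keys are converted before overriding the defaults.
    overrides = {key.replace('-', '_'): value
                 for key, value in (tuned_params or {}).items()}
    parser.set_defaults(**overrides)
    return parser.parse_args(argv)


# Values a tuner might supply for one trial.
args = build_args({"batch-size": 32, "epochs": 10, "lr": 0.1}, argv=[])
print(args.batch_size, args.epochs, args.lr)
```

With this arrangement an explicit command-line flag still takes precedence over the tuner value, and the script keeps working when no tuner parameters are available.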

2. Reporting intermediate results

nni.report_intermediate_result(loss.item())

What is reported here is the training loss.

Code:

import nni
import torch.nn.functional as F

def train(args, model, device, train_loader, optimizer, epoch):
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        data, target = data.to(device), target.to(device)
        optimizer.zero_grad()
        output = model(data)
        loss = F.nll_loss(output, target)
        loss.backward()
        optimizer.step()
        if batch_idx % args.log_interval == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))
            # Send the current training loss to NNI as an intermediate result
            nni.report_intermediate_result(loss.item())
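The reporting cadence can be seen in isolation with a small sketch. It assumes the report call sits inside the logging branch, so that one value is sent every `log_interval` batches. `report_training_loss` is a hypothetical helper, the loss values are made-up placeholders, and `report` stands in for `nni.report_intermediate_result` so the snippet runs without NNI or PyTorch.

```python
def report_training_loss(batch_losses, log_interval, report):
    """Call report(loss) once every log_interval batches."""
    for batch_idx, loss in enumerate(batch_losses):
        if batch_idx % log_interval == 0:
            report(float(loss))


# Placeholder losses for five batches; not measurements from the experiment.
reported = []
report_training_loss([0.9, 0.7, 0.5, 0.4, 0.3], log_interval=2,
                     report=reported.append)
print(reported)
```

Each reported value becomes one point on the trial's Intermediate Result curve in the WebUI, so a larger `log_interval` yields a coarser curve.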

3. Reporting the final result

nni.report_final_result(best_acc)

What is reported here is the test accuracy.

Code:

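for epoch in range(1, args.epochs + 1):
    train(args, model, device, train_loader, optimizer, epoch)
    test(args, model, device, test_loader)
# Report the test accuracy once, after all epochs have finished.
# best_acc is not defined in this excerpt; it is presumably updated inside test().
nni.report_final_result(best_acc)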
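The excerpt does not show how `best_acc` is maintained. One way to do it, sketched here as an assumption rather than as the author's actual code, is to have the evaluation step return its accuracy and keep the maximum across epochs. `run_epochs`, `train_one_epoch`, `evaluate`, and `report_final` are hypothetical names, and the accuracies are placeholder values; in a real trial `report_final` would be `nni.report_final_result`.

```python
def run_epochs(num_epochs, train_one_epoch, evaluate, report_final):
    """Train for num_epochs, track the best accuracy, report it once at the end."""
    best_acc = 0.0
    for epoch in range(1, num_epochs + 1):
        train_one_epoch(epoch)
        acc = evaluate(epoch)          # accuracy measured after this epoch
        best_acc = max(best_acc, acc)  # keep the highest value seen so far
    report_final(best_acc)             # exactly one final result per trial
    return best_acc


# Placeholder accuracies per epoch; not results from the experiment.
fake_acc = {1: 0.90, 2: 0.95, 3: 0.93}
final = []
best = run_epochs(3,
                  train_one_epoch=lambda epoch: None,
                  evaluate=lambda epoch: fake_acc[epoch],
                  report_final=final.append)
print(best, final)
```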

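How it works

- First, nni.get_next_parameter() returns a parameter dictionary (one of the combinations drawn from search_space.json), for example:

{"batch-size":64,"epochs":10,"lr":0.0001}

- To report intermediate results during a trial, use:

nni.report_intermediate_result(metric)

where metric must be a number.

- To report the final result (which serves as the reference for choosing parameters), use:

nni.report_final_result(metric)

where metric must be a number.

Every trial repeats this flow until the maximum number of trials, maxTrialNum (set in the configuration file), is reached.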
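The flow above can be mimicked with a toy loop to make the role of maxTrialNum concrete. This is a simulation under stated assumptions, not how NNI is implemented: uniform random sampling stands in for the TPE tuner, `run_trial` is a placeholder that returns a dummy metric, and all function names are hypothetical.

```python
import random

# Candidate values per parameter, taken from search_space.json.
SEARCH_SPACE = {
    "batch-size": [32, 64],
    "epochs": [10],
    "lr": [0.0001, 0.01, 0.1],
}
MAX_TRIAL_NUM = 5  # maxTrialNum from config.yml


def sample_parameters(space, rng):
    """Pick one value per parameter (random sampling, not TPE)."""
    return {name: rng.choice(values) for name, values in space.items()}


def run_trial(params):
    """Placeholder trial: returns a dummy metric, not a real accuracy."""
    return 0.0


def run_experiment(space, max_trial_num, seed=0):
    rng = random.Random(seed)
    history = []
    for trial_id in range(max_trial_num):
        params = sample_parameters(space, rng)  # role of nni.get_next_parameter()
        metric = run_trial(params)              # role of nni.report_final_result()
        history.append((trial_id, params, metric))
    return history


history = run_experiment(SEARCH_SPACE, MAX_TRIAL_NUM)
for trial_id, params, metric in history:
    print(trial_id, params, metric)
```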

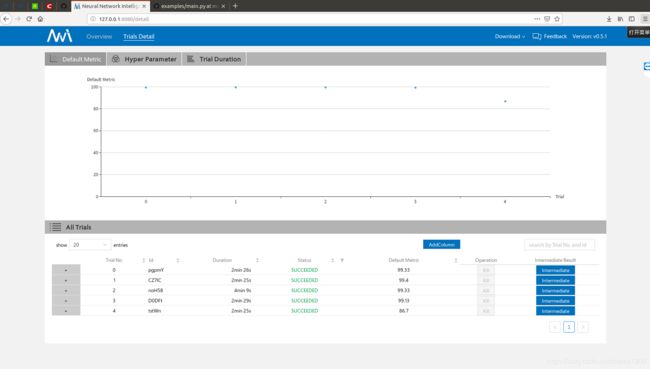

Screenshots of the training results in the NNI WebUI

Overview

Trials Detail

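Summary of experiment results

1. Default Metric

As shown in the figure above, test accuracy is lowest at Trial = 4 on the horizontal axis. The parameters of that trial are:

{"batch-size":64,"epochs":10,"lr":0.0001}

2. Hyper Parameter

The figure above shows the top 80% of results ranked by test accuracy. Their parameters are: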

{"batch-size":64,"epochs":10,"lr":0.1}

{"batch-size":32,"epochs":10,"lr":0.01}

{"batch-size":64,"epochs":10,"lr":0.1}

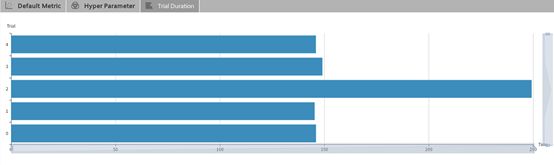

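3. Trial Duration

This view shows how long each trial ran.

4. Results of a single trial

The figure above shows the parameters used in this trial.

As shown in the figure above, the current training loss is 0.200218066573143.

- Choosing the final parameters

First, use the Hyper Parameter results to pick the parameter combinations that performed best. Then check Trial Duration and prefer the combinations with shorter running times, also taking into account how quickly the loss converges in the Intermediate results.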
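The first two steps of this selection procedure can be expressed as a short ranking sketch: keep the most accurate trials, then order them by running time. Everything here is illustrative. `pick_candidates` is a hypothetical helper, and the trial records are invented placeholders, not the results shown in the screenshots. The convergence-speed check is left to visual inspection of the Intermediate Result curves.

```python
def pick_candidates(trials, top_k):
    """Keep the top_k most accurate trials, then sort them by duration."""
    most_accurate = sorted(trials, key=lambda t: t["accuracy"], reverse=True)[:top_k]
    return sorted(most_accurate, key=lambda t: t["duration_s"])


# Invented placeholder records; replace with values read from the WebUI.
trials = [
    {"id": 0, "accuracy": 0.990, "duration_s": 400},
    {"id": 1, "accuracy": 0.980, "duration_s": 250},
    {"id": 2, "accuracy": 0.900, "duration_s": 100},
    {"id": 3, "accuracy": 0.985, "duration_s": 300},
]

ranked = pick_candidates(trials, top_k=3)
print([t["id"] for t in ranked])
```

In this made-up data the fastest trial (id 2) is excluded because its accuracy falls outside the top three, which reflects the order of priorities described above: accuracy first, duration second.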

Click to view the complete code