Spark SQL Execution Process

1. Code implementation

    import org.apache.spark.sql.SQLContext
    import org.apache.spark.{SparkConf, SparkContext}

    // The case class must be defined outside the object
    case class Person(id: Int, name: String, age: Int)

    object InferringSchema {
      def main(args: Array[String]): Unit = {
        // Create the SparkConf and set the application name
        val conf = new SparkConf().setAppName("SQL-1")
        // SQLContext depends on SparkContext
        val sc = new SparkContext(conf)
        // Create the SQLContext
        val sqlContext = new SQLContext(sc)
        // Create an RDD from the given path and split each line on commas
        val lineRDD = sc.textFile(args(0)).map(_.split(","))
        // Associate the RDD with the case class
        val personRDD = lineRDD.map(x => Person(x(0).toInt, x(1), x(2).toInt))
        // Import the implicit conversions; without this import the RDD
        // cannot be converted to a DataFrame
        import sqlContext.implicits._
        // Convert the RDD to a DataFrame
        val personDF = personRDD.toDF
        // Register the DataFrame as a temporary table
        personDF.registerTempTable("t_person")
        // Run the SQL query
        val df = sqlContext.sql("select * from t_person order by age desc limit 2")
        // Write the result as JSON to the given location
        df.write.json(args(1))
        // Stop the SparkContext
        sc.stop()
      }
    }

2. Command

./spark-submit --class InferringSchema --master spark://hadoop1:7077,hadoop2:7077 ../sparkJar/sparkSql.jar hdfs://hadoop1:9000/sparkSql hdfs://hadoop1:9000/spark
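
The contents of the input file under `hdfs://hadoop1:9000/sparkSql` are not shown here. Judging from the parsing code in section 1, each line is presumably of the form `id,name,age`. The following is a minimal plain-Scala sketch (no Spark needed) of what the job computes on such input. The sample rows and the names `InferringSchemaLocal`, `sampleLines`, `parse`, and `topTwoByAge` are made up for illustration and are not part of the original program.

```scala
// Plain-Scala sketch of the job's logic; runs without a Spark cluster.
case class Person(id: Int, name: String, age: Int)

object InferringSchemaLocal {
  // Hypothetical sample rows in the assumed "id,name,age" format.
  val sampleLines: Seq[String] = Seq(
    "1,tom,25",
    "2,jerry,31",
    "3,kitty,28"
  )

  // Same parsing as sc.textFile(...).map(_.split(",")) followed by the
  // Person mapping in the Spark program.
  def parse(line: String): Person = {
    val x = line.split(",")
    Person(x(0).toInt, x(1), x(2).toInt)
  }

  // Local equivalent of:
  //   select * from t_person order by age desc limit 2
  def topTwoByAge(people: Seq[Person]): Seq[Person] =
    people.sortBy(p => -p.age).take(2)

  def main(args: Array[String]): Unit = {
    // Prints the two oldest people in the sample, oldest first.
    topTwoByAge(sampleLines.map(parse)).foreach(println)
  }
}
```

Like the Spark version, `parse` fails with an exception on a line that has fewer than three fields or a non-numeric `id` or `age`, so the input file has to be clean.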

3. Viewing the run log

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

16/11/04 23:42:52 INFO SparkContext: Running Spark version 1.6.2

16/11/04 23:42:53 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/11/04 23:42:53 INFO SecurityManager: Changing view acls to: root

16/11/04 23:42:53 INFO SecurityManager: Changing modify acls to: root

16/11/04 23:42:53 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); users with modify permissions: Set(root)

16/11/04 23:42:55 INFO Utils: Successfully started service 'sparkDriver' on port 51933.

16/11/04 23:42:56 INFO Slf4jLogger: Slf4jLogger started

16/11/04 23:42:56 INFO Remoting: Starting remoting

16/11/04 23:42:56 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@192.168.215.133:44880]

16/11/04 23:42:56 INFO Utils: Successfully started service 'sparkDriverActorSystem' on port 44880.

16/11/04 23:42:56 INFO SparkEnv: Registering MapOutputTracker

16/11/04 23:42:56 INFO SparkEnv: Registering BlockManagerMaster

16/11/04 23:42:56 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-c05d1a76-3d2b-4912-b371-7fd8e747445c

16/11/04 23:42:56 INFO MemoryStore: MemoryStore started with capacity 517.4 MB

16/11/04 23:42:57 INFO SparkEnv: Registering OutputCommitCoordinator

16/11/04 23:42:57 INFO Utils: Successfully started service 'SparkUI' on port 4040.

16/11/04 23:42:57 INFO SparkUI: Started SparkUI at http://192.168.215.133:4040

16/11/04 23:42:57 INFO HttpFileServer: HTTP File server directory is /tmp/spark-4b2b30f5-6bcb-485c-b619-44a9015257e8/httpd-b4a23a5e-0127-406c-888f-a44d7b4a17ac

16/11/04 23:42:58 INFO HttpServer: Starting HTTP Server

16/11/04 23:42:58 INFO Utils: Successfully started service 'HTTP file server' on port 48515.

16/11/04 23:42:58 INFO SparkContext: Added JAR file:/usr/local/spark/bin/../sparkJar/sparkSql.jar at http://192.168.215.133:48515/jars/sparkSql.jar with timestamp 1478328178593

16/11/04 23:42:58 INFO AppClient$ClientEndpoint: Connecting to master spark://hadoop1:7077...

16/11/04 23:42:58 INFO AppClient$ClientEndpoint: Connecting to master spark://hadoop2:7077...

16/11/04 23:42:59 INFO SparkDeploySchedulerBackend: Connected to Spark cluster with app ID app-20161104234259-0008

16/11/04 23:42:59 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 54971.

16/11/04 23:42:59 INFO NettyBlockTransferService: Server created on 54971

16/11/04 23:42:59 INFO AppClient$ClientEndpoint: Executor added: app-20161104234259-0008/0 on worker-20161104194431-192.168.215.132-59350 (192.168.215.132:59350) with 1 cores

16/11/04 23:42:59 INFO SparkDeploySchedulerBackend: Granted executor ID app-20161104234259-0008/0 on hostPort 192.168.215.132:59350 with 1 cores, 1024.0 MB RAM

16/11/04 23:42:59 INFO BlockManagerMaster: Trying to register BlockManager

16/11/04 23:42:59 INFO BlockManagerMasterEndpoint: Registering block manager 192.168.215.133:54971 with 517.4 MB RAM, BlockManagerId(driver, 192.168.215.133, 54971)

16/11/04 23:42:59 INFO BlockManagerMaster: Registered BlockManager

16/11/04 23:42:59 INFO AppClient$ClientEndpoint: Executor updated: app-20161104234259-0008/0 is now RUNNING

16/11/04 23:42:59 INFO SparkDeploySchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

16/11/04 23:43:02 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 153.6 KB, free 153.6 KB)

16/11/04 23:43:02 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 13.9 KB, free 167.5 KB)

16/11/04 23:43:02 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.215.133:54971 (size: 13.9 KB, free: 517.4 MB)

16/11/04 23:43:02 INFO SparkContext: Created broadcast 0 from textFile at SQLDemo1.scala:17

16/11/04 23:43:08 INFO SparkDeploySchedulerBackend: Registered executor NettyRpcEndpointRef(null) (hadoop3:52754) with ID 0

16/11/04 23:43:08 INFO BlockManagerMasterEndpoint: Registering block manager hadoop3:39995 with 517.4 MB RAM, BlockManagerId(0, hadoop3, 39995)

16/11/04 23:43:14 INFO FileInputFormat: Total input paths to process : 1

16/11/04 23:43:14 INFO SparkContext: Starting job: json at SQLDemo1.scala:30

16/11/04 23:43:14 INFO DAGScheduler: Got job 0 (json at SQLDemo1.scala:30) with 2 output partitions

16/11/04 23:43:14 INFO DAGScheduler: Final stage: ResultStage 0 (json at SQLDemo1.scala:30)

16/11/04 23:43:14 INFO DAGScheduler: Parents of final stage: List()

16/11/04 23:43:14 INFO DAGScheduler: Missing parents: List()

16/11/04 23:43:14 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[8] at json at SQLDemo1.scala:30), which has no missing parents

16/11/04 23:43:14 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 7.9 KB, free 175.4 KB)

16/11/04 23:43:14 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 4.1 KB, free 179.5 KB)

16/11/04 23:43:14 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 192.168.215.133:54971 (size: 4.1 KB, free: 517.4 MB)

16/11/04 23:43:14 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1006

16/11/04 23:43:14 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[8] at json at SQLDemo1.scala:30)

16/11/04 23:43:14 INFO TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

16/11/04 23:43:14 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, hadoop3, partition 0,NODE_LOCAL, 2199 bytes)

16/11/04 23:43:22 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on hadoop3:39995 (size: 4.1 KB, free: 517.4 MB)

16/11/04 23:43:24 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop3:39995 (size: 13.9 KB, free: 517.4 MB)

16/11/04 23:43:30 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, hadoop3, partition 1,NODE_LOCAL, 2199 bytes)

16/11/04 23:43:30 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 15642 ms on hadoop3 (1/2)

16/11/04 23:43:30 INFO DAGScheduler: ResultStage 0 (json at SQLDemo1.scala:30) finished in 15.695 s

16/11/04 23:43:30 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 116 ms on hadoop3 (2/2)

16/11/04 23:43:30 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

16/11/04 23:43:30 INFO DAGScheduler: Job 0 finished: json at SQLDemo1.scala:30, took 15.899557 s

16/11/04 23:43:30 INFO deprecation: mapred.job.id is deprecated. Instead, use mapreduce.job.id

16/11/04 23:43:30 INFO deprecation: mapred.tip.id is deprecated. Instead, use mapreduce.task.id

16/11/04 23:43:30 INFO deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

16/11/04 23:43:30 INFO deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

16/11/04 23:43:30 INFO deprecation: mapred.task.partition is deprecated. Instead, use mapreduce.task.partition

16/11/04 23:43:30 INFO DefaultWriterContainer: Using output committer class org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

16/11/04 23:43:30 INFO SparkContext: Starting job: json at SQLDemo1.scala:30

16/11/04 23:43:30 INFO DAGScheduler: Got job 1 (json at SQLDemo1.scala:30) with 1 output partitions

16/11/04 23:43:30 INFO DAGScheduler: Final stage: ResultStage 1 (json at SQLDemo1.scala:30)

16/11/04 23:43:30 INFO DAGScheduler: Parents of final stage: List()

16/11/04 23:43:30 INFO DAGScheduler: Missing parents: List()

16/11/04 23:43:30 INFO DAGScheduler: Submitting ResultStage 1 (ParallelCollectionRDD[9] at json at SQLDemo1.scala:30), which has no missing parents

16/11/04 23:43:30 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 63.2 KB, free 242.7 KB)

16/11/04 23:43:30 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 21.5 KB, free 264.2 KB)

16/11/04 23:43:30 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 192.168.215.133:54971 (size: 21.5 KB, free: 517.4 MB)

16/11/04 23:43:30 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1006

16/11/04 23:43:30 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 1 (ParallelCollectionRDD[9] at json at SQLDemo1.scala:30)

16/11/04 23:43:30 INFO TaskSchedulerImpl: Adding task set 1.0 with 1 tasks

16/11/04 23:43:30 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 2, hadoop3, partition 0,PROCESS_LOCAL, 2537 bytes)

16/11/04 23:43:30 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on hadoop3:39995 (size: 21.5 KB, free: 517.4 MB)

16/11/04 23:43:31 INFO DAGScheduler: ResultStage 1 (json at SQLDemo1.scala:30) finished in 1.024 s

16/11/04 23:43:31 INFO DAGScheduler: Job 1 finished: json at SQLDemo1.scala:30, took 1.175741 s

16/11/04 23:43:31 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 2) in 1026 ms on hadoop3 (1/1)

16/11/04 23:43:31 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

16/11/04 23:43:32 INFO ContextCleaner: Cleaned accumulator 3

16/11/04 23:43:32 INFO BlockManagerInfo: Removed broadcast_2_piece0 on hadoop3:39995 in memory (size: 21.5 KB, free: 517.4 MB)

16/11/04 23:43:32 INFO BlockManagerInfo: Removed broadcast_2_piece0 on 192.168.215.133:54971 in memory (size: 21.5 KB, free: 517.4 MB)

16/11/04 23:43:32 INFO ContextCleaner: Cleaned accumulator 4

16/11/04 23:43:32 INFO BlockManagerInfo: Removed broadcast_1_piece0 on 192.168.215.133:54971 in memory (size: 4.1 KB, free: 517.4 MB)

16/11/04 23:43:32 INFO BlockManagerInfo: Removed broadcast_1_piece0 on hadoop3:39995 in memory (size: 4.1 KB, free: 517.4 MB)

16/11/04 23:43:32 INFO DefaultWriterContainer: Job job_201611042343_0000 committed.

16/11/04 23:43:32 INFO JSONRelation: Listing hdfs://hadoop1:9000/sparktest4 on driver

16/11/04 23:43:32 INFO JSONRelation: Listing hdfs://hadoop1:9000/sparktest4 on driver

16/11/04 23:43:32 INFO SparkUI: Stopped Spark web UI at http://192.168.215.133:4040

16/11/04 23:43:32 INFO SparkDeploySchedulerBackend: Shutting down all executors

16/11/04 23:43:32 INFO SparkDeploySchedulerBackend: Asking each executor to shut down

16/11/04 23:43:32 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

16/11/04 23:43:32 INFO MemoryStore: MemoryStore cleared

16/11/04 23:43:32 INFO BlockManager: BlockManager stopped

16/11/04 23:43:32 INFO BlockManagerMaster: BlockManagerMaster stopped

16/11/04 23:43:32 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

16/11/04 23:43:32 INFO SparkContext: Successfully stopped SparkContext

16/11/04 23:43:32 INFO RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/11/04 23:43:32 INFO ShutdownHookManager: Shutdown hook called

16/11/04 23:43:32 INFO RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/11/04 23:43:32 INFO ShutdownHookManager: Deleting directory /tmp/spark-4b2b30f5-6bcb-485c-b619-44a9015257e8

16/11/04 23:43:32 INFO ShutdownHookManager: Deleting directory /tmp/spark-4b2b30f5-6bcb-485c-b619-44a9015257e8/httpd-b4a23a5e-0127-406c-888f-a44d7b4a17ac
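
The log above shows two jobs, both triggered by the `json` call at `SQLDemo1.scala:30`: job 0 took about 15.9 s (almost all of it in task 0.0, 15642 ms), and job 1 took about 1.18 s. When the log is long, it can help to pull those `DAGScheduler: Job N finished` lines out programmatically. The following is a small sketch in plain Scala; the object name `JobTimings` and the helper `jobSeconds` are hypothetical, and the regular expression assumes the Spark 1.6 log format shown above.

```scala
object JobTimings {
  // Matches lines such as:
  // "... INFO DAGScheduler: Job 0 finished: json at SQLDemo1.scala:30, took 15.899557 s"
  private val JobFinished =
    """.*DAGScheduler: Job (\d+) finished: .*, took ([0-9.]+) s""".r

  // Returns (job id, duration in seconds) for every "Job N finished" line;
  // all other lines are ignored.
  def jobSeconds(lines: Seq[String]): Seq[(Int, Double)] =
    lines.collect { case JobFinished(id, secs) => (id.toInt, secs.toDouble) }

  def main(args: Array[String]): Unit = {
    // Three lines copied from the log above.
    val log = Seq(
      "16/11/04 23:43:30 INFO DAGScheduler: Job 0 finished: json at SQLDemo1.scala:30, took 15.899557 s",
      "16/11/04 23:43:31 INFO DAGScheduler: Job 1 finished: json at SQLDemo1.scala:30, took 1.175741 s",
      "16/11/04 23:43:32 INFO MemoryStore: MemoryStore cleared"
    )
    jobSeconds(log).foreach { case (id, s) => println(s"job $id took $s s") }
  }
}
```

To use it on a real run, the driver output could be redirected to a file and its lines passed to `jobSeconds`, for example via `scala.io.Source.fromFile(path).getLines().toSeq`.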

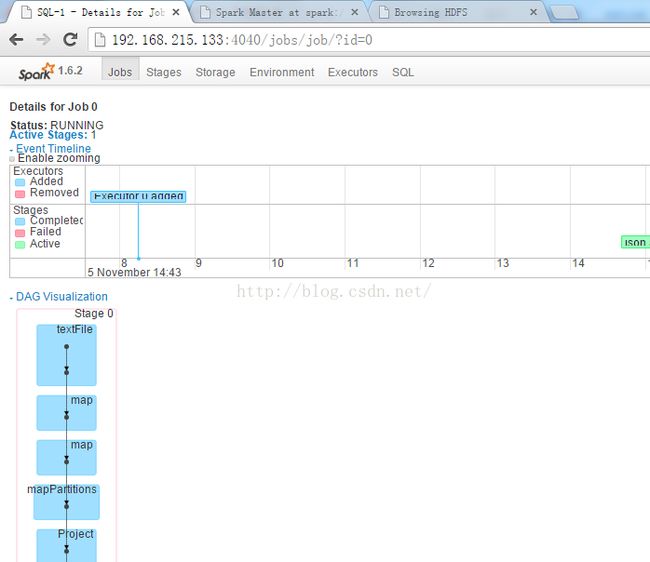

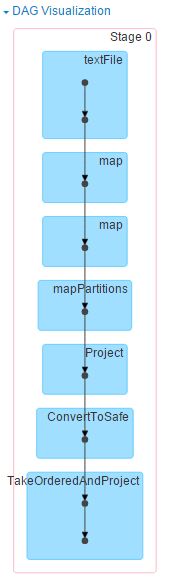

4. Viewing the execution process in the web UI