Container Cloud Load Balancing, Part 5: Managing Container-Based IPVS with keepalived

1. Introduction

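The IPVS director binds a VIP. This VIP is the only access point for downstream clients, so it has to be highly available. In addition, the load-balancing policy behind each VIP must be updated as the deployment state of the backend real servers changes.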

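Keepalived is open-source software that provides two main functions: load balancing and high availability. Load balancing depends on the Linux kernel's virtual server module (IPVS). High availability uses the VRRP protocol to fail over between multiple machines.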

Reposted from https://blog.csdn.net/cloudvtech

2. Configuring keepalived

2.1 Test environment

Director node 1: 200.222.0.73

Director node 2: 200.222.0.74

Real Server 1: 200.222.0.87

Real Server 2: 200.222.0.89

VIP: 200.222.0.113

2.2 Configure iptables to allow VRRP traffic

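```shell
# VRRP runs directly on top of IP as protocol number 112. It is not a UDP
# service, so match "-p 112" instead of "-p udp --dport 112".
# IN_public_allow is the chain firewalld generates for its "public" zone.
iptables -t filter -A IN_public_allow -p 112 -m conntrack --ctstate NEW -j ACCEPT
```

2.3 Install keepalived in a CentOS container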

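```shell
# Pull the CentOS base image
docker pull centos

# --privileged and --net=host put the container in the host's network stack,
# so keepalived can bind the VIP on the host interface and program the host's IPVS table
docker run -td --privileged --net=host --name=keepalived centos

docker exec -it keepalived bash

# Inside the container: network tools, keepalived and the ipvsadm CLI
yum install -y net-tools iproute
yum install -y keepalived ipvsadm
```

Package the container as a Docker image.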
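The original does not show the packaging commands. The following is a minimal sketch built on several assumptions: the image name `keepalived-ipvs:latest` is hypothetical, node 1 can reach node 2 over SSH, and the function name `package_keepalived_image` is illustrative. It snapshots the container with `docker commit` and copies the image to the second director with `docker save`/`docker load`. `DOCKER` and `SSH` are hypothetical dry-run hooks: set them to `echo` to print the commands instead of running them.

```shell
# Hypothetical helper: snapshot the prepared "keepalived" container into an image
# and copy that image to the second director. The image name and SSH target are
# illustrative assumptions. DOCKER=echo / SSH=echo turn the calls into a dry run.
IMAGE="${IMAGE:-keepalived-ipvs:latest}"

package_keepalived_image() {
    peer="$1"  # e.g. 200.222.0.74 (Director node 2)
    # Save the container's filesystem (keepalived and ipvsadm installed) as an image
    ${DOCKER:-docker} commit keepalived "$IMAGE" || return 1
    # Stream the image to the peer over SSH and load it there
    ${DOCKER:-docker} save "$IMAGE" | ${SSH:-ssh} "$peer" docker load
}
```

On node 1 you would run `package_keepalived_image 200.222.0.74`. This approach does not need a registry, but every transfer copies the full image.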

2.4 Start the container on both Director nodes
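The original gives no command for this step. A minimal sketch, assuming the image was saved under the hypothetical name `keepalived-ipvs:latest`, reuses the flags from section 2.3. IPVS is implemented in the host kernel, so the `ip_vs` module is loaded on the host and not inside the container. `start_director_container` is a hypothetical helper, and `DOCKER` and `MODPROBE` are hypothetical dry-run hooks.

```shell
# Hypothetical helper, run on each Director node. The image name is an assumption.
IMAGE="${IMAGE:-keepalived-ipvs:latest}"

start_director_container() {
    # IPVS lives in the host kernel, so load it on the host (needs root)
    ${MODPROBE:-modprobe} ip_vs || return 1
    # Same flags as in 2.3: privileged + host network, so the VIP and the
    # IPVS table belong to the host's network namespace
    ${DOCKER:-docker} run -td --privileged --net=host --name=keepalived "$IMAGE"
}
```

On node 1, the build container from 2.3 already uses the name `keepalived`. Remove it first with `docker rm -f keepalived`, or choose another name.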

2.5 Configure keepalived on Director node 1

/etc/keepalived/keepalived.conf

```
global_defs {
    notification_email {
        [email protected]
        [email protected]
    }
    notification_email_from [email protected]
    smtp_server localhost
    smtp_connect_timeout 30
}

vrrp_instance VI_1 {
    state MASTER
    interface ens192
    # Must be identical on both directors
    virtual_router_id 51
    # The live node with the higher priority becomes MASTER
    priority 100
    advert_int 1
    # Send VRRP advertisements by unicast to the peer instead of multicast
    unicast_src_ip 200.222.0.73
    unicast_peer {
        200.222.0.74
    }
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        200.222.0.113
    }
}

virtual_server 200.222.0.113 80 {
    delay_loop 6
    lb_algo wlc
    # Direct routing: real servers reply to clients directly
    lb_kind DR
    persistence_timeout 600
    protocol TCP

    real_server 200.222.0.87 80 {
        weight 100
        TCP_CHECK {
            connect_timeout 3
        }
    }

    real_server 200.222.0.89 80 {
        weight 100
        TCP_CHECK {
            connect_timeout 3
        }
    }
}
```

2.6 Configure keepalived on Director node 2

/etc/keepalived/keepalived.conf

global_defs {

notification_email {

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server localhost

smtp_connect_timeout 30

}

vrrp_instance VI_1 {

state BACKUP

interface ens192

virtual_router_id 51

priority 99

advert_int 1

unicast_src_ip 200.222.0.74

unicast_peer {

200.222.0.73

}

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

200.222.0.113

}

}

virtual_server 200.222.0.113 80 {

delay_loop 6

lb_algo wlc

lb_kind DR

persistence_timeout 600

protocol TCP

real_server 200.222.0.87 80 {

weight 100

TCP_CHECK {

connect_timeout 3

}

}

real_server 200.222.0.89 80 {

weight 100

TCP_CHECK {

connect_timeout 3

}

}

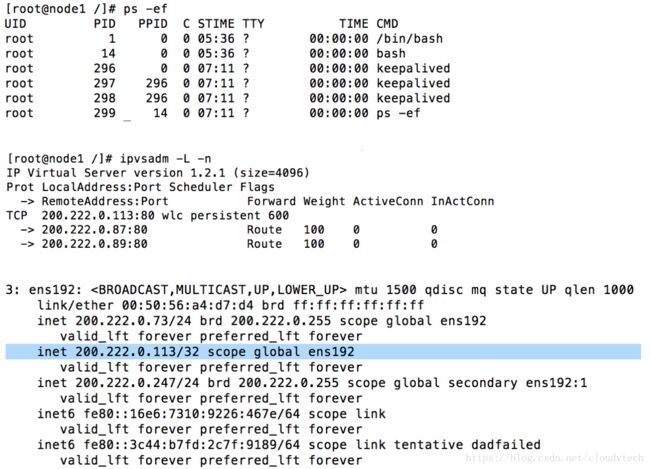

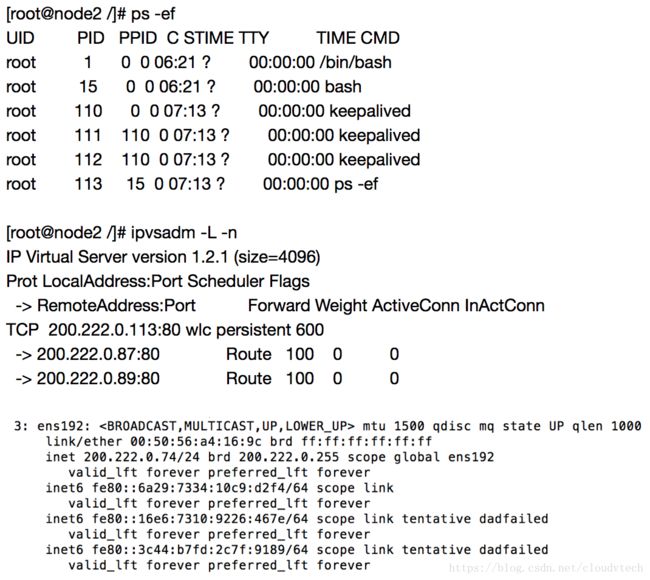

}2.7 启动Director node 1/2的keepalived

可以看到keepalived仅仅在node1上绑定了VIP
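The original does not show the startup command. This minimal sketch assumes two things: the configuration sits at /etc/keepalived/keepalived.conf inside the container, and the container is named `keepalived` as in 2.3. It starts the daemon with `docker exec` and then checks whether the VIP is bound. `vip_present` is a hypothetical helper. It reads `ip -o -4 addr show` output on stdin, so you can check it without touching the network.

```shell
# Start keepalived inside the running container (-D logs detailed messages)
start_keepalived() {
    ${DOCKER:-docker} exec keepalived keepalived -D -f /etc/keepalived/keepalived.conf
}

# Hypothetical helper: succeed if the VIP appears in `ip -o -4 addr show` output (stdin).
# -F treats the dots literally; the trailing "/" keeps 200.222.0.11 from
# matching 200.222.0.113.
vip_present() {
    grep -qF "inet $1/"
}

# Usage on a Director node:
#   start_keepalived
#   ip -o -4 addr show dev ens192 | vip_present 200.222.0.113 && echo "VIP bound here"
#   ipvsadm -Ln    # should list the virtual_server and both real_servers
```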

2.8 Inspect the VRRP exchange on Director node 1/2

```shell
# -l line-buffers tcpdump's output so grep shows packets as they arrive
tcpdump -l -vvv -an -i ens192 | grep "vrid 51"
```

The active node continuously sends VRRP advertisements to the backup node, once per second because advert_int is 1.

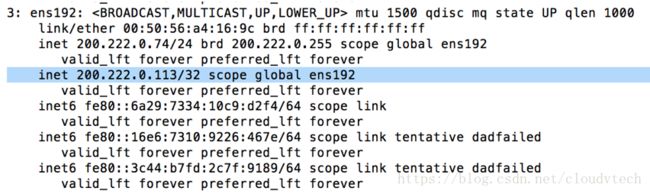
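To see which node is currently advertising, you can reduce the filtered lines to "source priority" pairs. The following is a minimal sketch with a hypothetical `vrrp_summary` function. It assumes each tcpdump VRRP line contains `SRC > DST:` followed by `vrid N, prio N`. That is the usual shape of tcpdump's VRRPv2 output, but it may vary between versions.

```shell
# Hypothetical helper: read tcpdump VRRP lines on stdin and print "<source IP> <priority>"
vrrp_summary() {
    awk '/vrid [0-9]+/ {
        # Source address: the dotted address immediately before " > "
        if (!match($0, /[0-9.]+ > /)) next
        src = substr($0, RSTART, RLENGTH - 3)
        # Advertised priority: the number after "prio "
        if (!match($0, /prio [0-9]+/)) next
        print src, substr($0, RSTART + 5, RLENGTH - 5)
    }'
}

# Usage on either node ("ip proto 112" captures only VRRP):
#   tcpdump -l -vvv -n -i ens192 'ip proto 112' | vrrp_summary
```

While node 1 is master, the lines should show 200.222.0.73 with priority 100.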

2.9 IPVS director failover with keepalived

After keepalived on Director node 1 is stopped, the VIP is bound on Director node 2.

The new active node (Director node 2) now continuously sends VRRP advertisements to node 1.

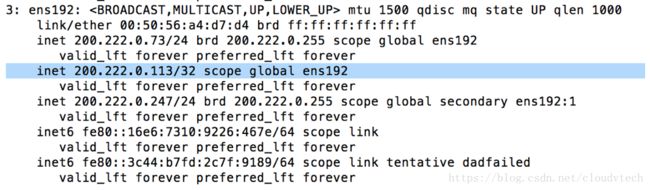
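The original does not say how the failure was triggered. One way to reproduce it is to stop keepalived on node 1 and poll node 2 until the VIP appears. This assumes the container layout from 2.3 and keepalived's default pid file /var/run/keepalived.pid (both assumptions). A graceful stop lets node 1 send a priority-0 advertisement, so node 2 can take over without waiting for the master-down timer. `stop_keepalived` and `wait_for_vip` are hypothetical helpers, and `IP` is a dry-run hook for the `ip` command.

```shell
# On Director node 1: stop keepalived gracefully (SIGTERM) via its default pid file
stop_keepalived() {
    ${DOCKER:-docker} exec keepalived sh -c 'kill "$(cat /var/run/keepalived.pid)"'
}

# On Director node 2: poll once per second until the VIP is bound, or give up.
# Usage: wait_for_vip <vip> <timeout-seconds>
wait_for_vip() {
    vip="$1"; tries="$2"
    while [ "$tries" -gt 0 ]; do
        if ${IP:-ip} -o -4 addr show | grep -qF "inet $vip/"; then
            echo "VIP $vip is bound on $(uname -n)"
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    echo "VIP $vip not bound after $2 seconds" >&2
    return 1
}
```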

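2.10 Restart keepalived on node 1, and node 1 becomes the active IPVS director again

Node 1 has the higher priority (100 vs. 99), and keepalived preempts by default. So node 1 takes the VIP back as soon as it rejoins.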
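Sections 2.9 and 2.10 follow from VRRP's election rule. Among the live routers, the one with the highest priority becomes master. Per RFC 3768, a priority tie goes to the higher primary IP address. The following toy model of that rule uses a hypothetical `vrrp_master` function, which is not part of keepalived. It makes the two outcomes explicit.

```shell
# Toy model of the VRRP master election (not keepalived code).
# Input on stdin: one "<ip> <priority>" line per live director.
# Output: the winning IP: highest priority, ties broken by the higher IP address.
vrrp_master() {
    awk '{
        split($1, o, ".")
        # Turn the dotted address into one number so it can be compared numerically
        printf "%d %.0f %s\n", $2, ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4], $1
    }' | sort -k1,1nr -k2,2nr | awk 'NR == 1 { print $3 }'
}

# Both directors alive (2.7 and 2.10): prints 200.222.0.73
#   printf '200.222.0.73 100\n200.222.0.74 99\n' | vrrp_master
# Node 1 down (2.9): prints 200.222.0.74
#   printf '200.222.0.74 99\n' | vrrp_master
```

The model assumes preemption, which is keepalived's default. With `nopreempt` set on a BACKUP-state instance, a recovered higher-priority node would stay BACKUP instead of reclaiming the VIP.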
