
I. Environment

| OS | Hostname | Node role | IP | Notes |
| --- | --- | --- | --- | --- |
| CentOS 7.5 x86_64 | k8s-master | master/etcd/registry | 192.168.168.2 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, docker, calico-image |
| CentOS 7.5 x86_64 | work-node01 | node01/etcd | 192.168.168.3 | kube-proxy, kubelet, etcd, docker, calico |
| CentOS 7.5 x86_64 | work-node02 | node02/etcd | 192.168.168.4 | kube-proxy, kubelet, etcd, docker, calico |

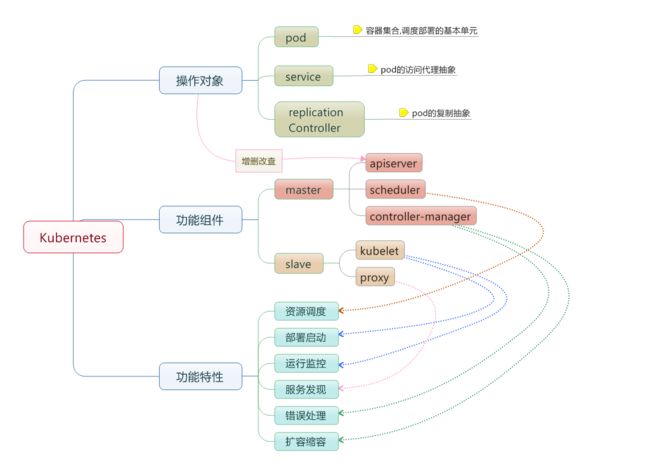

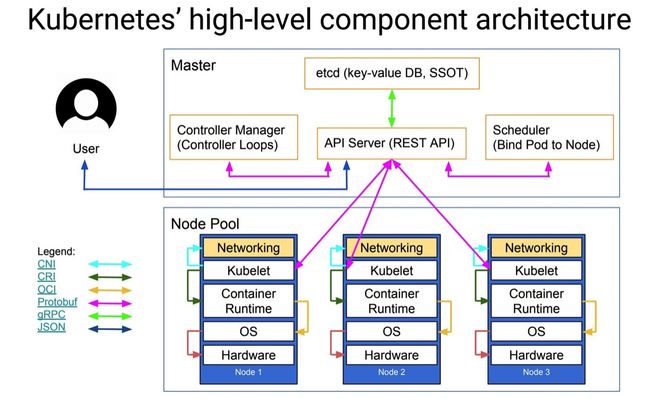

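Functions of the cluster modules:

Master node:

The master node consists of four main modules: the API server, the scheduler, the controller manager, and etcd.

API server: The API server exposes the Kubernetes API as a RESTful service. It is the single entry point for management commands: every create, delete, update, or query on a resource goes through the API server, which then persists it to etcd. As the figure shows, kubectl (the client tool shipped with Kubernetes, which wraps calls to the Kubernetes API) talks directly to the API server.

Scheduler: The scheduler assigns Pods to suitable Nodes. Viewed as a black box, its input is a Pod plus a list of candidate Nodes, and its output is a binding between the Pod and one Node. Kubernetes ships with scheduling algorithms and also exposes an interface so that users can define their own scheduling algorithm to fit their needs.

Controller manager: If the API server does the front-end work, the controller manager does the back-end work. Each resource type has a corresponding controller, and the controller manager is responsible for running these controllers. For example, once a Pod has been created through the API server, the API server's task is done; keeping that Pod in its desired state from then on is the job of the controllers.

etcd: etcd is a highly available key-value store. Kubernetes uses it to store the state of every resource, and the RESTful API is served on top of that stored state.

Node:

Each Node runs two main Kubernetes modules: kubelet and kube-proxy.

kube-proxy: This module implements service discovery and reverse proxying in Kubernetes. kube-proxy forwards TCP and UDP connections and by default uses a round-robin algorithm to spread client traffic across the backend Pods of a Service. For service discovery, kube-proxy watches Service and Endpoints objects for changes (through the API server, which stores them in etcd) and maintains a Service-to-Endpoints mapping, so that changes to backend Pod IPs do not affect clients. kube-proxy also supports session affinity.

kubelet: The kubelet is the master's agent on each Node and the most important module on the Node. It maintains and manages all containers on that Node, except containers that were not created through Kubernetes, which it does not manage. In essence, it keeps the running state of each Pod consistent with the desired state.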

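II. Preparation on all three hosts

1) Update packages and the kernel

yum -y update

2) Stop and disable the firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

3) Disable SELinux

vi /etc/selinux/config

Change SELINUX=enforcing to SELINUX=disabled. The change takes effect after a reboot.

4) Install common tools

yum -y install net-tools ntpdate conntrack-tools

5) Tune kernel parameters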

net.ipv4.ip_local_port_range = 30000 60999

net.netfilter.nf_conntrack_max = 26214400

net.netfilter.nf_conntrack_tcp_timeout_established = 86400

net.netfilter.nf_conntrack_tcp_timeout_close_wait = 3600
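The guide lists the kernel parameters but not where to put them. One possible way to persist and load them is sketched below; the helper name `write_k8s_sysctl` and the file name `90-k8s.conf` are assumptions made for illustration, not part of the original procedure.

```shell
# Sketch: write the four kernel parameters listed above to a sysctl drop-in file.
write_k8s_sysctl() {
    # $1: target file, e.g. /etc/sysctl.d/90-k8s.conf (hypothetical name)
    local target="$1"
    cat > "$target" <<'EOF'
net.ipv4.ip_local_port_range = 30000 60999
net.netfilter.nf_conntrack_max = 26214400
net.netfilter.nf_conntrack_tcp_timeout_established = 86400
net.netfilter.nf_conntrack_tcp_timeout_close_wait = 3600
EOF
}

# Typical use as root on each host:
#   modprobe nf_conntrack      # the net.netfilter.* keys exist only once this module is loaded
#   write_k8s_sysctl /etc/sysctl.d/90-k8s.conf
#   sysctl --system            # reload all sysctl configuration files
```

Keeping the values in a drop-in file means they survive a reboot, whereas values set with `sysctl -w` alone do not.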

III. Set the hostname on each of the three hosts

1) k8s-master

hostnamectl --static set-hostname k8s-master

2) work-node01

hostnamectl --static set-hostname work-node01

3) work-node02

hostnamectl --static set-hostname work-node02

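IV. Create the CA certificates

1. Create the directories for generating and storing certificates (on all three hosts)

mkdir /root/ssl

mkdir -p /opt/kubernetes/{conf,bin,ssl,yaml}

2. Set environment variables (on all three hosts)

vi /etc/profile.d/kubernetes.sh

Add the following two lines to the file, then source it:

K8S_HOME=/opt/kubernetes

export PATH=$K8S_HOME/bin/:$PATH

source /etc/profile.d/kubernetes.sh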

3. Install CFSSL and copy it to node01 and node02

cd /root/ssl

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl*

mv cfssl-certinfo_linux-amd64 /opt/kubernetes/bin/cfssl-certinfo

mv cfssljson_linux-amd64 /opt/kubernetes/bin/cfssljson

mv cfssl_linux-amd64 /opt/kubernetes/bin/cfssl

scp /opt/kubernetes/bin/cfssl* 192.168.168.3:/opt/kubernetes/bin

scp /opt/kubernetes/bin/cfssl* 192.168.168.4:/opt/kubernetes/bin

4. Create the JSON configuration file used to generate the CA files

cd /root/ssl

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

server auth means a client can use this CA to verify the certificate presented by a server.

client auth means a server can use this CA to verify the certificate presented by a client.

5. Create the JSON configuration file for the CA certificate signing request (CSR)

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

6. Generate the CA certificate and private key

# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

# ls ca*

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

Distribute the certificates to each node with scp

# cp ca.csr ca.pem ca-key.pem ca-config.json /opt/kubernetes/ssl

# scp ca.csr ca.pem ca-key.pem ca-config.json root@192.168.168.3:/opt/kubernetes/ssl

# scp ca.csr ca.pem ca-key.pem ca-config.json root@192.168.168.4:/opt/kubernetes/ssl
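After the CA files have been copied, it can be worth confirming on each host that the certificate is readable and carries the expected subject. The helper below is a minimal sketch built on the standard `openssl x509` command; the function name `cert_summary` is an assumption for illustration.

```shell
# Sketch: print subject, issuer and validity dates of a PEM certificate.
cert_summary() {
    # $1: path to a PEM-encoded certificate, e.g. /opt/kubernetes/ssl/ca.pem
    openssl x509 -in "$1" -noout -subject -issuer -dates
}

# Example, run on any of the three hosts:
#   cert_summary /opt/kubernetes/ssl/ca.pem
```

For a self-signed CA such as this one, the subject and issuer lines should name the same entity.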

7. Create the etcd certificate signing request

# cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.168.2",

"192.168.168.3",

"192.168.168.4",

"k8s-master",

"work-node01",

"work-node02"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

8. Generate the etcd certificate and private key

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

# ls etc*

etcd.csr etcd-key.pem etcd.pem

Distribute the certificate files

# cp /root/ssl/etcd*.pem /opt/kubernetes/ssl

# scp /root/ssl/etcd*.pem root@192.168.168.3:/opt/kubernetes/ssl

# scp /root/ssl/etcd*.pem root@192.168.168.4:/opt/kubernetes/ssl

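V. Install and configure the etcd cluster (the clocks of the three hosts must be synchronized first)

1. Update /etc/hosts (on all three hosts)

Edit the file with vi /etc/hosts, or append the entries in one step:

# echo '192.168.168.2 k8s-master

192.168.168.3 work-node01

192.168.168.4 work-node02' >> /etc/hosts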
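Appending with `>>` adds the same lines again every time the step is repeated. A small guard can make the step safe to re-run; the following is only a sketch, and the helper name `add_host_entry` is hypothetical.

```shell
# Sketch: append a hosts entry only if the hostname is not already present,
# so the step can be repeated without creating duplicate lines.
add_host_entry() {
    # $1: hosts file, $2: IP address, $3: hostname
    local file="$1" ip="$2" name="$3"
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Typical use as root on each host:
#   add_host_entry /etc/hosts 192.168.168.2 k8s-master
#   add_host_entry /etc/hosts 192.168.168.3 work-node01
#   add_host_entry /etc/hosts 192.168.168.4 work-node02
```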

2. Download the etcd release package

wget https://github.com/coreos/etcd/releases/download/v3.3.7/etcd-v3.3.7-linux-amd64.tar.gz

3. Extract and install etcd (same on all three hosts)

mkdir /var/lib/etcd

tar -zxvf etcd-v3.3.7-linux-amd64.tar.gz

cp etcd-v3.3.7-linux-amd64/{etcd,etcdctl} /opt/kubernetes/bin

4. Create the etcd systemd unit file

cat > /usr/lib/systemd/system/etcd.service <<EOF

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd

EnvironmentFile=/opt/kubernetes/conf/etcd.conf

# set GOMAXPROCS to number of processors

ExecStart=/bin/bash -c "GOMAXPROCS=1 /opt/kubernetes/bin/etcd"

[Install]

WantedBy=multi-user.target

EOF

Distribute the etcd.service file to each node

scp /usr/lib/systemd/system/etcd.service root@192.168.168.3:/usr/lib/systemd/system/etcd.service

scp /usr/lib/systemd/system/etcd.service root@192.168.168.4:/usr/lib/systemd/system/etcd.service

5. Edit the etcd.conf file on k8s-master (192.168.168.2)

vi /opt/kubernetes/conf/etcd.conf

#[Member]

#ETCD_CORS=""

ETCD_DATA_DIR="/var/lib/etcd/k8s-master.etcd"

#ETCD_WAL_DIR=""

ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379,http://127.0.0.1:4001"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

ETCD_NAME="k8s-master"

#ETCD_SNAPSHOT_COUNT="100000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_QUOTA_BACKEND_BYTES="0"

#ETCD_MAX_REQUEST_BYTES="1572864"

#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"

#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"

#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"

#

#[Clustering]

#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.168.2:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.168.2:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_DISCOVERY_SRV=""

ETCD_INITIAL_CLUSTER="k8s-master=https://192.168.168.2:2380,work-node01=https://192.168.168.3:2380,work-node02=https://192.168.168.4:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_STRICT_RECONFIG_CHECK="true"

#ETCD_ENABLE_V2="true"

#

#[Proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[Security]

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

#

#[Logging]

#ETCD_DEBUG="false"

#ETCD_LOG_PACKAGE_LEVELS=""

#ETCD_LOG_OUTPUT="default"

#

#[Unsafe]

#ETCD_FORCE_NEW_CLUSTER="false"

#

#[Version]

#ETCD_VERSION="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#

#[Profiling]

#ETCD_ENABLE_PPROF="false"

#ETCD_METRICS="basic"

#

#[Auth]

#ETCD_AUTH_TOKEN="simple"

6. Edit the etcd.conf file on work-node01 (192.168.168.3)

vi /opt/kubernetes/conf/etcd.conf

#[Member]

#ETCD_CORS=""

ETCD_DATA_DIR="/var/lib/etcd/work-node01.etcd"

#ETCD_WAL_DIR=""

ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379,https://127.0.0.1:4001"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

ETCD_NAME="work-node01"

#ETCD_SNAPSHOT_COUNT="100000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_QUOTA_BACKEND_BYTES="0"

#ETCD_MAX_REQUEST_BYTES="1572864"

#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"

#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"

#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"

#

#[Clustering]

#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.168.3:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.168.3:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_DISCOVERY_SRV=""

ETCD_INITIAL_CLUSTER="k8s-master=https://192.168.168.2:2380,work-node01=https://192.168.168.3:2380,work-node02=https://192.168.168.4:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_STRICT_RECONFIG_CHECK="true"

#ETCD_ENABLE_V2="true"

#

#[Proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[Security]

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

#

#[Logging]

#ETCD_DEBUG="false"

#ETCD_LOG_PACKAGE_LEVELS=""

#ETCD_LOG_OUTPUT="default"

#

#[Unsafe]

#ETCD_FORCE_NEW_CLUSTER="false"

#

#[Version]

#ETCD_VERSION="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#

#[Profiling]

#ETCD_ENABLE_PPROF="false"

#ETCD_METRICS="basic"

#

#[Auth]

#ETCD_AUTH_TOKEN="simple"

7. Edit the etcd.conf file on work-node02 (192.168.168.4)

vi /opt/kubernetes/conf/etcd.conf

#[Member]

#ETCD_CORS=""

ETCD_DATA_DIR="/var/lib/etcd/work-node02.etcd"

#ETCD_WAL_DIR=""

ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379,https://127.0.0.1:4001"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

ETCD_NAME="work-node02"

#ETCD_SNAPSHOT_COUNT="100000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_QUOTA_BACKEND_BYTES="0"

#ETCD_MAX_REQUEST_BYTES="1572864"

#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"

#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"

#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"

#

#[Clustering]

#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.168.4:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.168.4:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_DISCOVERY_SRV=""

ETCD_INITIAL_CLUSTER="k8s-master=https://192.168.168.2:2380,work-node01=https://192.168.168.3:2380,work-node02=https://192.168.168.4:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_STRICT_RECONFIG_CHECK="true"

#ETCD_ENABLE_V2="true"

#

#[Proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[Security]

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

#

#[Logging]

#ETCD_DEBUG="false"

#ETCD_LOG_PACKAGE_LEVELS=""

#ETCD_LOG_OUTPUT="default"

#

#[Unsafe]

#ETCD_FORCE_NEW_CLUSTER="false"

#

#[Version]

#ETCD_VERSION="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#

#[Profiling]

#ETCD_ENABLE_PPROF="false"

#ETCD_METRICS="basic"

#

#[Auth]

#ETCD_AUTH_TOKEN="simple"
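The three etcd.conf files above are almost identical: the per-host values are the member name, the data directory and the advertised URLs (the k8s-master file additionally uses http rather than https for its 127.0.0.1:4001 listener). As a rough aid when preparing the files, the sketch below prints just those per-host lines; the function name `etcd_node_settings` is an assumption for illustration.

```shell
# Sketch: print the etcd.conf settings that differ from host to host.
etcd_node_settings() {
    # $1: etcd member name (the hostname), $2: IP address of that host
    local name="$1" ip="$2"
    cat <<EOF
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/${name}.etcd"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
EOF
}

# Example: etcd_node_settings work-node01 192.168.168.3
```

Comparing this output with the file on each host is a quick way to catch a copied file that still carries another node's name or IP.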

8. Start etcd on every etcd node and enable it at boot

# systemctl daemon-reload

# systemctl enable etcd

# systemctl start etcd.service

# systemctl status etcd.service

9. Test and verify the etcd cluster configuration on every etcd node

# etcd --version    # show the installed etcd version

etcd Version: 3.3.7

Git SHA: 56536de55

Go Version: go1.9.6

Go OS/Arch: linux/amd64

Check the health of the etcd cluster

# etcdctl --endpoints=https://192.168.168.2:2379 \

--ca-file=/opt/kubernetes/ssl/ca.pem \

--cert-file=/opt/kubernetes/ssl/etcd.pem \

--key-file=/opt/kubernetes/ssl/etcd-key.pem cluster-health

member b1840b0a404e1103 is healthy: got healthy result from https://192.168.168.2:2379

member d15b66900329a12d is healthy: got healthy result from https://192.168.168.4:2379

member f9794412c46a9cb0 is healthy: got healthy result from https://192.168.168.3:2379

cluster is healthy

List the etcd cluster members

# etcdctl --endpoints=https://192.168.168.2:2379 \

--ca-file=/opt/kubernetes/ssl/ca.pem \

--cert-file=/opt/kubernetes/ssl/etcd.pem \

--key-file=/opt/kubernetes/ssl/etcd-key.pem member list

b1840b0a404e1103: name=k8s-master peerURLs=https://192.168.168.2:2380 clientURLs=https://192.168.168.2:2379 isLeader=false

d15b66900329a12d: name=work-node02 peerURLs=https://192.168.168.4:2380 clientURLs=https://192.168.168.4:2379 isLeader=true

f9794412c46a9cb0: name=work-node01 peerURLs=https://192.168.168.3:2380 clientURLs=https://192.168.168.3:2379 isLeader=false
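The checks above query a single endpoint. The same comma-separated list of client URLs appears again later in this guide (the kube-apiserver etcd servers and the calico etcd endpoints), so a tiny helper to build it may reduce typing errors. This is a sketch only; `etcd_endpoints` is a hypothetical name.

```shell
# Sketch: build the comma-separated list of etcd client URLs from member IPs.
etcd_endpoints() {
    # $@: member IP addresses; prints https://IP:2379 entries joined by commas
    local ip out=""
    for ip in "$@"; do
        out="${out:+$out,}https://${ip}:2379"
    done
    printf '%s\n' "$out"
}

# Example:
#   etcdctl --endpoints="$(etcd_endpoints 192.168.168.2 192.168.168.3 192.168.168.4)" \
#     --ca-file=/opt/kubernetes/ssl/ca.pem \
#     --cert-file=/opt/kubernetes/ssl/etcd.pem \
#     --key-file=/opt/kubernetes/ssl/etcd-key.pem cluster-health
```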

VI. Install docker-engine on all three hosts

1. For the detailed steps, see "Installing Docker on Oracle Linux 7".

2. Configure Docker to connect to the etcd cluster

Set up Docker's JSON configuration file

# vi /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=cgroupfs"],

"registry-mirrors": ["https://wghlmi3i.mirror.aliyuncs.com"],

"cluster-store": "etcd://192.168.168.2:2379,192.168.168.3:2379,192.168.168.4:2379"

}

Note: the docker bip address must be different on each node, for example 172.26.1.1/24 on node01 and 172.26.2.1/24 on node02.

Set up the Docker systemd unit file

# vi /usr/lib/systemd/system/docker.service

Change

ExecStart=/usr/bin/dockerd

to

ExecStart=/usr/bin/dockerd --tlsverify \

--tlscacert=/opt/kubernetes/ssl/ca.pem \

--tlscert=/opt/kubernetes/ssl/etcd.pem \

--tlskey=/opt/kubernetes/ssl/etcd-key.pem -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock

# systemctl daemon-reload

# systemctl enable docker.service

# systemctl start docker.service

# systemctl status docker.service

3. Test and verify the Docker TLS configuration

VII. Install and configure the Kubernetes cluster

1. Download the Kubernetes server release package (this guide uses v1.10.4)

kubernetes-server-linux-amd64.tar.gz

2. Extract the Kubernetes archive, which creates a kubernetes directory

tar -zxvf kubernetes-server-linux-amd64.tar.gz

3. Configure k8s-master (192.168.168.2)

1) Copy the Kubernetes executables to /opt/kubernetes/bin

# cp -r /opt/software/kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} /opt/kubernetes/bin/

# scp /opt/software/kubernetes/server/bin/{kubectl,kube-proxy,kubelet} root@192.168.168.3:/opt/kubernetes/bin/

# scp /opt/software/kubernetes/server/bin/{kubectl,kube-proxy,kubelet} root@192.168.168.4:/opt/kubernetes/bin/

2) Create the JSON configuration file for the Kubernetes CSR:

# cd /root/ssl

# cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.168.2",

"192.168.168.3",

"192.168.168.4",

"10.1.0.1",

"localhost",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Note: 10.1.0.1 is the first IP address in the service cluster IP range.
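If a different service range is chosen, the address in the certificate has to change with it. The sketch below derives that first address from a CIDR, assuming the CIDR is written with its network address (for example 10.1.0.0/16) and that the last octet is below 255; `first_service_ip` is a hypothetical helper name.

```shell
# Sketch: derive the first usable address of a service CIDR.
first_service_ip() {
    # $1: service CIDR written with its network address, e.g. 10.1.0.0/16
    local network="${1%/*}"      # strip the prefix length
    local head="${network%.*}"   # first three octets
    local last="${network##*.}"  # last octet
    printf '%s.%d\n' "$head" "$((last + 1))"
}

# Example: first_service_ip 10.1.0.0/16
```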

3) Generate the Kubernetes certificate and private key in /root/ssl and distribute them to each node

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

# cp kubernetes*.pem /opt/kubernetes/ssl/

# scp kubernetes*.pem root@192.168.168.3:/opt/kubernetes/ssl/

# scp kubernetes*.pem root@192.168.168.4:/opt/kubernetes/ssl/

4) Create the JSON configuration file for the admin certificate CSR

# cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

5) Generate the admin certificate and private key

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

# cp admin*.pem /opt/kubernetes/ssl/

6) Create the client token file used by kube-apiserver

# mkdir /opt/kubernetes/token    # run the same step on every k8s node

# export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')    # generate a random 128-bit token

# cat > /opt/kubernetes/token/bootstrap-token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
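The token file is only useful if the shell variable was really expanded when the file was written. A quick shape check before writing the CSV can catch an empty or unexpanded value; the following is a sketch, and both function names are hypothetical.

```shell
# Sketch: generate a bootstrap token and check its shape.
new_bootstrap_token() {
    # 16 random bytes rendered as 32 hexadecimal characters
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

is_valid_token() {
    # $1: candidate token; succeeds only for exactly 32 lowercase hex characters
    printf '%s' "$1" | grep -Eq '^[0-9a-f]{32}$'
}

# Example:
#   BOOTSTRAP_TOKEN=$(new_bootstrap_token)
#   is_valid_token "$BOOTSTRAP_TOKEN" || echo "unexpected token: $BOOTSTRAP_TOKEN" >&2
```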

7) Create the basic username/password authentication file

# vi /opt/kubernetes/token/basic-auth.csv    # add the following content

admin,admin,1

readonly,readonly,2

8) Create the kube-apiserver systemd unit file

# vi /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

After=etcd.service

[Service]

EnvironmentFile=/opt/kubernetes/conf/kube.conf

EnvironmentFile=/opt/kubernetes/conf/apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_ETCD_SERVERS \

$KUBE_API_ADDRESS \

$KUBE_API_PORT \

$KUBELET_PORT \

$KUBE_ALLOW_PRIV \

$KUBE_SERVICE_ADDRESSES \

$KUBE_ADMISSION_CONTROL \

$KUBE_API_ARGS

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

mkdir /var/log/kubernetes    # run the same step on every k8s node

mkdir /var/log/kubernetes/apiserver

9) Create the kube.conf file

# vi /opt/kubernetes/conf/kube.conf

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

#

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=2"

#

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

#

# How the controller-manager, scheduler, and proxy find the apiserver

#KUBE_MASTER="--master=http://sz-pg-oam-docker-test-001.tendcloud.com:8080"

KUBE_MASTER="--master=http://127.0.0.1:8080"

Note: this configuration file is shared by kube-apiserver, kube-controller-manager, kube-scheduler, kubelet and kube-proxy. On the node hosts, comment out the KUBE_MASTER value.

Distribute the kube.conf file to each node

# scp /opt/kubernetes/conf/kube.conf root@192.168.168.3:/opt/kubernetes/conf/

# scp /opt/kubernetes/conf/kube.conf root@192.168.168.4:/opt/kubernetes/conf/

10) Generate the advanced audit policy

cat > /opt/kubernetes/yaml/audit-policy.yaml <<EOF

# Log all requests at the Metadata level.

apiVersion: audit.k8s.io/v1beta1

kind: Policy

rules:

- level: Metadata

EOF

11) Create the kube-apiserver configuration file and start the service

# vi /opt/kubernetes/conf/apiserver.conf

###

## kubernetes system config

##

## The following values are used to configure the kube-apiserver

##

#

## The address on the local server to listen to.

#KUBE_API_ADDRESS="--insecure-bind-address=sz-pg-oam-docker-test-001.tendcloud.com"

KUBE_API_ADDRESS="--advertise-address=192.168.168.2 --bind-address=192.168.168.2 --insecure-bind-address=127.0.0.1"

#

## The port on the local server to listen on.

#KUBE_API_PORT="--port=8080"

#

## Port minions listen on

#KUBELET_PORT="--kubelet-port=10250"

#

## Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379"

#

## Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.1.0.0/16"

#

## default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota,NodeRestriction"

#

## Add your own!

KUBE_API_ARGS="--authorization-mode=Node,RBAC --runtime-config=rbac.authorization.k8s.io/v1 --kubelet-https=true --anonymous-auth=false --enable-bootstrap-token-auth --basic-auth-file=/opt/kubernetes/token/basic-auth.csv --token-auth-file=/opt/kubernetes/token/bootstrap-token.csv --service-node-port-range=30000-32767 --tls-cert-file=/opt/kubernetes/ssl/kubernetes.pem --tls-private-key-file=/opt/kubernetes/ssl/kubernetes-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/kubernetes/ssl/ca.pem --etcd-certfile=/opt/kubernetes/ssl/kubernetes.pem --etcd-keyfile=/opt/kubernetes/ssl/kubernetes-key.pem --allow-privileged=true --enable-swagger-ui=true --apiserver-count=3 --audit-policy-file=/opt/kubernetes/yaml/audit-policy.yaml --audit-log-maxage=30 --audit-log-maxbackup=3 --audit-log-maxsize=100 --audit-log-path=/var/log/kubernetes/apiserver/api-audit.log --log-dir=/var/log/kubernetes/apiserver --event-ttl=1h"

# systemctl daemon-reload

# systemctl enable kube-apiserver

# systemctl start kube-apiserver

# systemctl status kube-apiserver

12) Create the kube-controller-manager systemd unit file

# vi /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/conf/kube.conf

EnvironmentFile=/opt/kubernetes/conf/controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

mkdir /var/log/kubernetes/controller-manager

13) Create the kube-controller-manager configuration file and start the service

# vi /opt/kubernetes/conf/controller-manager.conf

###

# The following values are used to configure the kubernetes controller-manager

#

# defaults from config and apiserver should be adequate

#

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS="--address=127.0.0.1 --service-cluster-ip-range=10.1.0.0/16 --cluster-cidr=10.2.0.0/16 --cluster-name=kubernetes --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --root-ca-file=/opt/kubernetes/ssl/ca.pem --leader-elect=true --log-dir=/var/log/kubernetes/controller-manager"

Note: --service-cluster-ip-range sets the CIDR range for Services in the cluster. This network must not be routable between the nodes, and the value must match the one passed to kube-apiserver.
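Since the two files are edited by hand, a mismatch between them is easy to introduce. The sketch below compares the `--service-cluster-ip-range` value found in two configuration files; the function names are hypothetical, and only the first occurrence in each file is considered.

```shell
# Sketch: check that two config files carry the same --service-cluster-ip-range.
service_cidr_of() {
    # $1: config file; prints the first --service-cluster-ip-range value found in it
    grep -o -- '--service-cluster-ip-range=[^ "]*' "$1" | head -n 1 | cut -d= -f2
}

same_service_cidr() {
    # $1, $2: the two config files to compare; succeeds only if both values exist and match
    local a b
    a=$(service_cidr_of "$1")
    b=$(service_cidr_of "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example on k8s-master:
#   same_service_cidr /opt/kubernetes/conf/apiserver.conf \
#       /opt/kubernetes/conf/controller-manager.conf || echo "service CIDR mismatch" >&2
```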

# systemctl daemon-reload

# systemctl enable kube-controller-manager

# systemctl start kube-controller-manager

# systemctl status kube-controller-manager

14) Create the kube-scheduler systemd unit file

# vi /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/conf/kube.conf

EnvironmentFile=/opt/kubernetes/conf/scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_SCHEDULER_ARGS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

mkdir /var/log/kubernetes/scheduler

15) Create the kube-scheduler configuration file and start the service

# vi /opt/kubernetes/conf/scheduler.conf

###

# kubernetes scheduler config

#

# default config should be adequate

#

# Add your own!

KUBE_SCHEDULER_ARGS="--leader-elect=true --address=127.0.0.1 --log-dir=/var/log/kubernetes/scheduler"

# systemctl daemon-reload

# systemctl enable kube-scheduler

# systemctl start kube-scheduler

# systemctl status kube-scheduler

16) Create the kubectl kubeconfig file

Set the cluster parameters

# kubectl config set-cluster kubernetes --certificate-authority=/opt/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.168.2:6443

Cluster "kubernetes" set.

Set the client authentication parameters

# kubectl config set-credentials admin --client-certificate=/opt/kubernetes/ssl/admin.pem --embed-certs=true --client-key=/opt/kubernetes/ssl/admin-key.pem

User "admin" set.

Set the context parameters

# kubectl config set-context kubernetes --cluster=kubernetes --user=admin

Context "kubernetes" created.

Set the default context

# kubectl config use-context kubernetes

Switched to context "kubernetes".

17) Verify the health of each component

# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

18) Create the role binding

# kubectl create clusterrolebinding --user system:serviceaccount:kube-system:default kube-system-cluster-admin --clusterrole cluster-admin

clusterrolebindings.rbac.authorization.k8s.io "kube-system-cluster-admin"

Note: for versions before Kubernetes 1.10.4, use the following command

# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io "kubelet-bootstrap" created

19) Create the kubelet bootstrapping kubeconfig file

Set the cluster parameters

# kubectl config set-cluster kubernetes --certificate-authority=/opt/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.168.2:6443 --kubeconfig=bootstrap.kubeconfig

Cluster "kubernetes" set.

Set the client authentication parameters

# kubectl config set-credentials kubelet-bootstrap --token=1cd425206a373f7cc75c958fd363e3fe --kubeconfig=bootstrap.kubeconfig

User "kubelet-bootstrap" set.

The token value is the 128-bit string generated on the master when the token file was created.
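Rather than copying the token by hand, it can be read back from the token file written earlier. The helper below is a sketch that assumes the CSV layout shown in this guide (token in the first field); `read_bootstrap_token` is a hypothetical name.

```shell
# Sketch: read the bootstrap token back from the token CSV file.
read_bootstrap_token() {
    # $1: token CSV file; prints the first field of its first non-empty line
    awk -F, 'NF { print $1; exit }' "$1"
}

# Example on k8s-master:
#   kubectl config set-credentials kubelet-bootstrap \
#     --token="$(read_bootstrap_token /opt/kubernetes/token/bootstrap-token.csv)" \
#     --kubeconfig=bootstrap.kubeconfig
```

This keeps the kubeconfig and the file read by kube-apiserver in agreement even if the token is regenerated.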

Set the context parameters

# kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig

Context "default" created.

Set the default context

# kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Switched to context "default".

Distribute the generated bootstrap.kubeconfig file to each node

cp bootstrap.kubeconfig /opt/kubernetes/conf/

scp bootstrap.kubeconfig root@192.168.168.3:/opt/kubernetes/conf/

scp bootstrap.kubeconfig root@192.168.168.4:/opt/kubernetes/conf/

20) Create the JSON configuration file for the kube-proxy certificate CSR

# cd /root/ssl

# cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

21) Generate the kube-proxy certificate and private key

# cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem -ca-key=/opt/kubernetes/ssl/ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# cp kube-proxy*.pem /opt/kubernetes/ssl/

# scp kube-proxy*.pem root@192.168.168.3:/opt/kubernetes/ssl/

# scp kube-proxy*.pem root@192.168.168.4:/opt/kubernetes/ssl/

22) Create the kube-proxy kubeconfig file

Set the cluster parameters

# kubectl config set-cluster kubernetes --certificate-authority=/opt/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.168.2:6443 --kubeconfig=kube-proxy.kubeconfig

Cluster "kubernetes" set.

Set the client authentication parameters

# kubectl config set-credentials kube-proxy --client-certificate=/opt/kubernetes/ssl/kube-proxy.pem --client-key=/opt/kubernetes/ssl/kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

User "kube-proxy" set.

Set the context parameters

# kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

Context "default" created.

Set the default context

# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Switched to context "default".

Distribute the kube-proxy.kubeconfig file to each node

cp /root/ssl/kube-proxy.kubeconfig /opt/kubernetes/conf/

scp /root/ssl/kube-proxy.kubeconfig root@192.168.168.3:/opt/kubernetes/conf/

scp /root/ssl/kube-proxy.kubeconfig root@192.168.168.4:/opt/kubernetes/conf/

4. Configure work-node01/02

1) Install ipvsadm and related tools (same on every node)

yum install -y ipvsadm ipset bridge-utils

2) Create the kubelet working directory (same on every node)

mkdir /var/lib/kubelet

3) Create the kubelet configuration file

# vi /opt/kubernetes/conf/kubelet.conf

###

## kubernetes kubelet (minion) config

#

## The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=192.168.168.3"

#

## The port for the info server to serve on

#KUBELET_PORT="--port=10250"

#

## You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=work-node01"

#

## pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

#

##

#KUBELET_API_SERVER="--api-servers=https://192.168.168.2:6443"

#

## Add your own!

KUBELET_ARGS="--cluster-dns=10.1.0.2 --experimental-bootstrap-kubeconfig=/opt/kubernetes/conf/bootstrap.kubeconfig --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig --cert-dir=/opt/kubernetes/ssl --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --allow-privileged=true --cluster-domain=cluster.local. --hairpin-mode=hairpin-veth --fail-swap-on=false --serialize-image-pulls=false --log-dir=/var/log/kubernetes/kubelet"

Note: set KUBELET_ADDRESS to each node's own IP and KUBELET_HOSTNAME to each node's hostname. KUBELET_POD_INFRA_CONTAINER can point to a private container registry if one is available, for example KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image={private registry IP}:80/k8s/pause-amd64:v3.0". The path given in cni-bin-dir is created automatically when the calico network is set up.
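Because kubelet.conf is copied from one node to the others, the two per-node values have to be changed after every copy. The sketch below rewrites them in place; the function name `retarget_kubelet_conf` is hypothetical, and it assumes the file uses the `--address=` and `--hostname-override=` flags exactly as shown above.

```shell
# Sketch: adapt a copied kubelet.conf to the node it now lives on.
retarget_kubelet_conf() {
    # $1: kubelet.conf to rewrite, $2: this node's IP, $3: this node's hostname
    local file="$1" ip="$2" name="$3"
    sed -e "s|--address=[^\" ]*|--address=${ip}|" \
        -e "s|--hostname-override=[^\" ]*|--hostname-override=${name}|" \
        "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}

# Example on work-node02:
#   retarget_kubelet_conf /opt/kubernetes/conf/kubelet.conf 192.168.168.4 work-node02
```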

mkdir /var/log/kubernetes/kubelet    # same on every node

Distribute kubelet.conf to each node

scp /opt/kubernetes/conf/kubelet.conf root@192.168.168.4:/opt/kubernetes/conf/

4) Create the CNI network configuration file

mkdir -p /etc/cni/net.d

cat >/etc/cni/net.d/10-calico.conf <<EOF

{

"name": "calico-k8s-network",

"cniVersion": "0.6.0",

"type": "calico",

"etcd_endpoints": "https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379",

"etcd_key_file": "/opt/kubernetes/ssl/etcd-key.pem",

"etcd_cert_file": "/opt/kubernetes/ssl/etcd.pem",

"etcd_ca_cert_file": "/opt/kubernetes/ssl/ca.pem",

"log_level": "info",

"mtu": 1500,

"ipam": {

"type": "calico-ipam"

},

"policy": {

"type": "k8s"

},

"kubernetes": {

"kubeconfig": "/opt/kubernetes/conf/kubelet.kubeconfig"

}

}

EOF

Distribute it to each node

scp /etc/cni/net.d/10-calico.conf root@192.168.168.4:/etc/cni/net.d

5) Create the kubelet systemd unit file and start the service

# vi /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

EnvironmentFile=/opt/kubernetes/conf/kube.conf

EnvironmentFile=/opt/kubernetes/conf/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBELET_ADDRESS \

$KUBELET_PORT \

$KUBELET_HOSTNAME \

$KUBE_ALLOW_PRIV \

$KUBELET_POD_INFRA_CONTAINER \

$KUBELET_ARGS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Distribute the kubelet.service file to each node

scp /usr/lib/systemd/system/kubelet.service root@192.168.168.4:/usr/lib/systemd/system/

systemctl daemon-reload

systemctl enable kubelet

systemctl start kubelet

systemctl status kubelet

Note: if kubelet.kubeconfig is not generated automatically in /opt/kubernetes/conf/, rename the $HOME/.kube/config file from the master to kubelet.kubeconfig and copy it to /opt/kubernetes/conf/ on each node.

6) List the certificate signing requests (run on k8s-master)

kubectl get csr
NAME                                                   AGE       REQUESTOR           CONDITION
node-csr-0Vg_d__0vYzmrMn7o2S7jsek4xuQJ2v_YuCKwWN9n7M   4h        kubelet-bootstrap   Pending

7) Approve the kubelet TLS certificate requests (run on k8s-master)

# kubectl get csr|grep 'Pending' | awk 'NR>0{print $1}'| xargs kubectl certificate approve
certificatesigningrequest.certificates.k8s.io "node-csr-0Vg_d__0vYzmrMn7o2S7jsek4xuQJ2v_YuCKwWN9n7M" approved
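The pipeline above selects requests by grepping for the word Pending anywhere on the line. A slightly stricter variant, sketched here as an assumption rather than as the original author's method, matches only the CONDITION column and skips the header; the function name `pending_csrs` is hypothetical.

```shell
#!/usr/bin/env bash
# Print the names of CSRs whose last column (CONDITION) is exactly "Pending".
# Reads `kubectl get csr` output on stdin; the header line is skipped.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example on the master (xargs -r is a GNU extension that skips the
# command when there is nothing to approve):
# kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```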

8) Check the node status; if every node is Ready, everything is working (run on k8s-master)

# kubectl get node
NAME          STATUS    ROLES     AGE       VERSION
work-node01   Ready     <none>    11h       v1.10.4
work-node02   Ready     <none>    11h       v1.10.4
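When this check is repeated from a script, reading the table by eye is not an option. The sketch below is one way to turn the listing into an exit code; the function name `all_nodes_ready` is an assumption, and a status such as `Ready,SchedulingDisabled` is deliberately treated as not ready.

```shell
#!/usr/bin/env bash
# Exit 0 only if at least one node is listed and every node has STATUS
# exactly "Ready". Reads `kubectl get node` output on stdin.
all_nodes_ready() {
  awk 'NR > 1 { seen = 1; if ($2 != "Ready") bad = 1 }
       END { exit ((seen && !bad) ? 0 : 1) }'
}

# Example on the master:
# kubectl get node | all_nodes_ready && echo "all nodes are Ready"
```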

9) Create the kube-proxy working directory (run on every node)

mkdir -p /var/lib/kube-proxy

10) Create the kube-proxy unit file

# vi /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
EnvironmentFile=/opt/kubernetes/conf/kube.conf
EnvironmentFile=/opt/kubernetes/conf/kube-proxy.conf
ExecStart=/opt/kubernetes/bin/kube-proxy \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_MASTER \
    $KUBE_PROXY_ARGS
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Distribute kube-proxy.service to each node:

scp /usr/lib/systemd/system/kube-proxy.service [email protected]:/usr/lib/systemd/system/

11) Create the kube-proxy configuration file and start the service

# vi /opt/kubernetes/conf/kube-proxy.conf
###
# kubernetes proxy config
# default config should be adequate
# Add your own!
KUBE_PROXY_ARGS="--bind-address=192.168.168.3 --hostname-override=work-node01 --kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig --masquerade-all --feature-gates=SupportIPVSProxyMode=true --proxy-mode=ipvs --ipvs-min-sync-period=5s --ipvs-sync-period=5s --ipvs-scheduler=rr --log-dir=/var/log/kubernetes/kube-proxy"

Note: set bind-address to each node's own IP and hostname-override to each node's hostname.
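Because only two values differ between nodes, the file can be generated instead of edited by hand after copying. The following is a minimal sketch under that assumption; `render_kube_proxy_conf` is a hypothetical name, and the remaining flags are copied unchanged from the configuration above.

```shell
#!/usr/bin/env bash
# Print a kube-proxy.conf for one node. Arguments: node IP, node hostname.
# All other flags are the ones used in this guide.
render_kube_proxy_conf() {
  local ip="$1" name="$2"
  printf 'KUBE_PROXY_ARGS="--bind-address=%s --hostname-override=%s %s"\n' \
    "$ip" "$name" \
    "--kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig --masquerade-all --feature-gates=SupportIPVSProxyMode=true --proxy-mode=ipvs --ipvs-min-sync-period=5s --ipvs-sync-period=5s --ipvs-scheduler=rr --log-dir=/var/log/kubernetes/kube-proxy"
}

# Example for the second worker:
# render_kube_proxy_conf 192.168.168.4 work-node02 > /opt/kubernetes/conf/kube-proxy.conf
```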

Distribute kube-proxy.conf to each node:

scp /opt/kubernetes/conf/kube-proxy.conf [email protected]:/opt/kubernetes/conf/

Then reload systemd and start kube-proxy on each node:

# systemctl daemon-reload
# systemctl enable kube-proxy
# systemctl start kube-proxy
# systemctl status kube-proxy

12) Check the LVS status

# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.1.0.1:443 rr persistent 10800
  -> 192.168.168.2:6443           Masq    1      0          0

5. Configure the Calico network

1) Download the Calico plugins

Run on the master:

# wget -N -P /usr/bin/ https://github.com/projectcalico/calicoctl/releases/download/v3.1.3/calicoctl
# chmod +x /usr/bin/calicoctl
# mkdir -p /etc/calico/yaml
# docker pull quay.io/calico/node:v3.1.3

Run on every node:

# wget -N -P /usr/bin/ https://github.com/projectcalico/calicoctl/releases/download/v3.1.3/calicoctl
# chmod +x /usr/bin/calicoctl
# mkdir -p /etc/calico/conf
# mkdir -p /opt/cni/bin
# wget -N -P /opt/cni/bin https://github.com/projectcalico/cni-plugin/releases/download/v3.1.3/calico
# wget -N -P /opt/cni/bin https://github.com/projectcalico/cni-plugin/releases/download/v3.1.3/calico-ipam
# chmod +x /opt/cni/bin/calico /opt/cni/bin/calico-ipam
# docker pull quay.io/calico/node:v3.1.3

Check the Calico Docker image:

# docker images
REPOSITORY            TAG       IMAGE ID       CREATED       SIZE
quay.io/calico/node   v3.1.3    7eca10056c8e   6 weeks ago   248MB

2) Create the file calicoctl uses to talk to etcd

# vi /etc/calico/calicoctl.cfg
apiVersion: projectcalico.org/v3
kind: CalicoAPIConfig
metadata:
spec:
  datastoreType: "etcdv3"
  etcdEndpoints: "https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379"
  etcdKeyFile: "/opt/kubernetes/ssl/etcd-key.pem"
  etcdCertFile: "/opt/kubernetes/ssl/etcd.pem"
  etcdCACertFile: "/opt/kubernetes/ssl/ca.pem"

3) Fetch calico.yaml (run on the master)

wget -N -P /etc/calico/yaml https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/rbac.yaml
wget -N -P /etc/calico/yaml https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/calico.yaml

Note: the manifest version must match the version of the Calico Docker image.

Edit calico.yaml. The sed commands use a relative path, so change to the download directory first:

cd /etc/calico/yaml
# Replace the etcd endpoints
sed -i 's@.*etcd_endpoints:.*@\ \ etcd_endpoints:\ \"https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379\"@gi' calico.yaml
# Replace the etcd certificates
export ETCD_CERT=`cat /opt/kubernetes/ssl/etcd.pem | base64 | tr -d '\n'`
export ETCD_KEY=`cat /opt/kubernetes/ssl/etcd-key.pem | base64 | tr -d '\n'`
export ETCD_CA=`cat /opt/kubernetes/ssl/ca.pem | base64 | tr -d '\n'`
sed -i "s@.*etcd-cert:.*@\ \ etcd-cert:\ ${ETCD_CERT}@gi" calico.yaml
sed -i "s@.*etcd-key:.*@\ \ etcd-key:\ ${ETCD_KEY}@gi" calico.yaml
sed -i "s@.*etcd-ca:.*@\ \ etcd-ca:\ ${ETCD_CA}@gi" calico.yaml
sed -i 's@.*etcd_ca:.*@\ \ etcd_ca:\ "/calico-secrets/etcd-ca"@gi' calico.yaml
sed -i 's@.*etcd_cert:.*@\ \ etcd_cert:\ "/calico-secrets/etcd-cert"@gi' calico.yaml
sed -i 's@.*etcd_key:.*@\ \ etcd_key:\ "/calico-secrets/etcd-key"@gi' calico.yaml
# The parameters above must be changed. The manifest also contains a parameter
# that sets the pod network CIDR; adjust it to your environment:
- name: CALICO_IPV4POOL_CIDR
  value: "10.100.0.0/16"

Apply the Calico resources (the manifests were downloaded to /etc/calico/yaml):

kubectl apply -f /etc/calico/yaml/rbac.yaml
kubectl create -f /etc/calico/yaml/calico.yaml
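Each sed command above replaces an entire line that contains a given key, and the certificate values are base64 text with the newlines removed. To try that pattern on a scratch file before touching the real manifest, something like the following sketch can be used. The helper names `set_line` and `b64_oneline` are hypothetical, GNU sed is assumed for `-i`, and neither the key nor the value may contain `@`, `&`, or a backslash.

```shell
#!/usr/bin/env bash
# Replace the whole line containing "<key>:" with "  <key>: <value>",
# the same whole-line pattern the sed commands in this step rely on.
set_line() {
  local file="$1" key="$2" value="$3"
  sed -i "s@.*${key}:.*@  ${key}: ${value}@" "$file"
}

# Base64-encode a file onto a single line, as a Secret data field expects.
b64_oneline() {
  base64 < "$1" | tr -d '\n'
}

# Example on a copy of the manifest:
# cp calico.yaml /tmp/calico-test.yaml
# set_line /tmp/calico-test.yaml etcd-ca "$(b64_oneline /opt/kubernetes/ssl/ca.pem)"
```

Base64 output only uses letters, digits, `+`, `/`, and `=`, which is why `@` is a safe delimiter for these values.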

4) Create the Calico configuration file

# vi /etc/calico/conf/calico.conf
CALICO_NODENAME="work-node01"
ETCD_ENDPOINTS=https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379
ETCD_CA_CERT_FILE="/opt/kubernetes/ssl/ca.pem"
ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
CALICO_IP="192.168.168.3"
CALICO_IP6=""
CALICO_NETWORKING_BACKEND=bird
CALICO_AS="65412"
CALICO_NO_DEFAULT_POOLS=""
CALICO_LIBNETWORK_ENABLED=true
CALICO_IPV4POOL_IPIP="Always"
IP_AUTODETECTION_METHOD=interface=ens34
IP6_AUTODETECTION_METHOD=interface=ens34

Note: set CALICO_NODENAME to each node's hostname and CALICO_IP to the IP of that node's network interface. IP_AUTODETECTION_METHOD and IP6_AUTODETECTION_METHOD take the name of each node's external-facing interface.
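Since the file is copied to every node and then adjusted, the per-node values can also be rewritten in a stream before copying. This is only a sketch: `calico_conf_for_node` is a hypothetical name, and it assumes the interface name is the same on all nodes, so only the hostname and IP are changed.

```shell
#!/usr/bin/env bash
# Rewrite CALICO_NODENAME and CALICO_IP in a calico.conf read from stdin,
# leaving every other line untouched. Arguments: hostname, node IP.
calico_conf_for_node() {
  local name="$1" ip="$2"
  sed -e "s|^CALICO_NODENAME=.*|CALICO_NODENAME=\"${name}\"|" \
      -e "s|^CALICO_IP=.*|CALICO_IP=\"${ip}\"|"
}

# Example for the second worker:
# calico_conf_for_node work-node02 192.168.168.4 \
#   < /etc/calico/conf/calico.conf > /tmp/calico.conf.work-node02
```

The pattern `^CALICO_IP=` stops at the equals sign, so the `CALICO_IP6` and `CALICO_IPV4POOL_IPIP` lines are not affected.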

Distribute calico.conf to each node:

scp /etc/calico/conf/calico.conf [email protected]:/etc/calico/conf/

5) Create the Calico unit file

# vi /usr/lib/systemd/system/calico-node.service
[Unit]
Description=calico-node
After=docker.service
Requires=docker.service

[Service]
User=root
PermissionsStartOnly=true
EnvironmentFile=/etc/calico/conf/calico.conf
ExecStart=/usr/bin/docker run --net=host --privileged --name=calico-node \
    -e NODENAME=${CALICO_NODENAME} \
    -e ETCD_ENDPOINTS=${ETCD_ENDPOINTS} \
    -e ETCD_CA_CERT_FILE=${ETCD_CA_CERT_FILE} \
    -e ETCD_CERT_FILE=${ETCD_CERT_FILE} \
    -e ETCD_KEY_FILE=${ETCD_KEY_FILE} \
    -e IP=${CALICO_IP} \
    -e IP6=${CALICO_IP6} \
    -e CALICO_NETWORKING_BACKEND=${CALICO_NETWORKING_BACKEND} \
    -e AS=${CALICO_AS} \
    -e NO_DEFAULT_POOLS=${CALICO_NO_DEFAULT_POOLS} \
    -e CALICO_LIBNETWORK_ENABLED=${CALICO_LIBNETWORK_ENABLED} \
    -e CALICO_IPV4POOL_IPIP=${CALICO_IPV4POOL_IPIP} \
    -e IP_AUTODETECTION_METHOD=${IP_AUTODETECTION_METHOD} \
    -e IP6_AUTODETECTION_METHOD=${IP6_AUTODETECTION_METHOD} \
    -v /opt/kubernetes/ssl:/opt/kubernetes/ssl \
    -v /var/log/calico:/var/log/calico \
    -v /run/docker/plugins:/run/docker/plugins \
    -v /lib/modules:/lib/modules \
    -v /var/run/calico:/var/run/calico \
    quay.io/calico/node:v3.1.3
ExecStop=/usr/bin/docker rm -f calico-node
Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target

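mkdir -p /var/log/calico   # run the same command on every node

Note: NODENAME is each host's hostname, and IP is the address of each host's external-facing interface.

Distribute calico-node.service to each node:

scp /usr/lib/systemd/system/calico-node.service [email protected]:/usr/lib/systemd/system/

Then reload systemd and start calico-node on each node:

# systemctl daemon-reload
# systemctl enable calico-node
# systemctl start calico-node
# systemctl status calico-node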

5) Start calico-node as a Docker container on each host

k8s-master:

calicoctl node run --node-image=quay.io/calico/node:v3.1.3 --ip=192.168.168.2

work-node01/node02:

calicoctl node run --node-image=quay.io/calico/node:v3.1.3 --ip=192.168.168.3

calicoctl node run --node-image=quay.io/calico/node:v3.1.3 --ip=192.168.168.4

Check that calico-node started:

# docker ps
CONTAINER ID   IMAGE                        COMMAND         CREATED          STATUS          PORTS   NAMES
a1981d2dae6d   quay.io/calico/node:v3.1.3   "start_runit"   17 seconds ago   Up 17 seconds           calico-node

6) View the Calico node information

# calicoctl get node -o wide
NAME          ASN         IPV4               IPV6
k8s-master    (unknown)   192.168.168.2/32
work-node01   65412       192.168.168.3/24
work-node02   65412       192.168.168.4/24

7) View the BGP peer information

# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------+-------------------+-------+----------+-------------+
| PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+---------------+-------------------+-------+----------+-------------+
| 192.168.168.2 | node-to-node mesh | up    | 07:41:40 | Established |
| 192.168.168.3 | node-to-node mesh | up    | 07:41:43 | Established |
+---------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
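In the table above, a healthy mesh shows every peer as Established. A minimal sketch for flagging peers in any other state is given below; the function name `unestablished_peers` is an assumption, and it relies on the five-column table layout shown here.

```shell
#!/usr/bin/env bash
# Print the address of every BGP peer whose INFO column is not "Established".
# Reads `calicoctl node status` output on stdin; prints nothing when all
# peers are established.
unestablished_peers() {
  awk -F'|' '
    /^\|/ && $2 !~ /PEER ADDRESS/ {
      addr = $2; info = $6
      gsub(/^[ \t]+|[ \t]+$/, "", addr)   # trim padding around the cell
      gsub(/^[ \t]+|[ \t]+$/, "", info)
      if (info != "Established") print addr
    }'
}

# Example on any host running calico-node:
# calicoctl node status | unestablished_peers
```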

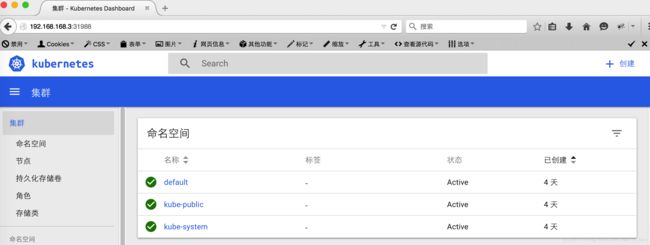

6. Deploy the Kubernetes Dashboard (run on the master)

1) Create the service account and role binding before creating the Dashboard

# vi /opt/kubernetes/yaml/dashboard-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

kubectl create -f /opt/kubernetes/yaml/dashboard-rbac.yaml

2) Create the Deployment for the Dashboard

# vi /opt/kubernetes/yaml/dashboard-deployment.yaml
apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccountName: kubernetes-dashboard
      containers:
      - name: kubernetes-dashboard
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 100m
            memory: 100Mi
        ports:
        - containerPort: 9090
          protocol: TCP
        livenessProbe:
          httpGet:
            scheme: HTTP
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

kubectl create -f /opt/kubernetes/yaml/dashboard-deployment.yaml

3) Create a Service to expose the Dashboard

# vi /opt/kubernetes/yaml/dashboard-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

kubectl create -f /opt/kubernetes/yaml/dashboard-service.yaml

4) Check the status

Check the service status:

# kubectl get svc -n kube-system
NAME                   TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
kubernetes-dashboard   NodePort   10.1.193.97   <none>        80:31988/TCP   59m

Note: in the PORT(S) value, 31988 is the node port used to access the Dashboard.
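The PORT(S) value has the form service-port:node-port/protocol. As a small illustration of how to pull the node port out of that string, here is a sketch using shell parameter expansion; the function name `node_port` is hypothetical.

```shell
#!/usr/bin/env bash
# Extract the node port from a PORT(S) value such as "80:31988/TCP".
node_port() {
  local ports="$1"
  ports="${ports#*:}"   # drop the service port and the colon
  echo "${ports%%/*}"   # drop the protocol suffix
}

# Example:
# node_port 80:31988/TCP
```

On a live cluster the same value can be read directly with `kubectl get svc kubernetes-dashboard -n kube-system -o jsonpath='{.spec.ports[0].nodePort}'`, which avoids parsing the table at all.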

Check the pod status:

# kubectl get pods -n kube-system
NAME                                     READY     STATUS    RESTARTS   AGE
calico-kube-controllers-98989846-bgdkv   1/1       Running   2          1h
calico-node-2x8m6                        2/2       Running   6          1h
calico-node-hrgg9                        2/2       Running   5          1h
kubernetes-dashboard-b9f5f9d87-stz4s     1/1       Running   1          1h
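A pod is healthy in this listing when STATUS is Running and both numbers in READY match. The sketch below lists any pod that fails either test; the function name `unready_pods` is an assumption, and it expects the five-column layout shown above.

```shell
#!/usr/bin/env bash
# Print the name of every pod that is not Running or whose READY count x/y
# has x different from y. Reads `kubectl get pods` output on stdin.
unready_pods() {
  awk 'NR > 1 {
    split($2, r, "/")
    if ($3 != "Running" || r[1] != r[2]) print $1
  }'
}

# Example on the master:
# kubectl get pods -n kube-system | unready_pods
```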

Check all resources:

# kubectl get all -n kube-system
NAME                                         READY     STATUS    RESTARTS   AGE
pod/calico-kube-controllers-98989846-bgdkv   1/1       Running   2          1h
pod/calico-node-2x8m6                        2/2       Running   6          1h
pod/calico-node-hrgg9                        2/2       Running   5          1h
pod/kubernetes-dashboard-b9f5f9d87-stz4s     1/1       Running   1          1h

NAME                           TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
service/kubernetes-dashboard   NodePort   10.1.193.97   <none>        80:31988/TCP   1h

NAME                         DESIRED   CURRENT   READY     UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/calico-node   2         2         2         2            2           <none>          2h

NAME                                      DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/calico-kube-controllers   1         1         1            1           2h
deployment.apps/kubernetes-dashboard      1         1         1            1           1h

NAME                                               DESIRED   CURRENT   READY     AGE
replicaset.apps/calico-kube-controllers-98989846   1         1         1         1h
replicaset.apps/kubernetes-dashboard-b9f5f9d87     1         1         1         1h