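# Continuous Integration Series (4): Building a Multi-Master k8s Cluster (Container Orchestration Platform) + Container Load Balancing with traefik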


## Goal: a first continuous integration setup with gitlab + jenkins + docker + harbor + k8s

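## k8s overview

Kubernetes (k8s) is an open-source platform for automating container operations. These operations include deployment, scheduling, and scaling across a cluster of nodes.

With Kubernetes you can:

- automate the deployment and replication of containers
- scale the number of containers up or down at any time
- organize containers into groups and load-balance traffic between them
- easily roll out new versions of application containers
- make containers resilient: a failed container is replaced automatically, and so on.

Problems Kubernetes solves:

- Scheduling: which machine a container should run on
- Lifecycle and health: keeping containers running without errors
- Service discovery: where a container is and how to talk to it
- Monitoring: whether a container is running properly
- Authentication: who may access a container
- Container aggregation: how to combine several containers into one project

Kubernetes components:

- kubectl: the command-line client. It formats the commands it receives and sends them to kube-apiserver, so it is the operational entry point to the whole system.
- kube-apiserver: the control entry point of the whole system. It exposes its interface as a REST API.
- kube-controller-manager: runs the system's background tasks. Examples are tracking node status, keeping Pod counts correct, and associating Pods with Services.
- kube-scheduler: manages node resources. It accepts Pod-creation tasks from kube-apiserver and assigns each Pod to a node.
- etcd: the distributed key-value store that holds the cluster's configuration and state. The components use it to share configuration and discover services.
- kube-proxy: runs on every compute node and acts as the network proxy for Pods. It watches Service information through kube-apiserver (which persists it in etcd) and applies the corresponding forwarding rules.
- kubelet: runs on every compute node as an agent. It accepts the Pods assigned to its node, manages their containers, and periodically reports container status back to kube-apiserver.
- DNS: an optional DNS service that creates a DNS record for every Service object, so that all Pods can reach services by DNS name.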

## Environment

- OS: CentOS 7.4
- docker v17.03
- k8s 1.11.2
- Domain: k8s.domain.com

| ip | hostname | role |
|---|---|---|
| 10.79.167.26 | k8s1 | master |
| 10.79.167.27 | k8s2 | master |
| 10.79.167.28 | k8s3 | master |
| 10.79.166.8 | | vip (virtual IP) |

## Upgrade the kernel

```shell
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
yum --enablerepo=elrepo-kernel install kernel-ml -y
# Change GRUB_DEFAULT=saved to GRUB_DEFAULT=0 (boot the first menu entry)
sed -i s/saved/0/g /etc/default/grub
# Make the first menu entry (the newly installed kernel-ml) the default
grub2-set-default "$(cat /boot/grub2/grub.cfg |grep menuentry|grep 'menuentry '|head -n 1|awk -F "'" '{print $2}')"
# Check the default boot entry
grub2-editenv list
grub2-mkconfig -o /boot/grub2/grub.cfg && reboot
```
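After the reboot, check that the machine really booted into the new kernel before you continue. If it did not, the GRUB default entry is the first place to look.

The sketch below compares `uname -r` against a minimum version with GNU `sort -V`. The threshold `4.4` in the usage line is an illustrative assumption, not a requirement stated in this article. The helper name `kernel_at_least` is hypothetical.

```shell
#!/usr/bin/env bash
# kernel_at_least MIN [RELEASE]
# Succeeds if RELEASE (default: the running kernel, `uname -r`) is >= MIN.
# Only the leading "X.Y.Z" part is compared, so suffixes such as
# "-1.el7.elrepo.x86_64" are ignored. Requires GNU sort (-V).
kernel_at_least() {
  local min="$1"
  local release="${2:-$(uname -r)}"
  # Strip everything from the first "-" onwards
  local current="${release%%-*}"
  # Version-sort both values; if MIN sorts first (or they are equal), pass
  [ "$(printf '%s\n%s\n' "$min" "$current" | sort -V | head -n 1)" = "$min" ]
}

# Example (4.4 is an arbitrary illustrative threshold):
# kernel_at_least 4.4 && echo "new kernel active" || echo "still on the old kernel"
```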

## Install docker

```shell
# Install dependencies
yum install -y yum-utils device-mapper-persistent-data lvm2
# Add the docker repo
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
# Install docker (pinned to 17.03)
yum install -y --setopt=obsoletes=0 \
  docker-ce-17.03.2.ce-1.el7.centos \
  docker-ce-selinux-17.03.2.ce-1.el7.centos \
  docker-compose
# Switch to a registry mirror in China
curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://ef017c13.m.daocloud.io
# The daemon.json below overwrites the one written by set_mirror.sh;
# it keeps the mirror and also enables live-restore and overlay2
mkdir -p /etc/docker
cat >/etc/docker/daemon.json<<EOF
{"registry-mirrors": ["http://ef017c13.m.daocloud.io"],"live-restore": true,"storage-driver": "overlay2"}
EOF
# Start
systemctl enable docker
systemctl start docker
```

## Install kubeadm, kubelet, and kubectl

```shell
# Configure the repo
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# Install, pinned to the version used in this article
# (the v1alpha2 config and "kubeadm alpha phase" commands below are 1.11-era)
yum install -y kubelet-1.11.2 kubeadm-1.11.2 kubectl-1.11.2 ipvsadm
```

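## Configure system parameters

```shell
# Disable SELinux permanently. Edit the real file: /etc/sysconfig/selinux is a
# symlink, and sed -i on it would replace the link with a plain file
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
setenforce 0
systemctl stop firewalld
systemctl disable firewalld
swapoff -a && sysctl -w vm.swappiness=0
iptables -P FORWARD ACCEPT
# Forwarding-related kernel parameters; without them things may break.
# The bridge-nf-call settings require the br_netfilter module
modprobe br_netfilter
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
vm.swappiness=0
net.ipv4.ip_forward=1
EOF
sysctl --system
# Swap entries in /etc/fstab must also be removed, otherwise kubelet fails to start after a reboot
# Load the ipvs kernel modules
cat >>/etc/profile<<EOF
modprobe ip_vs
modprobe ip_vs_rr
modprobe ip_vs_wrr
modprobe ip_vs_sh
modprobe nf_conntrack_ipv4
EOF
source /etc/profile
# Name resolution for the masters (pcs and ssh below use the short names)
cat >>/etc/hosts <<EOF
10.79.167.26 k8s1 k8s1.local
10.79.167.27 k8s2 k8s2.local
10.79.167.28 k8s3 k8s3.local
EOF
```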
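The article says swap entries in `/etc/fstab` must be removed but gives no command for it. A minimal sketch is to comment out every active swap line and keep a backup.

It assumes the standard six-field fstab layout, where the third field is the filesystem type. The helper name `comment_out_swap` is hypothetical.

```shell
#!/usr/bin/env bash
# comment_out_swap [FSTAB]
# Prefixes every active (non-comment) swap entry in FSTAB with "#".
# A copy of the original is kept as FSTAB.bak.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak"
  # Third field is the filesystem type; leave comments and other lines as-is
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}

# Example: comment_out_swap /etc/fstab
```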

## Cluster high availability

```shell
# Install Pacemaker, Corosync, and haproxy (all three masters)
yum install corosync pacemaker pcs fence-agents resource-agents -y
yum install haproxy -y
# Start pcsd
systemctl enable pcsd.service
systemctl start pcsd.service
# Set the password of the cluster user hacluster
echo centos | passwd --stdin hacluster

# Run the following on one node only (k8s1)
# Create and start the cluster my_cluster
pcs cluster auth -u hacluster -p centos k8s1 k8s2 k8s3
pcs cluster setup --start --name my_cluster k8s1 k8s2 k8s3
pcs cluster enable --all # start the cluster automatically at boot
pcs cluster start --all # start the cluster
pcs cluster status # cluster status
# Verify
corosync-cfgtool -s # check corosync
corosync-cmapctl| grep members # list members
pcs status corosync # corosync status
pcs property set stonith-enabled=false # disable STONITH
pcs property set no-quorum-policy=ignore # ignore loss of quorum
crm_verify -L -V # check the configuration
# Create the VIP resource
pcs resource create vip ocf:heartbeat:IPaddr2 ip=10.79.166.8 cidr_netmask=21 op monitor interval=28s
# Check the cluster status
pcs status

# haproxy configuration (needed on all three masters, because the haproxy
# resource created below is cloned onto every node)
cat >/etc/haproxy/haproxy.cfg<<EOF
global
    log 127.0.0.1 local0 err
    maxconn 50000
    uid 99
    gid 99
    #daemon
    nbproc 1
    pidfile haproxy.pid

defaults
    mode http
    log 127.0.0.1 local0 err
    maxconn 50000
    retries 3
    timeout connect 5s
    timeout client 30s
    timeout server 30s
    timeout check 2s

listen admin_stats
    mode http
    bind 0.0.0.0:1080
    log 127.0.0.1 local0 err
    stats refresh 30s
    stats uri /haproxy-status
    stats realm Haproxy\ Statistics
    stats auth admin:admin
    stats hide-version
    stats admin if TRUE

frontend k8s-https
    bind 0.0.0.0:8443
    mode tcp
    #maxconn 50000
    default_backend k8s-https

backend k8s-https
    mode tcp
    balance roundrobin
    server k8s1 10.79.167.26:6443 weight 1 maxconn 1000 check inter 2000 rise 2 fall 3
    server k8s2 10.79.167.27:6443 weight 1 maxconn 1000 check inter 2000 rise 2 fall 3
    server k8s3 10.79.167.28:6443 weight 1 maxconn 1000 check inter 2000 rise 2 fall 3
EOF
systemctl enable haproxy
systemctl start haproxy
# Let pacemaker monitor haproxy (cloned onto every node)
pcs resource create haproxy systemd:haproxy op monitor interval=5s --clone
```
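The `backend k8s-https` section must list every master's apiserver, and it has to change whenever a master is added or replaced. The sketch below prints uniform `server` lines with the same health-check settings as the config above.

The input form `name=ip` and the helper name `haproxy_backend_servers` are assumptions for illustration.

```shell
#!/usr/bin/env bash
# haproxy_backend_servers PORT NAME=IP...
# Prints one haproxy "server" line per master, using the same settings as
# the backend above: check every 2000 ms, up after 2 passes, down after 3 failures.
haproxy_backend_servers() {
  local port="$1"; shift
  local pair name ip
  for pair in "$@"; do
    name="${pair%%=*}"
    ip="${pair#*=}"
    printf '    server %s %s:%s weight 1 maxconn 1000 check inter 2000 rise 2 fall 3\n' \
      "$name" "$ip" "$port"
  done
}

# Example:
# haproxy_backend_servers 6443 k8s1=10.79.167.26 k8s2=10.79.167.27 k8s3=10.79.167.28
```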

## Configure kubelet

```shell
# kubelet must use the same cgroup driver as docker
DOCKER_CGROUPS=$(docker info | grep 'Cgroup' | cut -d' ' -f3)
echo $DOCKER_CGROUPS
cat >/etc/sysconfig/kubelet<<EOF
KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
EOF
# Start (kubelet keeps restarting until kubeadm init/join gives it a configuration; this is expected)
systemctl daemon-reload
systemctl enable kubelet && systemctl restart kubelet
```
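`cut -d' ' -f3` works only because docker 17.03 prints `Cgroup Driver: cgroupfs` with no leading whitespace. Later docker releases indent this line and may print an extra `Cgroup Version` line, and then `grep 'Cgroup'` matches twice.

The sketch below parses the value more defensively. `cgroup_driver_from_info` is a hypothetical helper name. Alternatively, `docker info` accepts a Go template (`docker info -f '{{.CgroupDriver}}'`), which avoids text parsing altogether.

```shell
#!/usr/bin/env bash
# cgroup_driver_from_info
# Reads `docker info` output on stdin and prints the value of the
# "Cgroup Driver" line, tolerating leading whitespace and ignoring other
# Cgroup* lines such as "Cgroup Version".
cgroup_driver_from_info() {
  awk -F': *' '/^[[:space:]]*Cgroup Driver:/ { print $2; exit }'
}

# Example:
# DOCKER_CGROUPS=$(docker info 2>/dev/null | cgroup_driver_from_info)
```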

## Passwordless ssh

```shell
# On k8s1
ssh-keygen
ssh-copy-id k8s2
ssh-copy-id k8s3
```

## Configure k8s1

```shell
# Generate the kubeadm configuration file
mkdir -p /opt/kubeadm-cfg && cd /opt/kubeadm-cfg
CP0_IP="10.79.167.26"
CP0_HOSTNAME="k8s1"
cat >kubeadm-master.config<<EOF
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
kubernetesVersion: v1.11.1
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- "k8s1"
- "k8s2"
- "k8s3"
- "10.79.167.26"
- "10.79.167.27"
- "10.79.167.28"
- "10.79.166.8"
- "127.0.0.1"
api:
  advertiseAddress: $CP0_IP
  controlPlaneEndpoint: 10.79.166.8:8443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://$CP0_IP:2379"
      advertise-client-urls: "https://$CP0_IP:2379"
      listen-peer-urls: "https://$CP0_IP:2380"
      initial-advertise-peer-urls: "https://$CP0_IP:2380"
      initial-cluster: "$CP0_HOSTNAME=https://$CP0_IP:2380"
    serverCertSANs:
      - $CP0_IP
    peerCertSANs:
      - $CP0_IP
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  podSubnet: 10.244.0.0/16
kubeProxy:
  config:
    mode: ipvs
EOF
# Pull the images in advance; if this fails, simply run it again
kubeadm config images pull --config kubeadm-master.config
# Initialize; save the join command that it prints
kubeadm init --config kubeadm-master.config
mkdir -p $HOME/.kube
\cp /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
# Package the CA-related files and copy them to the other masters
cd /etc/kubernetes && tar cvzf k8s-key.tgz admin.conf pki/ca.* pki/sa.* pki/front-proxy-ca.* pki/etcd/ca.*
scp k8s-key.tgz k8s2:~/
scp k8s-key.tgz k8s3:~/
ssh k8s2 'tar xf k8s-key.tgz -C /etc/kubernetes/'
ssh k8s3 'tar xf k8s-key.tgz -C /etc/kubernetes/'
```
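If any shared CA or service-account file is missing on k8s2 or k8s3, the later `kubeadm alpha phase certs all` step generates a new, mismatched one instead. It is therefore worth checking the extracted bundle first.

The file list below is what the globs in the `tar` command match for a kubeadm 1.11 master. The helper name `check_pki_bundle` is hypothetical.

```shell
#!/usr/bin/env bash
# check_pki_bundle [DIR]
# Verifies that DIR (default /etc/kubernetes) contains every shared file
# packed by the tar command above. Prints each missing file and returns
# non-zero if any is absent.
check_pki_bundle() {
  local dir="${1:-/etc/kubernetes}"
  local missing=0 f
  for f in admin.conf \
           pki/ca.crt pki/ca.key \
           pki/sa.key pki/sa.pub \
           pki/front-proxy-ca.crt pki/front-proxy-ca.key \
           pki/etcd/ca.crt pki/etcd/ca.key; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f"
      missing=1
    fi
  done
  return "$missing"
}

# Example, on k8s2 and k8s3 after extracting k8s-key.tgz:
# check_pki_bundle /etc/kubernetes && echo "bundle complete"
```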

## Configure k8s2

```shell
mkdir -p /opt/kubeadm-cfg && cd /opt/kubeadm-cfg
# Generate the configuration file
CP0_IP="10.79.167.26"
CP0_HOSTNAME="k8s1"
CP1_IP="10.79.167.27"
CP1_HOSTNAME="k8s2"
cat >kubeadm-master.config<<EOF
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
kubernetesVersion: v1.11.1
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- "k8s1"
- "k8s2"
- "k8s3"
- "10.79.167.26"
- "10.79.167.27"
- "10.79.167.28"
- "10.79.166.8"
- "127.0.0.1"
api:
  advertiseAddress: $CP1_IP
  controlPlaneEndpoint: 10.79.166.8:8443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://$CP1_IP:2379"
      advertise-client-urls: "https://$CP1_IP:2379"
      listen-peer-urls: "https://$CP1_IP:2380"
      initial-advertise-peer-urls: "https://$CP1_IP:2380"
      initial-cluster: "$CP0_HOSTNAME=https://$CP0_IP:2380,$CP1_HOSTNAME=https://$CP1_IP:2380"
      initial-cluster-state: existing
    serverCertSANs:
      - $CP1_HOSTNAME
      - $CP1_IP
    peerCertSANs:
      - $CP1_HOSTNAME
      - $CP1_IP
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  podSubnet: 10.244.0.0/16
kubeProxy:
  config:
    mode: ipvs
EOF
# Configure kubelet (certificates, kubelet config, env file, kubeconfig)
kubeadm alpha phase certs all --config kubeadm-master.config
kubeadm alpha phase kubelet config write-to-disk --config kubeadm-master.config
kubeadm alpha phase kubelet write-env-file --config kubeadm-master.config
kubeadm alpha phase kubeconfig kubelet --config kubeadm-master.config
systemctl restart kubelet
# Add this node's etcd member to the etcd cluster
rm -rf $HOME/.kube/*
mkdir -p $HOME/.kube
\cp /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
export KUBECONFIG=$HOME/.kube/config
kubectl exec -n kube-system etcd-${CP0_HOSTNAME} -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://${CP0_IP}:2379 member add ${CP1_HOSTNAME} https://${CP1_IP}:2380
kubeadm alpha phase etcd local --config kubeadm-master.config
# Pull the images in advance; if this fails, simply run it again
kubeadm config images pull --config kubeadm-master.config
# Deploy the control plane
kubeadm alpha phase kubeconfig all --config kubeadm-master.config
kubeadm alpha phase controlplane all --config kubeadm-master.config
kubeadm alpha phase mark-master --config kubeadm-master.config
```
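Each joining master's `initial-cluster` must list every existing etcd member plus itself: k8s2 lists k8s1 and k8s2, and k8s3 lists all three. Typing this by hand is error-prone.

A minimal sketch that builds the value from `name=ip` pairs is shown below. It uses peer port 2380 as in the configs here. The helper name `build_initial_cluster` is hypothetical.

```shell
#!/usr/bin/env bash
# build_initial_cluster NAME=IP...
# Prints the etcd "initial-cluster" value for the given members, in order,
# in the form NAME=https://IP:2380 joined by commas.
build_initial_cluster() {
  local out="" pair
  for pair in "$@"; do
    # ${out:+$out,} adds a comma only after the first member
    out="${out:+$out,}${pair%%=*}=https://${pair#*=}:2380"
  done
  printf '%s\n' "$out"
}

# Example, inside the unquoted heredoc on k8s3:
#   initial-cluster: "$(build_initial_cluster $CP0_HOSTNAME=$CP0_IP $CP1_HOSTNAME=$CP1_IP $CP2_HOSTNAME=$CP2_IP)"
```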

## Configure k8s3

```shell
# Generate the configuration file
mkdir -p /opt/kubeadm-cfg && cd /opt/kubeadm-cfg
CP0_IP="10.79.167.26"
CP0_HOSTNAME="k8s1"
CP1_IP="10.79.167.27"
CP1_HOSTNAME="k8s2"
CP2_IP="10.79.167.28"
CP2_HOSTNAME="k8s3"
cat >kubeadm-master.config<<EOF
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
kubernetesVersion: v1.11.1
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- "k8s1"
- "k8s2"
- "k8s3"
- "10.79.167.26"
- "10.79.167.27"
- "10.79.167.28"
- "10.79.166.8"
- "127.0.0.1"
api:
  advertiseAddress: $CP2_IP
  controlPlaneEndpoint: 10.79.166.8:8443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://$CP2_IP:2379"
      advertise-client-urls: "https://$CP2_IP:2379"
      listen-peer-urls: "https://$CP2_IP:2380"
      initial-advertise-peer-urls: "https://$CP2_IP:2380"
      initial-cluster: "$CP0_HOSTNAME=https://$CP0_IP:2380,$CP1_HOSTNAME=https://$CP1_IP:2380,$CP2_HOSTNAME=https://$CP2_IP:2380"
      initial-cluster-state: existing
    serverCertSANs:
      - $CP2_HOSTNAME
      - $CP2_IP
    peerCertSANs:
      - $CP2_HOSTNAME
      - $CP2_IP
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  podSubnet: 10.244.0.0/16
kubeProxy:
  config:
    mode: ipvs
EOF
# Configure kubelet (certificates, kubelet config, env file, kubeconfig)
kubeadm alpha phase certs all --config kubeadm-master.config
kubeadm alpha phase kubelet config write-to-disk --config kubeadm-master.config
kubeadm alpha phase kubelet write-env-file --config kubeadm-master.config
kubeadm alpha phase kubeconfig kubelet --config kubeadm-master.config
systemctl restart kubelet
# Add this node's etcd member to the etcd cluster
rm -rf $HOME/.kube/*
mkdir -p $HOME/.kube
\cp /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
export KUBECONFIG=$HOME/.kube/config
kubectl exec -n kube-system etcd-${CP0_HOSTNAME} -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://${CP0_IP}:2379 member add ${CP2_HOSTNAME} https://${CP2_IP}:2380
kubeadm alpha phase etcd local --config kubeadm-master.config
# Pull the images in advance; if this fails, simply run it again
kubeadm config images pull --config kubeadm-master.config
# Deploy the control plane
kubeadm alpha phase kubeconfig all --config kubeadm-master.config
kubeadm alpha phase controlplane all --config kubeadm-master.config
kubeadm alpha phase mark-master --config kubeadm-master.config
```

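## Configure kubectl

```shell
# List the nodes
kubectl get nodes
# Nodes only show as Ready after the network plugin has also been installed and configured
# Allow the masters to run application pods and take part in the workload
kubectl taint nodes --all node-role.kubernetes.io/master-
```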
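Until the network plugin is up, every master reports `NotReady`. A small filter over `kubectl get nodes` makes it easy to see which nodes are still pending, for example in a wait loop.

It assumes the default column layout (`NAME STATUS ROLES AGE VERSION`). The helper name `not_ready_nodes` is hypothetical.

```shell
#!/usr/bin/env bash
# not_ready_nodes
# Reads `kubectl get nodes` output on stdin (header line first) and prints
# the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example: wait until every node is Ready
# while [ -n "$(kubectl get nodes | not_ready_nodes)" ]; do sleep 10; done
```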

配置网络组件calico(任意一台master执行)

# 下载配置

cd /opt/kubeadm-cfg && mkdir kube-calico && cd kube-calico

wget https://docs.projectcalico.org/v3.2/getting-started/kubernetes/installation/hosted/rbac-kdd.yaml

wget https://docs.projectcalico.org/v3.2/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml

sed -i 's/192.168/10.244/g' calico.yaml

kubectl apply -f .

# 查看

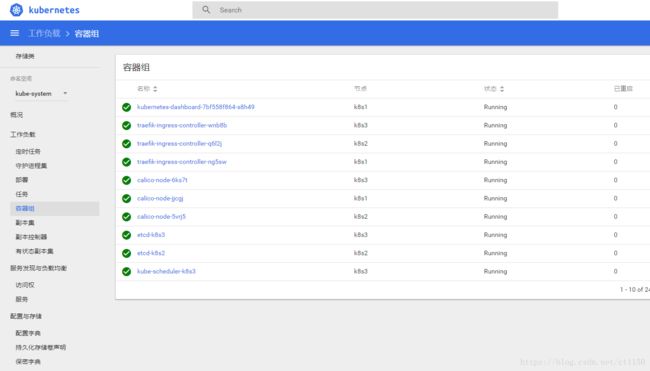

kubectl get pods --namespace kube-system

kubectl get svc --namespace kube-system
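The `sed` above works only if calico's pool ends up inside the kubeadm `podSubnet`. One way to confirm this is to check that the pod IPs (the IP column of `kubectl get pods -o wide`) fall inside 10.244.0.0/16. The same test also confirms that the VIP's `cidr_netmask=21` covers the masters' addresses.

Below is a pure-bash IPv4 CIDR check. The helper names `ip_to_int` and `cidr_contains` are hypothetical, and the code assumes dotted-quad input without leading zeros.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D
# Prints the IPv4 address as a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidr_contains NET/PREFIX IP
# Succeeds if IP lies inside NET/PREFIX (IPv4 only).
cidr_contains() {
  local net="${1%/*}" prefix="${1#*/}"
  # Build the netmask, e.g. /16 -> 0xFFFF0000 (64-bit shell arithmetic)
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$2") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Examples:
# cidr_contains 10.244.0.0/16 10.244.3.7      # pod IP inside podSubnet
# cidr_contains 10.79.160.0/21 10.79.167.26   # k8s1 on the VIP's /21 network
```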

配置命令自动补全

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

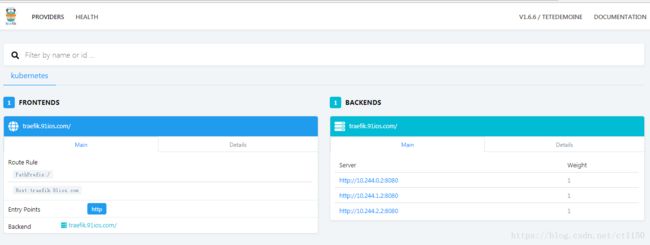

配置前端负载均衡 traefik

参考文档

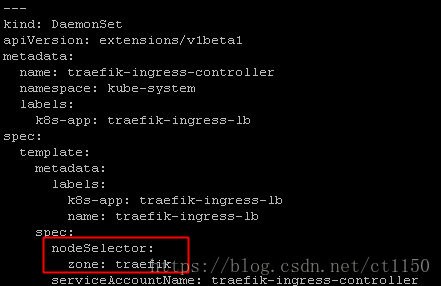

traefik可以两种方式运行,rs方式以及daemonset方式,这里选用daemonset方式,即每台node都运行一个traefik实例参与负载均衡

mkdir traefik-ds && cd traefik-ds

wget https://raw.githubusercontent.com/containous/traefik/master/examples/k8s/traefik-rbac.yaml

wget https://raw.githubusercontent.com/containous/traefik/master/examples/k8s/traefik-ds.yaml

wget https://raw.githubusercontent.com/containous/traefik/master/examples/k8s/ui.yaml

修改ui.yaml中host地址为自己的domain地址

修改traefik-ds.yaml,添加nodeSelector,让traefik只部署在master节点

#标记master节点用于部署traefik

kubectl label nodes k8s1 zone=traefik

kubectl label nodes k8s2 zone=traefik

kubectl label nodes k8s3 zone=traefik

kubectl apply -f .

访问: http://10.79.167.26:8080 或traefik.domain.com(提前做好解析)
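Instead of hand-editing traefik-ds.yaml, the same `nodeSelector` can be applied to an already-deployed DaemonSet with `kubectl patch`. `kubectl patch` uses a strategic merge patch by default.

This sketch only prints the patch body. The helper name `nodeselector_patch` is hypothetical. The DaemonSet name `traefik-ingress-controller` in `kube-system` in the example is an assumption based on the upstream example manifest of that time, so check it with `kubectl -n kube-system get ds`.

```shell
#!/usr/bin/env bash
# nodeselector_patch KEY VALUE
# Prints a strategic-merge patch that sets spec.template.spec.nodeSelector
# to {KEY: VALUE}, equivalent to the manual traefik-ds.yaml edit.
nodeselector_patch() {
  printf '{"spec":{"template":{"spec":{"nodeSelector":{"%s":"%s"}}}}}\n' "$1" "$2"
}

# Example (DaemonSet name assumed, see above):
# kubectl -n kube-system patch daemonset traefik-ingress-controller \
#   -p "$(nodeselector_patch zone traefik)"
```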

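## HTTPS proxying with traefik (the simplest method I have found)

Reference article: kubernets Traefik HTTP and HTTPS

Reference article: Accessing web applications over HTTPS with traefik on kubernetes

Reference article: kubernetes traefik HTTPS configuration, hands-on notes

Beforehand: install acme.sh and issue a wildcard certificate for *.domain.com.

I hit many pitfalls in the HTTPS step. Most tutorials online only cover a single certificate, so I combined them with the official documentation and worked out how to bind multiple certificates.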
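The default certificate only helps the hosts it actually covers. A wildcard such as `*.domain.com` matches exactly one leftmost label: `jenkins.domain.com` is covered, but `domain.com`, `a.b.domain.com` and any other domain are not. Those hosts need their own secrets, as in the multi-certificate part of this section.

A minimal sketch of that rule follows. It is case-sensitive and intended only for quick checks of ingress hostnames. The helper name `covered_by_wildcard` is hypothetical.

```shell
#!/usr/bin/env bash
# covered_by_wildcard HOST WILDCARD
# Succeeds if a certificate for WILDCARD (e.g. "*.domain.com") is valid for
# HOST: "*" stands for exactly one non-empty leftmost label.
covered_by_wildcard() {
  local host="$1" base="${2#\*.}"
  local label="${host%%.*}"
  [ -n "$label" ] && [ "$host" = "$label.$base" ]
}

# Example:
# covered_by_wildcard jenkins.domain.com '*.domain.com' && echo "default cert is enough"
```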

#创建证书secret

kubectl -n kube-system create secret generic traefik-cert --from-file=/opt/certs/domain.com.cert --from-file=/opt/certs/domain.com.key

#开启https并绑定默认证书

cat >configmap.yaml<<EOF

apiVersion: v1

kind: ConfigMap

metadata:

name: traefik-conf

namespace: kube-system

data:

traefik.toml: |

defaultEntryPoints = ["http","https"]

insecureSkipVerify = true

[entryPoints]

[entryPoints.http]

address = ":80"

[entryPoints.https]

address = ":443"

[entryPoints.https.tls]

[[entryPoints.https.tls.certificates]]

certFile = "/ssl/domain.com.cert"

keyFile = "/ssl/domain.com.key"

EOF

#traefik-ds.yaml中添加443端口、挂载配置以及证书

#配置生效

kubectl apply -f

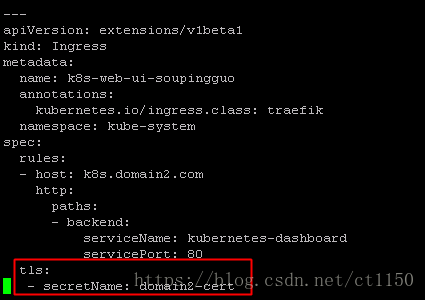

这里实现了单个证书的配置,想要实现多个证书的绑定,以下是全网最简单的方式

#创建tls类型的证书,注意不同namespace的证书secret不能通用,需要创建多个

kubectl create secret tls domain2-cert --cert=/opt/certs/domain2.com.cert --key=/opt/certs/domain2.com.key -n kube-system

需要绑定证书的ingress直接在配置文件中绑定对应的secret

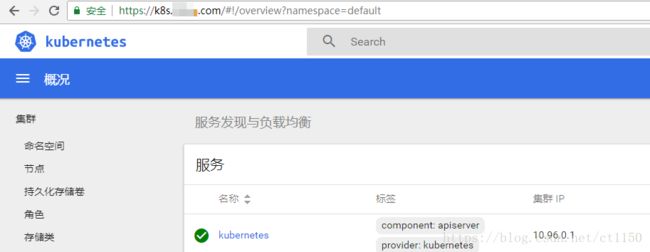
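The screenshot's manifest is not reproduced in the text, so here is a sketch of an Ingress that binds a secret through the standard `spec.tls` field. It uses the same `extensions/v1beta1` API and traefik annotation as the other ingresses in this article.

The function name `ingress_with_tls` and the names in the example (`app2`, `app2.domain2.com`) are hypothetical. The secret and the backend service must live in the Ingress's own namespace.

```shell
#!/usr/bin/env bash
# ingress_with_tls NAME NAMESPACE HOST SECRET SERVICE PORT
# Prints a traefik Ingress that serves HOST over TLS using SECRET
# (a tls-type secret in NAMESPACE) and routes "/" to SERVICE:PORT.
ingress_with_tls() {
  cat <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: $1
  namespace: $2
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  tls:
  - hosts:
    - $3
    secretName: $4
  rules:
  - host: $3
    http:
      paths:
      - path: /
        backend:
          serviceName: $5
          servicePort: $6
EOF
}

# Example (hypothetical service app2 in kube-system):
# ingress_with_tls app2 kube-system app2.domain2.com domain2-cert app2 80 | kubectl apply -f -
```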

## Deploy the dashboard

A graphical interface is more intuitive.

```shell
cd /opt/kubeadm-cfg && mkdir kube-dashboard && cd kube-dashboard
wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/alternative/kubernetes-dashboard.yaml
# Create the RBAC binding (this grants the dashboard service account cluster-admin)
cat >rbac.yaml<<EOF
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system
EOF
# Create the ingress
cat >ingress.yaml<<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: k8s-web-ui
  annotations:
    kubernetes.io/ingress.class: traefik
  namespace: kube-system
spec:
  rules:
  - host: k8s.domain.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kubernetes-dashboard
          servicePort: 80
EOF
kubectl apply -f .
```

## Application deployment example (jenkins)

As an example, deploy jenkins from an image in the private registry.

The private registry is hub.domain.com.

```shell
# Create a secret for logging in to the private registry
kubectl create secret docker-registry hubsecret --docker-server=hub.domain.com --docker-username=admin --docker-password=Harbor12345
# Create the manifest
cat >jenkins-templete.yaml<<EOF
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
spec:
  replicas: 1
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 2
      maxUnavailable: 0
  selector:
    matchLabels:
      app: jenkins
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      imagePullSecrets:
      - name: hubsecret
      containers:
      - name: jenkins
        image: hub.domain.com/docker/jenkins:1.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          name: web
          protocol: TCP
        - containerPort: 50000
          name: agent
          protocol: TCP
        volumeMounts:
        - name: jenkinshome
          mountPath: /var/jenkins_home
        env:
        - name: JAVA_OPTS
          value: "-Duser.timezone=Asia/Shanghai"
      volumes:
      - name: jenkinshome
        nfs:
          server: 10.79.163.29
          path: "/data/jenkins_home"
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: jenkins
  name: jenkins
spec:
  ports:
  - port: 8080
    targetPort: 8080
    name: web
  - port: 50000
    targetPort: 50000
    name: agent
  selector:
    app: jenkins
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: jenkins
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: jenkins.domain.com
    http:
      paths:
      - path: /
        backend:
          serviceName: jenkins
          servicePort: 8080
EOF
# Deploy
kubectl apply -f jenkins-templete.yaml
```

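## Add a new node

On the new node, first repeat these steps from above:

- Upgrade the kernel
- Install docker
- Install kubeadm, kubelet, and kubectl
- Configure system parameters
- Configure kubelet

Then:

```shell
# Open up the firewall (or allow only the required ports)
iptables -P INPUT ACCEPT
iptables -P OUTPUT ACCEPT
iptables -P FORWARD ACCEPT
# Add name resolution to /etc/hosts (required on every k8s node)
cat >>/etc/hosts<<EOF
10.79.167.26 k8s1
10.79.167.27 k8s2
10.79.167.28 k8s3
10.79.160.50 node1
10.79.160.51 node2
10.79.160.65 node3
10.79.160.67 node4
EOF
# On a master, print the command for joining a node
kubeadm token create --print-join-command
# Run the printed kubeadm join command on the new node
# If you are told the token has expired, create a new one
# (--ttl=0 creates a token that never expires)
token=$(kubeadm token generate)
kubeadm token create $token --print-join-command --ttl=0
```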
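When scripting node joins, it can help to pull individual values out of the printed join command. For example, you might log which token was used or compare it against `kubeadm token list`.

This sketch assumes the 1.11 form `kubeadm join HOST:PORT --token T --discovery-token-ca-cert-hash sha256:H`. The helper name `join_field` is hypothetical, and the token and hash in the example are placeholders.

```shell
#!/usr/bin/env bash
# join_field FLAG
# Reads a `kubeadm join ...` command line on stdin and prints the value
# that follows FLAG (e.g. --token or --discovery-token-ca-cert-hash).
join_field() {
  awk -v flag="$1" '{ for (i = 1; i < NF; i++) if ($i == flag) { print $(i + 1); exit } }'
}

# Example:
# JOIN=$(kubeadm token create --print-join-command)
# printf '%s\n' "$JOIN" | join_field --token
```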

## Troubleshooting

### etcd cluster troubleshooting
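A first check is whether all three members are present and exactly one leader is elected. You can list the members through the etcd pod, using the same certificate flags as the `member add` commands above:

`kubectl exec -n kube-system etcd-k8s1 -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://10.79.167.26:2379 member list`

The helpers below parse that output. They assume the etcdctl v2 line format (`ID: name=... peerURLs=... clientURLs=... isLeader=true|false`). The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# etcd_leader
# Reads `etcdctl member list` output (v2 format) on stdin and prints the
# name of the member marked isLeader=true.
etcd_leader() {
  awk '/isLeader=true/ {
    for (i = 1; i <= NF; i++)
      if ($i ~ /^name=/) { sub(/^name=/, "", $i); print $i; exit }
  }'
}

# etcd_member_count
# Prints how many members (started or not) the list contains.
etcd_member_count() {
  grep -c 'peerURLs='
}

# Example: pipe the member list into either helper; with three masters you
# would expect a count of 3 and exactly one leader name.
```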