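[Hadoop] 9. Errors Encountered During a Fully Distributed Installation of Hadoop 1.2.1

Errors

1. SSH configuration error: ssh login reports "The authenticity of host 192.168.0.xxx can't be established."

When I used ssh to log in to a machine whose IP address had changed, I was prompted to type yes. After that the screen kept printing y, and the only way out was Ctrl+C.

The error was: The authenticity of host 192.168.0.xxx can't be established.

A colleague and I had hit this problem before and solved it, but we never wrote the fix down. This time I had no idea what to do. Luckily the QQ chat history was still around, and searching it turned up the solution:

Run ssh -o StrictHostKeyChecking=no 192.168.0.xxx and the login works.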

Source:

http://blog.ossxp.com/2010/04/1026/

I'm writing this down so I don't forget it again. It's almost 3 a.m. again; so many sleepless nights.

2011/10/11

One day the machine's IP changed again, and ssh reported:

mmt@FS01:~$ ssh -o StrictHostKeyChecking=no 192.168.0.130

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

IT IS POSSIBLE THAT SOMEONE IS DOING SOMETHING NASTY!

Someone could be eavesdropping on you right now (man-in-the-middle attack)!

It is also possible that the RSA host key has just been changed.

The fingerprint for the RSA key sent by the remote host is

fe:d6:f8:59:03:a5:de:e8:29:ef:3b:26:6e:3d:1d:4b.

Please contact your system administrator.

Add correct host key in /home/mmt/.ssh/known_hosts to get rid of this message.

Offending key in /home/mmt/.ssh/known_hosts:38

Password authentication is disabled to avoid man-in-the-middle attacks.

Keyboard-interactive authentication is disabled to avoid man-in-the-middle attacks.

Permission denied (publickey,password).

Note this line:

Add correct host key in /home/mmt/.ssh/known_hosts to get rid of this message.

Run:

mv /home/mmt/.ssh/known_hosts known_hosts.bak

Then connect again:

ssh -o StrictHostKeyChecking=no 192.168.0.130

It worked!
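Moving the whole known_hosts file aside also discards the stored keys of every other host. The warning names the exact entry ("Offending key in /home/mmt/.ssh/known_hosts:38"), so a narrower fix is to delete only that line. Below is a minimal sketch of that idea; the function name `remove_known_hosts_line` is hypothetical and not part of any SSH tooling.

```python
from pathlib import Path


def remove_known_hosts_line(path, line_no):
    """Remove the 1-based line `line_no` from a known_hosts file.

    A copy of the original file is saved next to it with a ".bak" suffix.
    Returns the removed line.
    """
    known_hosts = Path(path)
    lines = known_hosts.read_text().splitlines(keepends=True)
    if not 1 <= line_no <= len(lines):
        raise ValueError(f"{path} has {len(lines)} lines, cannot remove line {line_no}")
    # Keep a backup so the change can be undone.
    Path(str(known_hosts) + ".bak").write_text("".join(lines))
    removed = lines.pop(line_no - 1)
    known_hosts.write_text("".join(lines))
    return removed
```

For the warning above, the call would be `remove_known_hosts_line("/home/mmt/.ssh/known_hosts", 38)`. OpenSSH also ships `ssh-keygen -R 192.168.0.130`, which removes the entries for one host without needing the line number.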

In my case the cause was wrong permissions on my home folder under /home: it was set to 777, whereas it should be 700, so that the xiaofeng folder shows up as drwx------.
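With its default `StrictModes yes` setting, sshd checks the ownership and modes of the user's home directory and files before accepting a login, which is why a 777 home folder can break key-based login. The sketch below shows one way to detect and correct this. The function names are hypothetical, and the path `/home/xiaofeng` in the usage note is inferred from the folder name mentioned above.

```python
import os
import stat


def writable_by_others(path):
    """Return True if group or other users have write permission on `path`."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return bool(mode & (stat.S_IWGRP | stat.S_IWOTH))


def restrict_to_owner(path):
    """Set `path` to mode 700 (shown as drwx------ for a directory)."""
    os.chmod(path, 0o700)
```

Typical use would be `if writable_by_others("/home/xiaofeng"): restrict_to_owner("/home/xiaofeng")`, which has the same effect as running `chmod 700` on that folder.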

2. Error when starting Hadoop: org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode

2015-06-23 21:48:59,176 ERROR org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode: Exception in doCheckpoint

java.net.ConnectException: Call From hadoop/192.168.100.101 to hadoop:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731)

at org.apache.hadoop.ipc.Client.call(Client.java:1472)

at org.apache.hadoop.ipc.Client.call(Client.java:1399)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)

at com.sun.proxy.$Proxy9.getTransactionId(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.NamenodeProtocolTranslatorPB.getTransactionID(NamenodeProtocolTranslatorPB.java:125)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy10.getTransactionID(Unknown Source)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.countUncheckpointedTxns(SecondaryNameNode.java:641)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.shouldCheckpointBasedOnCount(SecondaryNameNode.java:649)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doWork(SecondaryNameNode.java:393)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode$1.run(SecondaryNameNode.java:361)

at org.apache.hadoop.security.SecurityUtil.doAsLoginUserOrFatal(SecurityUtil.java:412)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.run(SecondaryNameNode.java:357)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:607)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:705)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:368)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1521)

at org.apache.hadoop.ipc.Client.call(Client.java:1438)

… 18 more

2015-06-23 21:49:00,335 ERROR org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode: RECEIVED SIGNAL 15: SIGTERM

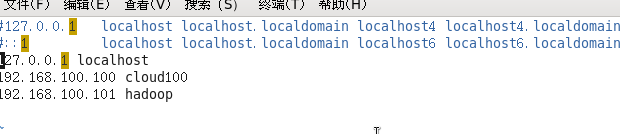

My fix was to delete the 127.0.0.0 localhost line from /etc/hosts, after which the error went away.

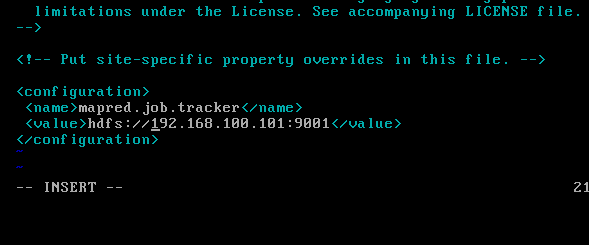

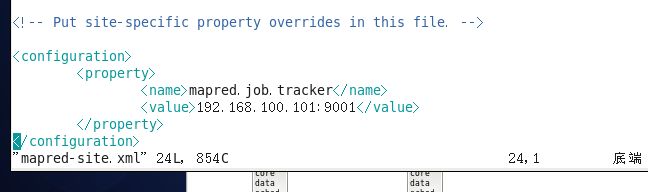
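The log shows the SecondaryNameNode calling hadoop:9000 from hadoop/192.168.100.101 and being refused. One common cause of this pattern is the cluster hostname also being mapped to a loopback address in /etc/hosts, so a quick check of which names sit on loopback lines can help. This is a minimal sketch; the function name `names_on_loopback` is hypothetical, and whether a given name should be on loopback depends on your setup.

```python
import ipaddress


def names_on_loopback(hosts_text):
    """Return the set of host names that a hosts file maps to a loopback address."""
    names = set()
    for raw_line in hosts_text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        try:
            address = ipaddress.ip_address(fields[0])
        except ValueError:
            continue  # not an address line, skip it
        if address.is_loopback:
            names.update(fields[1:])
    return names
```

For example, `"hadoop" in names_on_loopback(open("/etc/hosts").read())` would flag a configuration where the NameNode host name resolves to the local loopback interface.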

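3. My JobTracker won't start, i.e. http://localhost:50030 cannot be reached

Solution:

Add the following to mapred-site.xml, replacing your-host-ip with this machine's IP:

<property>
<name>mapred.job.tracker</name>
<value>your-host-ip:9001</value>
</property>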
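When the same property has to be set on several nodes, editing the file by script avoids copy-paste mistakes. The sketch below adds or updates one property in a Hadoop-style configuration file using Python's standard XML library. The function name `set_hadoop_property` is hypothetical. One known limitation: the standard parser drops XML comments and the stylesheet instruction when the file is rewritten.

```python
import xml.etree.ElementTree as ET


def set_hadoop_property(path, name, value):
    """Add or update a <property> entry inside the <configuration> root of `path`."""
    tree = ET.parse(path)
    root = tree.getroot()
    for prop in root.findall("property"):
        if prop.findtext("name") == name:
            # Property already present: update its value in place.
            value_element = prop.find("value")
            if value_element is None:
                value_element = ET.SubElement(prop, "value")
            value_element.text = value
            break
    else:
        # Property missing: append a new <property> block.
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    tree.write(path, encoding="utf-8", xml_declaration=True)
```

A call such as `set_hadoop_property("mapred-site.xml", "mapred.job.tracker", "192.168.100.101:9001")` would produce the entry described above; the IP is taken from the earlier log and should be replaced with your own.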

After the change:

ERROR org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode: Exception in doCheckpoint

java.io.IOException: Inconsistent checkpoint fields.

LV = -60 namespaceID = 2135182169 cTime = 0 ; clusterId = CID-7091440f-7848-45e8-b7e7-bb36386bd831 ; blockpoolId = BP-1880503318-192.168.100.101-1435108226909.

Expecting respectively: -60; 1151564106; 0; CID-f87e89d8-0bf7-461a-90a8-6a21445826e6; BP-1897535954-127.0.0.1-1435066561890.

at org.apache.hadoop.hdfs.server.namenode.CheckpointSignature.validateStorageInfo(CheckpointSignature.java:134)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doCheckpoint(SecondaryNameNode.java:531)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doWork(SecondaryNameNode.java:395)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode$1.run(SecondaryNameNode.java:361)

at org.apache.hadoop.security.SecurityUtil.doAsLoginUserOrFatal(SecurityUtil.java:412)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.run(SecondaryNameNode.java:357)

at java.lang.Thread.run(Thread.java:745)

2015-06-24 09:12:52,471 ERROR org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode: Exception in doCheckpoint

java.io.IOException: Inconsistent checkpoint fields.

LV = -60 namespaceID = 2135182169 cTime = 0 ; clusterId = CID-7091440f-7848-45e8-b7e7-bb36386bd831 ; blockpoolId = BP-1880503318-192.168.100.101-1435108226909.

Expecting respectively: -60; 1151564106; 0; CID-f87e89d8-0bf7-461a-90a8-6a21445826e6; BP-1897535954-127.0.0.1-1435066561890.

at org.apache.hadoop.hdfs.server.namenode.CheckpointSignature.validateStorageInfo(CheckpointSignature.java:134)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doCheckpoint(SecondaryNameNode.java:531)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doWork(SecondaryNameNode.java:395)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode$1.run(SecondaryNameNode.java:361)

at org.apache.hadoop.security.SecurityUtil.doAsLoginUserOrFatal(SecurityUtil.java:412)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.run(SecondaryNameNode.java:357)

at java.lang.Thread.run(Thread.java:745)

2015-06-24 09:13:52,492 ERROR org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode: Exception in doCheckpoint

java.io.IOException: Inconsistent checkpoint fields.

LV = -60 namespaceID = 2135182169 cTime = 0 ; clusterId = CID-7091440f-7848-45e8-b7e7-bb36386bd831 ; blockpoolId = BP-1880503318-192.168.100.101-1435108226909.

Expecting respectively: -60; 1151564106; 0; CID-f87e89d8-0bf7-461a-90a8-6a21445826e6; BP-1897535954-127.0.0.1-1435066561890.

at org.apache.hadoop.hdfs.server.namenode.CheckpointSignature.validateStorageInfo(CheckpointSignature.java:134)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doCheckpoint(SecondaryNameNode.java:531)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doWork(SecondaryNameNode.java:395)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode$1.run(SecondaryNameNode.java:361)

at org.apache.hadoop.security.SecurityUtil.doAsLoginUserOrFatal(SecurityUtil.java:412)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.run(SecondaryNameNode.java:357)

at java.lang.Thread.run(Thread.java:745)

2015-06-24 09:14:52,526 ERROR org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode: Exception in doCheckpoint

java.io.IOException: Inconsistent checkpoint fields.

LV = -60 namespaceID = 2135182169 cTime = 0 ; clusterId = CID-7091440f-7848-45e8-b7e7-bb36386bd831 ; blockpoolId = BP-1880503318-192.168.100.101-1435108226909.

Expecting respectively: -60; 1151564106; 0; CID-f87e89d8-0bf7-461a-90a8-6a21445826e6; BP-1897535954-127.0.0.1-1435066561890.

at org.apache.hadoop.hdfs.server.namenode.CheckpointSignature.validateStorageInfo(CheckpointSignature.java:134)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doCheckpoint(SecondaryNameNode.java:531)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doWork(SecondaryNameNode.java:395)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode$1.run(SecondaryNameNode.java:361)

at org.apache.hadoop.security.SecurityUtil.doAsLoginUserOrFatal(SecurityUtil.java:412)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.run(SecondaryNameNode.java:357)

at java.lang.Thread.run(Thread.java:745)

2015-06-24 09:15:52,576 ERROR org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode: Exception in doCheckpoint

java.io.IOException: Inconsistent checkpoint fields.

LV = -60 namespaceID = 2135182169 cTime = 0 ; clusterId = CID-7091440f-7848-45e8-b7e7-bb36386bd831 ; blockpoolId = BP-1880503318-192.168.100.101-1435108226909.

Expecting respectively: -60; 1151564106; 0; CID-f87e89d8-0bf7-461a-90a8-6a21445826e6; BP-1897535954-127.0.0.1-1435066561890.

at org.apache.hadoop.hdfs.server.namenode.CheckpointSignature.validateStorageInfo(CheckpointSignature.java:134)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doCheckpoint(SecondaryNameNode.java:531)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.doWork(SecondaryNameNode.java:395)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode$1.run(SecondaryNameNode.java:361)

at org.apache.hadoop.security.SecurityUtil.doAsLoginUserOrFatal(SecurityUtil.java:412)

at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.run(SecondaryNameNode.java:357)

at java.lang.Thread.run(Thread.java:745)

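I suspected a permissions problem on the tmp directory.

We use:

chown -R xiaofeng:xiaofeng tmp

Format: command, -R (recurse into subdirectories), user:group, folder

1 - First we added localhost back in

That didn't work!!

2 - We modified the mapred-site.xml file

Failed

3 - We reconfigured passwordless ssh login

Failed

4 - We modified the xml files and the slaves file

Failed....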
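The "Inconsistent checkpoint fields" message in this section prints two sets of values: the reported ones and the ones introduced by "Expecting respectively". Pairing them up shows exactly which identifiers disagree. Differing namespaceID, clusterId, and blockpoolId values commonly appear when the NameNode was reformatted while a checkpoint directory still holds state from the earlier format. That is a general observation, not something confirmed for this cluster. The helper name below is hypothetical.

```python
import re


def mismatched_checkpoint_fields(reported_line, expected_line):
    """Pair the fields of the two message lines and return those that differ.

    Returns {field_name: (reported_value, expected_value)}.
    """
    # "LV = -60 namespaceID = 2135182169 ..." becomes [("LV", "-60"), ...]
    reported = [(key, value.rstrip("."))
                for key, value in re.findall(r"(\w+) = (\S+)", reported_line)]
    # "Expecting respectively: -60; 1151564106; ..." becomes ["-60", ...]
    expected_text = expected_line.split(":", 1)[1]
    expected = [value.strip().rstrip(".") for value in expected_text.split(";")]
    if len(reported) != len(expected):
        raise ValueError("the two lines do not list the same number of fields")
    return {key: (rep, exp)
            for (key, rep), exp in zip(reported, expected)
            if rep != exp}
```

Applied to the two lines logged above, the function leaves out LV and cTime, which match, and returns the three identifiers that differ. Note that one blockpoolId embeds 192.168.100.101 and the other 127.0.0.1.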

4. Copying files into HDFS

WARN hdfs.DFSClient: DataStreamer Exception

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /in/input/test1.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.

After this error, I reconfigured the cluster following http://blog.csdn.net/stark_summer/article/details/43484545

5. ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: java.io.IOException: Incompatible namespaceIDs in /opt/hadoop-1.2/dfs/data: namenode namespaceID = 1387437550; datanode namespaceID = 804590804

ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: java.io.IOException: Incompatible namespaceIDs in /opt/hadoop-1.2/dfs/data: namenode namespaceID = 1387437550; datanode namespaceID = 804590804

at org.apache.hadoop.hdfs.server.datanode.DataStorage.doTransition(DataStorage.java:232)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:147)

at org.apache.hadoop.hdfs.server.datanode.DataNode.startDataNode(DataNode.java:414)

at org.apache.hadoop.hdfs.server.datanode.DataNode.<init>(DataNode.java:321)

at org.apache.hadoop.hdfs.server.datanode.DataNode.makeInstance(DataNode.java:1712)

at org.apache.hadoop.hdfs.server.datanode.DataNode.instantiateDataNode(DataNode.java:1651)

at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:1669)

at org.apache.hadoop.hdfs.server.datanode.DataNode.secureMain(DataNode.java:1795)

at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:1812)

Solution:

1. On each datanode, go into the current directory under dfs.data.dir and edit the VERSION file there:

Fri Nov 23 15:00:17 CST 2012

namespaceID=246015542

storageID=DS-2085496284-192.168.1.244-50010-1353654017403

cTime=0

storageType=DATA_NODE

layoutVersion=-32

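The file contains a namespaceID. Change it to the namenode namespaceID shown in the error message, for example

namenode namespaceID = 971169702

so that both IDs are the same.

After restarting, all the datanodes started normally.

2. Since this was a test environment, my first thought was simply to delete every file under the dfs.data.dir directory on each datanode: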

rm -rf *

After the deletion, a restart also brought all the datanodes up normally.

Looking in dfs.data.dir afterwards, every datanode had regenerated its files.
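Option 1 above can be scripted so that the ID is copied from the log rather than retyped. This is a minimal sketch under the assumption that the VERSION file has the plain key=value layout shown earlier; both function names are hypothetical.

```python
import re
from pathlib import Path


def namenode_namespace_id(error_line):
    """Extract the namenode namespaceID from an 'Incompatible namespaceIDs' log line."""
    match = re.search(r"namenode namespaceID = (\d+)", error_line)
    if match is None:
        raise ValueError("no namenode namespaceID found in the given line")
    return int(match.group(1))


def set_namespace_id(version_path, namespace_id):
    """Rewrite the namespaceID entry of a datanode VERSION file in place."""
    version_file = Path(version_path)
    text = version_file.read_text()
    new_text, count = re.subn(
        r"(?m)^namespaceID=.*$", f"namespaceID={namespace_id}", text
    )
    if count != 1:
        raise ValueError(
            f"expected exactly one namespaceID line in {version_path}, found {count}"
        )
    version_file.write_text(new_text)
```

With the error shown in this section, the file to edit would be /opt/hadoop-1.2/dfs/data/current/VERSION and the ID to write is 1387437550. Unlike option 2, this keeps the blocks already stored on the datanode.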