Analyzing and Fixing an Elasticsearch OOM Caused by TTL

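Summary:

When an index uses TTL and holds a very large number of documents, Elasticsearch nodes can run out of memory (OOM) if many documents expire at the same time. This article records the symptoms, the analysis, and the steps taken to fix the problem.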

1. Symptoms

Today our ES cluster ran out of memory (OOM). The ES version is 2.1.2, and the logs were as follows:

[2017-06-21 11:10:12,250][WARN ][monitor.jvm ] [dm_172.23.41.93:20002] [gc][young][535][56] duration [1.4s], collections [1]/[2.3s], total [1.4s]/[10.6s], memory [9.5gb]->[8.5gb]/[15.8gb], all_pools {[young] [1.3gb]->[26.5mb]/[1.4gb]}{[survivor] [191.3mb]->[191.3mb]/[191.3mb]}{[old] [8gb]->[8.3gb]/[14.1gb]}

[2017-06-21 11:14:06,057][WARN ][monitor.jvm ] [dm_172.23.41.93:20002] [gc][young][766][83] duration [2.1s], collections [1]/[3.1s], total [2.1s]/[15.7s], memory [12.6gb]->[11.7gb]/[15.8gb], all_pools {[young] [1.3gb]->[30.1mb]/[1.4gb]}{[survivor] [191.3mb]->[191.3mb]/[191.3mb]}{[old] [11.1gb]->[11.5gb]/[14.1gb]}

[2017-06-21 11:18:20,668][WARN ][monitor.jvm ] [dm_172.23.41.93:20002] [gc][old][985][17] duration [35.9s], collections [1]/[36.2s], total [35.9s]/[38.1s], memory [15.6gb]->[14.2gb]/[15.8gb], all_pools {[young] [1.4gb]->[96.6mb]/[1.4gb]}{[survivor] [148mb]->[0b]/[191.3mb]}{[old] [14gb]->[14.1gb]/[14.1gb]}

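Memory grew very fast: the 16 GB heap filled up within minutes of startup and could not be reclaimed. Restarting did not help, because the node quickly ran out of memory again. After repeated restarts and repeated OOMs, it was clear the cluster could not recover on its own.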
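The three warnings above show the old generation climbing from 8.3gb to 14.1gb out of a 14.1gb maximum after each collection, and the 35.9 s old-generation GC leaves the old pool completely full. To follow this trend across a longer log, a small helper can extract the `[old]` pool figures from each `monitor.jvm` line. `OldGenParser` is a hypothetical sketch, not part of ES. It assumes the exact `all_pools` layout shown in these 2.1.2 log lines.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: extracts the old-gen pool from ES 2.x monitor.jvm GC warnings.
final class OldGenParser {
    // Matches e.g. "[old] [8gb]->[8.3gb]/[14.1gb]" (before -> after / max).
    private static final Pattern OLD_POOL = Pattern.compile(
            "\\[old\\] \\[([\\d.]+)(b|kb|mb|gb)\\]->\\[([\\d.]+)(b|kb|mb|gb)\\]/\\[([\\d.]+)(b|kb|mb|gb)\\]");

    // Converts a value with an ES size suffix into megabytes.
    static double toMb(String value, String unit) {
        double v = Double.parseDouble(value);
        switch (unit) {
            case "gb": return v * 1024;
            case "mb": return v;
            case "kb": return v / 1024;
            default:   return v / (1024 * 1024); // plain bytes
        }
    }

    // Old-gen occupancy after the collection as a fraction of the pool max, or -1 if absent.
    static double oldGenOccupancyAfterGc(String logLine) {
        Matcher m = OLD_POOL.matcher(logLine);
        if (!m.find()) {
            return -1;
        }
        double after = toMb(m.group(3), m.group(4));
        double max = toMb(m.group(5), m.group(6));
        return after / max;
    }

    public static void main(String[] args) {
        String line = "[gc][old][985][17] duration [35.9s] ... all_pools {[young] [1.4gb]->[96.6mb]/[1.4gb]}"
                + "{[survivor] [148mb]->[0b]/[191.3mb]}{[old] [14gb]->[14.1gb]/[14.1gb]}";
        // prints "old gen after GC: 100.0%"
        System.out.printf(Locale.ROOT, "old gen after GC: %.1f%%%n", 100 * oldGenOccupancyAfterGc(line));
    }
}
```

A sustained ratio near 1.0 after old-generation collections, as in the third line, means the live data no longer fits in the heap.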

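Checking the indices, we found one with 1,283,152,933 documents and a total primary shard size of 996.1 GB. Its index metadata, including the mapping, is shown below: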

```json
{
  "cluster_name": "es-302",
  "metadata": {
    "indices": {
      "msg_center_log": {
        "settings": {
          "index": {
            "number_of_shards": "5",
            "creation_date": "1490263243013",
            "analysis": {
              "char_filter": {
                "extend_to_space": {
                  "mappings": [
                    "\"extend1\":\"=>\\b",
                    "\",\"venderId\":\"=>\\b",
                    "\",\"extend2\":\"=>\\b",
                    "\",\"type\"=>\\b"
                  ],
                  "type": "mapping"
                }
              },
              "analyzer": {
                "my_analyzer": {
                  "filter": "lowercase",
                  "char_filter": [
                    "extend_to_space",
                    "html_strip"
                  ],
                  "type": "custom",
                  "tokenizer": "standard"
                }
              }
            },
            "number_of_replicas": "1",
            "uuid": "ShmjnFHVS0W25E0EphFznA",
            "version": {
              "created": "2010399"
            }
          }
        },
        "mappings": {
          "message": {
            "_routing": { "required": true },
            "_ttl": { "default": 172800000, "enabled": true },
            "_timestamp": { "enabled": true },
            "_all": { "enabled": true },
            "properties": {
              "msgType": { "index": "not_analyzed", "store": true, "type": "string" },
              "source": { "index": "not_analyzed", "store": true, "type": "string" },
              "deviceId": { "index": "not_analyzed", "store": true, "type": "string" },
              "msgStatus": { "index": "not_analyzed", "store": true, "type": "string" },
              "content": { "analyzer": "my_analyzer", "store": true, "type": "string" },
              "pushType": { "index": "not_analyzed", "store": true, "type": "string" },
              "pin": { "index": "not_analyzed", "store": true, "type": "string" },
              "extend2": { "index": "not_analyzed", "store": true, "type": "string" },
              "msgNode": { "index": "not_analyzed", "store": true, "type": "string" },
              "msgTime": { "index": "not_analyzed", "store": true, "type": "string" },
              "extend1": { "index": "not_analyzed", "store": true, "type": "string" },
              "serviceNoId": { "index": "not_analyzed", "store": true, "type": "string" },
              "jmMsgType": { "index": "not_analyzed", "store": true, "type": "string" },
              "msgUniqueId": { "index": "not_analyzed", "store": true, "type": "string" },
              "timestamp": { "index": "not_analyzed", "store": true, "type": "string" }
            }
          }
        },
        "aliases": [],
        "state": "open"
      }
    },
    "cluster_uuid": "_na_",
    "templates": {}
  }
}
```

This shows that the index has TTL enabled, with a default TTL of 172800000 ms (48 hours).

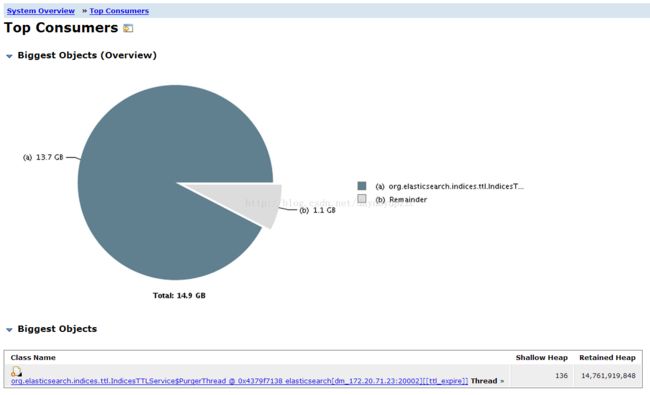
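The `_ttl` default in the mapping is in milliseconds. 172800000 ms is 48 hours, so every document becomes eligible for purging two days after it is indexed, unless it carries its own TTL. Below is a minimal sketch of that arithmetic. It assumes expiry = document timestamp + TTL. The `TtlMath` class and the timestamps are hypothetical and serve only as illustration.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical illustration of the TTL arithmetic; not ES code.
final class TtlMath {
    // "_ttl": {"default": 172800000} from the mapping above, in milliseconds.
    static final long DEFAULT_TTL_MS = 172_800_000L;

    // Assumed expiry time: document timestamp + TTL, both in epoch millis.
    static long expiresAt(long timestampMs, long ttlMs) {
        return timestampMs + ttlMs;
    }

    // A document is purge-eligible once its expiry time is <= now.
    static boolean isExpired(long timestampMs, long ttlMs, long nowMs) {
        return expiresAt(timestampMs, ttlMs) <= nowMs;
    }

    public static void main(String[] args) {
        System.out.println(Duration.ofMillis(DEFAULT_TTL_MS)); // PT48H
        long indexedAt = Instant.parse("2017-06-19T00:00:00Z").toEpochMilli(); // hypothetical
        long now = Instant.parse("2017-06-21T00:00:01Z").toEpochMilli();       // hypothetical
        System.out.println(Instant.ofEpochMilli(expiresAt(indexedAt, DEFAULT_TTL_MS))); // 2017-06-21T00:00:00Z
        System.out.println(isExpired(indexedAt, DEFAULT_TTL_MS, now)); // true
    }
}
```

The inclusive `<=` mirrors the purger's range query in section 3, which uses the current time as an inclusive upper bound.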

2. Memory analysis

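Take a heap dump of the ES process (PID 9326) with jmap:

```shell
jmap -dump:live,format=b,file=/export/dump/dump.hd 9326
```

Next, install MAT (Eclipse Memory Analyzer) on the Linux host, change into the MAT directory, and analyze the dump with the following commands:

```shell
./ParseHeapDump.sh /export/dump/dump.hd
./ParseHeapDump.sh /export/dump/dump.hd org.eclipse.mat.api:suspects
./ParseHeapDump.sh /export/dump/dump.hd org.eclipse.mat.api:overview
./ParseHeapDump.sh /export/dump/dump.hd org.eclipse.mat.api:top_components
```

These commands generate three reports: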

dump_Top_Components.zip

dump_System_Overview.zip

dump_Leak_Suspects.zip

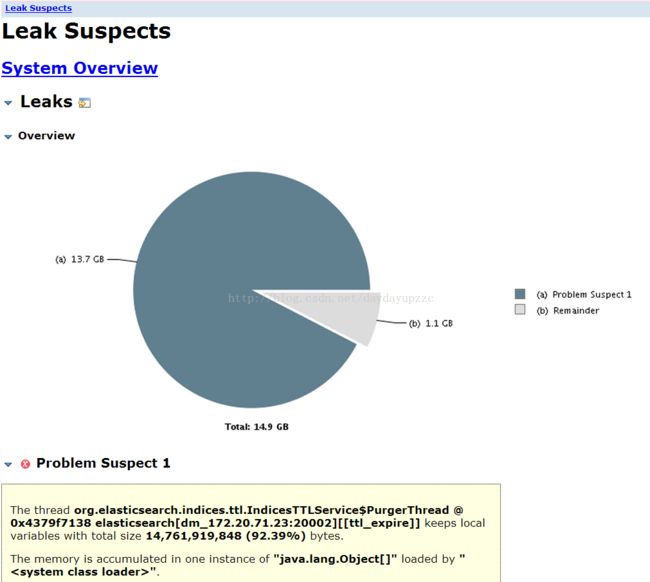
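When a JVM needs to dump its *own* heap instead of relying on an external jmap call, the HotSpot diagnostic MXBean provides an in-process equivalent of `jmap -dump:live`. The sketch below assumes a HotSpot-based JDK. `HeapDumper` is a hypothetical name. The sketch does not target a separate ES process, and for that case jmap as shown above is the simpler route.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.management.ManagementFactory;

// Sketch: in-process equivalent of "jmap -dump:live,format=b,file=..." for the CURRENT JVM.
final class HeapDumper {
    // Writes an HPROF dump; live=true keeps only reachable objects (like jmap's "live" option).
    // The file must not exist yet; the .hprof suffix is used because some JDKs require it.
    static File dump(File target, boolean live) {
        HotSpotDiagnosticMXBean bean = ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        try {
            bean.dumpHeap(target.getAbsolutePath(), live);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return target;
    }

    public static void main(String[] args) {
        File out = new File(System.getProperty("java.io.tmpdir"), "dump-" + System.nanoTime() + ".hprof");
        dump(out, true);
        System.out.println(out + ": " + out.length() + " bytes");
    }
}
```

MAT's `ParseHeapDump.sh` can then process the resulting file in the same way as the jmap output.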

Download the three files, unzip them, and open them in a browser:

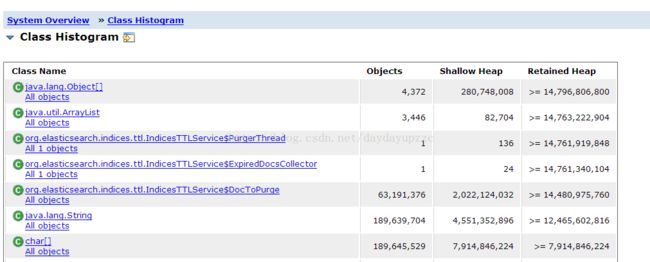

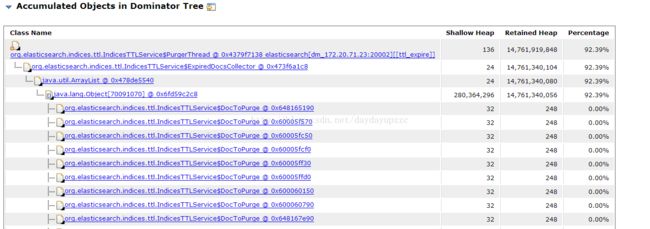

The reports show that org.elasticsearch.indices.ttl.IndicesTTLService$PurgerThread is where the problem lies.

3. Root cause analysis

In the ES source, the constructor of org.elasticsearch.indices.ttl.IndicesTTLService reads:

```java
@Inject
public IndicesTTLService(Settings settings, ClusterService clusterService, IndicesService indicesService, NodeSettingsService nodeSettingsService, TransportBulkAction bulkAction) {
    super(settings);
    this.clusterService = clusterService;
    this.indicesService = indicesService;
    // purge interval, 60s by default
    TimeValue interval = this.settings.getAsTime("indices.ttl.interval", TimeValue.timeValueSeconds(60));
    this.bulkAction = bulkAction;
    // number of expired documents sent per bulk delete request
    this.bulkSize = this.settings.getAsInt("indices.ttl.bulk_size", 10000);
    // periodic thread that purges TTL-expired documents (default interval 60s)
    this.purgerThread = new PurgerThread(EsExecutors.threadName(settings, "[ttl_expire]"), interval);
    nodeSettingsService.addListener(new ApplySettings());
}
```

The constructor sets up PurgerThread, a periodic task that purges expired documents. The task is defined as follows:

```java
@Override
public void run() {
    try {
        while (running.get()) {
            try {
                // primary shards that need TTL processing
                List<IndexShard> shardsToPurge = getShardsToPurge();
                // find the expired documents and send bulk delete requests
                purgeShards(shardsToPurge);
                // ... (rest of run() omitted)
```

The purgeShards() method is:

```java
private void purgeShards(List<IndexShard> shardsToPurge) {
    for (IndexShard shardToPurge : shardsToPurge) {
        // matches documents whose _ttl (expiry time) is <= now
        Query query = shardToPurge.indexService().mapperService().smartNameFieldType(TTLFieldMapper.NAME).rangeQuery(null, System.currentTimeMillis(), false, true);
        Engine.Searcher searcher = shardToPurge.acquireSearcher("indices_ttl");
        try {
            logger.debug("[{}][{}] purging shard", shardToPurge.routingEntry().index(), shardToPurge.routingEntry().id());
            ExpiredDocsCollector expiredDocsCollector = new ExpiredDocsCollector();
            // run the query through Lucene; the collector gathers every expired document
            searcher.searcher().search(query, expiredDocsCollector);
            // all expired documents of this shard, held in memory at once
            List<DocToPurge> docsToPurge = expiredDocsCollector.getDocsToPurge();
            BulkRequest bulkRequest = new BulkRequest();
            for (DocToPurge docToPurge : docsToPurge) {
                bulkRequest.add(new DeleteRequest().index(shardToPurge.routingEntry().index()).type(docToPurge.type).id(docToPurge.id).version(docToPurge.version).routing(docToPurge.routing));
                // send the bulk delete once it holds indices.ttl.bulk_size documents
                bulkRequest = processBulkIfNeeded(bulkRequest, false);
            }
            // flush the remaining deletes
            processBulkIfNeeded(bulkRequest, true);
            // ... (rest of the method omitted)
```

Comparing this with the dump analysis above confirms that the object holding about 13.7 GB of memory is this list:

`List<DocToPurge> docsToPurge`

There were 63,191,376 TTL-expired documents to delete at the same time, and their org.elasticsearch.indices.ttl.IndicesTTLService$DocToPurge objects filled up the heap.
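The key point in purgeShards() is that ExpiredDocsCollector gathers *every* expired document of a shard before the first bulk request goes out. Heap use therefore grows with the number of expired documents and not with `indices.ttl.bulk_size`. The simplified model below contrasts that shape with one that flushes while iterating. `Doc` and `PurgeModel` are hypothetical classes and not ES code. The streaming variant is only an illustration of the alternative, not something ES 2.1 does.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.stream.LongStream;

// Hypothetical stand-in for DocToPurge; the real one also carries type, version and routing.
final class Doc {
    final long id;
    Doc(long id) { this.id = id; }
}

// Simplified model (not ES code) of two ways to turn expired docs into bulk deletes.
final class PurgeModel {
    static final class Result {
        final int bulks;          // bulk requests sent
        final long peakBuffered;  // max Doc objects held at once
        Result(int bulks, long peakBuffered) { this.bulks = bulks; this.peakBuffered = peakBuffered; }
    }

    // ES 2.x shape: gather every expired doc first, then cut the list into bulks.
    static Result collectThenBatch(Iterator<Doc> expired, int bulkSize, Consumer<List<Doc>> sendBulk) {
        List<Doc> all = new ArrayList<>();
        while (expired.hasNext()) {
            all.add(expired.next()); // heap grows with the number of expired docs
        }
        int bulks = 0;
        for (int from = 0; from < all.size(); from += bulkSize) {
            sendBulk.accept(all.subList(from, Math.min(from + bulkSize, all.size())));
            bulks++;
        }
        return new Result(bulks, all.size());
    }

    // Alternative: flush while iterating, so at most bulkSize docs are buffered.
    static Result streamInBatches(Iterator<Doc> expired, int bulkSize, Consumer<List<Doc>> sendBulk) {
        List<Doc> batch = new ArrayList<>(bulkSize);
        long peak = 0;
        int bulks = 0;
        while (expired.hasNext()) {
            batch.add(expired.next());
            peak = Math.max(peak, batch.size());
            if (batch.size() == bulkSize) {
                sendBulk.accept(batch);
                batch = new ArrayList<>(bulkSize);
                bulks++;
            }
        }
        if (!batch.isEmpty()) {
            sendBulk.accept(batch);
            bulks++;
        }
        return new Result(bulks, peak);
    }

    // Fake source of n expired docs, produced lazily.
    static Iterator<Doc> expiredDocs(long n) {
        return LongStream.range(0, n).mapToObj(Doc::new).iterator();
    }

    public static void main(String[] args) {
        Result a = collectThenBatch(expiredDocs(25_000), 10_000, bulk -> { });
        Result b = streamInBatches(expiredDocs(25_000), 10_000, bulk -> { });
        System.out.println("collect-then-batch: bulks=" + a.bulks + " peak=" + a.peakBuffered); // bulks=3 peak=25000
        System.out.println("stream-in-batches:  bulks=" + b.bulks + " peak=" + b.peakBuffered); // bulks=3 peak=10000
    }
}
```

Both variants send the same number of bulk requests. Only the peak number of buffered documents differs, and with 63 million expired documents that peak is what exhausted the heap.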

Figures from the MAT reports (heap sizes in bytes):

| Class Name | Objects | Shallow Heap (bytes) | Retained Heap (bytes) |
|---|---|---|---|
| org.elasticsearch.indices.ttl.IndicesTTLService$PurgerThread | 1 | 136 | >= 14,761,919,848 |
| org.elasticsearch.indices.ttl.IndicesTTLService$PurgerThread | 1 | 24 | >= 14,761,340,104 |
| org.elasticsearch.indices.ttl.IndicesTTLService$DocToPurge | 63,191,376 | 2,022,124,032 | >= 14,480,975,760 |
| org.elasticsearch.indices.ttl.IndicesTTLService$DocToPurge | 189,639,704 | 4,551,352,896 | >= 12,465,602,816 |
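Some back-of-the-envelope numbers from the first DocToPurge row: 63,191,376 × 32 = 2,022,124,032 exactly, so each DocToPurge object costs 32 bytes by itself. Counting everything those objects alone keep alive (their retained heap), the cost is about 229 bytes per expired document. At the default `indices.ttl.bulk_size` of 10000, deleting them takes 6,320 bulk requests, yet the whole list must be in memory before the first request is sent. The PurgerThread's retained 14,761,919,848 bytes come to about 13.75 GiB, which appears to be the 13.7 GB figure quoted above. `HeapMath` is a hypothetical helper.

```java
import java.util.Locale;

// Back-of-the-envelope figures from the MAT table above (all sizes in bytes).
final class HeapMath {
    static final long DOCS = 63_191_376L;                 // DocToPurge objects
    static final long SHALLOW = 2_022_124_032L;           // their shallow heap
    static final long RETAINED = 14_480_975_760L;         // their retained heap
    static final long THREAD_RETAINED = 14_761_919_848L;  // PurgerThread retained heap
    static final int BULK_SIZE = 10_000;                  // indices.ttl.bulk_size default

    // Average bytes per object, rounded down.
    static long perObject(long totalBytes, long count) {
        return totalBytes / count;
    }

    // Number of bulk delete requests needed, rounding up.
    static long bulkRequests(long docs, int bulkSize) {
        return (docs + bulkSize - 1) / bulkSize;
    }

    public static void main(String[] args) {
        System.out.println(perObject(SHALLOW, DOCS));      // 32
        System.out.println(perObject(RETAINED, DOCS));     // 229
        System.out.println(bulkRequests(DOCS, BULK_SIZE)); // 6320
        System.out.println(String.format(Locale.ROOT, "%.2f GiB", THREAD_RETAINED / (double) (1L << 30))); // 13.75 GiB
    }
}
```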

4. Solution

Dynamically update the index setting

index.ttl.disable_purge

[experimental] Disables the purge of expired docs on the current index.

This turns off TTL purging, so ES no longer processes TTL-expired documents in that index.

```
PUT index1/_settings
{
  "ttl.disable_purge": true
}
```

Only after this change could ES start normally. The expired documents then have to be deleted by other means.

For the configuration reference, see: https://www.elastic.co/guide/en/elasticsearch/reference/2.1/index-modules.html
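When the settings change has to be scripted, for example across every index that uses TTL, the same `PUT` can be sent from Java 11's built-in HTTP client. This is a minimal sketch. The endpoint `http://localhost:9200` and the index name `msg_center_log` are assumptions to adjust for the real cluster. `DisablePurge` is a hypothetical name. The boolean parameter lets the same call set the value back to `false` later.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Sketch (Java 11+): sends the index settings update {"ttl.disable_purge": ...} over HTTP.
final class DisablePurge {
    // Builds PUT <baseUrl>/<index>/_settings with {"ttl.disable_purge": <disable>}.
    static HttpRequest buildRequest(String baseUrl, String index, boolean disable) {
        String body = "{\"ttl.disable_purge\": " + disable + "}";
        return HttpRequest.newBuilder(URI.create(baseUrl + "/" + index + "/_settings"))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Assumed HTTP endpoint and index name; adjust for the real cluster.
        HttpRequest req = buildRequest("http://localhost:9200", "msg_center_log", true);
        HttpResponse<String> resp = HttpClient.newHttpClient().send(req, HttpResponse.BodyHandlers.ofString());
        System.out.println(resp.statusCode() + " " + resp.body());
    }
}
```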

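Conclusion: later versions of Elasticsearch removed the TTL feature (_ttl was deprecated in 2.0 and removed in 5.0), so it is best not to rely on TTL. If documents need to expire, create one index per day and delete whole indices once they expire. This is fast and simple, and it is also the approach the official documentation recommends.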
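The per-day index approach replaces per-document expiry with a simple rule. Documents are written to the current day's index, and any daily index whose documents are *all* older than the retention period gets dropped as a whole. The sketch below assumes a hypothetical `<prefix>-yyyy.MM.dd` naming scheme and a 2-day retention matching the original 48-hour TTL. `DailyIndices` is a hypothetical helper. The deletion itself would be a `DELETE /<index>` call per returned name.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch of time-based indices: one index per day, whole indices dropped after the retention period.
final class DailyIndices {
    // Hypothetical naming scheme: <prefix>-yyyy.MM.dd
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy.MM.dd");

    // Index that documents written on the given day go to.
    static String indexFor(String prefix, LocalDate day) {
        return prefix + "-" + DAY.format(day);
    }

    // Daily indices in which every document is older than retentionDays.
    static List<String> indicesToDelete(String prefix, List<String> existing, LocalDate today, int retentionDays) {
        LocalDate oldestKept = today.minusDays(retentionDays);
        List<String> toDelete = new ArrayList<>();
        for (String name : existing) {
            if (!name.startsWith(prefix + "-")) {
                continue; // not one of our daily indices
            }
            LocalDate day = LocalDate.parse(name.substring(prefix.length() + 1), DAY);
            if (day.isBefore(oldestKept)) {
                toDelete.add(name);
            }
        }
        return toDelete;
    }

    public static void main(String[] args) {
        LocalDate today = LocalDate.of(2017, 6, 21);
        List<String> existing = Arrays.asList(
                "msg_center_log-2017.06.18", "msg_center_log-2017.06.19",
                "msg_center_log-2017.06.20", "msg_center_log-2017.06.21");
        System.out.println(indexFor("msg_center_log", today));                    // msg_center_log-2017.06.21
        System.out.println(indicesToDelete("msg_center_log", existing, today, 2)); // [msg_center_log-2017.06.18]
    }
}
```

Dropping an index removes its files directly and involves no per-document delete requests, so the memory problem analyzed above does not arise.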