scrapy爬虫遇坑爬坑记录

①scrapy新建项目:

scrapy startproject xxx(项目名)

②cd至项目目录下输入命令:

scrapy genspider mytianya(爬虫名) "bbs.tianya.cn"(域名)

③新获取页面body查看结构:

在def parse(self,response)方法下下添加:html_bd=response.body.decode('gbk')

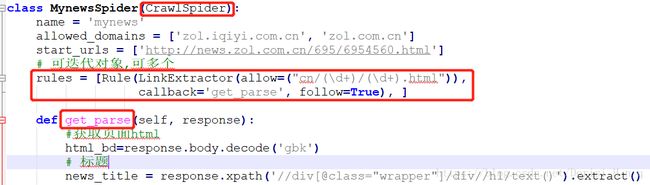

④翻页需要添加CrawlSpider,Rule,LinkExtractor,不能占用parse:

from scrapy.spiders import CrawlSpider, Rule

from scrapy.linkextractors import LinkExtractor

rules = [Rule(LinkExtractor(allow=("cn/(\d+)/(\d+).html")),

callback='get_parse', follow=True), ]⑤添加start.py文件运行并保存爬取的数据:

import scrapy.cmdline

def main():

scrapy.cmdline.execute(['scrapy', 'crawl', 'mynews','-o','news.csv'])

if __name__ == '__main__':

main()

⑥设置settings:

ROBOTSTXT_OBEY = False

DEFAULT_REQUEST_HEADERS = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36'

}

#如果想直接存储数据库需要打开该项

ITEM_PIPELINES = {

'zgcNews.pipelines.ZgcnewsPipeline': 300,

}⑦pipelines.py添加数据库连接配置:

遇坑记录:

1.创建数据库的结构采用char字段,然而爬取的存储字段非char(字段类型不对应,检查item['xx']=yy中print(yy)查看类型)

2.数据库对应的库及表名不存在(未更正成要存储的库)

3.存储的字段数量不对应(比如少写几个%r或者几个item['xx'])

4.使用item=classname(),item[xxx]=xxx中item['xxx']的xxx应当加上双引号(不加不显示错误但运行报错)

import pymysql

class ZgcnewsPipeline(object):

def __init__(self):

# 连接数据库

self.conn = None

# 游标

self.cur = None

def open_spider(self, spider):

self.conn = pymysql.connect(host='127.0.0.1',

user='root',

password="xxx",

database='myitem',

port=3306,

charset='utf8')

self.cur = self.conn.cursor()

def process_item(self, item, spider):

clos, value = zip(*item.items())

'''

zip()

'''

# sql = "INSERT INTO `%s`(%s) VALUES (%s)" % ('tb_news',

# ','.join(clos),

# ','.join(['%s']*len(value)))

# self.cur.execute(sql, value)

sql="INSERT INTO tb_news(news_title, news_content, news_img, news_time, hot_img,key_word) " \

"VALUES (%r,%r,%r,%r,%r,%r)" \

" " % (item['news_title'],item['news_content'],item['news_img'],item['news_time'],item['hot_img'],item['key_word'])

print('sql---',sql)

# 执行

self.cur.execute(sql)

self.conn.commit()

return item

def close_spider(self, spider):

self.cur.close()

self.conn.close()