MySQL High-Availability Cluster Based on MHA

Node layout:

| Role | Hostname | IP | server-id | Function |
|---|---|---|---|---|
| Master (monitor host) | server1 | 172.25.30.1 | 1 | Monitors the replication group (manager) |
| Candidate master | server2 | 172.25.30.2 | 2 | Read |
| Slave | server3 | 172.25.30.3 | 3 | Read |

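1. Set up GTID-based semi-synchronous master-slave replication on the cluster nodes:

server1: master (node1), also runs the MHA manager

server2: slave (node2)

server3: slave (node3)

All three nodes use the same configuration file, apart from the server-id values listed in the table above. Create the same user on every node and grant it privileges.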

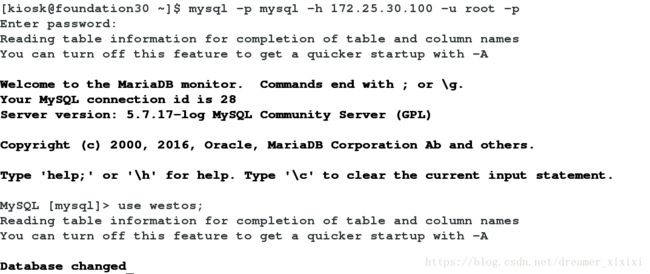

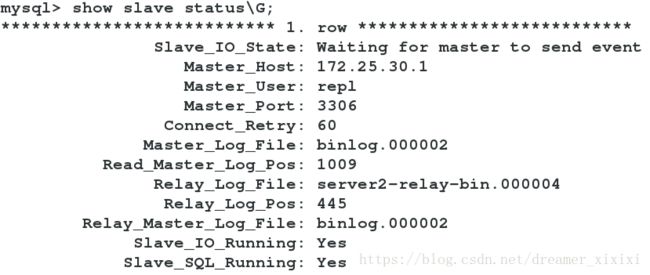
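The images above presumably show the author's actual settings. As a rough sketch of what a GTID plus semi-sync `[mysqld]` section commonly looks like on MySQL 5.7, the helper below prints one fragment per node. The function name `gtid_semisync_fragment`, the `mysql-bin` log name, and every option value are assumptions for illustration, not taken from the images.

```shell
#!/usr/bin/env bash
# Print a hypothetical my.cnf [mysqld] fragment for GTID + semi-sync replication.
# Only server_id differs between nodes; all values below are assumptions.
gtid_semisync_fragment() {
  local server_id="$1"
  cat <<EOF
[mysqld]
server_id=${server_id}
log_bin=mysql-bin
gtid_mode=ON
enforce_gtid_consistency=ON
log_slave_updates=ON
plugin_load="rpl_semi_sync_master=semisync_master.so;rpl_semi_sync_slave=semisync_slave.so"
rpl_semi_sync_master_enabled=1
rpl_semi_sync_slave_enabled=1
EOF
}

# Example: the fragment for server2 (server-id 2 in the table above).
gtid_semisync_fragment 2
```

Loading both semi-sync plugins on every node is a deliberate choice in this sketch: after a failover any node may end up as master or as slave.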

Configure the GTID-based semi-synchronous replication:

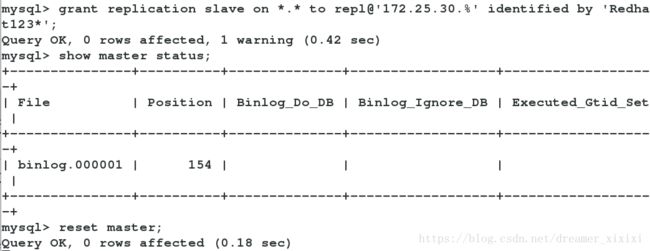

On the master, grant privileges to the user.

On each slave, create the same user as on the master and grant it the same privileges.

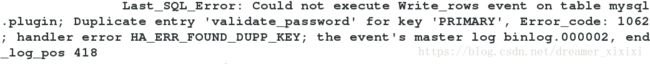

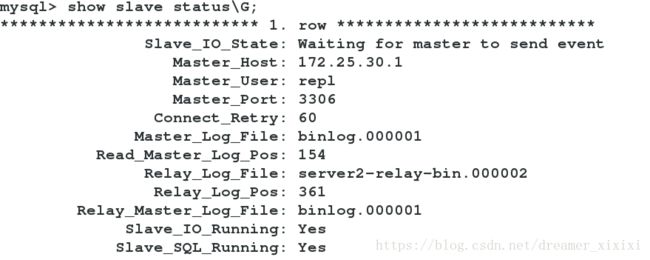

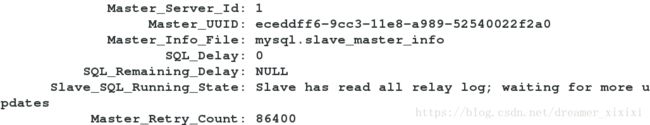

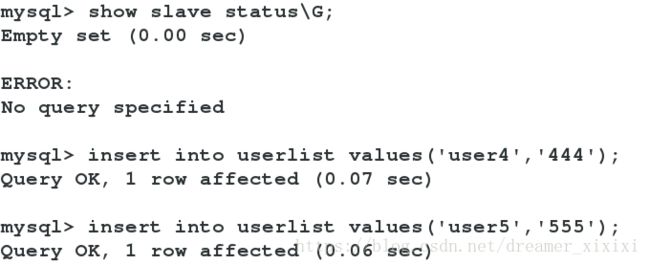

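If the slave status shows an error at this point, the cause is that the user-creation statement already executed on the master is replicated to the slave, where the user already exists, so the slave refuses to execute it a second time and reports an error.

Fix: on the slave, run stop slave;

On the master, run reset master;

Then on the slave, run reset slave, then start slave, then show slave status\G;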

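2. MHA manager:

The Manager package mainly provides the following tools:

masterha_check_ssh: checks MHA's SSH configuration

masterha_check_repl: checks MySQL replication status

masterha_manager: starts MHA

masterha_check_status: checks the current MHA running state

masterha_master_monitor: detects whether the master is down

masterha_master_switch: controls failover (automatic or manual)

masterha_conf_host: adds or removes configured server entries

[root@server1 ~]# ls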

mha4mysql-manager-0.56-0.el6.noarch.rpm

mha4mysql-node-0.56-0.el6.noarch.rpm

perl-Config-Tiny-2.12-7.1.el6.noarch.rpm

perl-Email-Date-Format-1.002-5.el6.noarch.rpm

perl-Log-Dispatch-2.27-1.el6.noarch.rpm

perl-Mail-Sender-0.8.16-3.el6.noarch.rpm

perl-Mail-Sendmail-0.79-12.el6.noarch.rpm

perl-MIME-Lite-3.027-2.el6.noarch.rpm

perl-MIME-Types-1.28-2.el6.noarch.rpm

perl-Parallel-ForkManager-0.7.9-1.el6.noarch.rpm

[root@server1 ~]# yum install * -y

[root@server1 ~]# mkdir /etc/masterha

[root@server1 ~]# cd /etc/masterha/

[root@server1 masterha]# ls

3. Generate an SSH key pair so that all hosts in the cluster can SSH to each other without a password:

[root@server1 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

64:a7:ef:78:bf:0b:7b:9d:8d:bd:8d:87:5b:51:be:42 root@server1

The key's randomart image is:

+--[ RSA 2048]----+

| |

| |

| o . .|

| o o ..|

| S E ..|

| . . o|

| o o B.|

| o.o. *o=|

| ..oo+oo+o|

+-----------------+

[root@server1 .ssh]# ssh-copy-id 172.25.30.2

The authenticity of host '172.25.30.2 (172.25.30.2)' can't be established.

RSA key fingerprint is df:7b:e1:29:cb:eb:8b:07:40:9d:58:21:a3:31:73:32.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '172.25.30.2' (RSA) to the list of known hosts.

root@172.25.30.2's password:

Now try logging into the machine, with "ssh '172.25.30.2'", and check in:

.ssh/authorized_keys

[root@server1 .ssh]# ssh-copy-id 172.25.30.3

[root@server2 .ssh]# scp * root@172.25.30.1:.ssh/

root@172.25.30.1's password:

authorized_keys 100% 394 0.4KB/s 00:00

id_rsa 100% 1675 1.6KB/s 00:00

known_hosts 100% 1576 1.5KB/s 00:00

Test the connections between the nodes:

[root@server1 .ssh]# ssh server2

The authenticity of host 'server2 (172.25.30.2)' can't be established.

RSA key fingerprint is df:7b:e1:29:cb:eb:8b:07:40:9d:58:21:a3:31:73:32.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'server2' (RSA) to the list of known hosts.

[root@server1 .ssh]# ssh server3

Warning: Permanently added 'server3' (RSA) to the list of known hosts.

Last login: Thu Aug 9 04:51:47 2018 from 172.25.30.250

[root@server3 ~]# logout

Connection to server3 closed.

[root@server3 ~]# ssh server2

Last login: Thu Aug 9 06:20:26 2018 from server3

[root@server2 ~]# ssh server1

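Last login: Thu Aug 9 05:22:51 2018 from server3

Install the node package from the MHA bundle on server1 on all three hosts: server1, server2, and server3.

Copy the key files from server1 to server2 and server3 as well, so that every host can SSH to every other host without a password.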
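Before relying on the key distribution, it can help to confirm that every ordered pair of hosts really logs in without a prompt. The sketch below is a minimal illustration, assuming the three IPs from the table and the root login used throughout; the function name `check_ssh_mesh` and the `DRY_RUN` switch are hypothetical. By default it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Print (dry run) or execute a passwordless-SSH check for every ordered host pair.
HOSTS="172.25.30.1 172.25.30.2 172.25.30.3"
DRY_RUN="${DRY_RUN:-1}"   # set DRY_RUN=0 to actually run the checks

check_ssh_mesh() {
  local src dst cmd
  for src in $HOSTS; do
    for dst in $HOSTS; do
      [ "$src" = "$dst" ] && continue
      # BatchMode=yes makes ssh fail instead of prompting for a password.
      cmd="ssh -o BatchMode=yes root@${src} ssh -o BatchMode=yes root@${dst} true"
      if [ "$DRY_RUN" = "1" ]; then
        echo "$cmd"
      elif $cmd; then
        echo "ok: ${src} -> ${dst}"
      else
        echo "FAILED: ${src} -> ${dst}"
      fi
    done
  done
}

check_ssh_mesh
```

With three hosts this covers six directed pairs, the same pairs that masterha_check_ssh tests later in this walkthrough.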

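The Node package mainly provides the following tools (they are normally invoked by MHA Manager scripts and need no manual operation):

save_binary_logs: saves and copies the master's binary logs

apply_diff_relay_logs: identifies differential relay log events and applies them to the other slaves

filter_mysqlbinlog: strips unnecessary ROLLBACK events (MHA no longer uses this tool)

purge_relay_logs: purges relay logs (without blocking the SQL thread)

[root@server1 ~]# scp mha4mysql-node-0.56-0.el6.noarch.rpm server2: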

mha4mysql-node-0.56-0.el6.noarch.rpm 100% 35KB 35.5KB/s 00:00

[root@server1 ~]# scp mha4mysql-node-0.56-0.el6.noarch.rpm server3:

mha4mysql-node-0.56-0.el6.noarch.rpm 100% 35KB 35.5KB/s 00:00

[root@server1 ~]# yum install mha4mysql-node-0.56-0.el6.noarch.rpm -y

[root@server2 ~]# yum install mha4mysql-node-0.56-0.el6.noarch.rpm -y

[root@server3 ~]# yum install mha4mysql-node-0.56-0.el6.noarch.rpm -y

Create the configuration file used to check the node deployment:

[root@server1 .ssh]# vim /etc/masterha/app.cnf

[server default]

manager_workdir=/etc/masterha/ //manager working directory

manager_log=/etc/masterha/app1.log //manager log file

master_binlog_dir=/data/mysql //where the master keeps its binlogs, so that MHA can find them; here it is the MySQL data directory

master_ip_failover_script= /usr/local/bin/master_ip_failover //switch script used during automatic failover

master_ip_online_change_script= /usr/local/bin/master_ip_online_change //switch script used during manual switchover

password=Cqmyg+666 //password of the MySQL root user

user=root //MySQL user (root)

ping_interval=1 //interval in seconds between ping packets sent to the master (default 3); failover starts automatically after three attempts without a response

remote_workdir=/tmp //where the remote MySQL hosts save binlogs during a switch

repl_password=Redhat123* //password of the replication user

repl_user=repl //name of the replication user

report_script=/root/MHA/send_report //script that sends an alert after a switch

secondary_check_script= /usr/bin/masterha_secondary_check -s 172.25.31.1 -s 172.25.31.2

shutdown_script=""

ssh_user=root //SSH login user

[server1]

hostname=172.25.30.1

port=3306

#candidate_master=1

#check_repl_delay=0

[server2]

hostname=172.25.30.2

port=3306

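#candidate_master=1 //marks this host as a candidate master; with this set, the slave is promoted to master on failover even if it is not the slave with the most recent events

#check_repl_delay=0 //by default MHA will not pick a slave as the new master if it is more than 100MB of relay logs behind the master, because recovering it would take a long time; with check_repl_delay=0, MHA ignores replication delay when choosing the new master. This is very useful on a host with candidate_master=1, because that candidate must become the new master during a switch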

[server3]

hostname=172.25.30.3

port=3306

no_master=1

4. MHA SSH public-key check. It passes only if passwordless SSH is set up between all hosts in the cluster:

masterha_check_ssh --conf=/etc/masterha/app.cnf # pairwise connection test passes

[root@server1 ~]# masterha_check_ssh --conf=/etc/masterha/app.cnf

Sat Aug 11 02:54:37 2018 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Sat Aug 11 02:54:37 2018 - [info] Reading application default configuration from /etc/masterha/app.cnf..

Sat Aug 11 02:54:37 2018 - [info] Reading server configuration from /etc/masterha/app.cnf..

Sat Aug 11 02:54:37 2018 - [info] Starting SSH connection tests..

Sat Aug 11 02:54:37 2018 - [debug]

Sat Aug 11 02:54:37 2018 - [debug] Connecting via SSH from root@172.25.30.1(172.25.30.1:22) to root@172.25.30.2(172.25.30.2:22)..

Sat Aug 11 02:54:37 2018 - [debug] ok.

Sat Aug 11 02:54:37 2018 - [debug] Connecting via SSH from root@172.25.30.1(172.25.30.1:22) to root@172.25.30.3(172.25.30.3:22)..

Sat Aug 11 02:54:37 2018 - [debug] ok.

Sat Aug 11 02:54:38 2018 - [debug]

Sat Aug 11 02:54:37 2018 - [debug] Connecting via SSH from root@172.25.30.2(172.25.30.2:22) to root@172.25.30.1(172.25.30.1:22)..

Sat Aug 11 02:54:38 2018 - [debug] ok.

Sat Aug 11 02:54:38 2018 - [debug] Connecting via SSH from root@172.25.30.2(172.25.30.2:22) to root@172.25.30.3(172.25.30.3:22)..

Sat Aug 11 02:54:38 2018 - [debug] ok.

Sat Aug 11 02:54:39 2018 - [debug]

Sat Aug 11 02:54:38 2018 - [debug] Connecting via SSH from root@172.25.30.3(172.25.30.3:22) to root@172.25.30.1(172.25.30.1:22)..

Sat Aug 11 02:54:38 2018 - [debug] ok.

Sat Aug 11 02:54:38 2018 - [debug] Connecting via SSH from root@172.25.30.3(172.25.30.3:22) to root@172.25.30.2(172.25.30.2:22)..

Sat Aug 11 02:54:38 2018 - [debug] ok.

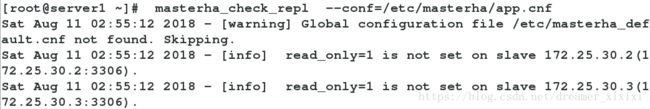

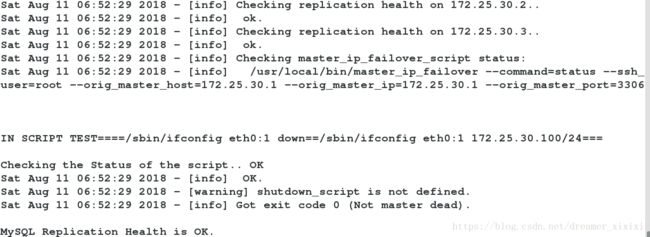

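Sat Aug 11 02:54:39 2018 - [info] All SSH connection tests passed successfully.

masterha_check_repl --conf=/etc/masterha/app.cnf # checks the replication setup described in the configuration file

Note: never put read-only in the permanent configuration file; otherwise, when a slave is promoted to master, no data can be written to it. Set it as a runtime variable inside MySQL instead.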

Sat Aug 11 02:55:12 2018 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Sat Aug 11 02:55:12 2018 - [info] Reading application default configuration from /etc/masterha/app.cnf..

Sat Aug 11 02:55:12 2018 - [info] Reading server configuration from /etc/masterha/app.cnf..

Sat Aug 11 02:55:12 2018 - [info] MHA::MasterMonitor version 0.56.

Sat Aug 11 02:55:12 2018 - [info] GTID failover mode = 1

Sat Aug 11 02:55:12 2018 - [info] Dead Servers:

Sat Aug 11 02:55:12 2018 - [info] Alive Servers:

Sat Aug 11 02:55:12 2018 - [info] 172.25.30.1(172.25.30.1:3306)

Sat Aug 11 02:55:12 2018 - [info] 172.25.30.2(172.25.30.2:3306)

Sat Aug 11 02:55:12 2018 - [info] 172.25.30.3(172.25.30.3:3306)

Sat Aug 11 02:55:12 2018 - [info] Current Alive Master: 172.25.30.1(172.25.30.1:3306)

Sat Aug 11 02:55:12 2018 - [info] Checking slave configurations..

Sat Aug 11 02:55:12 2018 - [info] read_only=1 is not set on slave 172.25.30.2(172.25.30.2:3306).

Sat Aug 11 02:55:12 2018 - [info] read_only=1 is not set on slave 172.25.30.3(172.25.30.3:3306).

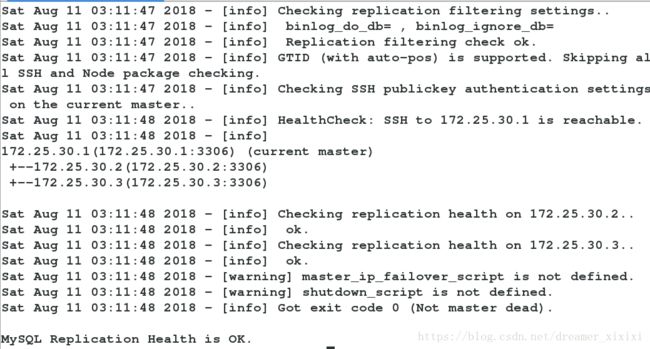

Sat Aug 11 02:55:12 2018 - [info] Checking replication filtering settings..

Sat Aug 11 02:55:12 2018 - [info] binlog_do_db= , binlog_ignore_db=

Sat Aug 11 02:55:12 2018 - [info] Replication filtering check ok.

Sat Aug 11 02:55:12 2018 - [info] GTID (with auto-pos) is supported. Skipping all SSH and Node package checking.

Sat Aug 11 02:55:12 2018 - [info] Checking SSH publickey authentication settings on the current master..

Sat Aug 11 02:55:12 2018 - [info] HealthCheck: SSH to 172.25.30.1 is reachable.

Sat Aug 11 02:55:12 2018 - [info]

172.25.30.1(172.25.30.1:3306) (current master)

+--172.25.30.2(172.25.30.2:3306)

+--172.25.30.3(172.25.30.3:3306)

Sat Aug 11 02:55:12 2018 - [info] Checking replication health on 172.25.30.2..

Sat Aug 11 02:55:12 2018 - [info] ok.

Sat Aug 11 02:55:12 2018 - [info] Checking replication health on 172.25.30.3..

Sat Aug 11 02:55:12 2018 - [info] ok.

Sat Aug 11 02:55:12 2018 - [warning] master_ip_failover_script is not defined.

Sat Aug 11 02:55:12 2018 - [warning] shutdown_script is not defined.

Sat Aug 11 02:55:12 2018 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

On server3, grant the root user remote login, then test the login from server1:

mysql> grant all on *.* to root@'172.25.30.%' identified by 'Redhat123*';

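Test 1: the check reports errors and warnings, so fix the setup accordingly:

[root@server1 ~]# masterha_check_repl --conf=/etc/masterha/app.cnf

The warning here says that read_only is not set on the slaves. Do not write it into the configuration file: if a slave is still read-only after it is promoted to master, the results are unexpected. Set it temporarily at runtime instead.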
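Setting read_only at runtime, rather than in my.cnf, could look roughly like the sketch below. It assumes the two slave IPs from the table and a root account that may log in remotely; the function name `set_slaves_read_only` and the `DRY_RUN` switch are hypothetical. By default it only prints the commands.

```shell
#!/usr/bin/env bash
# Dry run by default: print the commands that would set read_only at runtime.
SLAVES="172.25.30.2 172.25.30.3"
DRY_RUN="${DRY_RUN:-1}"   # set DRY_RUN=0 to actually run the commands

set_slaves_read_only() {
  local host cmd
  for host in $SLAVES; do
    # SET GLOBAL changes only the running server, so the value is lost on
    # restart and never ends up in the permanent configuration file.
    cmd="mysql -h ${host} -u root -p -e 'SET GLOBAL read_only=1;'"
    if [ "$DRY_RUN" = "1" ]; then
      echo "$cmd"
    else
      eval "$cmd"
    fi
  done
}

set_slaves_read_only
```

The switch log later in this walkthrough shows MHA setting read_only=0 on the new master itself, which is why a runtime value is enough here.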

Test again:

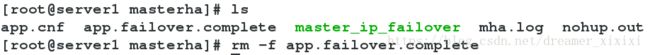

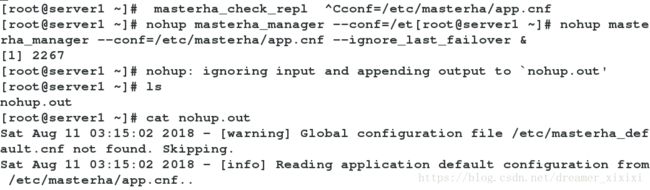

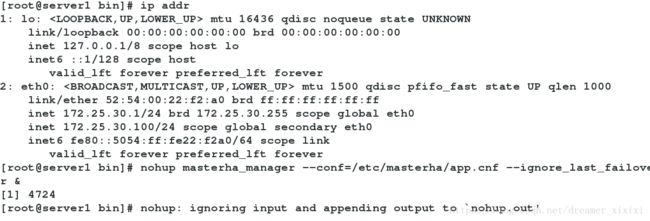

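Start the monitoring service on the manager:

nohup masterha_manager --conf=/etc/masterha/app.cnf --ignore_last_failover &

--ignore_last_failover # skips the check for the marker file left by a previous failover

Before another failover, the manager checks whether /etc/masterha/ contains an app.failover.complete file. If the file exists, the next failover is blocked, because switching too often within 8 hours can make the data unstable. For testing, either pass

--ignore_last_failover to skip the check, or delete the file after each switch.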
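To see whether such a marker file is currently in the way, a small check along these lines may help. It is only a sketch: the function name `recent_failover_marker` and the `MHA_WORKDIR` variable are hypothetical, and the 480-minute default simply mirrors the 8-hour window mentioned above.

```shell
#!/usr/bin/env bash
# List failover marker files in the manager work directory that are newer
# than the given number of minutes (480 minutes = 8 hours).
recent_failover_marker() {
  local workdir="$1" minutes="${2:-480}"
  # -mmin -N matches files modified less than N minutes ago.
  find "$workdir" -maxdepth 1 -name '*.failover.complete' -mmin "-${minutes}" -print
}

workdir="${MHA_WORKDIR:-/etc/masterha}"
if [ -d "$workdir" ] && [ -n "$(recent_failover_marker "$workdir")" ]; then
  echo "recent failover marker found: delete it or start with --ignore_last_failover"
else
  echo "no recent failover marker"
fi
```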

Manual switching covers two cases:

1. The master is alive and you want to promote one of the slaves to master:

masterha_master_switch --conf=/etc/masterha/app.cnf --master_state=alive --new_master_host=172.25.30.2 --new_master_port=3306 --orig_master_is_new_slave

[root@server1 ~]# masterha_master_switch --conf=/etc/masterha/app.cnf --master_state=alive --new_master_host=172.25.30.2 --new_master_port=3306 --orig_master_is_new_slave

Sat Aug 11 05:51:07 2018 - [info] GTID failover mode = 1

Sat Aug 11 05:51:07 2018 - [info] Current Alive Master: 172.25.30.1(172.25.30.1:3306)

Sat Aug 11 05:51:07 2018 - [info] Alive Slaves:

Sat Aug 11 05:51:07 2018 - [info] 172.25.30.2(172.25.30.2:3306) Version=5.7.17-log (oldest major version between slaves) log-bin:enabled

Sat Aug 11 05:51:07 2018 - [info] GTID ON

Sat Aug 11 05:51:07 2018 - [info] Replicating from 172.25.30.1(172.25.30.1:3306)

Sat Aug 11 05:51:07 2018 - [info] 172.25.30.3(172.25.30.3:3306) Version=5.7.17-log (oldest major version between slaves) log-bin:enabled

Sat Aug 11 05:51:07 2018 - [info] GTID ON

Sat Aug 11 05:51:07 2018 - [info] Replicating from 172.25.30.1(172.25.30.1:3306)

Sat Aug 11 05:51:07 2018 - [info] Not candidate for the new Master (no_master is set)

Sat Aug 11 05:51:09 2018 - [info] 172.25.30.2 can be new master.

Sat Aug 11 05:51:09 2018 - [info]

From:

172.25.30.1(172.25.30.1:3306) (current master)

+--172.25.30.2(172.25.30.2:3306)

+--172.25.30.3(172.25.30.3:3306)

To:

172.25.30.2(172.25.30.2:3306) (new master)

+--172.25.30.3(172.25.30.3:3306)

+--172.25.30.1(172.25.30.1:3306)

Starting master switch from 172.25.30.1(172.25.30.1:3306) to 172.25.30.2(172.25.30.2:3306)? (yes/NO): yes

Sat Aug 11 05:51:11 2018 - [info] Checking whether 172.25.30.2(172.25.30.2:3306) is ok for the new master.

Sat Aug 11 05:51:14 2018 - [info] All other slaves should start replication from here. Statement should be: CHANGE MASTER TO MASTER_HOST='172.25.30.2', MASTER_PORT=3306, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='xxx';

Sat Aug 11 05:51:14 2018 - [info] Setting read_only=0 on 172.25.30.2(172.25.30.2:3306)..

Sat Aug 11 05:51:14 2018 - [info] ok.

Sat Aug 11 05:51:14 2018 - [info]

Sat Aug 11 05:51:14 2018 - [info] * Switching slaves in parallel..

Sat Aug 11 05:51:14 2018 - [info]

Sat Aug 11 05:51:14 2018 - [info] -- Slave switch on host 172.25.30.3(172.25.30.3:3306) started, pid: 4593

Sat Aug 11 05:51:14 2018 - [info]

Sat Aug 11 05:51:15 2018 - [info] Log messages from 172.25.30.3 ...

Sat Aug 11 05:51:15 2018 - [info]

Sat Aug 11 05:51:15 2018 - [info] Starting orig master as a new slave..

Sat Aug 11 05:51:15 2018 - [info] Resetting slave 172.25.30.1(172.25.30.1:3306) and starting replication from the new master 172.25.30.2(172.25.30.2:3306)..

Sat Aug 11 05:51:16 2018 - [info] Executed CHANGE MASTER.

Sat Aug 11 05:51:17 2018 - [info] Slave started.

Sat Aug 11 05:51:17 2018 - [info] All new slave servers switched successfully.

Sat Aug 11 05:51:17 2018 - [info] 172.25.30.2: Resetting slave info succeeded.

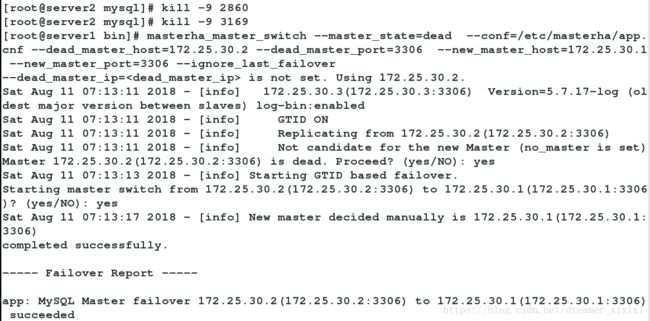

Sat Aug 11 05:51:17 2018 - [info] Switching master to 172.25.30.2(172.25.30.2:3306) completed successfully.

Case 2: the current master is dead and the switch goes to a live server:

masterha_master_switch --master_state=dead --conf=/etc/masterha/app.cnf --dead_master_host=172.25.30.2 --dead_master_port=3306 --new_master_host=172.25.30.1 --new_master_port=3306 --ignore_last_failover

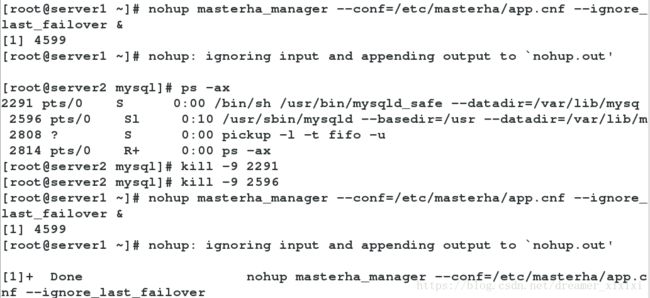

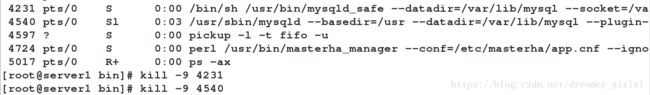

Automatic switching:

This happens while the manager is monitoring:

nohup masterha_manager --conf=/etc/masterha/app.cnf --ignore_last_failover &

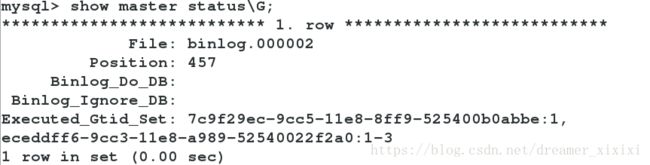

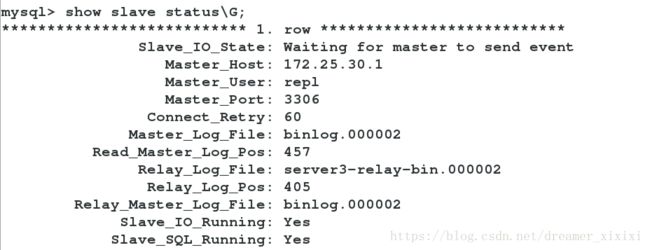

查看切换是否成功:

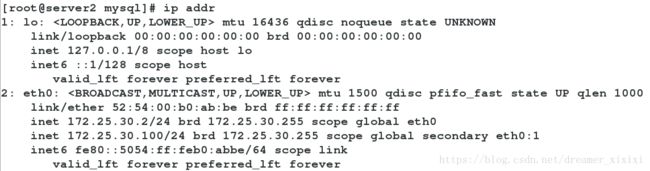

当宕掉的master(被kill的server2)想要重新加入集群时首先:

/etc/init.d/mysqld start

mysql -pRedhat123*

mysql> change master to master_host='172.25.30.1',master_user='repl',master_password='Redhat123*',MASTER_AUTO_POSITION=1; # point to the new master

start slave;
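The rejoin statement has the same shape as the CHANGE MASTER line that MHA prints in its switch log, so it can be generated rather than typed by hand. The helper below is a minimal sketch; the function name `build_change_master` is hypothetical, and `xxx` stands in for the real replication password, as in the MHA log. Nothing is executed against MySQL.

```shell
#!/usr/bin/env bash
# Build the CHANGE MASTER statement for a GTID (auto-position) slave.
build_change_master() {
  local host="$1" user="$2" password="$3" port="${4:-3306}"
  printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_PORT=%s, MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_AUTO_POSITION=1;\n" \
    "$host" "$port" "$user" "$password"
}

# Print the statements to run on the recovered server.
build_change_master 172.25.30.1 repl xxx
echo "START SLAVE;"
```

Because MASTER_AUTO_POSITION=1 is used, no binlog file name or position has to be looked up on the new master.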

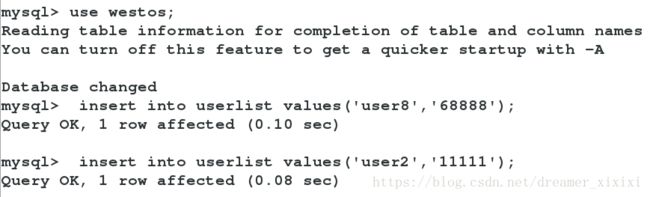

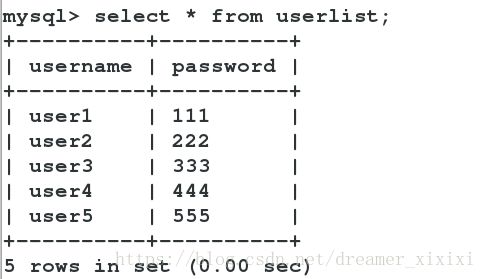

Master创建数据检测是否同步:

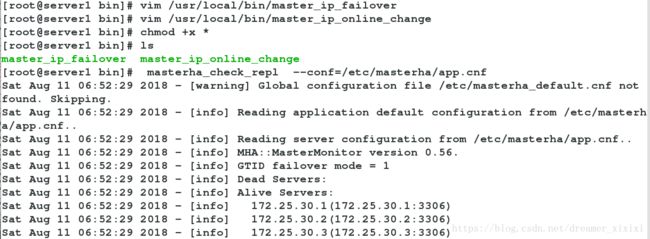

添加脚本:添加VIP—>172.25.30.100

编辑manager:/usr/local/bin/master_ip_online_change;;;

添加vip检测:编辑压测脚本。

1./etc/my.cnf#添加脚本

2.脚本在/usr/local/bin/

3.添加可执行权限

master_ip_failover:压测脚本

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

my $vip = '172.25.30.100/24';

my $key = '1';

my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down";

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

exit 0;

}

else {

&usage();

exit 1;

}

}

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

sub stop_vip() {

return 0 unless ($ssh_user);

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

master_ip_online_change # online switch script

#!/usr/bin/env perl

use strict;

use warnings FATAL =>'all';

use Getopt::Long;

my $vip = '172.25.30.100/24'; # Virtual IP

my $key = "1";

my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down";

my $exit_code = 0;

my (

$command, $orig_master_is_new_slave, $orig_master_host,

$orig_master_ip, $orig_master_port, $orig_master_user,

$orig_master_password, $orig_master_ssh_user, $new_master_host,

$new_master_ip, $new_master_port, $new_master_user,

$new_master_password, $new_master_ssh_user,

);

GetOptions(

'command=s' => \$command,

'orig_master_is_new_slave' => \$orig_master_is_new_slave,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'orig_master_user=s' => \$orig_master_user,

'orig_master_password=s' => \$orig_master_password,

'orig_master_ssh_user=s' => \$orig_master_ssh_user,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

'new_master_user=s' => \$new_master_user,

'new_master_password=s' => \$new_master_password,

'new_master_ssh_user=s' => \$new_master_ssh_user,

);

exit &main();

sub main {

#print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

# $orig_master_host, $orig_master_ip, $orig_master_port are passed.

# If you manage master ip address at global catalog database,

# invalidate orig_master_ip here.

my $exit_code = 1;

eval {

print "\n\n\n***************************************************************\n";

print "Disabling the VIP - $vip on old master: $orig_master_host\n";

print "***************************************************************\n\n\n\n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

# all arguments are passed.

# If you manage master ip address at global catalog database,

# activate new_master_ip here.

# You can also grant write access (create user, set read_only=0, etc) here.

my $exit_code = 10;

eval {

print "\n\n\n***************************************************************\n";

print "Enabling the VIP - $vip on new master: $new_master_host \n";

print "***************************************************************\n\n\n\n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

`ssh $orig_master_ssh_user\@$orig_master_host \" $ssh_start_vip \"`;

exit 0;

}

else {

&usage();

exit 1;

}

}

# A simple system call that enable the VIP on the new master

sub start_vip() {

`ssh $new_master_ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

# A simple system call that disable the VIP on the old_master

sub stop_vip() {

`ssh $orig_master_ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

Check again after adding the scripts:

The VIP is bound on whichever host is currently the master:
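One way to confirm which host currently holds the VIP is to look for it in each host's interface listing. The sketch below assumes the VIP 172.25.30.100 and the eth0 interface used by the scripts above; the function names `has_vip` and `find_vip_holder` are hypothetical, and the sample line is made up to imitate `ip addr` output so the check can be tried offline.

```shell
#!/usr/bin/env bash
# Detect whether interface output read from stdin contains the VIP.
VIP="172.25.30.100"

has_vip() {
  # -F: treat the VIP as a fixed string; -w: require non-word characters
  # around the match, so longer numbers that merely contain it do not match.
  grep -qFw "$VIP"
}

# Ask each node over SSH and print the one(s) holding the VIP.
# Not called here, because it needs the real cluster.
find_vip_holder() {
  local host
  for host in 172.25.30.1 172.25.30.2 172.25.30.3; do
    if ssh -o BatchMode=yes "root@${host}" /sbin/ip addr show eth0 | has_vip; then
      echo "$host"
    fi
  done
}

# Offline demonstration on a made-up line of `ip addr` output.
sample="    inet 172.25.30.100/24 brd 172.25.30.255 scope global secondary eth0:1"
if echo "$sample" | has_vip; then
  echo "VIP present"
fi
```

After a switch, running `find_vip_holder` on the manager should list only the new master if the failover scripts moved the address as intended.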

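Online switch:

Automatic switch:

When a slave is promoted to master, the VIP moves to it:

2> Create test data on the new master and check that it is replicated

Check on the slave:

With the VIP in place, external clients can log in through it and operate on the database: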