IMF SPARK Source Code Release Customization Class, Preview Course: Debugging Spark Applications in IDEA (1) Debugging from the SparkSubmit Entry Point

1. Download spark-1.6.1

Get it from the official site: http://spark.apache.org/

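2. Install IntelliJ IDEA. Go to the download page http://www.jetbrains.com/idea/download/, choose the appropriate version, then download it and install it on one node of the cluster, 192.168.189.2. This node serves as the IDE for the debugging that follows.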

3. Install the IDEA IDE on worker1 (192.168.189.2). The installation packages were uploaded with WinSCP to the directory IMFIdeaIDE:

```
root@worker1:/usr/local/IMFIdeaIDE# ls
ideaIC-2016.1.1.tar.gz  spark-1.6.1-bin-hadoop2.6.tgz  spark-1.6.1.tgz
root@worker1:/usr/local/IMFIdeaIDE#
```

4. Starting IDEA on Ubuntu Linux through a remote SecureCRT session fails, because there is no graphical environment:

```
root@worker1:/usr/local/idea-IC-145.597.3/bin# idea.sh
OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=350m; support was removed in 8.0
Startup Error: Unable to detect graphics environment
root@worker1:/usr/local/idea-IC-145.597.3/bin# java -version
java version "1.8.0_60"
Java(TM) SE Runtime Environment (build 1.8.0_60-b27)
Java HotSpot(TM) 64-Bit Server VM (build 25.60-b23, mixed mode)
root@worker1:/usr/local/idea-IC-145.597.3/bin#
```

Edit /etc/profile:

```
export IDEA_HOME=/usr/local/idea-IC-145.597.3
export PATH=.:$PATH:$JAVA_HOME/bin:$SCALA_HOME/bin:$HADOOP_HOME/bin:$SPARK_HOME/bin:$HIVE_HOME/bin:$FLUME_HOME/bin:$ZOOKEEPER_HOME/bin:$KAFKA_HOME/bin:$IDEA_HOME/bin
```

Then reload it:

```
source /etc/profile
```
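Appending `$IDEA_HOME/bin` to `PATH` is what lets `idea.sh` be started by name from any directory: the shell searches the `PATH` entries from left to right and runs the first match. The Scala sketch below mimics that lookup in simplified form. The helper name `findOnPath` is hypothetical, and the sketch deliberately ignores the execute permission bit and shell command hashing.

```scala
import java.nio.file.{Files, Path, Paths}

// Simplified model of how a POSIX shell resolves a bare command name:
// PATH entries are searched left to right and the first existing file wins.
// Assumption: the execute permission bit is not checked here.
def findOnPath(command: String, pathVar: String): Option[Path] =
  pathVar
    .split(":", -1)                                 // keep empty entries, which mean "."
    .iterator
    .map(dir => if (dir.isEmpty) "." else dir)
    .map(dir => Paths.get(dir).resolve(command))
    .find(p => Files.isRegularFile(p))

// Which idea.sh would a bare `idea.sh` pick up in the current environment?
val currentPath = sys.env.getOrElse("PATH", "")
println(findOnPath("idea.sh", currentPath).map(_.toString).getOrElse("idea.sh not found on PATH"))
```

Because the `PATH` in this setup begins with `.`, a file named `idea.sh` in the current directory is found before the one in `$IDEA_HOME/bin`.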

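5. Log in directly on the 192.168.189.2 virtual machine (on its own desktop rather than through the SecureCRT session) and run ./idea.sh there.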
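The `Unable to detect graphics environment` error is what a Java GUI typically reports when it is started from an SSH session without X11 forwarding: no `DISPLAY` variable is set, so AWT runs headless. Logging in on the VM's desktop provides a display. The sketch below is only a rough diagnostic under that assumption, not IDEA's own startup check; `guiAvailable` is a hypothetical helper.

```scala
import java.awt.GraphicsEnvironment

// Rough diagnostic (assumption, not IDEA's code): on Linux a Java GUI needs an
// X display, which is normally advertised through the DISPLAY variable.
def guiAvailable(env: Map[String, String]): Boolean =
  env.get("DISPLAY").exists(_.nonEmpty)

val displaySet = guiAvailable(sys.env)
// isHeadless() reports whether AWT considers this JVM headless, for example
// because java.awt.headless=true or no display is available.
val awtHeadless = GraphicsEnvironment.isHeadless()
println(s"DISPLAY set: $displaySet, AWT headless: $awtHeadless")
```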

6. Create a new project, IMFSpark, and in it a new object WordCount:

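```scala
import org.apache.spark.{SparkConf, SparkContext}

object WordCount {
  def main(args: Array[String]): Unit = {
    // "local" runs Spark inside the IDE's JVM; remove setMaster before submitting to the cluster (step 10)
    val conf = new SparkConf().setAppName("WordCount").setMaster("local")
    val sc = new SparkContext(conf)

    // Read the README that ships with the Spark binary distribution
    val textFile = sc.textFile("/usr/local/spark-1.6.1-bin-hadoop2.6/README.md")

    // Split lines into words, pair each word with 1, then sum the counts per word
    val wordCounts = textFile
      .flatMap(line => line.split(" "))
      .map(word => (word, 1))
      .reduceByKey((a, b) => a + b)

    // Bring the results back to the driver and print them
    wordCounts.collect().foreach(println)

    sc.stop()
  }
}
```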
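To see what the `flatMap` / `map` / `reduceByKey` chain computes without starting Spark, here is the same pipeline on ordinary Scala collections. It is an illustration only: a `foldLeft` into a `Map` plays the role of `reduceByKey`, and nothing is distributed or partitioned. The name `wordCount` is hypothetical.

```scala
// Plain-collections analogue of the Spark WordCount (illustration only:
// no RDDs and no partitions; everything runs in one JVM).
def wordCount(lines: Seq[String]): Map[String, Int] =
  lines
    .flatMap(line => line.split(" "))        // like textFile.flatMap(...)
    .map(word => (word, 1))                  // like .map(word => (word, 1))
    .foldLeft(Map.empty[String, Int]) {      // stands in for reduceByKey(_ + _)
      case (acc, (word, n)) => acc.updated(word, acc.getOrElse(word, 0) + n)
    }

wordCount(Seq("to be or", "not to be")).toSeq.sortBy(_._1).foreach(println)
```

As in the Spark version, `split(" ")` turns consecutive spaces into empty "words", so an empty string can show up among the counted keys.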

7. Add the jars

The directory holding the source packages:

```
root@worker1:/usr/local/IMFIdeaIDE# ls
ideaIC-2016.1.1.tar.gz  spark-1.6.1-bin-hadoop2.6.tgz  spark-1.6.1.tgz
```

Extract the source code:

```
root@worker1:/usr/local/IMFIdeaIDE# tar -zxvf spark-1.6.1.tgz
```

8. In IDEA, click the + on the left to add, as dependencies, the jars that the source code should be attached to:

```
/usr/local/IMFIdeaIDE/spark-1.6.1-bin-hadoop2.6/lib/spark-assembly-1.6.1-hadoop2.6.0.jar
/usr/local/IMFIdeaIDE/spark-1.6.1-bin-hadoop2.6/lib/spark-examples-1.6.1-hadoop2.6.0.jar
```

Then attach the source directory:

```
/usr/local/IMFIdeaIDE/spark-1.6.1
```
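After adding the dependencies, a quick way to confirm which jar a class is really loaded from is to ask for the class's code source. The sketch uses a Scala library class so it runs without Spark. In the IMFSpark project you could pass `classOf[org.apache.spark.SparkContext]` instead and expect the spark-assembly jar; that usage is an assumption, not a step from the original. `loadedFrom` is a hypothetical helper.

```scala
// Returns the location (usually a jar path) a class was loaded from, if known.
// Classes from the JDK's bootstrap class loader typically have no code source.
def loadedFrom(cls: Class[_]): Option[String] =
  Option(cls.getProtectionDomain.getCodeSource)
    .flatMap(cs => Option(cs.getLocation))
    .map(_.toString)

println(loadedFrom(classOf[scala.Option[_]]))   // e.g. a scala-library jar
println(loadedFrom(classOf[java.lang.String]))  // JDK bootstrap class
```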

9. Removing the Vim emulation plugin in IDEA

Open File -> Settings and, under Appearance & Behavior, set Keymap to Default.

Then, in Settings -> Plugins, search for vim, remove the plugin, and restart IDEA.

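10. Building a jar in IDEA

Remove .setMaster("local") from the code before packaging it into a jar. A master set in code takes precedence over the --master option passed to spark-submit, so otherwise the job would keep running locally.

Open File -> Project Structure -> Artifacts.

Click "+", choose "JAR" -> "From modules with dependencies", and set the Main Class.

Back in the main IDEA window, choose Build -> Build Artifacts from the menu bar; the jar is generated.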
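Why `.setMaster("local")` has to go: the Spark configuration documentation states that properties set directly on a `SparkConf` take the highest precedence, followed by flags passed to `spark-submit`, followed by `spark-defaults.conf`. The sketch below models that ordering with plain maps. It is not Spark's implementation, and `effectiveConf` is a hypothetical name.

```scala
// Simplified model of Spark's documented configuration precedence
// (lowest to highest): spark-defaults.conf < spark-submit flags < SparkConf in code.
// In a ++ chain, later maps overwrite earlier ones for the same key.
def effectiveConf(
    defaultsFile: Map[String, String],
    submitFlags: Map[String, String],
    setInCode: Map[String, String]): Map[String, String] =
  defaultsFile ++ submitFlags ++ setInCode

val flags = Map("spark.master" -> "spark://192.168.189.1:7077")

// With .setMaster("local") still in the code, the --master flag loses:
val withLocal = effectiveConf(Map.empty, flags, Map("spark.master" -> "local"))
// After removing it, the value from --master is used:
val withoutLocal = effectiveConf(Map.empty, flags, Map("spark.app.name" -> "WordCount"))

println(withLocal("spark.master"))
println(withoutLocal("spark.master"))
```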

11. Along the way, switch IDEA to a new workspace by copying the project into it:

```
cp -R /root/IdeaProjects/IMFSpark/. /usr/local/IMF_IDEA_WorkSpace/IMF_IDEA_Projects/IMFSpark/
```

The jar is built successfully:

```
root@worker1:/usr/local/IMF_IDEA_WorkSpace/IMF_IDEA_Projects/IMFSpark/out/artifacts/IMFIDEAWordcount# pwd
/usr/local/IMF_IDEA_WorkSpace/IMF_IDEA_Projects/IMFSpark/out/artifacts/IMFIDEAWordcount
root@worker1:/usr/local/IMF_IDEA_WorkSpace/IMF_IDEA_Projects/IMFSpark/out/artifacts/IMFIDEAWordcount# ls
IMFIDEAWordcount.jar
```
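Since the artifact was configured with a Main Class, the generated IMFIDEAWordcount.jar should carry a `Main-Class` entry in its manifest. The sketch below reads that entry with `java.util.jar.JarFile`. To stay runnable without the real artifact, it builds a throwaway jar first; `mainClassOf` and `writeDemoJar` are hypothetical helpers.

```scala
import java.io.FileOutputStream
import java.nio.file.{Files, Path}
import java.util.jar.{Attributes, JarFile, JarOutputStream, Manifest}

// Reads the Main-Class attribute from a jar's manifest, if present.
def mainClassOf(jar: Path): Option[String] = {
  val jf = new JarFile(jar.toFile)
  try Option(jf.getManifest).flatMap(m => Option(m.getMainAttributes.getValue(Attributes.Name.MAIN_CLASS)))
  finally jf.close()
}

// Demo only: writes a tiny jar whose manifest names the given main class.
def writeDemoJar(mainClass: String): Path = {
  val manifest = new Manifest()
  manifest.getMainAttributes.put(Attributes.Name.MANIFEST_VERSION, "1.0") // required for the manifest to be written
  manifest.getMainAttributes.put(Attributes.Name.MAIN_CLASS, mainClass)
  val jar = Files.createTempFile("demo", ".jar")
  val out = new JarOutputStream(new FileOutputStream(jar.toFile), manifest)
  out.close()
  jar
}

println(mainClassOf(writeDemoJar("WordCount")))
// For the real artifact, pass its path instead, e.g.
// java.nio.file.Paths.get("/usr/local/IMF_IDEA_WorkSpace/IMF_IDEA_Projects/IMFSpark/out/artifacts/IMFIDEAWordcount/IMFIDEAWordcount.jar")
```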

12. Copy the jar from worker1 to master

```
root@worker1:/usr/local/IMF_IDEA_WorkSpace/IMF_IDEA_Projects/IMFSpark/out/artifacts/IMFIDEAWordcount# scp -rq /usr/local/IMF_IDEA_WorkSpace/IMF_IDEA_Projects/IMFSpark/out/artifacts/IMFIDEAWordcount/IMFIDEAWordcount.jar root@192.168.189.1:/usr/local/IMF_testdata
root@worker1:/usr/local/IMF_IDEA_WorkSpace/IMF_IDEA_Projects/IMFSpark/out/artifacts/IMFIDEAWordcount#
```

13. Check on master

```
root@master:/usr/local/IMF_testdata# ls | grep IMFIDEA
IMFIDEAWordcount.jar
root@master:/usr/local/IMF_testdata#
```

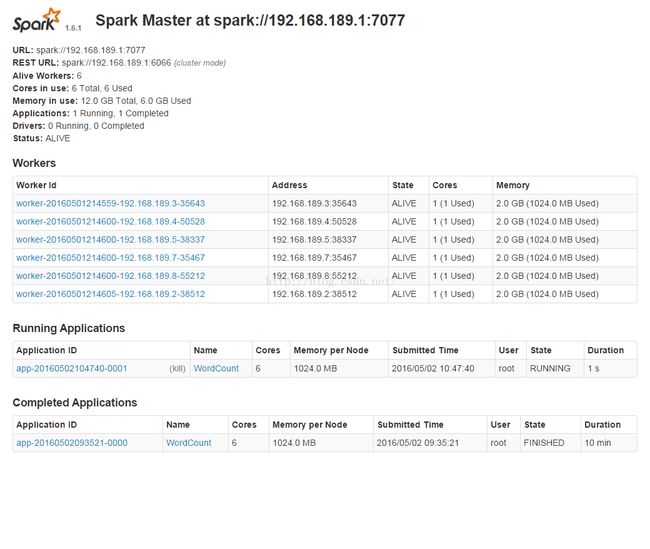

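14. Run from IDEA on worker1

In the run configuration for org.apache.spark.deploy.SparkSubmit, set the program arguments:

```
--master spark://192.168.189.1:7077 --class WordCount /usr/local/IMF_testdata/IMFIDEAWordcount.jar
```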
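These program arguments follow the spark-submit convention: options such as `--master` and `--class` come first, then the application jar (the primary resource), and any tokens after the jar are passed to the application's own `main`. The parser below is a deliberately simplified, hypothetical illustration of that split. Spark's real parsing lives in `SparkSubmitArguments` and handles many more options and checks.

```scala
// Hypothetical, simplified view of a spark-submit command line.
final case class SubmitArgs(
    master: Option[String],
    mainClass: Option[String],
    primaryResource: Option[String],
    appArgs: List[String])

// Reads "--name value" pairs until the first non-option token, which is taken
// as the application jar; the remaining tokens go to the application.
// Assumption: every option other than --master/--class also takes one value.
def parseSubmitArgs(args: List[String]): SubmitArgs = {
  @annotation.tailrec
  def loop(rest: List[String], acc: SubmitArgs): SubmitArgs = rest match {
    case "--master" :: value :: tail => loop(tail, acc.copy(master = Some(value)))
    case "--class" :: value :: tail  => loop(tail, acc.copy(mainClass = Some(value)))
    case opt :: _ :: tail if opt.startsWith("--") => loop(tail, acc) // other options skipped
    case jar :: tail => acc.copy(primaryResource = Some(jar), appArgs = tail)
    case Nil         => acc
  }
  loop(args, SubmitArgs(None, None, None, Nil))
}

val parsed = parseSubmitArgs(
  "--master spark://192.168.189.1:7077 --class WordCount /usr/local/IMF_testdata/IMFIDEAWordcount.jar"
    .split(" ").toList)
println(parsed)
```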

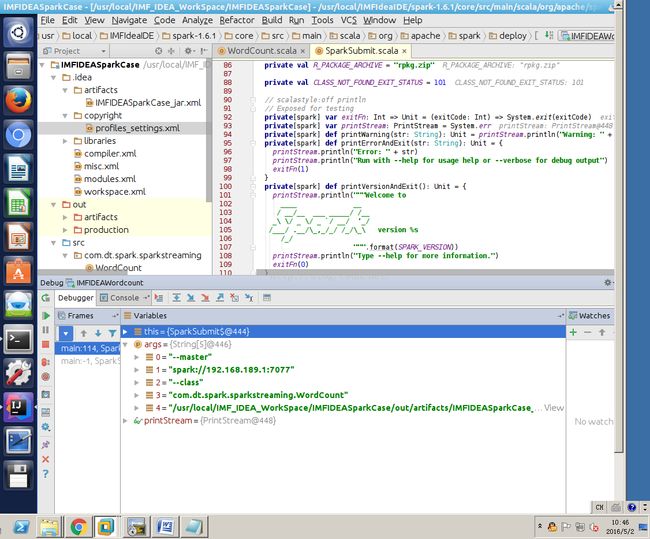

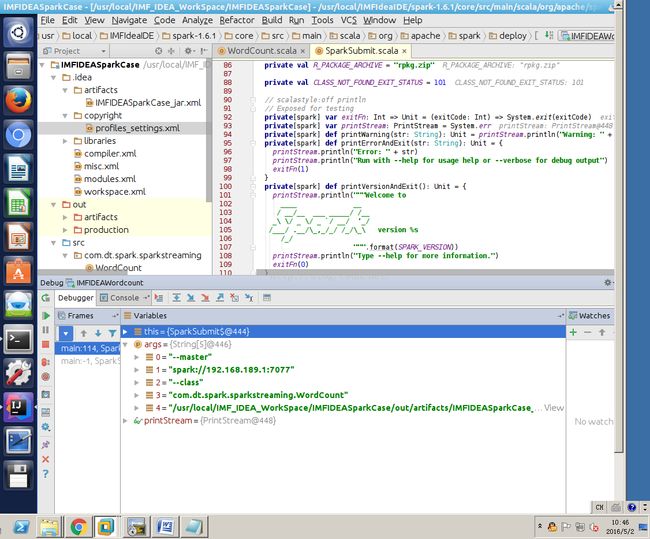

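15. Debugging a cluster submission from IDEA: success.

Set a breakpoint in SparkSubmit's main method and click the Debug button in the top-right corner.

When the program runs, execution stops at the breakpoint set in SparkSubmit.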

16. Copy IDEA, the workspace, and the installation packages to master:

```
root@worker1:/usr/local# scp -rq /usr/local/idea-IC-145.597.3 root@192.168.189.1:/usr/local/idea-IC-145.597.3
scp -rq /usr/local/IMF_IDEA_WorkSpace root@192.168.189.1:/usr/local/IMF_IDEA_WorkSpace
scp -rq /usr/local/IMFIdeaIDE root@192.168.189.1:/usr/local/IMFIdeaIDE
```

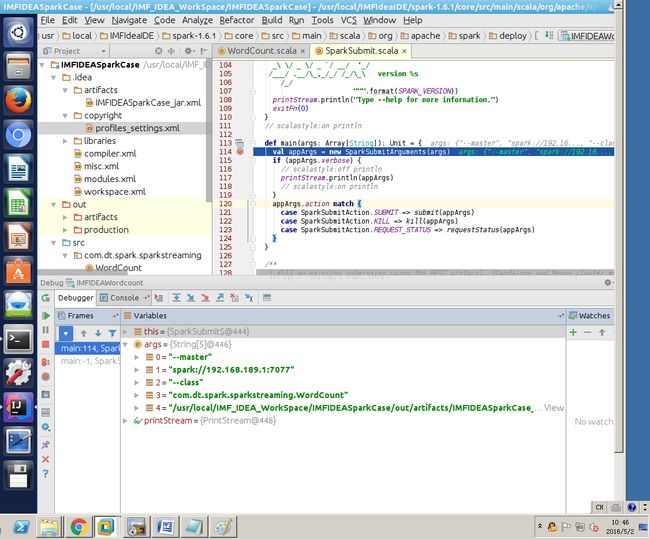

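17. master can now run IDEA as well. On master, add a package declaration to the code, then rebuild the jar and run it with these arguments:

```
--master spark://192.168.189.1:7077 --class com.dt.spark.sparkstreaming.WordCount /usr/local/IMF_IDEA_WorkSpace/IMFIDEASparkCase/out/artifacts/IMFIDEASparkCase_jar/IMFIDEASparkCase.jar
```

Set a breakpoint in SparkSubmit's main method, and another in the application at the line `val conf = new SparkConf().setAppName("WordCount")`. Because spark-submit's default deploy mode is client, the application's main method runs in the same JVM that IDEA started for SparkSubmit, so both breakpoints can be hit in one debug session.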
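Once WordCount lives in the package `com.dt.spark.sparkstreaming`, `--class` must give its fully qualified name. SparkSubmit loads the class named by `--class` via reflection, and a lookup by the bare name `WordCount` no longer finds it. The sketch below shows the naming rule and what a reflective lookup does with a short versus a fully qualified name. It uses a JDK class so that it runs without Spark; `fullyQualified` and `canLoad` are hypothetical helpers.

```scala
// The name --class expects: package prefix + "." + object name,
// or just the object name when the source has no package declaration.
def fullyQualified(pkg: Option[String], name: String): String =
  pkg.fold(name)(p => s"$p.$name")

// Reflective lookup by name, roughly what a launcher must do before calling main.
def canLoad(className: String): Boolean =
  try { Class.forName(className); true }
  catch { case _: ClassNotFoundException => false }

println(fullyQualified(Some("com.dt.spark.sparkstreaming"), "WordCount"))
println(canLoad("java.lang.String")) // fully qualified name: found
println(canLoad("String"))           // simple name only: not found
```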