MongoDB and Spark Distributed System Integration Test

1. Deploy Spark 2.2.1 in distributed mode and edit the /etc/profile configuration file. (The Spark download and deployment steps are omitted.)

export SPARK_HOME=/usr/local/spark-2.2.1-bin-hadoop2.6

export PATH=.:$JAVA_HOME/bin:$SCALA_HOME/bin:$HADOOP_HOME/bin:$SPARK_HOME/bin:$HIVE_HOME/bin:$FLUME_HOME/bin:$ZOOKEEPER_HOME/bin:$KAFKA_HOME/bin:$IDEA_HOME/bin:$eclipse_HOME:$MAVEN_HOME/bin:$ALLUXIO_HOME/bin:$HBASE_HOME/bin:$MongoDB_HOME/bin:$PATH
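After running `source /etc/profile`, it can be worth confirming that the Spark `bin` directory really ended up on `PATH`. The following is a minimal sketch and not part of the original setup: the helper name `path_contains` is made up for illustration, and the `SPARK_HOME` value is copied from the export above.

```shell
#!/bin/sh
# Sketch: check whether a directory is one of the entries of a PATH-style string.

# path_contains DIR PATH_STRING
# Returns 0 if DIR is one of the colon-separated entries, 1 otherwise.
path_contains() {
    case ":$2:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Value copied from the export above.
SPARK_HOME=/usr/local/spark-2.2.1-bin-hadoop2.6

if path_contains "$SPARK_HOME/bin" "$PATH"; then
    echo "Spark bin directory is on PATH"
else
    echo "Spark bin directory is missing from PATH; try: source /etc/profile"
fi
```

Wrapping both strings in colons before matching avoids false positives such as `/a/bin2` matching a search for `/a/bin`.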

2. Distribute the configuration to each Worker node.

root@master:~# scp -rq /etc/profile [email protected]:/etc/profile

root@master:~# scp -rq /etc/profile [email protected]:/etc/profile

root@master:~# scp -rq /etc/profile [email protected]:/etc/profile

root@master:~# source /etc/profile

root@worker1:~# source /etc/profile

root@worker2:~# source /etc/profile

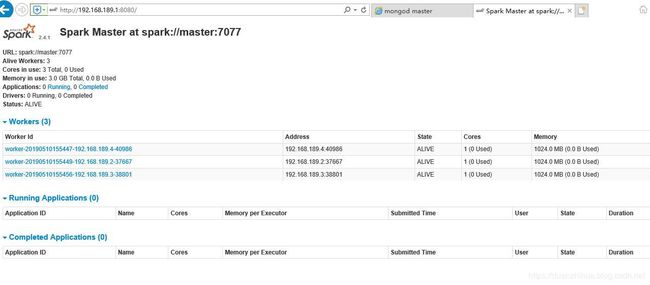

root@worker3:~# source /etc/profile

3. Start the Hadoop and Spark clusters.

root@master:~# /usr/local/hadoop-2.6.0/sbin/start-all.sh

root@master:/usr/local/spark-2.2.1-bin-hadoop2.6/sbin# ./start-all.sh

4. Start MongoDB.

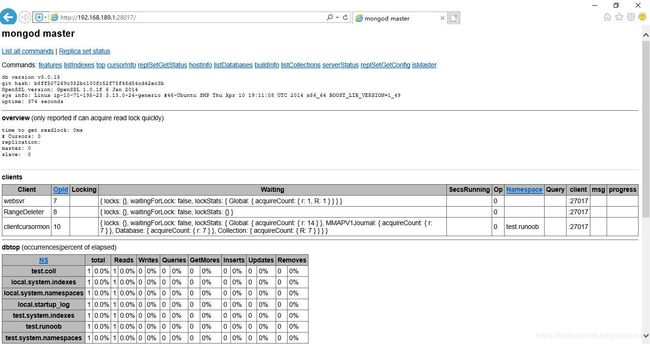

root@master:/usr/local/mongodb-3.0.15/bin# ./mongod --dbpath /usr/local/mongodb-3.0.15/data/db --rest

2019-05-10T15:09:51.979+0800 I CONTROL ** WARNING: --rest is specified without --httpinterface,

2019-05-10T15:09:51.979+0800 I CONTROL ** enabling http interface

2019-05-10T15:09:52.104+0800 I CONTROL [initandlisten] MongoDB starting : pid=4690 port=27017 dbpath=/usr/local/mongodb-3.0.15/data/db 64-bit host=master

2019-05-10T15:09:52.104+0800 I CONTROL [initandlisten] db version v3.0.15

2019-05-10T15:09:52.104+0800 I CONTROL [initandlisten] git version: b8ff507269c382bc100fc52f75f48d54cd42ec3b

2019-05-10T15:09:52.104+0800 I CONTROL [initandlisten] build info: Linux ip-10-71-195-23 3.13.0-24-generic #46-Ubuntu SMP Thu Apr 10 19:11:08 UTC 2014 x86_64 BOOST_LIB_VERSION=1_49

2019-05-10T15:09:52.104+0800 I CONTROL [initandlisten] allocator: tcmalloc

2019-05-10T15:09:52.104+0800 I CONTROL [initandlisten] options: { net: { http: { RESTInterfaceEnabled: true, enabled: true } }, storage: { dbPath: "/usr/local/mongodb-3.0.15/data/db" } }

2019-05-10T15:09:54.531+0800 I JOURNAL [initandlisten] journal dir=/usr/local/mongodb-3.0.15/data/db/journal

2019-05-10T15:09:54.570+0800 I JOURNAL [initandlisten] recover : no journal files present, no recovery needed

2019-05-10T15:09:55.341+0800 I JOURNAL [initandlisten] preallocateIsFaster=true 13.4

2019-05-10T15:09:55.582+0800 I JOURNAL [initandlisten] preallocateIsFaster=true 3.76

2019-05-10T15:09:56.715+0800 I JOURNAL [durability] Durability thread started

2019-05-10T15:09:56.716+0800 I JOURNAL [journal writer] Journal writer thread started

2019-05-10T15:09:56.717+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2019-05-10T15:09:56.717+0800 I CONTROL [initandlisten]

2019-05-10T15:09:56.743+0800 I CONTROL [initandlisten]

2019-05-10T15:09:56.743+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2019-05-10T15:09:56.743+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2019-05-10T15:09:56.743+0800 I CONTROL [initandlisten]

2019-05-10T15:10:00.660+0800 I NETWORK [websvr] admin web console waiting for connections on port 28017

2019-05-10T15:10:00.727+0800 I NETWORK [initandlisten] waiting for connections on port 27017

5. The mongo-spark project is hosted on GitHub at https://github.com/mongodb/mongo-spark. The Maven configuration is:

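<dependency>
    <groupId>org.mongodb.spark</groupId>
    <artifactId>mongo-spark-connector_2.10</artifactId>
    <version>2.2.6</version>
</dependency>

<dependency>
    <groupId>org.mongodb</groupId>
    <artifactId>mongo-java-driver</artifactId>
    <version>3.10.2</version>
</dependency>

<dependency>
    <groupId>org.bson</groupId>
    <artifactId>bson</artifactId>
    <version>2.6.5-no-key-checks-joda-gridfs-partial-save</version>
</dependency>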
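Maven names a jar after its coordinates as `artifactId-version.jar`, so the file names of the connector and driver jars can be derived from the configuration above. The sketch below is one way to build those names and join them into a comma-separated list of the kind that `spark-shell --jars` accepts. The helper names `jar_name` and `join_jars` and the variable `JARS_DIR` are made up for illustration; `JARS_DIR` is assumed to be the `jars` directory under the `SPARK_HOME` set earlier.

```shell
#!/bin/sh
# Sketch: derive jar file names from Maven coordinates and build a --jars list.

# jar_name ARTIFACT_ID VERSION
# Prints "ARTIFACT_ID-VERSION.jar", the default Maven artifact file name.
jar_name() {
    printf '%s-%s.jar\n' "$1" "$2"
}

# join_jars DIR JAR...
# Prints "DIR/JAR1,DIR/JAR2,..." with no trailing comma.
join_jars() {
    dir=$1
    shift
    list=
    for jar in "$@"; do
        # Add a comma separator only when the list already has an entry.
        list="${list:+$list,}$dir/$jar"
    done
    printf '%s\n' "$list"
}

# Assumed location: the jars directory of the Spark installation.
JARS_DIR=/usr/local/spark-2.2.1-bin-hadoop2.6/jars

connector=$(jar_name mongo-spark-connector_2.10 2.2.6)
driver=$(jar_name mongo-java-driver 3.10.2)

# Print the comma-separated value to pass to --jars.
join_jars "$JARS_DIR" "$connector" "$driver"
```

Keeping the coordinates in one place makes it harder for the jar names on the command line to drift away from the versions declared in the Maven configuration.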

6. Upload mongo-spark-connector_2.10-2.2.6.jar and mongo-java-driver-3.10.2.jar to the /usr/local/spark-2.2.1-bin-hadoop2.6/jars/ directory. Then start spark-shell and save the result of a Spark computation to MongoDB.

root@master:~# spark-shell --conf "spark.mongodb.input.uri=mongodb://192.168.189.1:27017/test.coll?readPreference=primaryPreferred" --conf "spark.mongodb.output.uri=mongodb://192.168.189.1:27017/test.coll" --jars /usr/local/spark-2.2.1-bin-hadoop2.6/jars/mongo-spark-connector_2.10-2.2.6.jar,/usr/local/spark-2.2.1-bin-hadoop2.6/jars/mongo-java-driver-3.10.2.jar

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

19/05/10 16:43:48 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Spark context Web UI available at http://master:4040

Spark context available as 'sc' (master = local[*], app id = local-1557477829677).

Spark session available as 'spark'.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.2.1
      /_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_60)

Type in expressions to have them evaluated.

Type :help for more information.

scala> import com.mongodb.spark._

import com.mongodb.spark._

scala> import org.bson.Document

import org.bson.Document

scala> val documents = sc.parallelize((1 to 10).map(i => Document.parse(s"{test: $i}")))

documents: org.apache.spark.rdd.RDD[org.bson.Document] = ParallelCollectionRDD[0] at parallelize at <console>:28

scala> MongoSpark.save(documents)

scala>

Query the saved documents in MongoDB:

root@master:~# /usr/local/mongodb-3.0.15/bin/mongo

MongoDB shell version: 3.0.15

connecting to: test

Server has startup warnings:

2019-05-10T16:43:11.375+0800 I CONTROL ** WARNING: --rest is specified without --httpinterface,

2019-05-10T16:43:11.375+0800 I CONTROL ** enabling http interface

2019-05-10T16:43:11.527+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2019-05-10T16:43:11.527+0800 I CONTROL [initandlisten]

2019-05-10T16:43:11.527+0800 I CONTROL [initandlisten]

2019-05-10T16:43:11.527+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2019-05-10T16:43:11.527+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2019-05-10T16:43:11.527+0800 I CONTROL [initandlisten]

> use test

switched to db test

> show collections

coll

runoob

system.indexes

> db.coll.find()

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b53e"), "test" : 1 }

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b53f"), "test" : 2 }

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b540"), "test" : 3 }

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b541"), "test" : 4 }

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b542"), "test" : 5 }

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b543"), "test" : 6 }

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b544"), "test" : 9 }

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b545"), "test" : 10 }

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b546"), "test" : 7 }

{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b547"), "test" : 8 }

>
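The documents above do not come back in numeric order (9 and 10 appear before 7 and 8), presumably because Spark writes its partitions concurrently. To confirm that all ten values arrived, the output can be checked without relying on order. The following is a minimal sketch that works on the text of the `find()` output shown above; the helper names `extract_test_values` and `has_values_1_to_10` are made up for illustration.

```shell
#!/bin/sh
# Sketch: verify that mongo shell output contains "test" values 1..10 exactly once each.

# extract_test_values
# Reads result lines on stdin and prints each numeric "test" value,
# sorted ascending, one per line. Lines without a "test" field are ignored.
extract_test_values() {
    sed -n 's/.*"test" : \([0-9][0-9]*\).*/\1/p' | sort -n
}

# has_values_1_to_10
# Succeeds only if stdin yields exactly the values 1 through 10.
has_values_1_to_10() {
    actual=$(extract_test_values)
    expected=$(printf '%s\n' 1 2 3 4 5 6 7 8 9 10)
    [ "$actual" = "$expected" ]
}

# Demo input: the find() output from the session above.
if has_values_1_to_10 <<'EOF'
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b53e"), "test" : 1 }
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b53f"), "test" : 2 }
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b540"), "test" : 3 }
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b541"), "test" : 4 }
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b542"), "test" : 5 }
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b543"), "test" : 6 }
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b544"), "test" : 9 }
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b545"), "test" : 10 }
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b546"), "test" : 7 }
{ "_id" : ObjectId("5cd53a0ee1c82c5a1985b547"), "test" : 8 }
EOF
then
    echo "all values 1..10 present"
else
    echo "unexpected set of values"
fi
```

Sorting before comparing is what makes the check independent of the order in which the documents were inserted.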