Baidu Speech SDK development, companion post: iOS demo

iOS speech recognition demo based on the Baidu Speech SDK

This is my first blog post on CSDN, just a small write-up of what I did. Haha.

1. Overview

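This post covers two features: hybrid offline/online speech recognition and voice wake-up, both built on the Baidu Speech iOS SDK. Why not iFlytek (讯飞)? Well, because Baidu is free.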

2. Downloading the SDK and setting up the environment

2.1. Create an application

a. Log in to the console and create an application: https://console.bce.baidu.com/ai/#/ai/speech/app/create

b. Once the application is created, three credentials are generated: API_KEY, SECRET_KEY and APP_ID.

2.2. Download the SDK

The Baidu Speech open platform has two entry points (probably because the speech platform was later folded into Baidu AI):

http://yuyin.baidu.com/

https://ai.baidu.com/sdk#asr (recommended)

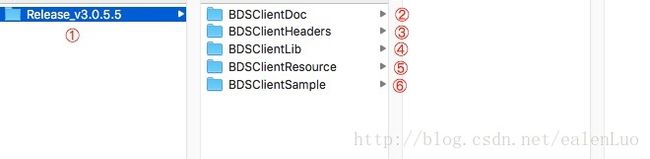

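After unzipping, the downloaded SDK looks like this:

- ① root directory of the unzipped SDK
- ② SDK documentation
- ③ header files
- ④ Baidu Speech static library
- ⑤ speech resource files
- ⑥ official demo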
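The configuration code in section 3 locates the resource files from ⑤ with `[[NSBundle mainBundle] pathForResource:ofType:]`, which returns nil when a file was never added to the app target, and that nil is then handed straight to `setParameter:forKey:`. A quick check at start-up makes a forgotten resource obvious. The sketch below is hypothetical: `MissingResources` is an illustrative name, not an SDK API, and it assumes the `.dat` files sit at the top level of the bundle's `resourcePath`, which is typically the case when they are added to an iOS project as a group rather than a folder reference.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the Baidu SDK): returns the names from
// `fileNames` that do NOT exist directly inside `directory`.
static NSArray<NSString *> *MissingResources(NSString *directory, NSArray<NSString *> *fileNames) {
    NSFileManager *fileManager = [NSFileManager defaultManager];
    NSMutableArray<NSString *> *missing = [NSMutableArray array];
    for (NSString *name in fileNames) {
        NSString *path = [directory stringByAppendingPathComponent:name];
        if (![fileManager fileExistsAtPath:path]) {
            [missing addObject:name];
        }
    }
    return [missing copy];
}

// Example call at app start-up, using the resource names that appear in this post:
//   NSArray *missing = MissingResources([[NSBundle mainBundle] resourcePath],
//       @[@"bds_easr_basic_model.dat", @"bds_easr_mfe_dnn.dat", @"bds_easr_mfe_cmvn.dat"]);
//   if (missing.count > 0) NSLog(@"Speech resources not bundled: %@", missing);
```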

2.3 Integrate into the project

Add the dependent libraries

- libc++.tbd

- libstdc++.6.0.9.tbd

- libz.1.2.5.tbd

- libiconv.2.4.0.tbd

- AudioToolbox

- AVFoundation

- CoreTelephony

- SystemConfiguration

Import the headers

Recognition:

```objc
#import "BDSEventManager.h"
#import "BDSASRDefines.h"
#import "BDSASRParameters.h"
```

Wake-up:

```objc
#import "BDSWakeupDefines.h"
#import "BDSWakeupParameters.h"
```

Replace API_KEY, SECRET_KEY and APP_ID with the values of your own application:

```objc
NSString* const API_KEY = @"your API_KEY";
NSString* const SECRET_KEY = @"your SECRET_KEY";
NSString* const APP_ID = @"your APP_ID";
```

3. Basic usage

3.1 Speech recognition

3.1.1 Initialization

- Create the speech recognition object

```objc
self.asrEventManager = [BDSEventManager createEventManagerWithName:BDS_ASR_NAME];
```

- Configure the speech recognition object

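```objc
- (void)initAsrEventManager {
    // Set the speech recognition delegate
    [self.asrEventManager setDelegate:self];
    // Online authentication: API key and secret key (the constants defined in 2.3)
    [self.asrEventManager setParameter:@[API_KEY, SECRET_KEY] forKey:BDS_ASR_API_SECRET_KEYS];
    // Set the APP_ID (offline authorization)
    [self.asrEventManager setParameter:APP_ID forKey:BDS_ASR_OFFLINE_APP_CODE];
    // Recognition strategy: run offline and online recognition in parallel
    [self.asrEventManager setParameter:@(EVR_STRATEGY_BOTH) forKey:BDS_ASR_STRATEGY];
}
```

- Configure endpoint detection (VAD)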

```objc
/**
 Model-based VAD: more accurate detection and strong noise resistance, but slower response
 */
- (void)configModelVAD {
    NSString *modelVAD_filepath = [[NSBundle mainBundle] pathForResource:@"bds_easr_basic_model" ofType:@"dat"];
    [self.asrEventManager setParameter:modelVAD_filepath forKey:BDS_ASR_MODEL_VAD_DAT_FILE];
    [self.asrEventManager setParameter:@(YES) forKey:BDS_ASR_ENABLE_MODEL_VAD];
}

/**
 DNN MFE: basic detection, high performance, fast response
 */
- (void)configDNNMFE {
    NSString *mfe_dnn_filepath = [[NSBundle mainBundle] pathForResource:@"bds_easr_mfe_dnn" ofType:@"dat"];
    [self.asrEventManager setParameter:mfe_dnn_filepath forKey:BDS_ASR_MFE_DNN_DAT_FILE];
    NSString *cmvn_dnn_filepath = [[NSBundle mainBundle] pathForResource:@"bds_easr_mfe_cmvn" ofType:@"dat"];
    [self.asrEventManager setParameter:cmvn_dnn_filepath forKey:BDS_ASR_MFE_CMVN_DAT_FILE];
}
```

3.1.2 Start recognition

```objc
[self.asrEventManager sendCommand:BDS_ASR_CMD_START];
```

3.1.3 Stop recognition

```objc
[self.asrEventManager sendCommand:BDS_ASR_CMD_STOP];
```

3.1.4 Cancel recognition

```objc
[self.asrEventManager sendCommand:BDS_ASR_CMD_CANCEL];
```

3.1.5 Status and data callbacks

```objc
#pragma mark - < BDSClientASRDelegate >

// XFLog is the author's own logging macro (not part of the SDK); NSLog can be used instead.
// parseLogToDic:, getDescriptionForDic:, getResultStrForObject:, getVolumeByObject:
// and getErrorMsgWithError: are methods of the demo class itself, not SDK APIs.
- (void)VoiceRecognitionClientWorkStatus:(int)workStatus obj:(id)aObj {
    switch (workStatus) {
        case EVoiceRecognitionClientWorkStatusStartWorkIng: {
            XFLog(@"EVoiceRecognitionClientWorkStatusStartWorkIng: recognition started, capturing and processing audio");
            XFLog(@"%@", [self parseLogToDic:aObj]);
            if ([self.delegate respondsToSelector:@selector(onAsrReady)]) {
                [self.delegate onAsrReady];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusNewRecordData: {
            XFLog(@"EVoiceRecognitionClientWorkStatusNewRecordData: recorded audio data");
            XFLog(@"%@", aObj);
            if ([self.delegate respondsToSelector:@selector(onWorking)]) {
                [self.delegate onWorking];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusStart: {
            XFLog(@"EVoiceRecognitionClientWorkStatusStart: detected that the user started speaking");
            XFLog(@"%@", aObj);
            if ([self.delegate respondsToSelector:@selector(onAsrBegin)]) {
                [self.delegate onAsrBegin];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusEnd: {
            XFLog(@"EVoiceRecognitionClientWorkStatusEnd: local audio capture ended, waiting for the recognition result");
            XFLog(@"%@", aObj);
            if ([self.delegate respondsToSelector:@selector(onAsrEnd)]) {
                [self.delegate onAsrEnd];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusFlushData: {
            XFLog(@"EVoiceRecognitionClientWorkStatusFlushData: partial result (continuous on-screen update)");
            XFLog(@"%@", aObj);
            // self.timer belongs to the demo class (its setup is not shown in this post)
            if (self.timer.valid) {
                [self.timer invalidate];
                self.timer = nil;
            }
            if ([self.delegate respondsToSelector:@selector(onAsrPartialResult:originResult:)]) {
                [self.delegate onAsrPartialResult:[self getResultStrForObject:aObj] originResult:aObj];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusFinish: {
            XFLog(@"EVoiceRecognitionClientWorkStatusFinish: recognition finished, the server returned a valid result");
            XFLog(@"%@", [self getDescriptionForDic:aObj]);
            if ([self.delegate respondsToSelector:@selector(onAsrFinalResult:originResult:)]) {
                [self.delegate onAsrFinalResult:[self getResultStrForObject:aObj] originResult:aObj];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusMeterLevel: {
            XFLog(@"EVoiceRecognitionClientWorkStatusMeterLevel: current volume level");
            XFLog(@"%@", aObj);
            if ([self.delegate respondsToSelector:@selector(onAsrMeterLevel:)]) {
                [self.delegate onAsrMeterLevel:[self getVolumeByObject:aObj]];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusCancel: {
            XFLog(@"EVoiceRecognitionClientWorkStatusCancel: cancelled by the user");
            XFLog(@"%@", aObj);
            if ([self.delegate respondsToSelector:@selector(onAsrCancel)]) {
                [self.delegate onAsrCancel];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusError: {
            XFLog(@"EVoiceRecognitionClientWorkStatusError: an error occurred");
            XFLog(@"%@", aObj);
            NSString *errMsg = [self getErrorMsgWithError:aObj];
            if ([self.delegate respondsToSelector:@selector(onAsrFinishError:errorMsg:)]) {
                [self.delegate onAsrFinishError:(NSError *)aObj errorMsg:errMsg];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusLoaded: {
            XFLog(@"EVoiceRecognitionClientWorkStatusLoaded: offline engine loaded");
            XFLog(@"%@", aObj);
            if ([self.delegate respondsToSelector:@selector(onOfflineLoaded)]) {
                [self.delegate onOfflineLoaded];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusUnLoaded: {
            XFLog(@"EVoiceRecognitionClientWorkStatusUnLoaded: offline engine unloaded");
            XFLog(@"%@", aObj);
            if ([self.delegate respondsToSelector:@selector(onOfflineUnLoaded)]) {
                [self.delegate onOfflineUnLoaded];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusChunkThirdData: {
            XFLog(@"EVoiceRecognitionClientWorkStatusChunkThirdData: third-party data in the recognition result");
            XFLog(@"%@", aObj);
            break;
        }
        case EVoiceRecognitionClientWorkStatusChunkNlu: {
            XFLog(@"EVoiceRecognitionClientWorkStatusChunkNlu: semantic (NLU) result in the recognition result");
            XFLog(@"%@", aObj);
            break;
        }
        case EVoiceRecognitionClientWorkStatusChunkEnd: {
            XFLog(@"EVoiceRecognitionClientWorkStatusChunkEnd: recognition process ended");
            XFLog(@"%@", aObj);
            break;
        }
        case EVoiceRecognitionClientWorkStatusFeedback: {
            XFLog(@"EVoiceRecognitionClientWorkStatusFeedback: tracking data reported during recognition");
            XFLog(@"%@", aObj);
            break;
        }
        case EVoiceRecognitionClientWorkStatusRecorderEnd: {
            XFLog(@"EVoiceRecognitionClientWorkStatusRecorderEnd: recorder closed; wait for this event before navigating away to avoid the recording status bar issue (iOS)");
            XFLog(@"%@", aObj);
            break;
        }
        case EVoiceRecognitionClientWorkStatusLongSpeechEnd: {
            XFLog(@"EVoiceRecognitionClientWorkStatusLongSpeechEnd: long speech ended");
            XFLog(@"%@", aObj);
            break;
        }
        default:
            break;
    }
}
```

3.1.6 Handling the callback data
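The post does not show the helpers the callback above relies on (getResultStrForObject:, getVolumeByObject:, getErrorMsgWithError:). Below is a minimal sketch of what they might look like, written as a hypothetical `ASRCallbackParser` class so it compiles on its own with Foundation only. It rests on assumptions about the payload types that you should verify against the SDK headers and your own logs: that `aObj` for FlushData/Finish is an `NSDictionary` whose `results_recognition` key holds an array of candidate strings (best first), that the MeterLevel payload is an `NSNumber`, and that the Error payload is an `NSError`.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper class; not part of the Baidu SDK.
@interface ASRCallbackParser : NSObject
+ (NSString *)resultStringFromObject:(id)aObj;
+ (NSInteger)volumeFromObject:(id)aObj;
+ (NSString *)errorMessageFromObject:(id)aObj;
@end

@implementation ASRCallbackParser

// ASSUMPTION: partial/final results arrive as
// @{ @"results_recognition": @[ best, alternative, ... ] }.
// Returns the first candidate, or @"" if the payload has another shape.
+ (NSString *)resultStringFromObject:(id)aObj {
    if (![aObj isKindOfClass:[NSDictionary class]]) {
        return @"";
    }
    id results = ((NSDictionary *)aObj)[@"results_recognition"];
    if (![results isKindOfClass:[NSArray class]]) {
        return @"";
    }
    id first = [(NSArray *)results firstObject];
    return [first isKindOfClass:[NSString class]] ? first : @"";
}

// ASSUMPTION: the meter-level payload is an NSNumber; anything else maps to 0.
+ (NSInteger)volumeFromObject:(id)aObj {
    return [aObj isKindOfClass:[NSNumber class]] ? [aObj integerValue] : 0;
}

// ASSUMPTION: the error payload is an NSError; formats domain, code and description.
+ (NSString *)errorMessageFromObject:(id)aObj {
    if (![aObj isKindOfClass:[NSError class]]) {
        return @"unknown error";
    }
    NSError *error = aObj;
    return [NSString stringWithFormat:@"[%@ %ld] %@",
            error.domain, (long)error.code, error.localizedDescription];
}

@end
```

In the delegate you would call, for example, `[ASRCallbackParser resultStringFromObject:aObj]` where the post calls `[self getResultStrForObject:aObj]`. Each method type-checks its input first, so an unexpected payload yields an empty string or 0 instead of an unrecognized-selector crash.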

3.2 Voice wake-up

3.2.1 Initialize the wake-up object

```objc
self.wakeupEventManager = [BDSEventManager createEventManagerWithName:BDS_WAKEUP_NAME];

- (void)configWakeUpManager {
    // Set the wake-up delegate
    [self.wakeupEventManager setDelegate:self];
    // Offline authorization: APP_ID (the constant defined in 2.3)
    [self.wakeupEventManager setParameter:APP_ID forKey:BDS_WAKEUP_APP_CODE];
    // Path of the wake-up language model file; the default file name is bds_easr_basic_model.dat
    NSString* dat = [[NSBundle mainBundle] pathForResource:@"bds_easr_basic_model" ofType:@"dat"];
    [self.wakeupEventManager setParameter:dat forKey:BDS_WAKEUP_DAT_FILE_PATH];
    // Path of the wake-word file.
    // The default wake-word file is "bds_easr_wakeup_words.dat", whose wake word is "百度一下".
    // For custom wake words, evaluate and download a wake-word file at
    // http://ai.baidu.com/tech/speech/wake and point this parameter at it.
    NSString* words = [[NSBundle mainBundle] pathForResource:@"xiaomingwakeup" ofType:@"dms"];
    [self.wakeupEventManager setParameter:words forKey:BDS_WAKEUP_WORDS_FILE_PATH];
}
```

3.2.2 Start wake-up

```objc
- (void)startWakeup {
    // Clear any audio-file or input-stream source (the demo's default input is the microphone)
    [self.wakeupEventManager setParameter:nil forKey:BDS_WAKEUP_AUDIO_FILE_PATH];
    [self.wakeupEventManager setParameter:nil forKey:BDS_WAKEUP_AUDIO_INPUT_STREAM];
    // Command: load the wake-up engine
    [self.wakeupEventManager sendCommand:BDS_WP_CMD_LOAD_ENGINE];
    // Command: start wake-up
    [self.wakeupEventManager sendCommand:BDS_WP_CMD_START];
}
```

3.2.3 Stop wake-up

```objc
- (void)stopWakeup {
    [self.wakeupEventManager sendCommand:BDS_WP_CMD_STOP];
}
```

3.2.4 Wake-up callbacks

```objc
- (void)WakeupClientWorkStatus:(int)workStatus obj:(id)aObj {
    switch (workStatus) {
        case EWakeupEngineWorkStatusStarted: {
            XFLog(@"EWakeupEngineWorkStatusStarted");
            if ([self.delegate respondsToSelector:@selector(wakeEngineStart)]) {
                [self.delegate wakeEngineStart];
            }
            break;
        }
        case EWakeupEngineWorkStatusStopped: {
            XFLog(@"EWakeupEngineWorkStatusStopped");
            if ([self.delegate respondsToSelector:@selector(wakeEngineStop)]) {
                [self.delegate wakeEngineStop];
            }
            break;
        }
        case EWakeupEngineWorkStatusLoaded: {
            XFLog(@"EWakeupEngineWorkStatusLoaded");
            if ([self.delegate respondsToSelector:@selector(wakeEngineLoaded)]) {
                [self.delegate wakeEngineLoaded];
            }
            break;
        }
        case EWakeupEngineWorkStatusUnLoaded: {
            XFLog(@"EWakeupEngineWorkStatusUnLoaded");
            if ([self.delegate respondsToSelector:@selector(wakeEngineUnLoaded)]) {
                [self.delegate wakeEngineUnLoaded];
            }
            break;
        }
        case EWakeupEngineWorkStatusTriggered: { // Wake word detected; aObj is the wake word
            XFLog(@"EWakeupEngineWorkStatusTriggered: %@", (NSString *)aObj);
            if ([self.delegate respondsToSelector:@selector(wakeEngineTriggered:)]) {
                [self.delegate wakeEngineTriggered:(NSString *)aObj];
            }
            break;
        }
        case EWakeupEngineWorkStatusError: {
            XFLog(@"EWakeupEngineWorkStatusError: %@", aObj);
            if ([self.delegate respondsToSelector:@selector(wakeEngineError:)]) {
                [self.delegate wakeEngineError:(NSError *)aObj];
            }
            break;
        }
        default:
            break;
    }
}
```

3.3 Customizing wake words and the offline recognition grammar

3.3.1 Custom wake words

See the configWakeUpManager method in 3.2.1 (Initialize the wake-up object).
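While experimenting with a custom wake-word file, it can help to fall back to the SDK's default file when the custom one was not bundled, so wake-up still works with "百度一下". A hypothetical sketch: `FirstExistingResource` is an illustrative name, not an SDK API, and like any `resourcePath`-based lookup it assumes the files sit at the top level of the app bundle.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the Baidu SDK): returns the full path of
// the first file in `candidates` that exists inside `directory`, or nil.
static NSString *FirstExistingResource(NSString *directory, NSArray<NSString *> *candidates) {
    NSFileManager *fileManager = [NSFileManager defaultManager];
    for (NSString *name in candidates) {
        NSString *path = [directory stringByAppendingPathComponent:name];
        if ([fileManager fileExistsAtPath:path]) {
            return path;
        }
    }
    return nil;
}

// Possible use inside configWakeUpManager: prefer the custom file from 3.2.1,
// fall back to the default wake-word file:
//   NSString *words = FirstExistingResource([[NSBundle mainBundle] resourcePath],
//       @[@"xiaomingwakeup.dms", @"bds_easr_wakeup_words.dat"]);
//   [self.wakeupEventManager setParameter:words forKey:BDS_WAKEUP_WORDS_FILE_PATH];
```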

3.3.2 Custom offline recognition grammar

```objc
- (void)loadOfflineRecogEngine {
    // Offline engine type: grammar (command-word) engine
    [self.asrEventManager setParameter:@(EVR_OFFLINE_ENGINE_GRAMMER) forKey:BDS_ASR_OFFLINE_ENGINE_TYPE];
    // Grammar file for the command-word engine. Define the grammar from the templates on the
    // official site (http://speech.baidu.com/asr), download the grammar file, and point
    // BDS_ASR_OFFLINE_ENGINE_GRAMMER_FILE_PATH at it.
    NSString *offlineGrammerPath = [[NSBundle mainBundle] pathForResource:@"xfdemo_speech_grammar" ofType:@"dat"];
    [self.asrEventManager setParameter:offlineGrammerPath forKey:BDS_ASR_OFFLINE_ENGINE_GRAMMER_FILE_PATH];
    // Language model file for the command-word engine, used to update the offline grammar dynamically
    NSString *offlineDatPath = [[NSBundle mainBundle] pathForResource:@"bds_easr_basic_model" ofType:@"dat"];
    [self.asrEventManager setParameter:offlineDatPath forKey:BDS_ASR_OFFLINE_ENGINE_DAT_FILE_PATH];
    // Command: load the offline engine
    [self.asrEventManager sendCommand:BDS_ASR_CMD_LOAD_ENGINE];
}
```

This is my first blog post, so criticism and suggestions are very welcome.