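This article was written by the author of the Kinect v2 Examples with MS-SDK plugin. I think it is well worth reading, so I am recommending it here.

If I find the time, I will also translate it faithfully, to make it easier to learn from. After all, not everyone has the patience to read a long article entirely in English.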

Kinect v2 Examples with MS-SDK: Tips, Tricks and Examples

Rumen F. / January 25, 2015

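After answering many questions about how to use the various parts and components of the “Kinect v2 with MS-SDK” asset, I think it would be easier for everyone if I shared some general tips, tricks and examples.

I will add more tips and tricks to this article over time. Check back from time to time for new items.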

Click here to go to the online documentation of the K2-asset.

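Contents:

The purpose of all managers in the KinectScripts-folder

How to use the Kinect v2-Package functionality in your own Unity project

How to use your own model with the AvatarController

How to make the avatar’s hands twist around the bone

How to utilize Kinect to interact with GUI buttons and components

How to get the depth- or color-camera textures

How to get the position of a body joint

How to make a game object rotate as the user

How to make a game object follow user’s head position and rotation

How to get the face-points’ coordinates

How to mix Kinect-captured movement with Mecanim animation

How to add your models to the FittingRoom-demo

How to set up the sensor height and angle

Are there any events, when a user is detected or lost

How to process discrete gestures like swipes and poses like hand-raises

How to process continuous gestures, like ZoomIn, ZoomOut and Wheel

How to utilize visual (VGB) gestures in the K2-asset

How to change the language or grammar for speech recognition

How to run the fitting-room or overlay demo in portrait mode

How to build an exe from ‘Kinect-v2 with MS-SDK’ project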

How to make the Kinect-v2 package work with Kinect-v1

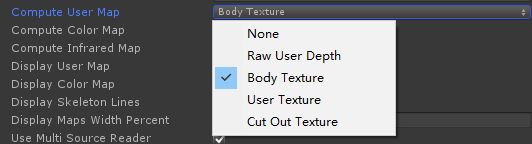

What do the options of ‘Compute user map’-setting mean

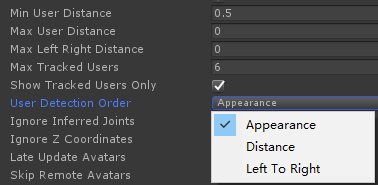

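How to set up the user detection order

How to enable body-blending in the FittingRoom-demo, or disable it to increase FPS

How to build Windows-Store (UWP-8.1) application

How to work with multiple users

How to use the FacetrackingManager

How to use the background-removal image in the FittingRoom-demo

How to move the FPS-avatars of positionally tracked users in VR environment

How to create your own gestures

How to enable or disable the tracking of inferred joints

How to build exe with the Kinect-v2 plugins provided by Microsoft

How to build Windows-Store (UWP-10) application

How to run the projector-demo scene

How to render the background-removal image or the color-camera image on the scene background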

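The purpose of all managers in the KinectScripts-folder:

The managers in the KinectScripts-folder are components. You can use them in your project, depending on the features you need.

KinectManager is the most general component. It is needed to interact with the sensor and get the basic data from it, like the color and depth streams, as well as the positions of the bodies and joints in Kinect space (in meters).

The purpose of AvatarController is to transfer the detected joint positions and orientations to a rigged skeleton.

CubemanController is similar, but it works with transforms and lines to represent the joints and bones, in order to make locating body-tracking issues easier.

FacetrackingManager deals with the face points and the head/neck orientation. If KinectManager is present and active at the same time, it uses FacetrackingManager internally to get more precise positions and orientations of the head and neck.

InteractionManager is used to control the hand cursor and to detect hand grips, releases and clicks.

Finally, SpeechManager is used to recognize voice commands.

The Samples-folder also contains several simple examples (some of them mentioned below). You can learn from them, use them directly, or copy parts of their code into your own scripts.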

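How to use the Kinect v2-Package functionality in your own Unity project:

- Copy the ‘KinectScripts’-folder from the Assets-folder of the package to the Assets-folder of your project. This folder contains the scripts, interfaces and filters.

- Copy the ‘Resources’-folder from the Assets-folder of the package to the Assets-folder of your project. This folder contains all needed libraries and resources. To save space, you can skip the libraries you don’t plan to use (for instance the 64-bit libraries or the Kinect-v1 libraries).

- Copy the ‘Standard Assets’-folder from the Assets-folder of the package to the Assets-folder of your project. It contains the MS wrapper classes for Kinect v2. Wait until Unity detects and compiles the newly copied resources and scripts.

- Add KinectManager.cs as component to the MainCamera or to another game object that persists in the scene. It is really important, as it is the general component that interacts with the sensor to get the basic data.

- Use the other Kinect-related components you need in your project. All of them internally depend on KinectManager.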

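How to use your own model with the AvatarController:

- (Optional) Make sure your model is in T-pose. This is the zero pose of the Kinect joint orientations.

- Select the model-asset in the Assets-folder, then select the ‘Rig’-tab in the Inspector.

- Set the AnimationType to ‘Humanoid’ and the AvatarDefinition to ‘Create from this model’.

- Press the Apply-button. Then press the Configure-button to check if the joints are correctly assigned. After that, exit the configuration window.

- Put the model into the scene.

- Add the AvatarController-script from the KinectScripts-folder as component to the model’s game object in the scene.

- Make sure your model also has an Animator-component, that it is enabled, and that its Avatar-setting is set correctly.

- Enable or disable (as needed) the MirroredMovement and VerticalMovement-settings of the AvatarController-component. Keep in mind: when mirrored movement is enabled, the model should be rotated by 180° around the Y axis.

- Run the scene to test the avatar model. If needed, adjust some settings of AvatarController and try again.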

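How to make the avatar’s hands twist around the bone:

To do it, you need to set the ‘Allowed Hand Rotations’-setting of KinectManager to ‘All’.

KinectManager is a component of the MainCamera in the example scenes.

This setting has three options:

None - turns off all hand rotations,

Default - turns on the hand rotations, except the twists around the bone,

All - turns on all hand rotations.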

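How to utilize Kinect to interact with GUI buttons and components:

1. Add the InteractionManager to the main camera or to another persistent object in the scene. It is used to control the hand cursor and to detect hand grips, releases and clicks. A grip means closing the hand into a fist, with the thumb over the other fingers; a release means opening the hand; a click is generated when the user’s hand stays still (does not move) for about 2 seconds.

2. Enable the ‘Control Mouse Cursor’-setting of the InteractionManager-component. This setting transfers the position and clicks of the hand cursor to the mouse cursor, so you can interact with GUI buttons, toggles and other components.

3. If you need drag-and-drop functionality for interacting with the GUI, enable the ‘Control Mouse Drag’-setting of the InteractionManager-component. It starts dragging when it detects a hand grip, and keeps dragging until the hand is released. If you enable this setting, you can also click on GUI buttons with a hand grip, instead of the usual hand click (i.e. staying in place over the button for about 2 seconds).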
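The hand ‘click’ described in step 1 is a dwell rule: the hand stays still for about 2 seconds. The sketch below shows one way such a rule could be implemented. It is not the InteractionManager source; the class name DwellClickDetector, the normalized cursor coordinates and the 0.03 jitter radius are hypothetical choices for illustration.

```csharp
// Hypothetical sketch (not the InteractionManager source) of a dwell-to-click rule:
// a click is reported once the cursor stays within `radius` of the point where
// it came to rest for at least `dwellSeconds`.
public class DwellClickDetector
{
    private readonly float radius;        // allowed jitter, in normalized screen units (assumption)
    private readonly float dwellSeconds;  // about 2 seconds, as described above
    private float anchorX, anchorY;       // where the hand came to rest
    private float anchorTime;             // when it came to rest (NaN = not yet)
    private bool clickSent;               // report only one click per dwell

    public DwellClickDetector(float radius = 0.03f, float dwellSeconds = 2.0f)
    {
        this.radius = radius;
        this.dwellSeconds = dwellSeconds;
        anchorTime = float.NaN;
    }

    // Call once per frame with the cursor position and the current time in seconds.
    // Returns true only on the frame where a click should be generated.
    public bool Update(float x, float y, float time)
    {
        float dx = x - anchorX, dy = y - anchorY;
        if (float.IsNaN(anchorTime) || dx * dx + dy * dy > radius * radius)
        {
            // The hand moved: restart the dwell timer at the new position.
            anchorX = x;
            anchorY = y;
            anchorTime = time;
            clickSent = false;
            return false;
        }

        if (!clickSent && time - anchorTime >= dwellSeconds)
        {
            clickSent = true;
            return true;
        }
        return false;
    }
}
```

A small radius tolerates sensor jitter without triggering accidental clicks; a larger one makes clicking easier but less deliberate.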

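How to get the depth- or color-camera textures:

First, make sure the ‘Compute User Map’-setting of the KinectManager-component is enabled if you need the depth texture, or the ‘Compute Color Map’-setting if you need the color-camera texture.

Then put code like this in the Update()-method of your script:

```csharp
KinectManager manager = KinectManager.Instance;

if(manager && manager.IsInitialized())
{
    Texture2D depthTexture = manager.GetUsersLblTex();  // user-map (depth) texture
    Texture2D colorTexture = manager.GetUsersClrTex();  // color-camera texture

    // do something with the textures
}
```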

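How to get the position of a body joint:

This is demonstrated in the KinectScripts/Samples/GetJointPositionDemo-script. You can add it as component to a game object in your scene to see it in action. Just select the needed joint and, optionally, enable saving to a csv-file. Don’t forget to also add KinectManager as component to a game object in the scene. It is usually a component of the MainCamera in the example scenes. Here is the main part of the demo script, which retrieves the position of the selected joint:

```csharp
KinectInterop.JointType joint = KinectInterop.JointType.HandRight;

KinectManager manager = KinectManager.Instance;

if(manager && manager.IsInitialized())
{
    if(manager.IsUserDetected())
    {
        long userId = manager.GetPrimaryUserID();

        if(manager.IsJointTracked(userId, (int)joint))
        {
            Vector3 jointPos = manager.GetJointPosition(userId, (int)joint);

            // do something with the joint position
        }
    }
}
```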
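GetJointPositionDemo can optionally save the positions to a csv-file. The text does not describe that file’s format, so the helper below is a hypothetical sketch that writes ‘time,joint,x,y,z’ lines; the class name JointCsv and the column layout are assumptions. In Unity you would pass jointPos.x, jointPos.y and jointPos.z from the snippet above.

```csharp
using System.Globalization;
using System.IO;

// Hypothetical helper: formats joint samples as CSV lines "time,joint,x,y,z".
// The column layout is an assumption, not the format used by GetJointPositionDemo.
public static class JointCsv
{
    public const string Header = "time,joint,x,y,z";

    public static string FormatLine(float time, string jointName, float x, float y, float z)
    {
        // InvariantCulture keeps '.' as the decimal separator on every system locale,
        // so the comma stays an unambiguous column delimiter.
        return string.Format(CultureInfo.InvariantCulture,
            "{0:F3},{1},{2:F3},{3:F3},{4:F3}", time, jointName, x, y, z);
    }

    // Appends one sample; writes the header first if the file does not exist yet.
    public static void Append(string path, float time, string jointName, float x, float y, float z)
    {
        bool writeHeader = !File.Exists(path);
        using (var writer = new StreamWriter(path, append: true))
        {
            if (writeHeader)
                writer.WriteLine(Header);
            writer.WriteLine(FormatLine(time, jointName, x, y, z));
        }
    }
}
```

Using the invariant culture matters here: on systems with a comma as decimal separator, a plain ToString() would produce values like "1,500" and break the columns.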

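How to make a game object rotate as the user:

This is similar to the previous example and is demonstrated in the KinectScripts/Samples/FollowUserRotation-script. To see it in action, you can create a cube in your scene and add the script as a component to it.

Don’t forget to add KinectManager as component to a game object in the scene. It is usually a component of the MainCamera in the example scenes.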

How to make a game object follow user’s head position and rotation?

You need the KinectManager and FacetrackingManager added as components to a game object in the scene.

For example, they are components of the MainCamera in the KinectAvatarsDemo-scene.

Then, to get the position of the head and the orientation of the neck, you need code like this in your script:

```csharp
KinectManager manager = KinectManager.Instance;

if(manager && manager.IsInitialized())
{
    if(manager.IsUserDetected())
    {
        long userId = manager.GetPrimaryUserID();

        if(manager.IsJointTracked(userId, (int)KinectInterop.JointType.Head))
        {
            Vector3 headPosition = manager.GetJointPosition(userId, (int)KinectInterop.JointType.Head);
            Quaternion neckRotation = manager.GetJointOrientation(userId, (int)KinectInterop.JointType.Neck);

            // do something with the head position and neck orientation
        }
    }
}
```

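How to get the face-points’ coordinates:

You need a reference to the respective FaceFrameResult-object.

This is demonstrated in the KinectScripts/Samples/GetFacePointsDemo-script. You can add it as component to a game object in the scene, to see it in action. To get the coordinates of a face point in your script, call its public GetFacePoint()-function.

Don’t forget to add KinectManager and FacetrackingManager as components to a game object in the scene. Usually they are components of the MainCamera in the KinectAvatarsDemo-scene.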

How to mix Kinect-captured movement with Mecanim animation

- Use the AvatarControllerClassic instead of the AvatarController-component. Assign only those joints that have to be animated by the sensor.

- Set the SmoothFactor-setting of AvatarControllerClassic to 0, to apply the detected bone orientations instantly.

- Create an avatar-body-mask and apply it to the Mecanim animation layer. In this mask, disable the Mecanim animations of the Kinect-animated joints mentioned above. Do not disable the root-joint!

- Enable the ‘Late Update Avatars’-setting of KinectManager (component of MainCamera in the example scenes).

- Run the scene to check the setup. When a player gets recognized by the sensor, part of his joints will be animated by the AvatarControllerClassic component, and the other part by the Animator component.

How to add your models to the FittingRoom-demo

1. For each of your fbx-models, import the model and select it in the Assets-view in Unity editor.

2. Select the Rig-tab in Inspector. Set the AnimationType to ‘Humanoid’ and the AvatarDefinition to ‘Create from this model’.

3. Press the Apply-button. Then press the Configure-button to check if all required joints are correctly assigned. The clothing models usually don’t use all joints, which can make the avatar definition invalid. In this case you can manually assign the missing joints (shown in red).

4. Keep in mind: The joint positions in the model must match the structure of the Kinect-joints. You can see them, for instance, in the KinectOverlayDemo2. Otherwise the model may not overlay the user’s body properly.

5. Create a sub-folder for your model category (Shirts, Pants, Skirts, etc.) in the FittingRoomDemo/Resources-folder.

6. In the model category folder, create sub-folders with subsequent numbers (0000, 0001, 0002, etc.) for all models imported in step 1.

7. Move your models into these numerical folders, one model per folder, along with the needed materials and textures. Rename the model’s fbx-file to ‘model.fbx’.

8. You can put a preview image for each model in jpeg-format (100 x 143px, 24bpp) in the respective model folder. Then rename it to ‘preview.jpg.bytes’. If you don’t put a preview image, the fitting-room demo will display ‘No preview’ in the model-selection menu.

9. Open the FittingRoomDemo1-scene.

10. Add a ModelSelector-component for your model category to the KinectController game object. Set its ‘Model category’-setting to be the same as the name of the sub-folder created in step 5 above. Set the ‘Number of models’-setting to the number of sub-folders created in step 6 above.

11. The other settings of your ModelSelector-component must be similar to the existing ModelSelector in the demo, i.e. ‘Model relative to camera’ must be set to ‘BackgroundCamera’, ‘Foreground camera’ must be set to ‘MainCamera’, and ‘Continuous scaling’ must be enabled. The scale-factor settings may initially be set to 1 and the ‘Vertical offset’-setting to 0. Later you can adjust them slightly to provide the best model-to-body overlay.

12. Enable the ‘Keep selected model’-setting of the ModelSelector-component, if you want the selected model to continue overlaying the user’s body after the model category changes. This is useful if there are several categories (i.e. ModelSelectors), for instance for shirts, pants, skirts, etc. In this case the selected shirt model will still overlay the user’s body when the category changes and the user starts selecting pants, for instance.

13. The CategorySelector-component provides gesture control for changing models and categories, and takes care of switching model categories (e.g. for shirts, pants, ties, etc.) for the same user. There is already a CategorySelector for the 1st user (player-index 0) in the scene, so you don’t need to add more.

14. If you plan for a multi-user fitting-room, add one CategorySelector-component for each other user. You may also need to add the respective ModelSelector-components for the model categories that will be used by these users.

15. Run the scene to ensure that your models can be selected in the list and that they overlay the user’s body correctly. Experiment a bit if needed, to find the values of the scale-factor and vertical-offset settings that provide the best model-to-body overlay.

16. If you want to turn off the cursor interaction in the scene, disable the InteractionManager-component of the KinectController-game object. If you want to turn off the gestures (swipes for changing models and hand raises for changing categories), disable the respective settings of the CategorySelector-component. If you want to turn off or change the T-pose calibration, change the ‘Player calibration pose’-setting of the KinectManager-component.

17. Last, but not least: You can use the FittingRoomDemo2-scene to utilize or experiment with a single overlay model. If needed, adjust the scale-factor settings of AvatarScaler to fine-tune the scale of the whole body, or of the arm- or leg-bones of the model. Enable the ‘Continuous Scaling’-setting, if you want the model to rescale on each Update.
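Because the fitting-room demo relies on a fixed folder layout (numbered folders holding ‘model.fbx’ and an optional ‘preview.jpg.bytes’), a quick check can catch missing files before you run the scene. The checker below is a hypothetical editor-side sketch, not part of the K2-asset; it assumes four-digit, zero-based folder names as in the 0000, 0001, 0002 example above.

```csharp
using System.Collections.Generic;
using System.IO;

// Hypothetical checker for the fitting-room folder layout:
// Resources/<category>/0000/model.fbx, .../0001/model.fbx, and so on.
public static class FittingRoomLayout
{
    // Folder name for the i-th model, zero-padded to four digits (0 -> "0000").
    public static string ModelFolder(string category, int index)
    {
        return Path.Combine(category, index.ToString("D4"));
    }

    // Returns the problems found under resourcesRoot for `numberOfModels` models.
    // An empty list means the layout matches the 'Number of models'-setting.
    public static List<string> Check(string resourcesRoot, string category, int numberOfModels)
    {
        var problems = new List<string>();
        for (int i = 0; i < numberOfModels; i++)
        {
            string folder = Path.Combine(resourcesRoot, ModelFolder(category, i));
            if (!File.Exists(Path.Combine(folder, "model.fbx")))
                problems.Add(folder + ": missing model.fbx");
            if (!File.Exists(Path.Combine(folder, "preview.jpg.bytes")))
                problems.Add(folder + ": no preview (the menu will show 'No preview')");
        }
        return problems;
    }
}
```

A missing preview is reported but is not fatal, matching the behavior described in step 8; a missing ‘model.fbx’ is the case that actually breaks the model list.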

How to set up the sensor height and angle

There are two very important settings of the KinectManager-component that influence the calculation of users’ and joints’ space coordinates, hence almost all user-related visualizations in the demo scenes.

Here is how to set them correctly:

- Set the ‘Sensor height’-setting to how high above the ground the sensor is, in meters. The default value is 1, i.e. 1.0 meter above the ground, which may not match your setup.

- Set the ‘Sensor angle’-setting to the tilt angle of the sensor, in degrees. Use positive degrees if the sensor is tilted up, negative degrees if it is tilted down. The default value is 0, which means the sensor is not tilted at all.

- Because it is not so easy to estimate the sensor angle manually, you can use the ‘Auto height angle’-setting to find out this value. Select the ‘Show info only’-option and run the demo-scene. Then stand in front of the sensor. The information on screen will show you the rough height and angle settings, as estimated by the sensor itself. Repeat this 2-3 times and write down the values you see.

- Finally, set the ‘Sensor height’ and ‘Sensor angle’ to the estimated values you find best. Set the ‘Auto height angle’-setting back to ‘Dont use’.

- If you find the height and angle values estimated by the sensor good enough, or if your sensor setup is not fixed, you can set the ‘Auto height angle’-setting to ‘Auto update’. It will update the ‘Sensor height’ and ‘Sensor angle’-settings continuously, when there are users in the field of view of the sensor.
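To see why these two settings affect all user-related coordinates, the sketch below applies the geometry they describe: rotate a sensor-space point by the tilt angle around the X axis, then add the sensor height. This is an illustration under assumed axis conventions (Y up, Z pointing away from the sensor), not KinectManager’s own code; SensorToWorld is a hypothetical name.

```csharp
using System;

// Illustration of what 'Sensor height' and 'Sensor angle' imply geometrically:
// rotate a sensor-space point (meters) by the tilt around the X axis, then lift
// it by the sensor height. Axis and sign conventions here are assumptions.
public static class SensorToWorld
{
    // angleDeg > 0: sensor tilted up; angleDeg < 0: tilted down (as in the setting).
    public static (double X, double Y, double Z) Transform(
        double x, double y, double z, double heightM, double angleDeg)
    {
        double a = angleDeg * Math.PI / 180.0;
        double cos = Math.Cos(a), sin = Math.Sin(a);

        // With an upward tilt, a point straight ahead of the sensor (0, 0, z)
        // is actually higher above the floor, hence the +z*sin term.
        double worldY = y * cos + z * sin + heightM;
        double worldZ = -y * sin + z * cos;
        return (x, worldY, worldZ);
    }
}
```

For example, with a sensor height of 1.0 and an angle of -30, a point 2 m along the sensor’s viewing axis maps to a height of about 0, i.e. it lies on the floor. With wrong settings the same point would appear to float or sink, which is why almost all visualizations depend on them.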

Are there any events, when a user is detected or lost

There are no special event handlers for user-detected/user-lost events, but there are two other options you can use:

- In the Update()-method of your script, invoke the GetUsersCount()-function of KinectManager and compare the returned value to a previously saved value, like this:

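```csharp
// usersSaved is an int field of your script (e.g. private int usersSaved = 0;),
// holding the users count from the previous frame.
KinectManager manager = KinectManager.Instance;

if(manager && manager.IsInitialized())
{
    int usersNow = manager.GetUsersCount();

    if(usersNow > usersSaved)
    {
        // new user detected
    }

    if(usersNow < usersSaved)
    {
        // user lost
    }

    usersSaved = usersNow;
}
```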

- Create a class that implements KinectGestures.GestureListenerInterface and add it as component to a game object in the scene. It has methods UserDetected() and UserLost(), which you can use as user-event handlers. The other methods could be left empty or return the default value (true). See the SimpleGestureListener or GestureListener-classes, if you need an example.
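If you prefer C# events over inline checks, the count comparison above can be wrapped in a small class. UserCountWatcher is a hypothetical name; only GetUsersCount() comes from the text. In Unity you would call watcher.Update(manager.GetUsersCount()) from your script’s Update().

```csharp
using System;

// Hypothetical wrapper that turns the user-count comparison into C# events.
// Feed it the value of GetUsersCount() once per frame.
public class UserCountWatcher
{
    public event Action<int> UsersAdded;  // argument: how many users appeared
    public event Action<int> UsersLost;   // argument: how many users disappeared

    private int usersSaved;

    public void Update(int usersNow)
    {
        if (usersNow > usersSaved)
            UsersAdded?.Invoke(usersNow - usersSaved);
        else if (usersNow < usersSaved)
            UsersLost?.Invoke(usersSaved - usersNow);

        usersSaved = usersNow;
    }
}
```

Like the original comparison, it cannot notice one user leaving and another arriving in the same frame, because the count stays the same. The UserDetected()/UserLost() callbacks of a gesture listener work per user, so they may suit that case better.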

How to process discrete gestures like swipes and poses like hand-raises

Most of the gestures, like SwipeLeft, SwipeRight, Jump, Squat, etc. are discrete. All poses, like RaiseLeftHand, RaiseRightHand, etc. are also considered as discrete gestures. This means these gestures may report progress or not, but all of them get completed or cancelled at the end. Processing these gestures in a gesture-listener script is relatively easy.

You need to do as follows:

- In the UserDetected()-function of the script add the following line for each gesture you need to track:

```csharp
// manager = KinectManager.Instance, userId = the ID of the detected user
manager.DetectGesture(userId, KinectGestures.Gestures.xxxxx);
```

- In GestureCompleted() add code to process the discrete gesture, like this:

```csharp
if(gesture == KinectGestures.Gestures.xxxxx)
{
    // gesture is detected - process it (for instance, set a flag or execute an action)
}
```

- In the GestureCancelled()-function, add code to process the cancellation of the discrete gesture:

```csharp
if(gesture == KinectGestures.Gestures.xxxxx)
{
    // gesture is cancelled - process it (for instance, clear the flag)
}
```

If you need code samples, see the SimpleGestureListener.cs or CubeGestureListener.cs-scripts.

- From v2.8 on, KinectGestures.cs is no longer a static class, but a component that may be extended, for instance with the detection of new gestures or poses. You need to add it as component to the KinectController-game object, if you need gesture or pose detection in the scene.
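A common way to consume discrete gestures is a one-shot flag: set it in GestureCompleted(), clear it in GestureCancelled(), and reset it once the action has run. The sketch below shows this pattern outside Unity. DemoGesture is a hypothetical stand-in for KinectGestures.Gestures, and the method names only mirror the listener callbacks; the real callbacks may take more parameters.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for KinectGestures.Gestures, limited to names from the text.
public enum DemoGesture { SwipeLeft, SwipeRight, Jump, Squat, RaiseLeftHand, RaiseRightHand }

// Sketch of the flag pattern for discrete gestures: GestureCompleted() sets a
// one-shot flag, GestureCancelled() clears it, and game code consumes it once.
public class DiscreteGestureFlags
{
    private readonly HashSet<DemoGesture> completed = new HashSet<DemoGesture>();

    public void GestureCompleted(DemoGesture gesture) { completed.Add(gesture); }

    public void GestureCancelled(DemoGesture gesture) { completed.Remove(gesture); }

    // Returns true once per completion and clears the flag, so one swipe
    // triggers one action even if game code polls every frame.
    public bool Consume(DemoGesture gesture) { return completed.Remove(gesture); }
}
```

Consuming the flag in the game logic (rather than acting directly inside the callback) keeps the listener thin and makes it easy to ignore a gesture while, for instance, a menu animation is still running.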

How to process continuous gestures, like ZoomIn, ZoomOut and Wheel

Some of the gestures, like ZoomIn, ZoomOut and Wheel, are continuous. This means these gestures never get fully completed, but only report progress greater than 50%, as long as the gesture is detected. To process them in a gesture-listener script, do as follows:

- In the UserDetected()-function of the script add the following line for each gesture you need to track:

```csharp
// manager = KinectManager.Instance, userId = the ID of the detected user
manager.DetectGesture(userId, KinectGestures.Gestures.xxxxx);
```

- In GestureInProgress() add code to process the continuous gesture, like this:

```csharp
if(gesture == KinectGestures.Gestures.xxxxx)
{
    if(progress > 0.5f)
    {
        // gesture is detected - process it (for instance, set a flag, get zoom factor or angle)
    }
    else
    {
        // gesture is no longer detected - process it (for instance, clear the flag)
    }
}
```

- In the GestureCancelled()-function, add code to process the end of the continuous gesture:

```csharp
if(gesture == KinectGestures.Gestures.xxxxx)
{
    // gesture is cancelled - process it (for instance, clear the flag)
}
```

If you need code samples, see the SimpleGestureListener.cs or ModelGestureListener.cs-scripts.

- From v2.8 on, KinectGestures.cs is no longer a static class, but a component that may be extended, for instance with the detection of new gestures or poses. You need to add it as component to the KinectController-game object, if you need gesture or pose detection in the scene.
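The progress rule for continuous gestures can be isolated in a tiny state object: active while the progress is above 0.5, inactive otherwise and after cancellation. ContinuousGestureState is hypothetical, and the `value` argument stands for whatever zoom factor or wheel angle your listener derives; how the K2-asset reports that value is not covered here.

```csharp
// Sketch of the progress rule for continuous gestures (ZoomIn, ZoomOut, Wheel):
// the gesture counts as active while progress > 0.5, and GestureCancelled() ends it.
// Hypothetical class; `neutralValue` is what the value falls back to when inactive
// (e.g. 1 for a zoom factor, 0 for a wheel angle).
public class ContinuousGestureState
{
    private readonly float neutralValue;

    public bool IsActive { get; private set; }
    public float Value { get; private set; }

    public ContinuousGestureState(float neutralValue = 1f)
    {
        this.neutralValue = neutralValue;
        Value = neutralValue;
    }

    // Call from GestureInProgress() with the reported progress (0..1).
    public void OnProgress(float progress, float value)
    {
        IsActive = progress > 0.5f;
        Value = IsActive ? value : neutralValue;  // while not detected, report the neutral value
    }

    // Call from GestureCancelled().
    public void OnCancelled()
    {
        IsActive = false;
        Value = neutralValue;
    }
}
```

Falling back to a neutral value means a camera zoom or a steering wheel stops changing as soon as the gesture is no longer detected, instead of keeping the last reported value.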

How to utilize visual (VGB) gestures in the K2-asset

The visual gestures, created by the Visual Gesture Builder (VGB) can be used in the K2-asset, too. To do it, follow these steps (and see the VisualGestures-game object and its components in the KinectGesturesDemo-scene):

- Copy the gestures’ database (xxxxx.gbd) to the Resources-folder and rename it to ‘xxxxx.gbd.bytes’.

- Add the VisualGestureManager-script as a component to a game object in the scene (see VisualGestures-game object).

- Set the ‘Gesture Database’-setting of VisualGestureManager-component to the name of the gestures’ database, used in step 1 (‘xxxxx.gbd’).

- Create a visual-gesture-listener to process the gestures, and add it as a component to a game object in the scene (see the SimpleVisualGestureListener-script).

- In the GestureInProgress()-function of the gesture-listener add code to process the detected continuous gestures and in the GestureCompleted() add code to process the detected discrete gestures.

How to change the language or grammar for speech recognition

- Make sure you have installed the needed language pack from here.

- Set the ‘Language code’-setting of SpeechManager-component, as to the grammar language you need to use. The list of language codes can be found here (see ‘LCID Decimal’).

- Make sure the ‘Grammar file name’-setting of SpeechManager-component corresponds to the name of the grxml.txt-file in Assets/Resources.

- Open the grxml.txt-grammar file in Assets/Resources and set its ‘xml:lang’-attribute to the language that corresponds to the language code in step 2.

- Make the other needed modifications in the grammar file and save it.

- (Optional since v2.7) Delete the grxml-file with the same name in the root-folder of your Unity project (the parent folder of Assets-folder).

- Run the scene to check, if speech recognition works correctly.

How to run the fitting-room or overlay demo in portrait mode

- First off, add 9:16 (or 3:4) aspect-ratio to the Game view’s list of resolutions, if it is missing.

- Select the 9:16 (or 3:4) aspect ratio of Game view, to set the main-camera output in portrait mode.

- Open the fitting-room or overlay-demo scene and select the BackgroundImage-game object.

- Enable its PortraitBackground-component (available since v2.7) and save the scene.

- Run the scene to try it out in portrait mode.

How to build an exe from ‘Kinect-v2 with MS-SDK’ project

By default Unity builds the exe (and the respective xxx_Data-folder) in the root folder of your Unity project. It is recommended to use another, empty folder instead. The reason is that building the exe in the folder of your Unity project may cause conflicts between the native libraries used by the editor and the ones used by the exe, if they have different architectures (for instance, the editor is 64-bit, but the exe is 32-bit).

Also, before building the exe, make sure you’ve copied the Assets/Resources-folder from the K2-asset to your Unity project. It contains the needed native libraries and custom shaders. Optionally you can remove the unneeded zip.bytes-files from the Resources-folder. This will save a lot of space in the build. For instance, if you target Kinect-v2 only, you can remove the Kinect-v1 and OpenNi2-related zipped libraries. The exe won’t need them anyway.

How to make the Kinect-v2 package work with Kinect-v1

If you have only Kinect v2 SDK or Kinect v1 SDK installed on your machine, the KinectManager should detect the installed SDK and sensor correctly. But in case you have both Kinect SDK 2.0 and SDK 1.8 installed simultaneously, the KinectManager will put preference on Kinect v2 SDK and your Kinect v1 will not be detected. The reason for this is that you can use SDK 2.0 in offline mode as well, i.e. without sensor attached. In this case you can emulate the sensor by playing recorded files in Kinect Studio 2.0.

If you want to make the KinectManager utilize the appropriate interface, depending on the currently attached sensor, open KinectScripts/Interfaces/Kinect2Interface.cs and at its start change the value of ‘sensorAlwaysAvailable’ from ‘true’ to ‘false’. After this, close and reopen the Unity editor. Then, on each start, the KinectManager will try to detect which sensor is currently attached to your machine and use the respective sensor interface. This way you could switch the sensors (Kinect v2 or v1), as to your preference, but will not be able to use the offline mode for Kinect v2. To utilize the Kinect v2 offline mode again, you need to switch ‘sensorAlwaysAvailable’ back to true.
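The selection behavior described above can be summarized as a small decision function. This is a simplified model for illustration only: the real detection in KinectManager and the sensor interfaces performs its own SDK and sensor checks, and SensorSelection and its parameters are hypothetical.

```csharp
public enum SensorInterface { None, KinectV2, KinectV1 }

// Simplified, hypothetical model of the selection rule described above.
public static class SensorSelection
{
    public static SensorInterface Choose(
        bool v2SdkInstalled, bool v1SdkInstalled,
        bool sensorAlwaysAvailable,   // the flag at the start of Kinect2Interface.cs
        bool v2SensorAttached, bool v1SensorAttached)
    {
        // With sensorAlwaysAvailable == true, Kinect v2 counts as available even
        // without a sensor (offline mode with Kinect Studio 2.0 playback), so it wins.
        if (v2SdkInstalled && (sensorAlwaysAvailable || v2SensorAttached))
            return SensorInterface.KinectV2;

        // Otherwise the interface of the sensor that is actually attached is used.
        if (v1SdkInstalled && v1SensorAttached)
            return SensorInterface.KinectV1;

        return SensorInterface.None;
    }
}
```

The first branch is why a Kinect v1 goes undetected when both SDKs are installed and the flag is left at ‘true’.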

What do the options of ‘Compute user map’-setting mean

Here are one-line descriptions of the available options:

RawUserDepth means that only the raw depth image values, coming from the sensor will be available, via the GetRawDepthMap()-function for instance;

BodyTexture means that GetUsersLblTex()-function will return the white image of the tracked users;

UserTexture will cause GetUsersLblTex() to return the tracked users’ histogram image;

CutOutTexture, combined with an enabled ‘Compute color map’-setting, means that GetUsersLblTex() will return the cut-out image of the users.

All these options (except RawUserDepth) can be tested instantly, if you enable the ‘Display user map’-setting of the KinectManager-component, too.

How to set up the user detection order

There is a ‘User detection order’-setting of the KinectManager-component. You can use it to determine how the user detection should be done, depending on your requirements. Here are short descriptions of the available options:

Appearance is selected by default. It means that the player indices are assigned in order of user appearance. The first detected user gets player index 0, the next one gets index 1, etc. If user 0 gets lost, the remaining users are not reordered; the next newly detected user will take its place;

Distance means that player indices are assigned depending on distance of the detected users to the sensor. The closest one will get player index 0, the next closest one – index 1, etc. If a user gets lost, the player indices are reordered, depending on the distances to the remaining users;

Left to right means that player indices are assigned depending on the X-position of the detected users. The leftmost one will get player index 0, the next leftmost one – index 1, etc. If a user gets lost, the player indices are reordered, depending on the X-positions of the remaining users;

The user-detection area can be further limited with the ‘Min user distance’, ‘Max user distance’ and ‘Max left right distance’-settings, in meters from the sensor. The maximum number of detected users can be limited by lowering the value of the ‘Max tracked user’-setting.
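The three detection orders differ mainly in whether player indices are re-sorted every frame. The sketch below models that difference: Appearance keeps the slots stable and fills the first free one, while Distance and Left to right re-sort on each call. It is not KinectManager’s implementation; TrackedUser, PlayerIndexAssigner, using Z as the distance, treating smaller X as ‘further left’, and 0 meaning ‘no limit’ for the maximum distance are all assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum DetectionOrder { Appearance, Distance, LeftToRight }

// Hypothetical tracked user: non-zero body-tracking ID and position in meters
// (X sideways, Z away from the sensor).
public class TrackedUser
{
    public long Id; public float X; public float Z;
    public TrackedUser(long id, float x, float z) { Id = id; X = x; Z = z; }
}

// Sketch of the 'User detection order' options. Slot i of the returned array
// holds the user ID with player index i (0 = free slot).
public class PlayerIndexAssigner
{
    private readonly DetectionOrder order;
    private readonly long[] slots;            // length = max tracked users
    private readonly float minDist, maxDist;  // meters; maxDist 0 = no limit (assumption)

    public PlayerIndexAssigner(DetectionOrder order, int maxTrackedUsers, float minDist = 0f, float maxDist = 0f)
    {
        this.order = order;
        slots = new long[maxTrackedUsers];
        this.minDist = minDist;
        this.maxDist = maxDist;
    }

    // Call once per frame with the users currently seen, in order of first detection.
    public long[] Assign(IEnumerable<TrackedUser> seen)
    {
        // Limit the detection area by distance from the sensor.
        var users = seen.Where(u => u.Z >= minDist && (maxDist <= 0f || u.Z <= maxDist)).ToList();

        if (order == DetectionOrder.Appearance)
        {
            // Free the slots of lost users, without shifting the remaining ones.
            for (int i = 0; i < slots.Length; i++)
                if (slots[i] != 0 && !users.Any(u => u.Id == slots[i]))
                    slots[i] = 0;

            // A newly detected user takes the first free slot.
            foreach (var u in users)
            {
                if (Array.IndexOf(slots, u.Id) >= 0) continue;
                int free = Array.IndexOf(slots, 0L);
                if (free >= 0) slots[free] = u.Id;
            }
        }
        else
        {
            // Distance / LeftToRight: re-sort every frame, so indices shift when a user is lost.
            var sorted = order == DetectionOrder.Distance
                ? users.OrderBy(u => u.Z)
                : users.OrderBy(u => u.X);
            var ids = sorted.Select(u => u.Id).Take(slots.Length).ToList();
            for (int i = 0; i < slots.Length; i++)
                slots[i] = i < ids.Count ? ids[i] : 0;
        }
        return (long[])slots.Clone();
    }
}
```

The stable slots of Appearance suit installations where each player controls a fixed avatar; the re-sorting orders suit setups where player 0 should always be, for instance, the person closest to the sensor.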

How to enable body-blending in the FittingRoom-demo, or disable it to increase FPS

If you select the MainCamera in the KinectFittingRoom1-demo scene (in v2.10 or above), you will see a component called UserBodyBlender. It is responsible for mixing the clothing model (overlaying the user) with the real-world objects (including the user’s body parts), depending on the distance to the camera. For instance, if your arms or other real-world objects are in front of the model, you will see them overlaying the model, as expected.

You can enable the component to turn on the user’s body-blending functionality. The ‘Depth threshold’-setting may be used to adjust the minimum distance to the front of the model (in meters). It determines when a real-world object will become visible. It is set by default to 0.1m, but you could experiment a bit to see if any other value works better for your models. If the scene performance (in terms of FPS) is not sufficient, and body-blending is not important, you can disable the UserBodyBlender-component to increase performance.
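The ‘Depth threshold’ can be read as a per-pixel depth test. The sketch below shows that idea in plain C#: a real-world pixel covers the model when it is closer to the camera than the model surface by more than the threshold. This is an assumption about how such blending can work, not the UserBodyBlender source; BodyBlend and the "0 means no data" convention are hypothetical.

```csharp
using System;

// Sketch of a per-pixel depth test in the spirit of the 'Depth threshold'-setting.
// Depths are in meters; 0 means "no data" (assumption).
public static class BodyBlend
{
    public static bool ShowRealWorld(float realDepth, float modelDepth, float threshold = 0.1f)
    {
        if (realDepth <= 0f) return false;  // no sensor depth here: keep the model
        if (modelDepth <= 0f) return true;  // no model at this pixel: the real world shows anyway
        return realDepth < modelDepth - threshold;
    }

    // Builds a mask for a whole frame: true where the real world should cover the model.
    public static bool[] Mask(float[] realDepth, float[] modelDepth, float threshold = 0.1f)
    {
        if (realDepth.Length != modelDepth.Length)
            throw new ArgumentException("Depth buffers must have the same size.");

        var mask = new bool[realDepth.Length];
        for (int i = 0; i < mask.Length; i++)
            mask[i] = ShowRealWorld(realDepth[i], modelDepth[i], threshold);
        return mask;
    }
}
```

A per-pixel test like this runs over the whole frame every update, which illustrates why turning body-blending off can noticeably improve FPS.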

How to build Windows-Store (UWP-8.1) application

To do it, you need at least v2.10.1 of the K2-asset. To build for ‘Windows store’, first select ‘Windows store’ as platform in ‘Build settings’, and press the ‘Switch platform’-button. Then do as follows:

- Unzip Assets/Plugins-Metro.zip. This will create Assets/Plugins-Metro-folder.

- Delete the KinectScripts/SharpZipLib-folder.

- Optionally, delete all zip.bytes-files in Assets/Resources. You won’t need these libraries in Windows/Store. All Kinect-v2 libraries reside in Plugins-Metro-folder.

- Select ‘File / Build Settings’ from the menu. Add the scenes you want to build. Select ‘Windows Store’ as platform. Select ‘8.1’ as target SDK. Then click the Build-button. Select an empty folder for the Windows-store project and wait for the build to complete.

- Go to the build-folder and open the generated solution (.sln-file) with Visual Studio.

- Change the default ARM-processor target to ‘x86’. The Kinect sensor is not compatible with ARM processors.

- Right click ‘References’ in the Project-window and select ‘Add reference’. Select ‘Extensions’ and then the WindowsPreview.Kinect and Microsoft.Kinect.Face libraries. Then press OK.

- Open the solution’s manifest-file ‘Package.appxmanifest’, go to the ‘Capabilities’-tab and enable ‘Microphone’ and ‘Webcam’ in the left panel. Save the manifest. This is needed to enable the sensor when the UWP app starts up. Thanks to Yanis Lukes (aka Pendrokar) for providing this info!

- Build the project. Run it, to test it locally. Don’t forget to turn on Windows developer mode on your machine.

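How to work with multiple users

Kinect-v2 can track up to 6 users at the same time. That’s why many of the Kinect-related components, like AvatarController, InteractionManager, the model- and category-selectors, and the gesture and interaction listeners, have a setting called ‘Player index’. If it is set to 0, the respective component will track the first detected user. If it is set to 1, the component will track the second detected user. If it is set to 2, the third user, etc. The order and the way users are detected can be specified with the ‘User detection order’-setting of KinectManager (a component of the KinectController-game object).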

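How to use the FacetrackingManager

The FacetrackingManager-component may be used for several purposes.

First, adding it as a component of KinectController will provide more precise neck and head tracking, when there are avatars in the scene (humanoid models with the AvatarController-component). If HD face tracking is needed, you can enable the ‘Get face model data’-setting of the FacetrackingManager-component. Keep in mind that HD face tracking lowers performance and may cause memory leaks, which can cause Unity crashes after multiple scene restarts. Please use this feature carefully.

When ‘Get face model data’ is enabled, don’t forget to assign a mesh object (e.g. a Quad) to the ‘Face model mesh’-setting. Pay attention to the ‘Textured model mesh’-setting, too.

The available options are:

‘None’ - the mesh will not be textured;

‘Color map’ - the mesh will get its texture from the color-camera image, i.e. it will reproduce the user’s face;

‘Face rectangle’ - the face mesh will be textured with its material’s Albedo texture, while the UV coordinates will match the detected face rectangle.

Finally, you can use the public API of FacetrackingManager to get a lot of face-tracking data, like the user’s head position and rotation, animation units, shape units, face model vertices, etc.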

How to use the background-removal image in the FittingRoom-demo

To replace the color-camera background in the FittingRoom-demo scene with the background-removal image, please do as follows:

- Add the KinectScripts/BackgroundRemovalManager.cs-script as component to the KinectController-game object in the scene.

- Make sure the ‘Compute user map’-setting of KinectManager (component of the KinectController, too) is set to ‘Body texture’, and the ‘Compute color map’-setting is enabled.

- Open the OverlayController-script (also a component of KinectController-game object), and near the end of script replace: ‘backgroundImage.texture = manager.GetUsersClrTex();’ with: ‘backgroundImage.texture = BackgroundRemovalManager.Instance.GetForegroundTex();’.

- Select the MainCamera in Hierarchy, and disable its UserBodyBlender-component.

How to move the FPS-avatars of positionally tracked users in VR environment

There are two options for moving first-person avatars in VR-environment (the 1st avatar-demo scene in K2VR-asset):

- If you use the Kinect’s positional tracking, turn off the Oculus/Vive positional tracking, because their coordinates are different from Kinect’s.

- If you prefer to use the Oculus/Vive positional tracking:
  - enable the ‘External root motion’-setting of the AvatarController-component of the avatar’s game object. This will disable the avatar motion according to Kinect spatial coordinates.
  - enable the HeadMover-component of the avatar’s game object, and assign the MainCamera as ‘Target transform’, to follow the Oculus/Vive position.

Now try to run the scene. If there are issues with the MainCamera used as positional target, do as follows:

- add an empty game object to the scene. It will be used to follow the Oculus/Vive positions.
- assign the newly created game object to the ‘Target transform’-setting of the HeadMover-component.
- add a script to the newly created game object, and in that script’s Update()-function programmatically set the object’s transform position to the current Oculus/Vive position.

How to create your own gestures

For gesture recognition there are two options – visual gestures (created with the Visual Gesture Builder, part of Kinect SDK 2.0) and programmatic gestures that are programmatically implemented in KinectGestures.cs. The latter are based mainly on the positions of the different joints, and how they stand to each other in different moments of time.

Here is a video on creating and checking for visual gestures. Please also check GesturesDemo/VisualGesturesDemo-scene, to see how to use visual gestures in Unity. One issue with the visual gestures is that they usually work in 32-bit builds only.

The programmatic gestures should be coded in C#, in KinectGestures.cs (or a class that extends it). To get started with coding programmatic gestures, first read the ‘How to use gestures…’-pdf document in the _Readme-folder of the K2-asset. It may seem difficult at first, but it’s only a matter of time and experience to become an expert in coding gestures. You have direct access to the jointsPos-array, containing all joint positions, and the jointsTracked-array, containing the respective joint-tracking states. Keep in mind that all joint positions are in world coordinates, in meters. Some helper functions are also at your disposal, like SetGestureJoint(), SetGestureCancelled(), CheckPoseComplete(), etc. Maybe I’ll write a separate tutorial about gesture coding in the near future.
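As an illustration of a programmatic pose, the sketch below checks whether the hand is held above the head for a given time and reports progress from 0 to 1, using joint positions in meters and tracking flags like those in the jointsPos- and jointsTracked-arrays. It is a hypothetical example in the spirit of KinectGestures.cs, not code from it: RaisedHandPose, the 0.1 m margin and the 1 s hold time are assumptions, and System.Numerics.Vector3 stands in for Unity’s Vector3 so the sketch runs outside Unity.

```csharp
using System;
using System.Numerics;

// Hypothetical RaiseHand-like pose detector: compares joint positions over time.
// Not the KinectGestures.cs implementation.
public class RaisedHandPose
{
    private readonly float minHeightAboveHead;  // meters the hand must be above the head
    private readonly float holdSeconds;         // how long the pose must be held
    private float poseStart = -1f;              // time the pose began, -1 = not in pose

    public RaisedHandPose(float minHeightAboveHead = 0.1f, float holdSeconds = 1.0f)
    {
        this.minHeightAboveHead = minHeightAboveHead;
        this.holdSeconds = holdSeconds;
    }

    // Returns progress in 0..1; 1 means the pose is complete.
    // An untracked joint cancels the pose, like a cancelled gesture.
    public float Update(Vector3 hand, bool handTracked, Vector3 head, bool headTracked, float time)
    {
        bool inPose = handTracked && headTracked && hand.Y - head.Y >= minHeightAboveHead;
        if (!inPose)
        {
            poseStart = -1f;
            return 0f;
        }

        if (poseStart < 0f)
            poseStart = time;

        return Math.Min(1f, (time - poseStart) / holdSeconds);
    }
}
```

Requiring the pose to be held for a while, rather than completing it on the first matching frame, filters out the brief spikes that noisy joint data can produce.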

The demo scenes related to utilizing programmatic gestures are again in the GesturesDemo-folder. The KinectGesturesDemo1-scene shows how to utilize discrete gestures, and the KinectGesturesDemo2-scene is about continuous gestures.

More tips regarding listening for gestures in Unity scenes can be found above. See the tips for discrete, continuous and visual gestures (visual gestures can be either discrete or continuous).

How to enable or disable the tracking of inferred joints

First, keep in mind that:

- There is ‘Ignore inferred joints’-setting of the KinectManager.

- There is a public API method of KinectManager, called IsJointTracked(). This method is utilized by various scripts & components in the demo scenes.

Here is how it works: the Kinect SDK tracks the positions of all body joints, together with their respective tracking states. These states can be Tracked, NotTracked or Inferred. When the ‘Ignore inferred joints’-setting is enabled, the IsJointTracked()-method returns true when the tracking state is Tracked, and false when the state is NotTracked or Inferred. I.e. only the really tracked joints are considered valid. When the setting is disabled, the IsJointTracked()-method returns true when the tracking state is Tracked or Inferred, and false when the state is NotTracked. I.e. both tracked and inferred joints are considered valid.
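The rule comes down to one conditional on the setting, following its name: with ‘Ignore inferred joints’ enabled, only Tracked joints count as valid. Below is a Python sketch, assuming the three tracking states listed above (in the SDK’s order NotTracked, Inferred, Tracked). `is_joint_tracked` is a hypothetical stand-in, not the KinectManager source.

```python
from enum import Enum


class TrackingState(Enum):
    NOT_TRACKED = 0
    INFERRED = 1
    TRACKED = 2


def is_joint_tracked(state: TrackingState, ignore_inferred_joints: bool) -> bool:
    if ignore_inferred_joints:
        # Only the really tracked joints are valid.
        return state is TrackingState.TRACKED
    # Tracked and inferred joints are both valid.
    return state is not TrackingState.NOT_TRACKED


# Usage: filter the joints of one body frame.
frame = {"Head": TrackingState.TRACKED,
         "HandLeft": TrackingState.INFERRED,
         "FootRight": TrackingState.NOT_TRACKED}
print([j for j, s in frame.items() if is_joint_tracked(s, ignore_inferred_joints=True)])
# ['Head']
print([j for j, s in frame.items() if is_joint_tracked(s, ignore_inferred_joints=False)])
# ['Head', 'HandLeft']
```

Enabling the setting trades fewer jittery, guessed joint positions for more joints reported as missing, e.g. when a hand is hidden behind the body.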

How to build exe with the Kinect-v2 plugins provided by Microsoft

In case you’re targeting Kinect-v2 sensor only, and would like to skip packing all native libraries that come with the K2-asset in the build, as well as unpacking them into the working directory of the executable afterwards, do as follows:

- Download and unzip the Kinect-v2 Unity Plugins from here.

- Open your Unity project. Select ‘Assets / Import Package / Custom Package’ from the menu and import only the Plugins-folder from ‘Kinect.2.0.1410.19000.unitypackage’. You can find it in the unzipped package from p.1 above. Please don’t import anything from the ‘Standard Assets’-folder of unitypackage. All needed standard assets are already present in the K2-asset.

- If you are using the FacetrackingManager in your scenes, import the Plugins-folder from ‘Kinect.Face.2.0.1410.19000.unitypackage’ as well. If you are using visual gestures (i.e. VisualGestureManager in your scenes), import the Plugins-folder from ‘Kinect.VisualGestureBuilder.2.0.1410.19000.unitypackage’, too. Again, please don’t import anything from the ‘Standard Assets’-folder of unitypackages. All needed standard assets are already present in the K2-asset.

- Delete all zipped libraries in the Assets/Resources-folder. You can see them as .zip-files in the Assets-window, or as .zip.bytes-files in Windows Explorer. Delete the Plugins-Metro zip-file in the Assets-folder, too. All these zipped libraries are no longer needed at run-time.

- Delete all dlls in the root-folder of your Unity project. The root-folder is the parent-folder of the Assets-folder of your project, and is not visible in the Editor. Delete the NuiDatabase- and vgbtechs-folders in the root-folder, too. These dlls and folders are no longer needed, because they are now part of the project’s Plugins-folder.

- Try to run the Kinect-v2 related scenes in the project, to make sure they still work as expected.

- If everything is OK, build the executable again. This should work for both x86 and x86_64-architectures, as well as for Windows-Store, SDK 8.1.
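The clean-up part of the steps above (deleting the zipped libraries, the root-folder dlls and the NuiDatabase- and vgbtechs-folders) can be scripted. Below is a hedged Python sketch, not part of the K2-asset: the function names are hypothetical, the on-disk name of the Plugins-Metro zip is an assumption, and it also removes the Unity .meta file next to each deleted asset. Back up the project before deleting anything.

```python
import shutil
from pathlib import Path
from typing import Iterable, List


def find_obsolete_k2_files(project_root: str) -> List[Path]:
    """List the files and folders that the steps above say are no longer needed."""
    root = Path(project_root)
    assets = root / "Assets"
    found: List[Path] = []
    # Zipped libraries in Assets/Resources (.zip in Unity, .zip.bytes on disk).
    found += (assets / "Resources").glob("*.zip.bytes")
    # The Plugins-Metro zip-file in the Assets-folder (exact extension assumed).
    found += [p for p in assets.glob("Plugins-Metro*")
              if ".zip" in p.name and p.suffix != ".meta"]
    # Native dlls and data folders in the project root-folder.
    found += root.glob("*.dll")
    found += [root / name for name in ("NuiDatabase", "vgbtechs") if (root / name).is_dir()]
    return sorted(found)


def delete_paths(paths: Iterable[Path]) -> None:
    """Delete each path, plus the Unity .meta file next to it, if any."""
    for path in paths:
        if path.is_dir():
            shutil.rmtree(path)
        else:
            path.unlink()
        meta = path.with_name(path.name + ".meta")
        if meta.exists():
            meta.unlink()
```

Splitting "find" from "delete" lets you call find_obsolete_k2_files() first and review its output, and call delete_paths() only when the list looks right.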

How to build Windows-Store (UWP-10) application

To do it, you need at least v2.12.2 of the K2-asset. Then follow these steps:

- Delete the KinectScripts/SharpZipLib-folder. It is not needed for UWP. If you leave it, it will cause syntax errors later.

- Open ‘File / Build Settings’ in the Unity editor, switch to the ‘Windows Store’ platform and select ‘Universal 10’ as SDK. Optionally enable the ‘Unity C# Project’ and ‘Development build’-settings, if you’d like to edit the Unity scripts in Visual Studio later.

- Press the ‘Build’-button, select an output folder and wait for Unity to finish exporting the UWP Visual Studio solution.

- Close or minimize the Unity editor, then open the exported UWP solution in Visual Studio.

- Select x86 or x64 as target platform in Visual Studio.

- Open ‘Package.appxmanifest’ of the main project, and on the ‘Capabilities’-tab enable ‘Microphone’ and ‘Webcam’. These can also be enabled in the Player settings of the Windows Store platform in Unity.

- If you have enabled the ‘Unity C# Project’-setting in p.2 above, right-click the ‘Assembly-CSharp’-project in the Solution Explorer, select ‘Properties’ from the context menu, and then select ‘Windows 10 Anniversary Edition (10.0; Build 14393)’ as ‘Target platform’. Otherwise you will get compilation errors.

- Build and run the solution, on the local or remote machine. It should work now.
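To double-check the capabilities step before building, a small Python sketch can read the app manifest (Package.appxmanifest) and report which of the two device capabilities are still missing. It assumes the standard Windows 10 manifest layout, where `<DeviceCapability Name="microphone"/>` and `<DeviceCapability Name="webcam"/>` sit under `<Capabilities>` in the default foundation namespace. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List

# Default namespace of the <Package> root element in Windows 10 (UWP) manifests.
FOUNDATION_NS = "http://schemas.microsoft.com/appx/manifest/foundation/windows10"


def missing_device_capabilities(manifest_root: ET.Element,
                                required: Iterable[str] = ("microphone", "webcam")) -> List[str]:
    """Return the required device capabilities that the manifest does not declare."""
    declared = {el.get("Name")
                for el in manifest_root.iter(f"{{{FOUNDATION_NS}}}DeviceCapability")}
    return [cap for cap in required if cap not in declared]


# Usage with an inline sample; for the real file use ET.parse(path).getroot().
sample = """<Package xmlns="http://schemas.microsoft.com/appx/manifest/foundation/windows10">
  <Capabilities>
    <Capability Name="internetClient" />
    <DeviceCapability Name="microphone" />
    <DeviceCapability Name="webcam" />
  </Capabilities>
</Package>"""
print(missing_device_capabilities(ET.fromstring(sample)))  # []
```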

Please mind that the FacetrackingManager and SpeechRecognitionManager-components, and hence the scenes that use them, will not work with the current version of the K2-UWP interface.

How to run the projector-demo scene (v2.13 and later)

To run the KinectProjectorDemo-scene, you need to calibrate the projector to the Kinect sensor first. To do it, please follow these steps:

- Go to the RoomAliveToolkit-GitHub page and follow the instructions of ‘ProCamCalibration README’ there.

- To do the calibration, you first need to build the ProCamCalibration-project with Microsoft Visual Studio 2015 or later. For your convenience, here is a ready-made build of the needed executables, made with VS-2015.

- After the ProCamCalibration finishes, copy the generated calibration xml-file to KinectDemos/ProjectorDemo/Resources.

- Open the KinectProjectorDemo-scene in the Unity editor, select the MainCamera-game object in the Hierarchy, and drag the calibration xml-file generated by ProCamCalibrationTool to the ‘Calibration Xml’-setting of its ProjectorCamera-component. Please also check whether the ‘Proj name in config’ is the same as the projector name set in the calibration xml-file.

- Run the scene and walk in front of the Kinect sensor, to check whether the skeleton projection is overlaid correctly by the projector over your body.

How to render the background-removal image or the color-camera image on scene background

First off, to replace the color-camera background in the FittingRoom-demo scene with the background-removal image, please follow the steps in the tip on using the background-removal image in the FittingRoom-demo.

In all other demo-scenes, you can put the background-removal image or the color-camera image on the scene background by following this (rather complex) procedure:

- Make sure there is a BackgroundImage-object in the scene. If there isn’t any, create an empty game object, name it BackgroundImage, and add a ‘GUI Texture’-component to it. Set its Transform position to (0.5, 0.5, 0) to center it on the screen, then set its Y-scale to -1 to flip the texture vertically (Unity textures have their origin at the bottom-left corner, while Kinect images have theirs at the top-left).

- Add the ForegroundToImage-script component to the BackgroundImage-game object, as well as the BackgroundRemovalManager-component to the KinectController-game object. ForegroundToImage will use the foreground texture, created by BackgroundRemovalManager, as the texture of the GUI-Texture component. If you want to render the color-camera image instead, add the BackgroundColorImage-component to the KinectController-game object and set its ‘Background Image’-setting to reference the BackgroundImage-game object from p.1.

- (The tricky part) Two cameras are needed to display an image on the scene background: one to render the background, and a second one to render all objects on top of the background. Cameras in Unity have a setting called Culling Mask, where you can set the layers rendered by each camera. Hence, an extra layer is needed for the background. Select ‘Add layer’ from the Layer-dropdown in the top-right corner of the Inspector and add ‘Background Layer’. Then go back to the BackgroundImage and set its layer to ‘Background Layer’. Unfortunately, when Unity exports packages, it doesn’t export the extra layers. That’s why the extra layers are invisible in the demo-scenes.

- Make sure there is a BackgroundCamera-object in the scene. If there isn’t any, add a camera-object to the scene and name it BackgroundCamera. Set its Culling Mask to ‘Background Layer’ only. Other important settings are ‘Depth’, which determines the order of rendering, and ‘Clear flags’, which determines whether the camera clears the output texture or not. Set the ‘Clear flags’ of BackgroundCamera to ‘Skybox’ or ‘Solid color’, and its ‘Depth’ to 0. This means this camera will render first, and will clear the output texture before that. Also, don’t forget to disable its AudioListener-component. Otherwise expect endless warnings about multiple audio listeners when the scene runs.

- Select the ‘Main Camera’-object in the scene. Set its ‘Clear flags’ to ‘Depth only’ and its ‘Depth’ to 1. This means it will not clear the output image, and will render second. In the ‘Culling Mask’-setting, un-select ‘Background Layer’ and leave all other layers selected. This way the BackgroundCamera renders first and draws only the Background Layer, while the MainCamera renders second and draws everything else on top of it.
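Why this two-camera setup works can be seen in a toy model of Unity’s layer masks and camera depth. The Python sketch below is an illustration under stated assumptions, not Unity API: the layer indices are hypothetical, and ‘Clear flags’ are reduced to a yes/no flag (a clearing camera wipes the image, ‘Depth only’ keeps it and draws on top).

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical layer indices: Unity's built-in layers use the low indices,
# and a user layer such as 'Background Layer' typically gets index 8 or above.
LAYERS: Dict[str, int] = {"Default": 0, "UI": 5, "Background Layer": 8}


def layer_mask(*names: str) -> int:
    """Build a culling-mask bitmask with one bit set per named layer."""
    mask = 0
    for name in names:
        mask |= 1 << LAYERS[name]
    return mask


@dataclass
class Camera:
    name: str
    depth: int          # lower depth renders first
    culling_mask: int   # bit i set => layer i is rendered by this camera
    clears: bool        # True for 'Skybox'/'Solid color', False for 'Depth only'


def render_order(cameras: List[Camera], objects: Dict[str, str]) -> List[str]:
    """Return the objects visible in the final image, in back-to-front order.
    Simplification: a clearing camera wipes everything drawn before it."""
    frame: List[str] = []
    for cam in sorted(cameras, key=lambda c: c.depth):
        if cam.clears:
            frame.clear()
        frame += [obj for obj, layer in objects.items()
                  if cam.culling_mask & (1 << LAYERS[layer])]
    return frame


scene_objects = {"BackgroundImage": "Background Layer", "Avatar": "Default", "Cubeman": "Default"}
background_cam = Camera("BackgroundCamera", 0, layer_mask("Background Layer"), clears=True)
main_cam = Camera("Main Camera", 1, ~layer_mask("Background Layer"), clears=False)
print(render_order([main_cam, background_cam], scene_objects))
# ['BackgroundImage', 'Avatar', 'Cubeman']
```

Setting the MainCamera’s `clears` to True in this model leaves only `['Avatar', 'Cubeman']`, which matches the symptom of the background image disappearing when the MainCamera still clears with ‘Skybox’ or ‘Solid color’.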