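For installation, see https://blog.51cto.com/liuqs/2027365. It is best to use the same component versions as that article; otherwise the configuration below may not work exactly as shown.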

(1) prometheus + grafana + alertmanager: configuring host monitoring

(2) prometheus + grafana + alertmanager: configuring MySQL monitoring

(3) prometheus + grafana + alertmanager: configuring Redis monitoring

(4) prometheus + grafana + alertmanager: configuring Kafka monitoring

(5) prometheus + grafana + alertmanager: configuring ES monitoring

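(1) prometheus + grafana + alertmanager: configuring host monitoring

1. Configure prometheus (log in to the prometheus server; prometheus, grafana and alertmanager all run on this same server)

a. Open /data/monitor/prometheus/conf/prometheus.yml with vim. The configuration is shown below (all monitored nodes are listed in a separate json file):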

global:
  # How often the server scrapes its targets
  scrape_interval: 1m
  # How often alerting rules are evaluated
  evaluation_interval: 1m
  # Timeout for a single scrape
  scrape_timeout: 20s
  # Global (external) labels
  #external_labels:
  #  monitor: "usa"

# Connect to alertmanager
alerting:
  alertmanagers:
    - static_configs:
        - targets: ["localhost:9093"]

# Alerting rules
rule_files:
  - /data/monitor/prometheus/conf/rule/*.yml

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # Monitor the prometheus host itself
  - job_name: 'prometheus'
    scrape_interval: 15s
    static_configs:
      - targets: ['10.8.9.2:9090']

  # Monitor the listed hosts
  - job_name: 'node_resources'
    scrape_interval: 1m
    file_sd_configs:
      - files:
          - /data/monitor/prometheus/conf/node_conf/node_host_info.json
    honor_labels: true
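The `node_resources` job sets `honor_labels: true`. This only matters when a scraped series already carries a label that Prometheus would also attach itself (job, instance, or one of the labels from node_host_info.json). With `true` the scraped value wins; with `false` Prometheus keeps the scraped value under an `exported_` prefix and attaches its own. The sketch below is a simplified Python model of that rule, not Prometheus code. `merge_labels` and the scraped `hostname` label are hypothetical.

```python
def merge_labels(target_labels, scraped_labels, honor_labels):
    """Simplified model of how Prometheus resolves target/scraped label clashes."""
    result = {}
    for name, value in scraped_labels.items():
        if name in target_labels and not honor_labels:
            # honor_labels: false -> the scraped value survives as exported_<name>
            result["exported_" + name] = value
        else:
            result[name] = value
    for name, value in target_labels.items():
        if name in scraped_labels and honor_labels:
            # honor_labels: true -> the scraped value wins, server-side label dropped
            continue
        result[name] = value
    return result


# Target labels as they would come from node_host_info.json plus job/instance.
target = {
    "job": "node_resources",
    "instance": "10.8.9.35:9100",
    "desc": "ba_backend_10.8.9.35",
    "group": "ba",
    "host_ip": "10.8.9.35",
    "hostname": "ba_backend",
}
# Hypothetical scraped series that happens to carry its own "hostname" label.
scraped = {"__name__": "node_load1", "hostname": "web-01"}

print(merge_labels(target, scraped, honor_labels=True)["hostname"])   # web-01
print(merge_labels(target, scraped, honor_labels=False)["hostname"])  # ba_backend
```

A plain node_exporter normally exposes none of these labels, so both settings give the same result here. The difference shows up with sources such as federation that bring their own job and instance labels.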

b. The node host json file:

cat /data/monitor/prometheus/conf/node_conf/node_host_info.json

[
  {
    "labels": {
      "desc": "ba_backend_10.8.9.35",
      "group": "ba",
      "host_ip": "10.8.9.35",
      "hostname": "ba_backend"
    },
    "targets": [
      "10.8.9.35:9100"
    ]
  },
  {
    "labels": {
      "desc": "ba3_10.8.32.67",
      "group": "ba",
      "host_ip": "10.8.32.67",
      "hostname": "ba3"
    },
    "targets": [
      "10.8.32.67:9100"
    ]
  },
  {
    "labels": {
      "desc": "ba1_10.8.46.117",
      "group": "ba",
      "host_ip": "10.8.46.117",
      "hostname": "ba1"
    },
    "targets": [
      "10.8.46.117:9100"
    ]
  },
  {
    "labels": {
      "desc": "ba2_10.8.80.126",
      "group": "ba",
      "host_ip": "10.8.80.126",
      "hostname": "ba2"
    },
    "targets": [
      "10.8.80.126:9100"
    ]
  },
  {
    "labels": {
      "desc": "openplatform_10.8.69.81",
      "group": "openplatform",
      "host_ip": "10.8.69.81",
      "hostname": "openplatform"
    },
    "targets": [
      "10.8.69.81:9100"
    ]
  }
]
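Every host entry follows the same pattern: desc is hostname_ip and the target is ip:9100. That means the file can be generated rather than edited by hand. The following is a minimal sketch, assuming that naming convention holds for all hosts. `build_targets` and `write_atomically` are hypothetical helpers. Prometheus watches this file for changes, so writing to a temporary file and renaming it over the original is a precaution that keeps it from reading a half-written list.

```python
import json
import os
import tempfile


def build_targets(hosts, port=9100):
    """Build file_sd target groups using the label layout of node_host_info.json."""
    groups = []
    for hostname, group, ip in hosts:
        groups.append({
            "labels": {
                "desc": f"{hostname}_{ip}",
                "group": group,
                "host_ip": ip,
                "hostname": hostname,
            },
            "targets": [f"{ip}:{port}"],
        })
    return groups


def write_atomically(path, groups):
    """Write JSON to a temp file in the same directory, then rename it over `path`."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(groups, f, indent=2)
    os.replace(tmp_path, path)  # atomic on the same filesystem


hosts = [
    ("ba_backend", "ba", "10.8.9.35"),
    ("openplatform", "openplatform", "10.8.69.81"),
]
print(json.dumps(build_targets(hosts)[0], indent=2))
# write_atomically("/data/monitor/prometheus/conf/node_conf/node_host_info.json",
#                  build_targets(hosts))
```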

c. Run cd /data/monitor/prometheus, then sh start.sh to start prometheus, and check with netstat -nltp | grep prometheus that port 9090 is listening.

d. On each server to be monitored (10.8.9.35, 10.8.32.67, 10.8.46.117, 10.8.80.126, 10.8.69.81), download and install node_exporter (download: https://pan.baidu.com/s/1gi-BM0rWWaGGKyWzUBFLPg). Extract it to /data/, then run cd /data/node_exporter and sh start.sh to start the service, and check with netstat -nltp | grep node_exporter that port 9100 is listening.
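netstat only checks the local machine. To confirm from the prometheus server that each node's port 9100 accepts connections, or that 9090 is up locally, a plain TCP connect is enough. Here is a minimal standard-library sketch. `port_is_listening` is a hypothetical helper. A successful connect only proves that something is listening on the port, not that it is node_exporter.

```python
import socket


def port_is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False


# Example (run from the prometheus server):
# print("prometheus", port_is_listening("10.8.9.2", 9090))
# for ip in ["10.8.9.35", "10.8.32.67", "10.8.46.117", "10.8.80.126", "10.8.69.81"]:
#     print(ip, port_is_listening(ip, 9100))
```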

e. Open the prometheus web UI at http://10.8.9.2:9090 in a browser, click Graph in the menu bar, enter node_boot_time in the expression box, and click Execute to check that data is returned.
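The same check can be scripted against Prometheus's HTTP API, where `/api/v1/query` evaluates an instant query and returns JSON. The sketch below lists which instances return a `node_boot_time` sample, so a missing host is easy to spot. The helper names are hypothetical. Note that node_exporter 0.16 and later renamed many metrics (for example `node_boot_time` became `node_boot_time_seconds`), which is one reason to match the component versions mentioned at the top.

```python
import json
import urllib.parse
import urllib.request


def query_url(base_url, promql):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return base_url.rstrip("/") + "/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def values_by_instance(response):
    """Map instance -> sample value from an instant-vector response body."""
    if response.get("status") != "success":
        raise RuntimeError(response.get("error", "query failed"))
    return {
        series["metric"].get("instance"): float(series["value"][1])  # value = [ts, "str"]
        for series in response["data"]["result"]
    }


def query_instances(base_url, promql, timeout=10):
    """Run the query and return instance -> value."""
    with urllib.request.urlopen(query_url(base_url, promql), timeout=timeout) as resp:
        return values_by_instance(json.load(resp))


# Example:
# print(query_instances("http://10.8.9.2:9090", "node_boot_time"))
```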

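2. Configure grafana

a. Start grafana with /etc/init.d/grafana start, then check with netstat -nltp | grep grafana that port 3000 is listening.

b. Open http://10.8.9.2:3000 in a browser and log in to grafana. The default username and password are both admin.

c. Click the Configuration button, then click Data Sources to configure a data source.

d. On the Data Sources page, click Add data source.

e. On the data source type page, select Prometheus.

f. On the Prometheus data source settings page, enter Prometheus as the Name and tick Default. Set the URL to http://localhost:9090 (prometheus and grafana run on the same machine), then click Save & Test to save it.

g. Download the host monitoring dashboard template to your own computer: https://pan.baidu.com/s/19RLrebLh5lI3nla4jaq1QA (you can also build your own: click the + icon and choose a panel).

h. In grafana, click + and then Import.

i. On the import page, click Upload .json File and select the template you just downloaded.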

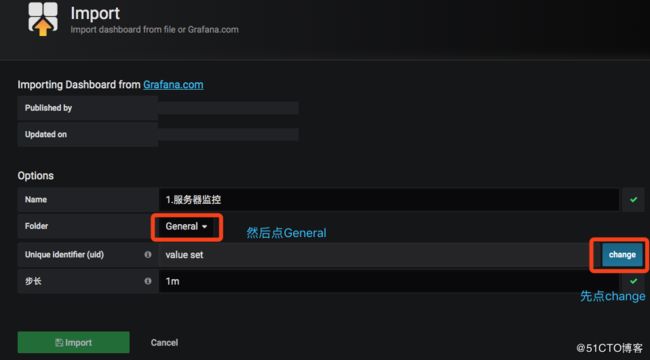

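j. Click Change to change the template ID, then click General, then New Folder, then Cancel, and finally click Import to import the template.

k. The data is now displayed.

l. You can of course also modify the imported template, add panels, or change its settings.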
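Steps c to j can also be done through Grafana's HTTP API, which helps when several Grafana instances need the same setup. The sketch below uses only the standard library and assumes the default admin/admin credentials from step b and the data source settings from step f. The helper names are hypothetical. `POST /api/datasources` creates a data source and `POST /api/dashboards/db` saves a dashboard. If the downloaded template was exported for sharing, it contains an `__inputs` section with data source placeholders. In that case the UI import in steps h to j is the simpler route, because this endpoint does not fill those placeholders in.

```python
import base64
import json
import urllib.request

GRAFANA_URL = "http://10.8.9.2:3000"
USER, PASSWORD = "admin", "admin"  # defaults from step b; change them after first login


def grafana_post(path, payload):
    """Build an authenticated JSON POST request for the Grafana HTTP API."""
    token = base64.b64encode(f"{USER}:{PASSWORD}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        GRAFANA_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": "Basic " + token},
        method="POST",
    )


def datasource_request():
    """Data source with the settings from step f."""
    return grafana_post("/api/datasources", {
        "name": "Prometheus",
        "type": "prometheus",
        "url": "http://localhost:9090",
        "access": "proxy",  # Grafana's server fetches the data, so localhost is the Grafana host
        "isDefault": True,
    })


def dashboard_request(path):
    """Save a dashboard JSON file; id is cleared so Grafana assigns a new one."""
    with open(path, encoding="utf-8") as f:
        dashboard = json.load(f)
    dashboard["id"] = None
    return grafana_post("/api/dashboards/db", {"dashboard": dashboard, "overwrite": False})


# Example:
# with urllib.request.urlopen(datasource_request()) as resp:
#     print(resp.status, resp.read().decode())
```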

3. Configure alertmanager

a. Configure the alerting rules: cat /data/monitor/prometheus/conf/rule/host.yml

groups:
  - name: host_alert
    rules:
      ### Disk ###
      # Default alert for the system disk
      - alert: 主机系统盘80%
        expr: floor(100-((node_filesystem_avail{device!="rootfs", mountpoint="/"}*100)/(node_filesystem_size{device!="rootfs", mountpoint="/"}*0.95))) >= 80
        for: 3m
        labels:
          severity: warning
        annotations:
          description: "[{{ $labels.desc }}], value: [{{ $value }}%], pending duration: 3 minutes."

      # Default alert for data disks of 120G or less
      - alert: 主机数据盘90%
        expr: (floor(100-((node_filesystem_avail{device!="rootfs", mountpoint="/data"}*100)/(node_filesystem_size{device!="rootfs", mountpoint="/data"}*0.95))) >= 90) and (node_filesystem_size{device!="rootfs", mountpoint="/data"}/1024/1024/1024 <= 120)
        for: 3m
        labels:
          severity: warning
        annotations:
          description: "[{{ $labels.desc }}], value: [{{ $value }}%], pending duration: 3 minutes."

      # Default alert for data disks larger than 120G
      - alert: 主机数据盘不足20G
        expr: (floor(node_filesystem_avail{device!="rootfs", mountpoint="/data"}/1024/1024/1024) <= 20) and (node_filesystem_size{device!="rootfs", mountpoint="/data"}/1024/1024/1024 > 120)
        for: 3m
        labels:
          severity: warning
        annotations:
          description: "[{{ $labels.desc }}], value: [{{ $value }}G], pending duration: 3 minutes."

      ### CPU ###
      # Default CPU usage alert
      - alert: 主机CPU90%
        expr: floor(100 - ( avg ( irate(node_cpu{mode='idle', hostname!~'consumer_service.*|backup_hk.*|bigdata.*master.*|3rdPart|htc_management|product_category_backend|sa_cluster_s.*'}[5m]) ) by (job, instance, hostname, desc) * 100 )) >= 90
        for: 3m
        labels:
          severity: warning
        annotations:
          description: "[{{ $labels.desc }}], value: [{{ $value }}%], pending duration: 3 minutes."

      # CPU usage alert with a longer pending duration
      - alert: 主机CPU90%
        expr: floor(100 - ( avg ( irate(node_cpu{mode='idle', hostname=~'consumer_service.*|product_backend|sa_cluster_s.*'}[5m]) ) by (job, instance, hostname, desc) * 100 )) >= 90
        for: 12m
        labels:
          severity: warning
        annotations:
          description: "[{{ $labels.desc }}], value: [{{ $value }}%], pending duration: 12 minutes."

      # CPU usage alert with a longer pending duration
      - alert: 主机CPU90%
        expr: floor(100 - ( avg ( irate(node_cpu{mode='idle', hostname=~'bigdata.*master.*|3rdPart|backup_hk.*'}[5m]) ) by (job, instance, hostname, desc) * 100 )) >= 90
        for: 48m
        labels:
          severity: warning
        annotations:
          description: "[{{ $labels.desc }}], value: [{{ $value }}%], pending duration: 48 minutes."

      ### Memory ###
      # Default memory usage alert
      - alert: 主机内存95%
        expr: floor((node_memory_MemTotal - node_memory_MemFree - node_memory_Cached - node_memory_Buffers) / node_memory_MemTotal * 100) >= 95
        for: 3m
        labels:
          severity: warning
        annotations:
          description: "[{{ $labels.desc }}], value: [{{ $value }}%], pending duration: 3 minutes."

      ### Load ###
      # Default high-load alert
      - alert: 主机负载过高
        expr: floor(node_load1{hostname!~"sa_cluster_s.*|bigdata.*master.*"}) >= 20
        for: 3m
        labels:
          severity: warning
        annotations:
          description: "[{{ $labels.desc }}], value: [{{ $value }}], pending duration: 3 minutes."

      # High-load alert with a longer pending duration
      - alert: 主机负载过高
        expr: floor(node_load1{hostname=~"sa_cluster_s.*|bigdata.*master.*"}) >= 20
        for: 12m
        labels:
          severity: warning
        annotations:
          description: "[{{ $labels.desc }}], value: [{{ $value }}], pending duration: 12 minutes."
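The disk and memory arithmetic can be reproduced outside Prometheus to sanity-check the thresholds before deploying them. The sketch below re-implements the rule expressions in Python for single values. It is not PromQL and ignores `for:` durations and label matchers. The function names are hypothetical. The `* 0.95` factor treats 95% of the raw size as usable space, presumably because ext filesystems reserve about 5% for root by default.

```python
import math

GIB = 1024 ** 3


def disk_used_percent(avail_bytes, size_bytes):
    """floor(100 - avail*100 / (size*0.95)), the expression used by the disk rules."""
    return math.floor(100 - (avail_bytes * 100) / (size_bytes * 0.95))


def system_disk_alert(avail_bytes, size_bytes):
    """主机系统盘80% fires when the value is >= 80."""
    value = disk_used_percent(avail_bytes, size_bytes)
    return ("主机系统盘80%", value) if value >= 80 else None


def data_disk_alert(avail_bytes, size_bytes):
    """Which /data rule applies depends on whether the disk is <= 120G."""
    if size_bytes / GIB <= 120:
        value = disk_used_percent(avail_bytes, size_bytes)
        return ("主机数据盘90%", value) if value >= 90 else None
    free_gib = math.floor(avail_bytes / GIB)
    return ("主机数据盘不足20G", free_gib) if free_gib <= 20 else None


def memory_used_percent(total, free, cached, buffers):
    """floor((MemTotal - MemFree - Cached - Buffers) / MemTotal * 100)."""
    return math.floor((total - free - cached - buffers) / total * 100)


# system_disk_alert(5 * GIB, 50 * GIB)   -> ('主机系统盘80%', 89)
# data_disk_alert(4 * GIB, 100 * GIB)    -> ('主机数据盘90%', 95)
# data_disk_alert(15 * GIB, 500 * GIB)   -> ('主机数据盘不足20G', 15)
```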

b. Reload prometheus so the new rules take effect: cd /data/monitor/prometheus, then sh reload.sh
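The contents of reload.sh are not shown. Prometheus re-reads its configuration and rule files when it receives SIGHUP, or on an HTTP POST to `/-/reload`. In Prometheus 2.x the HTTP endpoint is only enabled when the server runs with `--web.enable-lifecycle`. Below is a minimal sketch of both options. The function names are hypothetical, and which mechanism reload.sh actually uses is unknown. If the new configuration is invalid, Prometheus logs an error and keeps running with the previous one, so check the log after reloading.

```python
import os
import signal
import urllib.request


def reload_request(base_url="http://localhost:9090"):
    """Build the POST /-/reload request (needs --web.enable-lifecycle on Prometheus 2.x)."""
    return urllib.request.Request(base_url.rstrip("/") + "/-/reload", data=b"", method="POST")


def reload_via_http(base_url="http://localhost:9090", timeout=10):
    """Send the reload request and return the HTTP status code."""
    with urllib.request.urlopen(reload_request(base_url), timeout=timeout) as resp:
        return resp.status


def reload_via_signal(pid):
    """Send SIGHUP to the Prometheus process with the given PID (Unix only)."""
    os.kill(pid, signal.SIGHUP)
```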

c. Configure alertmanager: cat /data/prometheus/alertmanager/conf/alertmanager.yml

global:
  resolve_timeout: 2m
  smtp_auth_password: q5AYahvxi3WLDap3  # password of the sending mailbox
  smtp_auth_username: [email protected]  # sending mailbox
  smtp_from: [email protected]  # sending mailbox
  smtp_require_tls: false
  smtp_smarthost: smtp.163.com:465  # outgoing mail server
  wechat_api_url: https://qyapi.weixin.qq.com/cgi-bin/  # WeChat Work API URL

inhibit_rules:
  - equal:
      - instance
    source_match:
      alertname: "主机CPU90%"
    target_match:
      alertname: "主机负载过高"
  - equal:
      - instance
    source_match:
      alertname: "mysql运行进程数5分钟增长数>150"
    target_match:
      alertname: "mysql慢查询5分钟100条"
  - equal:
      - instance
    source_match:
      severity: error
    target_match:
      severity: warning
  - equal:
      - instance
    source_match:
      severity: fatal
    target_match:
      severity: error
  - equal:
      - service_name
    source_match:
      severity: error
    target_match:
      severity: warning

receivers:
  - email_configs:  # the "test" receiver
      - html: '{{ template "email.default.html" . }}'  # template used for the mail body
        send_resolved: true
        to: [email protected]  # recipient; separate multiple addresses with commas
    name: test  # receiver name
    wechat_configs:  # see the WeChat Work notes at the end for where these values come from
      - agent_id: 1000002  # application AgentId
        api_secret: hnyU1LTGnJUiBaCp47l3WVQLTEFF5RXyfNO751xlaHa  # application Secret
        corp_id: wwd397231fa801beaa  # WeChat Work corp ID
        send_resolved: true
        to_user: LiuQingShan|liuqs  # WeChat Work account IDs; separate multiple users with |
  - email_configs:  # the default receiver
      - html: '{{ template "email.default.html" . }}'
        send_resolved: true
    name: default_group
    wechat_configs:
      - agent_id: 1000002
        api_secret: hnyU1LTGnJUiBaCp47l3WVQLTEFF5RXyfNO751xlaHa
        corp_id: wwd397231fa801beaa
        send_resolved: true
        to_user: LiuQingShan

route:  # alert routing
  group_by:
    - monitor
  group_interval: 2m
  group_wait: 30s
  receiver: default_group
  repeat_interval: 6h
  routes:
    - continue: true
      match_re:
        instance: 10.8.46.117:9100|10.8.80.126:9100|10.8.32.67:9100|10.8.9.35:9100|10.8.69.81:9100  # instances handled by this route
      receiver: test  # send them to the "test" receiver

templates:
  - /data/monitor/alertmanager/template/*.tmpl  # path of the message templates
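The inhibit_rules mute a target alert while a matching source alert is firing and the labels listed under `equal` have the same values on both. For example, a 主机负载过高 alert is suppressed while 主机CPU90% fires on the same instance. The sketch below is a simplified Python model of that matching, not Alertmanager's implementation. It only handles exact `*_match` values and assumes every alert passed in is firing. The function names are hypothetical.

```python
def matches(labels, required):
    """True if every label in `required` has exactly that value (like source_match/target_match)."""
    return all(labels.get(name) == value for name, value in required.items())


def is_inhibited(target, firing, rules):
    """Simplified model: `target` is muted if another firing alert matches a rule's
    source side, `target` matches its target side, and all `equal` labels agree
    (a label missing on both alerts counts as equal)."""
    for rule in rules:
        if not matches(target, rule["target_match"]):
            continue
        for source in firing:
            if source is target or not matches(source, rule["source_match"]):
                continue
            if all(source.get(n, "") == target.get(n, "") for n in rule["equal"]):
                return True
    return False


# The first and third inhibit_rules from alertmanager.yml.
RULES = [
    {"source_match": {"alertname": "主机CPU90%"},
     "target_match": {"alertname": "主机负载过高"},
     "equal": ["instance"]},
    {"source_match": {"severity": "error"},
     "target_match": {"severity": "warning"},
     "equal": ["instance"]},
]

cpu = {"alertname": "主机CPU90%", "instance": "10.8.9.35:9100", "severity": "warning"}
load_same_host = {"alertname": "主机负载过高", "instance": "10.8.9.35:9100", "severity": "warning"}
load_other_host = {"alertname": "主机负载过高", "instance": "10.8.69.81:9100", "severity": "warning"}
firing = [cpu, load_same_host, load_other_host]

print(is_inhibited(load_same_host, firing, RULES))   # True
print(is_inhibited(load_other_host, firing, RULES))  # False
print(is_inhibited(cpu, firing, RULES))              # False
```

Every rule in host.yml uses `severity: warning`, so the severity-based inhibit rules (error over warning, fatal over error) never apply to host alerts. Only the CPU-over-load rule does.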

d. Alert message templates

cat /data/monitor/alertmanager/template/default.tmpl

{{ define "__alertmanager" }}AlertManager{{ end }}
{{ define "__alertmanagerURL" }}{{ .ExternalURL }}/#/alerts?receiver={{ .Receiver }}{{ end }}
{{ define "__subject" }}[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}] {{ .GroupLabels.SortedPairs.Values | join " " }} {{ if gt (len .CommonLabels) (len .GroupLabels) }}({{ with .CommonLabels.Remove .GroupLabels.Names }}{{ .Values | join " " }}{{ end }}){{ end }}{{ end }}
{{ define "__description" }}{{ end }}

{{ define "__text_alert_list" }}{{ range . }}Labels:
{{ range .Labels.SortedPairs }} - {{ .Name }} = {{ .Value }}
{{ end }}Annotations:
{{ range .Annotations.SortedPairs }} - {{ .Name }} = {{ .Value }}
{{ end }}Source: {{ .GeneratorURL }}
{{ end }}{{ end }}

{{ define "slack.default.title" }}{{ template "__subject" . }}{{ end }}
{{ define "slack.default.username" }}{{ template "__alertmanager" . }}{{ end }}
{{ define "slack.default.fallback" }}{{ template "slack.default.title" . }} | {{ template "slack.default.titlelink" . }}{{ end }}
{{ define "slack.default.pretext" }}{{ end }}
{{ define "slack.default.titlelink" }}{{ template "__alertmanagerURL" . }}{{ end }}
{{ define "slack.default.iconemoji" }}{{ end }}
{{ define "slack.default.iconurl" }}{{ end }}
{{ define "slack.default.text" }}{{ end }}
{{ define "slack.default.footer" }}{{ end }}

{{ define "hipchat.default.from" }}{{ template "__alertmanager" . }}{{ end }}
{{ define "hipchat.default.message" }}{{ template "__subject" . }}{{ end }}

{{ define "pagerduty.default.description" }}{{ template "__subject" . }}{{ end }}
{{ define "pagerduty.default.client" }}{{ template "__alertmanager" . }}{{ end }}
{{ define "pagerduty.default.clientURL" }}{{ template "__alertmanagerURL" . }}{{ end }}
{{ define "pagerduty.default.instances" }}{{ template "__text_alert_list" . }}{{ end }}

{{ define "opsgenie.default.message" }}{{ template "__subject" . }}{{ end }}
{{ define "opsgenie.default.description" }}{{ .CommonAnnotations.SortedPairs.Values | join " " }}
{{ if gt (len .Alerts.Firing) 0 -}}
Alerts Firing:
{{ template "__text_alert_list" .Alerts.Firing }}
{{- end }}
{{ if gt (len .Alerts.Resolved) 0 -}}
Alerts Resolved:
{{ template "__text_alert_list" .Alerts.Resolved }}
{{- end }}
{{- end }}
{{ define "opsgenie.default.source" }}{{ template "__alertmanagerURL" . }}{{ end }}

{{ define "victorops.default.state_message" }}{{ .CommonAnnotations.SortedPairs.Values | join " " }}
{{ if gt (len .Alerts.Firing) 0 -}}
Alerts Firing:
{{ template "__text_alert_list" .Alerts.Firing }}
{{- end }}
{{ if gt (len .Alerts.Resolved) 0 -}}
Alerts Resolved:
{{ template "__text_alert_list" .Alerts.Resolved }}
{{- end }}
{{- end }}
{{ define "victorops.default.entity_display_name" }}{{ template "__subject" . }}{{ end }}
{{ define "victorops.default.monitoring_tool" }}{{ template "__alertmanager" . }}{{ end }}

{{ define "email.default.subject" }}{{ template "__subject" . }}{{ end }}

{{ define "email.default.html" }}
{{ .Alerts | len }} alert{{ if gt (len .Alerts) 1 }}s{{ end }} for {{ range .GroupLabels.SortedPairs }} {{ .Name }}={{ .Value }} {{ end }}
View in {{ template "__alertmanager" . }}
{{ if gt (len .Alerts.Firing) 0 }}[{{ .Alerts.Firing | len }}] Firing{{ end }}
{{ range .Alerts.Firing }}
Labels
{{ range .Labels.SortedPairs }}{{ .Name }} = {{ .Value }}
{{ end }}Start_time: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
{{ if gt (len .Annotations) 0 }}Annotations
{{ range .Annotations.SortedPairs }}{{ .Name }} = {{ .Value }}
{{ end }}{{ end }}
{{ end }}
{{ if gt (len .Alerts.Resolved) 0 }}{{ if gt (len .Alerts.Firing) 0 }}
{{ end }}[{{ .Alerts.Resolved | len }}] Resolved{{ end }}
{{ range .Alerts.Resolved }}
Labels
{{ range .Labels.SortedPairs }}{{ .Name }} = {{ .Value }}
{{ end }}Start_time: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
End_time: {{ .EndsAt.Format "2006-01-02 15:04:05" }}
{{ if gt (len .Annotations) 0 }}Annotations
{{ range .Annotations.SortedPairs }}{{ .Name }} = {{ .Value }}
{{ end }}{{ end }}
{{ end }}
{{ end }}

{{ define "pushover.default.title" }}{{ template "__subject" . }}{{ end }}
{{ define "pushover.default.message" }}{{ .CommonAnnotations.SortedPairs.Values | join " " }}
{{ if gt (len .Alerts.Firing) 0 }}
Alerts Firing:
{{ template "__text_alert_list" .Alerts.Firing }}
{{ end }}
{{ if gt (len .Alerts.Resolved) 0 }}
Alerts Resolved:
{{ template "__text_alert_list" .Alerts.Resolved }}
{{ end }}
{{ end }}
{{ define "pushover.default.url" }}{{ template "__alertmanagerURL" . }}{{ end }}

cat /data/monitor/alertmanager/template/wechat.tmpl

{{ define "wechat.default.message" }}
{{ if gt (len .Alerts.Firing) 0 -}}Firing:
{{ range .Alerts.Firing }}Type: {{ .Labels.alertname }}
Details: {{ .Annotations.description }}
Start: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
======
{{ end }}
{{- end }}
{{ if gt (len .Alerts.Resolved) 0 -}}Resolved:
{{ range .Alerts.Resolved }}Type: {{ .Labels.alertname }}
Details: {{ .Annotations.description }}
Start: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
End: {{ .EndsAt.Format "2006-01-02 15:04:05" }}
======
{{ end }}
{{- end }}
{{ end }}
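alertmanager.yml depends on two template names. The email `html` field calls `email.default.html` explicitly, and `wechat_configs` without a `message` falls back to `wechat.default.message`. Alertmanager ships built-in definitions for both, so if the custom files are missing or the glob in `templates:` does not match them, messages likely fall back to the stock layout without any obvious error. The hypothetical sketch below scans the template glob for `{{ define "..." }}` names and reports any required name that is not defined.

```python
import glob
import re

# Matches {{ define "name" }} and the whitespace-trimming form {{- define "name" -}}.
DEFINE_RE = re.compile(r'\{\{-?\s*define\s+"([^"]+)"\s*-?\}\}')

# Names the alertmanager.yml above depends on.
REQUIRED = {"email.default.html", "wechat.default.message"}


def defined_templates(text):
    """Return the set of template names declared in one file's text."""
    return set(DEFINE_RE.findall(text))


def missing_templates(pattern, required=REQUIRED):
    """Return the required names not defined by any file matching the glob pattern."""
    found = set()
    for path in glob.glob(pattern):
        with open(path, encoding="utf-8") as f:
            found |= defined_templates(f.read())
    return set(required) - found


# Example:
# print(missing_templates("/data/monitor/alertmanager/template/*.tmpl"))  # set() if both are defined
```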

e. In /data/monitor/alertmanager, run sh start.sh to start alertmanager.
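You can test the whole chain (routing, templates, email and WeChat delivery) without waiting for a real problem by pushing a synthetic alert to Alertmanager's HTTP API. The sketch below assumes Alertmanager listens on localhost:9093, as configured in prometheus.yml. `build_test_alert`, `goes_to_test_receiver` and the alert name TestAlert are hypothetical. Older Alertmanager releases accept `/api/v1/alerts`; 0.16 and later also provide `/api/v2/alerts`, and the newest releases have removed v1. The instance label is chosen to match the `match_re` route, so the alert should reach the `test` receiver after group_wait (30s).

```python
import json
import re
import urllib.request
from datetime import datetime, timedelta, timezone

# Same pattern as the match_re route; Alertmanager anchors these regexes,
# so fullmatch mirrors its behaviour.
TEST_ROUTE = re.compile(r"10.8.46.117:9100|10.8.80.126:9100|10.8.32.67:9100|10.8.9.35:9100|10.8.69.81:9100")


def goes_to_test_receiver(instance):
    return TEST_ROUTE.fullmatch(instance) is not None


def build_test_alert(base_url="http://localhost:9093", api="v1", minutes=5):
    """Build a POST that injects one synthetic alert, resolving after `minutes`."""
    now = datetime.now(timezone.utc)
    alert = {
        "labels": {
            "alertname": "TestAlert",       # hypothetical name, not one of the rules
            "instance": "10.8.9.35:9100",  # matches the route above -> receiver "test"
            "severity": "warning",
        },
        "annotations": {"description": "manual test from the alertmanager host"},
        "startsAt": now.isoformat(),
        "endsAt": (now + timedelta(minutes=minutes)).isoformat(),
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/{api}/alerts",
        data=json.dumps([alert]).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Example:
# with urllib.request.urlopen(build_test_alert()) as resp:
#     print(resp.status)
```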

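f. Note: receiving alerts via WeChat

(1) First register a WeChat Work account, then open Apps & Mini Programs and click Create App.

(2) On the Create App page, upload a logo, fill in the required information, and choose which members the app is visible to (who can receive its messages).

(3) Open the newly created monitoring-alert app to see its agent_id (AgentId) and api_secret (Secret).

(4) corp_id: in WeChat Work, click My Company in the menu bar; the Corp ID is shown at the bottom of the page.

(5) to_user: in WeChat Work, click Contacts in the menu bar and click a member to see their account ID. With these values filled in, alerts can be received in WeChat Work.

(6) If you don't want to install the WeChat Work app and would rather receive alerts in regular WeChat, click My Company in WeChat Work, then WeChat Workplace, scan the QR code next to "Invite to follow" with WeChat, and tap Follow. For other people to receive alerts in WeChat as well, share this QR code with them, or find your company account in your own WeChat and recommend it to them.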
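Before putting corp_id, api_secret, agent_id and to_user into alertmanager.yml, you can verify them directly against the WeChat Work API that Alertmanager calls. `gettoken` exchanges corp_id and the app secret for an access token, and `message/send` posts a text message to the listed users. The helper names in this sketch are hypothetical. A non-zero `errcode` in a reply usually points at the value that is wrong.

```python
import json
import urllib.parse
import urllib.request

API = "https://qyapi.weixin.qq.com/cgi-bin/"  # same base as wechat_api_url


def token_url(corp_id, secret):
    """gettoken URL; a valid corp_id/secret pair returns errcode 0 and an access_token."""
    return API + "gettoken?" + urllib.parse.urlencode({"corpid": corp_id, "corpsecret": secret})


def get_token(corp_id, secret, timeout=10):
    """Fetch an access token or raise with the API's error reply."""
    with urllib.request.urlopen(token_url(corp_id, secret), timeout=timeout) as resp:
        reply = json.load(resp)
    if reply.get("errcode") != 0:
        raise RuntimeError(f"gettoken failed: {reply}")
    return reply["access_token"]


def message_request(access_token, agent_id, to_user, content):
    """POST message/send with a plain-text message; to_user may list several IDs joined by |."""
    body = {
        "touser": to_user,
        "msgtype": "text",
        "agentid": agent_id,
        "text": {"content": content},
    }
    return urllib.request.Request(
        API + "message/send?" + urllib.parse.urlencode({"access_token": access_token}),
        data=json.dumps(body, ensure_ascii=False).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Example:
# token = get_token("wwd397231fa801beaa", "<api_secret>")
# with urllib.request.urlopen(message_request(token, 1000002, "LiuQingShan|liuqs", "test")) as resp:
#     print(json.load(resp))
```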