在前面的两篇文章中,介绍了mysql组复制的特点及配置过程,本文演示mysql单组复制下的模拟故障测试。

一、组复制所有成员服务器宕机重启后的恢复

连接所有的mysql实例查询当前的组复制成员情况,状态都是OFFLINE,这种情况下如何恢复组复制?

mysql> select * from performance_schema.replication_group_members; +---------------------------+-----------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+-----------+-------------+-------------+--------------+ | group_replication_applier | | | NULL | OFFLINE | +---------------------------+-----------+-------------+-------------+--------------+ 1 row in set (0.00 sec)

在第一台mysql实例上将group_replication_bootstrap_group设置为ON,然后开启组复制,完成后再设置group_replication_bootstrap_group参数为OFF

mysql> set global group_replication_bootstrap_group=ON; Query OK, 0 rows affected (0.01 sec) mysql> start group_replication; Query OK, 0 rows affected (2.19 sec) mysql> set global group_replication_bootstrap_group=OFF; Query OK, 0 rows affected (0.00 sec) mysql> select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 8b643791-6d30-11e7-986a-000c29d53b31 | vm1 | 3306 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 1 row in set (0.00 sec)

第二台mysql实例上开启组复制

mysql> select * from performance_schema.replication_group_members; +---------------------------+-----------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+-----------+-------------+-------------+--------------+ | group_replication_applier | | | NULL | OFFLINE | +---------------------------+-----------+-------------+-------------+--------------+ 1 row in set (0.00 sec) mysql> change master to master_user='repl',master_password='123456' for channel 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.03 sec) mysql> start group_replication; Query OK, 0 rows affected (6.76 sec) mysql> select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 35c331eb-6d3d-11e7-91e3-000c29e07281 | vm2 | 3306 | ONLINE | | group_replication_applier | 941bce76-6d40-11e7-b2fe-000c2909332c | vm3 | 3306 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 2 rows in set (0.00 sec)

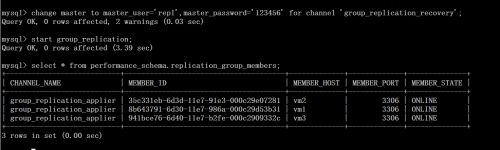

在第三台mysql实例开启组复制

mysql> change master to master_user='repl',master_password='123456' for channel 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.03 sec) mysql> start group_replication; Query OK, 0 rows affected (3.39 sec) mysql> select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 35c331eb-6d3d-11e7-91e3-000c29e07281 | vm2 | 3306 | ONLINE | | group_replication_applier | 8b643791-6d30-11e7-986a-000c29d53b31 | vm1 | 3306 | ONLINE | | group_replication_applier | 941bce76-6d40-11e7-b2fe-000c2909332c | vm3 | 3306 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 3 rows in set (0.00 sec)

一般来说,第一台启动group replication的为组复制中的master角色,我们可以使用如下的SQL进行确认

SELECT * FROM PERFORMANCE_SCHEMA .replication_group_members WHERE member_id = ( SELECT variable_value FROM PERFORMANCE_SCHEMA .global_status WHERE VARIABLE_NAME = 'group_replication_primary_member' );

二、如果master宕机,组复制会如何?下面我们来测试一下

首先确认当前的master主机是VM2

[root@vm2 ~]# service mysqld stop Shutting down MySQL............. SUCCESS!

[root@vm2 ~]# tail -f /mydata/vm2.err 2017-10-27T15:42:19.×××27Z 0 [Note] Giving 7 client threads a chance to die gracefully 2017-10-27T15:42:19.999375Z 0 [Note] Shutting down slave threads 2017-10-27T15:42:22.000341Z 0 [Note] Forcefully disconnecting 7 remaining clients 2017-10-27T15:42:22.000556Z 0 [Note] Plugin group_replication reported: 'Going to wait for view modification' 2017-10-27T15:42:22.002961Z 0 [Note] Plugin group_replication reported: 'getstart group_id 4317e324' 2017-10-27T15:42:25.462993Z 0 [Note] Plugin group_replication reported: 'state 4345 action xa_terminate' 2017-10-27T15:42:25.463472Z 0 [Note] Plugin group_replication reported: 'new state x_start' 2017-10-27T15:42:25.463514Z 0 [Note] Plugin group_replication reported: 'state 4272 action xa_exit' 2017-10-27T15:42:25.463650Z 0 [Note] Plugin group_replication reported: 'Exiting xcom thread' 2017-10-27T15:42:25.463675Z 0 [Note] Plugin group_replication reported: 'new state x_start' 2017-10-27T15:42:30.555581Z 0 [Note] Plugin group_replication reported: 'auto_increment_increment is reset to 1' 2017-10-27T15:42:30.555639Z 0 [Note] Plugin group_replication reported: 'auto_increment_offset is reset to 1' 2017-10-27T15:42:30.556496Z 19 [Note] Error reading relay log event for channel 'group_replication_applier': slave SQL thread was killed 2017-10-27T15:42:30.564277Z 16 [Note] Plugin group_replication reported: 'The group replication applier thread was killed' 2017-10-27T15:42:30.565453Z 0 [Note] Event Scheduler: Purging the queue. 0 events 2017-10-27T15:42:30.613823Z 0 [Note] Binlog end 2017-10-27T15:42:30.619483Z 0 [Note] Shutting down plugin 'group_replication' 2017-10-27T15:42:30.619582Z 0 [Note] Plugin group_replication reported: 'All Group Replication server observers have been successfully unregistered' 2017-10-27T15:42:30.620753Z 0 [Note] Shutting down plugin 'ngram' 2017-10-27T15:42:30.620801Z 0 [Note] Shutting down plugin 'BLACKHOLE' 2017-10-27T15:42:30.620820Z 0 [Note] Shutting down plugin 'ARCHIVE' 2017-10-27T15:42:30.620832Z 0 [Note] Shutting down plugin 'partition' 2017-10-27T15:42:30.620841Z 0 [Note] Shutting down plugin 'INNODB_SYS_VIRTUAL' 2017-10-27T15:42:30.620853Z 0 [Note] Shutting down plugin 'INNODB_SYS_DATAFILES' 2017-10-27T15:42:30.620937Z 0 [Note] Shutting down plugin 'INNODB_SYS_TABLESPACES' 2017-10-27T15:42:30.620946Z 0 [Note] Shutting down plugin 'INNODB_SYS_FOREIGN_COLS' 2017-10-27T15:42:30.620951Z 0 [Note] Shutting down plugin 'INNODB_SYS_FOREIGN' 2017-10-27T15:42:30.620957Z 0 [Note] Shutting down plugin 'INNODB_SYS_FIELDS' 2017-10-27T15:42:30.620961Z 0 [Note] Shutting down plugin 'INNODB_SYS_COLUMNS' 2017-10-27T15:42:30.620966Z 0 [Note] Shutting down plugin 'INNODB_SYS_INDEXES' 2017-10-27T15:42:30.620971Z 0 [Note] Shutting down plugin 'INNODB_SYS_TABLESTATS' 2017-10-27T15:42:30.620976Z 0 [Note] Shutting down plugin 'INNODB_SYS_TABLES' 2017-10-27T15:42:30.620980Z 0 [Note] Shutting down plugin 'INNODB_FT_INDEX_TABLE' 2017-10-27T15:42:30.620985Z 0 [Note] Shutting down plugin 'INNODB_FT_INDEX_CACHE' 2017-10-27T15:42:30.620990Z 0 [Note] Shutting down plugin 'INNODB_FT_CONFIG' 2017-10-27T15:42:30.620994Z 0 [Note] Shutting down plugin 'INNODB_FT_BEING_DELETED' 2017-10-27T15:42:30.620999Z 0 [Note] Shutting down plugin 'INNODB_FT_DELETED' 2017-10-27T15:42:30.621003Z 0 [Note] Shutting down plugin 'INNODB_FT_DEFAULT_STOPWORD' 2017-10-27T15:42:30.621008Z 0 [Note] Shutting down plugin 'INNODB_METRICS' 2017-10-27T15:42:30.621013Z 0 [Note] Shutting down plugin 'INNODB_TEMP_TABLE_INFO' 2017-10-27T15:42:30.621017Z 0 [Note] Shutting down plugin 'INNODB_BUFFER_POOL_STATS' 2017-10-27T15:42:30.621022Z 0 [Note] Shutting down plugin 'INNODB_BUFFER_PAGE_LRU' 2017-10-27T15:42:30.621027Z 0 [Note] Shutting down plugin 'INNODB_BUFFER_PAGE' 2017-10-27T15:42:30.621032Z 0 [Note] Shutting down plugin 'INNODB_CMP_PER_INDEX_RESET' 2017-10-27T15:42:30.621042Z 0 [Note] Shutting down plugin 'INNODB_CMP_PER_INDEX' 2017-10-27T15:42:30.621051Z 0 [Note] Shutting down plugin 'INNODB_CMPMEM_RESET' 2017-10-27T15:42:30.621056Z 0 [Note] Shutting down plugin 'INNODB_CMPMEM' 2017-10-27T15:42:30.621061Z 0 [Note] Shutting down plugin 'INNODB_CMP_RESET' 2017-10-27T15:42:30.621066Z 0 [Note] Shutting down plugin 'INNODB_CMP' 2017-10-27T15:42:30.621071Z 0 [Note] Shutting down plugin 'INNODB_LOCK_WAITS' 2017-10-27T15:42:30.621076Z 0 [Note] Shutting down plugin 'INNODB_LOCKS' 2017-10-27T15:42:30.621081Z 0 [Note] Shutting down plugin 'INNODB_TRX' 2017-10-27T15:42:30.621085Z 0 [Note] Shutting down plugin 'InnoDB' 2017-10-27T15:42:30.621309Z 0 [Note] InnoDB: FTS optimize thread exiting. 2017-10-27T15:42:30.622401Z 0 [Note] InnoDB: Starting shutdown... 2017-10-27T15:42:30.723215Z 0 [Note] InnoDB: Dumping buffer pool(s) to /mydata/ib_buffer_pool 2017-10-27T15:42:30.723542Z 0 [Note] InnoDB: Buffer pool(s) dump completed at 171027 11:42:30 2017-10-27T15:42:32.472064Z 0 [Note] InnoDB: Shutdown completed; log sequence number 2767811 2017-10-27T15:42:32.474350Z 0 [Note] InnoDB: Removed temporary tablespace data file: "ibtmp1" 2017-10-27T15:42:32.474373Z 0 [Note] Shutting down plugin 'MEMORY' 2017-10-27T15:42:32.474385Z 0 [Note] Shutting down plugin 'PERFORMANCE_SCHEMA' 2017-10-27T15:42:32.474419Z 0 [Note] Shutting down plugin 'MRG_MYISAM' 2017-10-27T15:42:32.474431Z 0 [Note] Shutting down plugin 'MyISAM' 2017-10-27T15:42:32.474459Z 0 [Note] Shutting down plugin 'CSV' 2017-10-27T15:42:32.474471Z 0 [Note] Shutting down plugin 'sha256_password' 2017-10-27T15:42:32.474481Z 0 [Note] Shutting down plugin 'mysql_native_password' 2017-10-27T15:42:32.475429Z 0 [Note] Shutting down plugin 'binlog' 2017-10-27T15:42:32.477356Z 0 [Note] /usr/local/mysql/bin/mysqld: Shutdown complete

vm1主机的日志

[root@vm1 ~]# tail -f /mydata/vm1.err 2017-10-27T15:42:22.634326Z 0 [Note] Plugin group_replication reported: 'getstart group_id 4317e324' 2017-10-27T15:42:23.095198Z 0 [Note] Plugin group_replication reported: 'A new primary was elected, enabled conflict detection until the new primary applies all relay logs' 2017-10-27T15:42:23.095627Z 0 [Note] Plugin group_replication reported: 'Unsetting super_read_only.' 2017-10-27T15:42:23.095987Z 5 [Note] Plugin group_replication reported: 'A new primary was elected, enabled conflict detection until the new primary applies all relay logs'

vm3主机的日志

[root@vm3 ~]# tail -f /mydata/vm3.err 2017-10-27T15:42:22.235922Z 0 [Note] Plugin group_replication reported: 'getstart group_id 4317e324' 2017-10-27T15:42:22.696811Z 0 [Note] Plugin group_replication reported: 'A new primary was elected, enabled conflict detection until the new primary applies all relay logs' 2017-10-27T15:42:22.697087Z 0 [Note] Plugin group_replication reported: 'Setting super_read_only.' 2017-10-27T15:42:22.697303Z 5 [Note] Plugin group_replication reported: 'A new primary was elected, enabled conflict detection until the new primary applies all relay logs'

可以看到这个时候VM1自动提升为主了,且写入的数据可以继续同步到vm3,vm3的角色为slave,不能写入数据

mysql> use yang Database changed mysql> insert into t1 values (2,'two'); Query OK, 1 row affected (0.03 sec) mysql> select * from t1; +----+------+ | id | name | +----+------+ | 1 | one | | 2 | two | +----+------+ 2 rows in set (0.00 sec)

mysql> select * from yang.t1; +----+------+ | id | name | +----+------+ | 1 | one | | 2 | two | +----+------+ 2 rows in set (0.00 sec) mysql> insert into yang.t1 values (3,'three'); ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

如果此时vm1也跪了会如何呢?

[root@vm1 ~]# service mysqld stop Shutting down MySQL............. SUCCESS!

观察vm3的日志

[root@vm3 ~]# tail -f /mydata/vm3.err 2017-10-27T15:42:22.235922Z 0 [Note] Plugin group_replication reported: 'getstart group_id 4317e324' 2017-10-27T15:42:22.696811Z 0 [Note] Plugin group_replication reported: 'A new primary was elected, enabled conflict detection until the new primary applies all relay logs' 2017-10-27T15:42:22.697087Z 0 [Note] Plugin group_replication reported: 'Setting super_read_only.' 2017-10-27T15:42:22.697303Z 5 [Note] Plugin group_replication reported: 'A new primary was elected, enabled conflict detection until the new primary applies all relay logs' 2017-10-27T15:46:54.618998Z 5 [Note] Plugin group_replication reported: 'Primary had applied all relay logs, disabled conflict detection' 2017-10-27T15:49:57.546061Z 0 [Note] Plugin group_replication reported: 'getstart group_id 4317e324' 2017-10-27T15:49:58.612942Z 0 [Note] Plugin group_replication reported: 'Unsetting super_read_only.' 2017-10-27T15:49:58.613092Z 5 [Note] Plugin group_replication reported: 'A new primary was elected, enabled conflict detection until the new primary applies all relay logs'

VM3写入测试

mysql> select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 941bce76-6d40-11e7-b2fe-000c2909332c | vm3 | 3306 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 1 row in set (0.00 sec) mysql> insert into yang.t1 values (3,'three'); Query OK, 1 row affected (0.02 sec) mysql> select * from yang.t1; +----+-------+ | id | name | +----+-------+ | 1 | one | | 2 | two | | 3 | three | +----+-------+ 3 rows in set (0.00 sec)

三、将VM1和VM2恢复后会如何?我们来测试一下

在最早宕机VM2查询数据是否正常

四、总结

通过上述简单的测试,发现mysql的组复制还是挺好用的,3台mysql实例组成的组复制结构下,只要有1台主机存活,整个mysql服务就可用,在故障主机恢复之后,组复制会自动同步数据,恢复组复制状态。

但在实际的使用过程中,需要考虑到客户端或者数据库中间件的连接问题。因为在单组复制模式下,slave是自读的,所有的写入请求都通过master,如果master宕机,程序或者客户端、数据库中间件读写分离的连接如何平滑处理?