Introduction

KeepAlived is an implementation of the VRRP (Virtual Router Redundancy Protocol) network protocol.

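KeepAlived is a service used in Linux clusters to provide high availability; its purpose is to eliminate single points of failure. Concretely, it monitors the state of the servers. If a web server goes down or starts malfunctioning, KeepAlived detects it, removes the faulty server from the pool, and lets the remaining servers take over its work. Once the server is healthy again, KeepAlived automatically adds it back to the pool. All of this happens without manual intervention; the only manual task is repairing the failed server.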

How KeepAlived works:

KeepAlived's working principle is easiest to understand through the VRRP protocol.

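VRRP (Virtual Router Redundancy Protocol) is a fault-tolerance protocol. When a host's next-hop router fails, a backup router takes over for the failed router, keeping network communication continuous and reliable. The participating routers together form one virtual router, made up of one Master router and one or more Backup routers. Hosts use the virtual router as their default gateway. A virtual router can own one or more IP addresses.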
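To make the Master/Backup election concrete, here is a minimal shell sketch of the basic VRRP rule as described in RFC 3768 / RFC 5798: the router with the highest priority becomes Master, and on a priority tie the router with the numerically higher primary IP address wins. It is a simplification that ignores advertisement timers, preemption settings and the special priorities 0 and 255; `elect_master` is a hypothetical helper, not part of keepalived.

```shell
#!/bin/bash
# Simplified VRRP Master election (after RFC 3768 / RFC 5798):
# highest priority wins; on a priority tie, the router whose primary
# IPv4 address is numerically higher wins.
# Ignores timers, preemption settings and the special priorities 0 and 255.

# elect_master: reads "<name> <priority> <primary-ip>" lines on stdin,
# prints the name of the router that would become Master.
elect_master() {
  awk '
    # Turn a dotted-quad address into one number so it compares numerically.
    function ipnum(ip,  o) {
      split(ip, o, ".")
      return ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]
    }
    NF >= 3 {
      n = ipnum($3)
      if (best == "" || $2 + 0 > bp || ($2 + 0 == bp && n > bi)) {
        best = $1; bp = $2 + 0; bi = n
      }
    }
    END { print best }
  '
}
```

For example, `printf '%s\n' 'LVS1 100 192.168.1.11' 'LVS2 90 192.168.1.12' | elect_master` prints LVS1; the two addresses are hypothetical.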

Lab: a highly available LVS based on the KeepAlived dual-master model

Requirements analysis

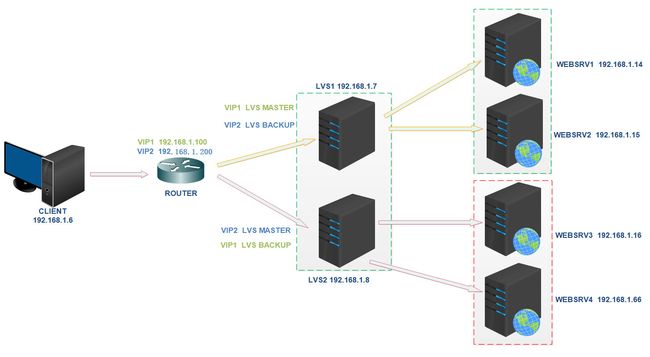

As shown in the figure above, each site is reached through its own VIP:

| FQDN | IP |
|---|---|
| images.king.com | VIP1 192.168.1.100 |
| app.king.com | VIP2 192.168.1.200 |

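When a client accesses images.king.com, the request goes through VIP1 and is ultimately served by WEBSRV1 and WEBSRV2; when a client accesses app.king.com, the request goes through VIP2 and is served by WEBSRV3 and WEBSRV4. For either site, a single LVS server can load-balance by dispatching front-end requests to the two backend web servers, but a single LVS is itself a single point of failure, so two LVS servers combined with KeepAlived are used to provide high availability. The company, however, serves two sites. Giving each site its own pair of LVS servers would require four LVS servers, which is costly, and two of them would sit idle under normal operation. A KeepAlived + LVS dual-master model meets the requirement with only two.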

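In the dual-master model, LVS1 is MASTER and LVS2 is BACKUP for VIP1, so VIP1 floats on LVS1 and LVS1 schedules WEBSRV1 and WEBSRV2. For VIP2, LVS2 is MASTER and LVS1 is BACKUP, so VIP2 floats on LVS2 and LVS2 schedules WEBSRV3 and WEBSRV4. If either LVS fails, its VIP floats to the other LVS, and the surviving LVS takes over the two backend web servers the failed one was responsible for. That is the overall approach.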
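The failover behaviour just described can be tabulated with a small sketch. It hard-codes the priorities used later in this lab's keepalived.conf files (VI_1: LVS1 100, LVS2 90; VI_2: LVS1 80, LVS2 100) and assumes keepalived's default preemption and distinct priorities per instance; `holder` and `placement` are hypothetical helper names.

```shell
#!/bin/bash
# Where VIP1/VIP2 end up in the dual-master model, given which directors are up.
# Priorities mirror this lab's keepalived configs:
#   VI_1 (VIP1 192.168.1.100): LVS1=100, LVS2=90
#   VI_2 (VIP2 192.168.1.200): LVS1=80,  LVS2=100
# Assumes preemption is enabled (keepalived's default) and priorities differ.

# holder <prio_lvs1> <prio_lvs2> <lvs1_up> <lvs2_up>: prints LVS1, LVS2 or none
holder() {
  p1=$1 p2=$2 up1=$3 up2=$4
  if [ "$up1" = 1 ] && { [ "$up2" != 1 ] || [ "$p1" -gt "$p2" ]; }; then
    echo LVS1          # LVS1 is alive and either alone or higher priority
  elif [ "$up2" = 1 ]; then
    echo LVS2          # otherwise the surviving/higher LVS2 holds the VIP
  else
    echo none          # both directors down: the VIP is unreachable
  fi
}

# placement <lvs1_up> <lvs2_up>: prints "VIP1=<node> VIP2=<node>"
placement() {
  echo "VIP1=$(holder 100 90 "$1" "$2") VIP2=$(holder 80 100 "$1" "$2")"
}
```

With both directors up, `placement 1 1` prints `VIP1=LVS1 VIP2=LVS2`; with LVS1 down, `placement 0 1` prints `VIP1=LVS2 VIP2=LVS2`, which is the situation checked in test 2 below.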

Lab environment preparation

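Make sure the firewall and SELinux are disabled on all virtual machines (CentOS 7.3).

The clocks of all nodes must be synchronized.

Have one machine, A, synchronize its time with a commonly used public NTP server in China, and have all other machines in the organization synchronize with A.

Nodes communicate with one another by hostname; using the /etc/hosts file for this is recommended.

The root users on all nodes can reach one another over ssh with key-based authentication.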
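For the time-synchronization requirement, one possible setup on CentOS 7 uses chronyd, which the distribution normally installs by default. The sketch below prints a /etc/chrony.conf for machine A and for every other node; the upstream server (cn.pool.ntp.org), machine A's address (192.168.1.10) and the subnet are assumptions to replace with your own values, and `chrony_conf` is a hypothetical helper.

```shell
#!/bin/bash
# Generate /etc/chrony.conf content for the two time-sync roles.
# Assumptions (hypothetical, adjust to your network):
#   UPSTREAM - public NTP server that machine A syncs from
#   TIME_A   - address of machine A, which all other nodes sync from
#   SUBNET   - lab subnet allowed to query machine A
UPSTREAM=${UPSTREAM:-cn.pool.ntp.org}
TIME_A=${TIME_A:-192.168.1.10}
SUBNET=${SUBNET:-192.168.1.0/24}

# chrony_conf <a|client>: prints a chrony.conf for that role
chrony_conf() {
  case $1 in
    a)
      echo "server $UPSTREAM iburst"
      echo "allow $SUBNET"      # serve time to the other lab nodes
      echo "local stratum 10"   # keep serving even if the upstream is unreachable
      ;;
    client)
      echo "server $TIME_A iburst"
      ;;
    *)
      echo "Usage: chrony_conf a|client" >&2
      return 1
      ;;
  esac
  echo "driftfile /var/lib/chrony/drift"
  echo "makestep 1.0 3"         # step the clock if it is far off during the first updates
}
```

On machine A this would be used as `chrony_conf a > /etc/chrony.conf && systemctl restart chronyd`, on the other nodes with `client` instead; `chronyc sources` then shows whether each node is syncing from the expected source.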

Lab procedure

1. Configure the CLIENT

vim /etc/hosts

# Add the following two lines

192.168.1.100 images.king.com

192.168.1.200 app.king.com
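The same entries can be added by script when the setup may be rerun. A minimal sketch, assuming idempotence is wanted: `add_host` (a hypothetical helper) appends an entry only when the hostname is not already mapped in the file, so repeated runs do not pile up duplicate lines. The file path is a parameter so it can be tried on a scratch copy first.

```shell
#!/bin/bash
# add_host <hosts-file> <ip> <name>
# Append "<ip> <name>" unless <name> already appears as a hostname field.
add_host() {
  file=$1 ip=$2 name=$3
  # Fields 2..NF hold hostnames; skip comment lines; exit 0 only if found.
  if awk -v n="$name" '
       !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$file" 2>/dev/null; then
    echo "$name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

On the CLIENT this would be `add_host /etc/hosts 192.168.1.100 images.king.com` and `add_host /etc/hosts 192.168.1.200 app.king.com`.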

2. On WEBSRV1 and WEBSRV2

WEBSRV2 is configured almost exactly like WEBSRV1, so WEBSRV1 is used as the example below.

# Install httpd if it is not already installed

yum install httpd

# Start the service

systemctl start httpd

# Prepare the test page (on WEBSRV2, replace WEBSRV1 with WEBSRV2)

echo "WEBSRV1 images" > /var/www/html/index.html

# Prepare the RS script

vim lvs_dr_rs.sh

#!/bin/bash

vip=192.168.1.100

mask='255.255.255.255'

dev=lo:1

case $1 in

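start)

# arp_ignore=1: answer ARP requests only for addresses configured on the receiving interface

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

# arp_announce=2: always use the best local address as the ARP source, never the VIP on lo

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

# Bind the VIP to lo:1 with a /32 mask so the RS accepts packets addressed to the VIP

ifconfig $dev $vip netmask $mask broadcast $vip up

route add -host $vip dev $dev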

echo "The RS Server is Ready!"

;;

stop)

ifconfig $dev down

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "The RS Server is Canceled!"

;;

*)

echo "Usage: $(basename $0) start|stop"

exit 1

;;

esac

# Run the RS script

bash lvs_dr_rs.sh start

# Check that VIP 192.168.1.100 is now bound to the loopback interface

ip a
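Besides `ip a`, it can help to confirm that the ARP parameters actually took effect. A minimal sketch: `check_arp` (a hypothetical helper) reads arp_ignore and arp_announce for `all` and `lo` and reports any value that differs from what the start branch writes (1 and 2). The /proc root is a parameter only so the check can be exercised against a fake directory tree.

```shell
#!/bin/bash
# check_arp [proc_root]: returns 0 if arp_ignore=1 and arp_announce=2
# on both "all" and "lo"; prints each mismatch to stderr.
check_arp() {
  root=${1:-/proc}
  rc=0
  for iface in all lo; do
    for key in arp_ignore:1 arp_announce:2; do
      param=${key%%:*}          # e.g. arp_ignore
      want=${key#*:}            # e.g. 1
      got=$(cat "$root/sys/net/ipv4/conf/$iface/$param" 2>/dev/null)
      if [ "$got" != "$want" ]; then
        echo "$iface/$param is '${got:-missing}', expected $want" >&2
        rc=1
      fi
    done
  done
  return $rc
}
```

On a real server, `check_arp && echo "ARP settings OK"` after `bash lvs_dr_rs.sh start`.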

3. On WEBSRV3 and WEBSRV4

WEBSRV4 is configured almost exactly like WEBSRV3, so WEBSRV3 is used as the example below.

# Install httpd if it is not already installed

yum install httpd

# Start the service

systemctl start httpd

# Prepare the test page (on WEBSRV4, replace WEBSRV3 with WEBSRV4)

echo "WEBSRV3 app" > /var/www/html/index.html

# Prepare the RS script

vim lvs_dr_rs.sh

#!/bin/bash

vip=192.168.1.200

mask='255.255.255.255'

dev=lo:1

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig $dev $vip netmask $mask broadcast $vip up

route add -host $vip dev $dev

echo "The RS Server is Ready!"

;;

stop)

ifconfig $dev down

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "The RS Server is Canceled!"

;;

*)

echo "Usage: $(basename $0) start|stop"

exit 1

;;

esac

# Run the RS script

bash lvs_dr_rs.sh start

# Check that VIP 192.168.1.200 is now bound to the loopback interface

ip a
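The two RS scripts differ only in the value of vip. One possible refactor, sketched below, passes the VIP as a second argument so WEBSRV1 to WEBSRV4 can share a single script. `lvs_dr_rs`, `run`, `set_proc` and the DRY_RUN switch are hypothetical additions; with DRY_RUN set, each action is printed instead of executed, which lets you preview what the script would do.

```shell
#!/bin/bash
# Shared RS script: lvs_dr_rs <start|stop> <vip>
# Set DRY_RUN=1 to print the actions instead of executing them.

# run a command, or just print it in dry-run mode
run() {
  if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# set_proc <path> <value>: write a kernel parameter (or print it in dry-run mode)
set_proc() {
  if [ -n "$DRY_RUN" ]; then echo "echo $2 > $1"; else echo "$2" > "$1"; fi
}

lvs_dr_rs() {
  action=$1 vip=$2 dev=lo:1 mask=255.255.255.255
  case $action in
    start)
      if [ -z "$vip" ]; then echo "Usage: lvs_dr_rs start <vip>" >&2; return 1; fi
      for i in all lo; do
        set_proc /proc/sys/net/ipv4/conf/$i/arp_ignore 1
        set_proc /proc/sys/net/ipv4/conf/$i/arp_announce 2
      done
      run ifconfig "$dev" "$vip" netmask "$mask" broadcast "$vip" up
      run route add -host "$vip" dev "$dev"
      ;;
    stop)
      run ifconfig "$dev" down
      for i in all lo; do
        set_proc /proc/sys/net/ipv4/conf/$i/arp_ignore 0
        set_proc /proc/sys/net/ipv4/conf/$i/arp_announce 0
      done
      ;;
    *)
      echo "Usage: lvs_dr_rs start|stop <vip>" >&2
      return 1
      ;;
  esac
}

# Only act when invoked with arguments, e.g. "bash lvs_dr_rs.sh start 192.168.1.200"
if [ $# -gt 0 ]; then lvs_dr_rs "$@"; fi
```

On WEBSRV3/WEBSRV4 this would be run as `bash lvs_dr_rs.sh start 192.168.1.200`, and `DRY_RUN=1 bash lvs_dr_rs.sh start 192.168.1.100` previews the WEBSRV1/WEBSRV2 variant without touching the system.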

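4. Configure KeepAlived on LVS1

# Install ipvsadm, used to view the IPVS rules

yum install ipvsadm

# Check the rules; the table is still empty at this point

ipvsadm -Ln

# Install KeepAlived

yum install keepalived

# Configure keepalived; /etc/keepalived/keepalived.conf on LVS1 contains the following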

cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from node1@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node1

vrrp_mcast_group4 224.100.100.100

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 88

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 411fa9f6

}

virtual_ipaddress {

192.168.1.100/24

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

virtual_server 192.168.1.100 80 {

delay_loop 3

lb_algo wrr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.1.14 80 {

weight 2

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.1.15 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens33

virtual_router_id 66

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 123fa9f6

}

virtual_ipaddress {

192.168.1.200/24

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

virtual_server 192.168.1.200 80 {

delay_loop 3

lb_algo rr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.1.16 80 {

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.1.66 80 {

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
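Each real_server above is health-checked with HTTP_GET: keepalived requests /index.html and expects status 200, using connect_timeout 3, nb_get_retry 3 and delay_before_retry 3, and repeats the check every delay_loop (3 s). When a WEBSRV is marked down unexpectedly, a rough manual equivalent with curl can help narrow things down. The sketch below only approximates those semantics and is not keepalived's implementation; `http_check` is a hypothetical helper.

```shell
#!/bin/bash
# http_check <url> [want_status] [tries] [delay] [timeout]
# Approximates an HTTP_GET check: up to <tries> attempts, <delay> seconds
# apart, each with a connect timeout; succeeds on the expected status code.
http_check() {
  url=$1 want=${2:-200} tries=${3:-3} delay=${4:-3} timeout=${5:-3}
  attempt=1 code=""
  while [ "$attempt" -le "$tries" ]; do
    # -w '%{http_code}' prints the status (000 if the connection failed)
    code=$(curl -s -o /dev/null -w '%{http_code}' --connect-timeout "$timeout" "$url")
    if [ "$code" = "$want" ]; then
      echo "UP $url (HTTP $code)"
      return 0
    fi
    if [ "$attempt" -lt "$tries" ]; then sleep "$delay"; fi
    attempt=$((attempt + 1))
  done
  echo "DOWN $url (last status: ${code:-none})"
  return 1
}
```

From LVS1, `http_check http://192.168.1.14/index.html` checks WEBSRV1 with the same parameters as the configuration above.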

# Email notification script for MASTER/BACKUP/FAULT transitions (the mail command is provided by the mailx package)

cat /etc/keepalived/notify.sh

#!/bin/bash

contact='root@localhost'

notify() {

mailsubject="$(hostname) to be $1, vip floating"

mailbody="$(date +'%F %T'): vrrp transition, $(hostname) changed to be $1"

echo "$mailbody" | mail -s "$mailsubject" "$contact"

}

case $1 in

master)

notify master

;;

backup)

notify backup

;;

fault)

notify fault

;;

*)

echo "Usage: $(basename $0) {master|backup|fault}"

exit 1

;;

esac

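# Make the notification script executable so keepalived can run it

chmod +x /etc/keepalived/notify.sh

# Write keepalived logs to a separate file: edit the corresponding line in /etc/sysconfig/keepalived (-D enables detailed logging, -S 2 logs to syslog facility local2)

KEEPALIVED_OPTIONS="-D -S 2"

# Add the following line to /etc/rsyslog.conf

local2.* /var/log/keepalived.log

# Restart the logging service

systemctl restart rsyslog

# Prepare the sorry server

yum install httpd

systemctl start httpd

echo sorry,server > /var/www/html/index.html

# Start the keepalived service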

systemctl start keepalived
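Once keepalived is running, `ipvsadm -Ln` should list both virtual services with their real servers. The sketch below (a hypothetical helper) extracts the real servers of one virtual service from that output, which is convenient for watching a WEBSRV drop out in test 3 or the sorry server appear in test 4. The parsing assumes ipvsadm's usual layout: a `TCP  <vip>:<port> <scheduler>` line followed by `  -> <rip>:<port> <forward> <weight> ...` lines.

```shell
#!/bin/bash
# real_servers <vip:port>: reads `ipvsadm -Ln` output on stdin and prints
# "<rip:port> <forward> <weight>" for each real server of that virtual service.
real_servers() {
  awk -v vs="$1" '
    # A protocol line starts a new virtual service; remember whether it is ours.
    $1 == "TCP" || $1 == "UDP" || $1 == "FWM" { in_vs = ($2 == vs); next }
    # Real-server lines start with "->" (the column header is skipped
    # because it appears before any virtual service).
    in_vs && $1 == "->" { print $2, $3, $4 }
  '
}
```

On LVS1: `ipvsadm -Ln | real_servers 192.168.1.100:80`.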

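5. Configure KeepAlived on LVS2

LVS2 is prepared the same way as LVS1 (ipvsadm, keepalived, notify.sh, logging and the sorry server); only the KeepAlived configuration differs, and it is shown below.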

cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from node2@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node2

vrrp_mcast_group4 224.100.100.100

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 88

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 411fa9f6

}

virtual_ipaddress {

192.168.1.100/24

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

virtual_server 192.168.1.100 80 {

delay_loop 3

lb_algo wrr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.1.14 80 {

weight 2

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.1.15 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

vrrp_instance VI_2 {

state MASTER

interface ens33

virtual_router_id 66

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123fa9f6

}

virtual_ipaddress {

192.168.1.200/24

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

virtual_server 192.168.1.200 80 {

delay_loop 3

lb_algo rr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.1.16 80 {

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.1.66 80 {

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
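Because the dual-master setup depends on the two files agreeing, a small consistency check can catch typos before the failover tests. For each vrrp_instance, virtual_router_id and auth_pass must be identical on LVS1 and LVS2, while state should differ. The sketch below is a rough awk scan, not keepalived's own parser, and assumes each block's opening brace sits on the same line as its keyword, as in the files above; `vrrp_summary` and `compare_peers` are hypothetical names, and it only reports instances present in the second file.

```shell
#!/bin/bash
# vrrp_summary <conf>: prints "<instance> <state> <vrid> <priority> <auth_pass>"
vrrp_summary() {
  awk '
    $1 == "vrrp_instance" { name = $2; state = vrid = prio = pass = ""; depth = 0 }
    name != "" {
      if ($1 == "state") state = $2
      else if ($1 == "virtual_router_id") vrid = $2
      else if ($1 == "priority") prio = $2
      else if ($1 == "auth_pass") pass = $2
      # Track brace depth to find where the instance block ends.
      depth += gsub(/[{]/, "{") - gsub(/[}]/, "}")
      if (depth == 0) { print name, state, vrid, prio, pass; name = "" }
    }
  ' "$1"
}

# compare_peers <conf-lvs1> <conf-lvs2>: prints "<instance> ok" or a mismatch list
compare_peers() {
  { vrrp_summary "$1"; echo "--"; vrrp_summary "$2"; } | awk '
    $0 == "--" { second = 1; next }
    !second { st[$1] = $2; vr[$1] = $3; pw[$1] = $5; next }
    {
      if (!($1 in vr)) { print $1, "MISSING on first node"; next }
      p = ""
      if (vr[$1] != $3) p = p " vrid"
      if (pw[$1] != $5) p = p " auth_pass"
      if (st[$1] == $2) p = p " same-state"
      if (p == "") print $1, "ok"; else print $1, "MISMATCH:" p
    }'
}
```

With ssh keys in place, LVS2's file can be copied to LVS1 (for example `scp node2:/etc/keepalived/keepalived.conf /tmp/lvs2.conf`, where the hostname node2 is assumed from its router_id) and checked with `compare_peers /etc/keepalived/keepalived.conf /tmp/lvs2.conf`.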

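6. Testing from the CLIENT

Test 1:

for i in {1..15}; do curl images.king.com; done

for i in {1..15}; do curl app.king.com; done

Requests are dispatched according to the configured scheduling algorithms: wrr with weights 2:1 for images.king.com and rr for app.king.com.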
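To check the distribution rather than eyeball it, the responses can be counted. With the weights configured above, the counts should come out close to 2:1 for images.king.com and 1:1 for app.king.com; other traffic hitting the VIPs at the same time can skew them. `tally` is a hypothetical helper.

```shell
#!/bin/bash
# tally: reads one response body per line on stdin and prints
# "<count> <body>", most frequent first.
tally() {
  sort | uniq -c | sort -rn | sed 's/^ *//'
}
```

For example: `for i in $(seq 15); do curl -s images.king.com; done | tally`.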

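Test 2:

for i in {1..50}; do sleep 0.5; curl images.king.com; done

for i in {1..50}; do sleep 0.5; curl app.king.com; done

While the loops run, disconnect the network on LVS1 or LVS2: both sites remain reachable.

At this point, VIP1 and VIP2 are both on the LVS that is still working.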
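During test 2 it is useful to see how long the switchover actually takes. This variant of the loop (hypothetical helper `probe`) timestamps every request and prints FAIL when a request does not complete within one second (`curl -m 1`), so the failover gap shows up as a run of FAIL lines.

```shell
#!/bin/bash
# probe <url> <count> <interval-seconds>
# Prints "<HH:MM:SS> <body>" for each successful request, "<HH:MM:SS> FAIL" otherwise.
probe() {
  url=$1 count=$2 interval=$3 i=1
  while [ "$i" -le "$count" ]; do
    if body=$(curl -s -m 1 "$url"); then
      echo "$(date +%T) $body"
    else
      echo "$(date +%T) FAIL"
    fi
    sleep "$interval"
    i=$((i + 1))
  done
}
```

Run `probe images.king.com 50 0.5` on the CLIENT while taking one director offline; afterwards, `ip addr show ens33` on the surviving director should list both VIPs.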

Test 3:

for i in {1..50}; do sleep 0.5; curl images.king.com; done

Stop WEBSRV1 or WEBSRV2: images.king.com remains reachable.

for i in {1..50}; do sleep 0.5; curl app.king.com; done

Stop WEBSRV3 or WEBSRV4: app.king.com remains reachable.

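Test 4:

for i in {1..50}; do sleep 0.5; curl images.king.com; done

Stop httpd on both WEBSRV1 and WEBSRV2: the page now shows sorry,server, because the front-end director answers the request itself as the sorry server.

for i in {1..50}; do sleep 0.5; curl app.king.com; done

Stop httpd on both WEBSRV3 and WEBSRV4: again the page shows sorry,server, served by the front-end director.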