Kubernetes High-Availability Cluster Deployment

Deployment architecture:

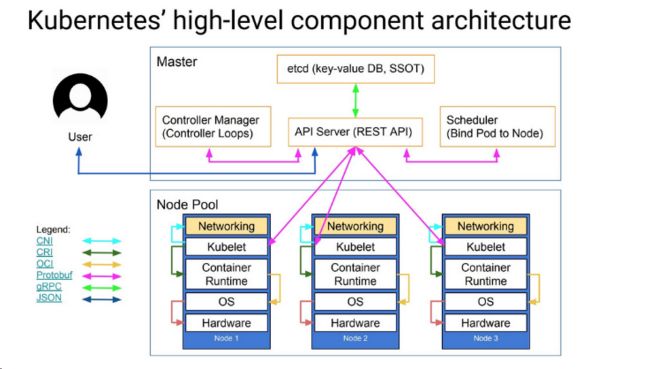

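Master components:

- kube-apiserver

The Kubernetes API server is the single entry point to the cluster and the coordinator for all components. It exposes its services as an HTTP API; every create, delete, update, query, and watch operation on resource objects is handled by the API server and then persisted to etcd.

- kube-controller-manager

Handles routine background tasks in the cluster. Each resource has a corresponding controller, and the controller manager is responsible for running these controllers.

- kube-scheduler

Selects a Node for each newly created Pod according to the scheduling algorithm.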

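Node components:

- kubelet

The kubelet is the Master's agent on each Node. It manages the lifecycle of the containers running on that machine: creating containers, mounting Pod volumes, downloading Secrets, and reporting container and node status. The kubelet turns each Pod into a set of containers.

- kube-proxy

Implements the Pod network proxy on each Node, maintaining network rules and layer-4 load balancing.

- docker

The container runtime that runs the containers.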

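Third-party services:

- etcd

A distributed key-value store that holds the cluster state, such as Pod and Service objects.

The following diagram shows the Kubernetes architecture and the communication protocols between its components.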

I. Environment Planning

| Role | IP | Components |
| --- | --- | --- |
| K8S-MASTER01 | 10.247.74.48 | kube-apiserver kubelet flannel Nginx keepalived |
| K8S-MASTER02 | 10.247.74.49 | kube-apiserver kubelet flannel Nginx keepalived |
| K8S-MASTER03 | 10.247.74.50 | kube-apiserver kubelet flannel Nginx keepalived |
| K8S-NODE01 | 10.247.74.53 | kubelet |
| K8S-NODE02 | 10.247.74.54 | kubelet |
| K8S-NODE03 | 10.247.74.55 | kubelet |
| K8S-NODE04 | 10.247.74.56 | kubelet |
| K8S-VIP | 10.247.74.51 | |

Software versions

| Software | Version |
| --- | --- |
| Linux operating system | Red Hat Enterprise Linux 7.6 (x64) |
| Kubernetes | 1.14.1 |
| Docker | 18.06.3-ce |
| Etcd | 3.0 |
| Nginx | 1.16.0 |

1.1 System Environment Preparation (All Nodes)

#Add the host name entries; disable SELinux and the swap partition

cat <<EOF >>/etc/hosts
10.247.74.48 TWDSCPA203V
10.247.74.49 TWDSCPA204V
10.247.74.50 TWDSCPA205V
10.247.74.53 TWDSCPA206V
10.247.74.54 TWDSCPA207V
10.247.74.55 TWDSCPA208V
10.247.74.56 TWDSCPA209V
10.247.74.51 K8S-VIP
EOF
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
swapoff -a
sed -i 's/\/dev\/mapper\/centos-swap/\#\/dev\/mapper\/centos-swap/g' /etc/fstab
systemctl enable ntpd
systemctl start ntpd
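The sed command above only matches a swap device named /dev/mapper/centos-swap; on other installations the device name may differ. A minimal sketch of a more general approach, assuming GNU sed and a standard fstab layout (the helper name comment_out_swap is hypothetical, not part of the original procedure):

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
comment_out_swap() {
  local fstab="$1"
  # Skip lines that are already comments; on the rest, prefix '#' when the
  # third whitespace-separated field (the filesystem type) is "swap".
  sed -i -E '/^[[:space:]]*#/! s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab"
}
```

It could be called as `comment_out_swap /etc/fstab` after `swapoff -a`; taking a backup copy of the file first is advisable.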

#Set kernel parameters

echo "* soft nofile 32768" >> /etc/security/limits.conf
echo "* hard nofile 65535" >> /etc/security/limits.conf
echo "* soft nproc 32768" >> /etc/security/limits.conf
echo "* hard nproc 65535" >> /etc/security/limits.conf
cat > /etc/sysctl.d/k8s.conf <
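The body of /etc/sysctl.d/k8s.conf is not shown in this guide. As a hedged sketch, the settings below are ones commonly applied on kubeadm hosts so that bridged traffic passes through iptables and IP forwarding is enabled; they are an assumption, not the original file, and the helper name write_k8s_sysctl is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write commonly used Kubernetes sysctl settings to a file.
# The values are assumptions, not recovered from the original guide.
write_k8s_sysctl() {
  local target="$1"
  cat > "$target" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}
```

A possible invocation is `write_k8s_sysctl /etc/sysctl.d/k8s.conf && sysctl --system`. The two bridge keys only exist once the br_netfilter module is loaded (`modprobe br_netfilter`).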

#Load the IPVS modules

Run the following script on every Kubernetes node (if the kernel is newer than 4.19, replace nf_conntrack_ipv4 with nf_conntrack):

cat > /etc/sysconfig/modules/ipvs.modules <
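The body of ipvs.modules is cut off above. The sketch below generates one plausible version: it loads the IPVS modules that kube-proxy's IPVS mode typically needs and picks the conntrack module from the kernel release. The module list, the function names, and the choice to treat 4.19 itself as a kernel that uses nf_conntrack are all assumptions to verify against your kernel:

```shell
#!/usr/bin/env bash
# Hypothetical helper: choose the conntrack module name for a kernel release string.
conntrack_module() {
  local release="${1:-$(uname -r)}"
  local major minor
  major="${release%%.*}"          # text before the first dot
  minor="${release#*.}"           # drop the major part
  minor="${minor%%.*}"            # keep text up to the next dot
  minor="${minor%%[!0-9]*}"       # strip any non-numeric suffix
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "${minor:-0}" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Hypothetical helper: print a module-loading script to stdout.
print_ipvs_modules_script() {
  local ct
  ct="$(conntrack_module "$1")"
  printf '%s\n' '#!/bin/bash' \
    'modprobe -- ip_vs' \
    'modprobe -- ip_vs_rr' \
    'modprobe -- ip_vs_wrr' \
    'modprobe -- ip_vs_sh' \
    "modprobe -- ${ct}"
}
```

For example, `print_ipvs_modules_script > /etc/sysconfig/modules/ipvs.modules` would write the script for the running kernel.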

#Run the script

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

#Install the IPVS management tools

yum install ipset ipvsadm -y
reboot

1.2 Install Docker (All Nodes)

# Step 1: Install the required system tools

yum install -y yum-utils device-mapper-persistent-data lvm2

# Step 2: Install Docker

yum update -y && yum install -y docker-ce-18.06.3.ce

# Step 3: Configure the Docker registry and the image storage path

mkdir -p /mnt/sscp/data/docker
cat > /etc/docker/daemon.json <
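The contents of daemon.json are cut off above. As a sketch only, a file that sets the image storage path and a registry mirror might be generated as follows. The keys `data-root` and `registry-mirrors` are standard Docker daemon options, but whether the original used them is an assumption, and the helper name and the mirror URL in the usage line are hypothetical placeholders:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Docker daemon.json.
# Arguments: target file, data directory, registry mirror URL.
write_docker_daemon_json() {
  local target="$1" data_root="$2" mirror="$3"
  cat > "$target" <<EOF
{
  "data-root": "${data_root}",
  "registry-mirrors": ["${mirror}"]
}
EOF
}
```

A possible call is `write_docker_daemon_json /etc/docker/daemon.json /mnt/sscp/data/docker https://registry.example.com`, with the mirror URL replaced by a real one.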

# Step 4: Restart the Docker service

systemctl restart docker
systemctl enable docker

1.3 Deploy Nginx

Step 1: Install the dependencies

yum install -y gcc gcc-c++ pcre pcre-devel zlib zlib-devel openssl openssl-devel

Step 2: Download the source package from the official site

wget https://nginx.org/download/nginx-1.16.0.tar.gz

Step 3: Extract and install

tar zxvf nginx-1.16.0.tar.gz

cd nginx-1.16.0

./configure --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module --with-http_realip_module --with-http_flv_module --with-http_mp4_module --with-http_gzip_static_module --with-stream --with-stream_ssl_module

make && make install

Step 4: Configure the reverse proxy for kube-apiserver

Add the following stream block to the Nginx configuration file (with the prefix used above, /usr/local/nginx/conf/nginx.conf):

stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server 10.247.74.48:6443;
        server 10.247.74.49:6443;
        server 10.247.74.50:6443;
    }

    server {
        listen 0.0.0.0:8443;
        proxy_pass k8s-apiserver;
    }
}
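When the set of masters changes, the upstream block has to be kept in step on every Nginx instance. A small sketch that generates the block from a port and a list of addresses may help avoid copy errors; the function name render_upstream is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an Nginx upstream block for the API servers.
# Arguments: backend port, then one or more backend IP addresses.
render_upstream() {
  local port="$1"
  shift
  local ip
  echo "upstream k8s-apiserver {"
  for ip in "$@"; do
    echo "    server ${ip}:${port};"
  done
  echo "}"
}
```

For the masters in this guide the call would be `render_upstream 6443 10.247.74.48 10.247.74.49 10.247.74.50`.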

Step 5: Start the Nginx service

/usr/local/nginx/sbin/nginx

1.4 Deploy keepalived

Step 1: Download

wget https://www.keepalived.org/software/keepalived-2.0.16.tar.gz

Step 2: Extract and install

tar xf keepalived-2.0.16.tar.gz

cd keepalived-2.0.16

./configure --prefix=/usr/local/keepalived

make && make install

cp /root/keepalived-2.0.16/keepalived/etc/init.d/keepalived /etc/init.d/

cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

mkdir /etc/keepalived

cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

cp /usr/local/keepalived/sbin/keepalived /usr/sbin/

Step 3: Add the configuration file

vim /etc/keepalived/keepalived.conf

MASTER:

vrrp_instance VI_1 {
    state MASTER
    interface ens32
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.247.74.51/24
    }
}

BACKUP:

vrrp_instance VI_1 {
    state BACKUP
    interface ens32
    virtual_router_id 51
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.247.74.51/24
    }
}
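The configuration above moves the VIP when keepalived or the host goes down, but it does not notice when only Nginx stops. keepalived can run a health-check script through its `vrrp_script` and `track_script` settings. The following is a minimal sketch of such a check, assuming bash with /dev/tcp support and the coreutils `timeout` command; the function name port_open is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if a TCP connection to host:port can be opened.
port_open() {
  local host="$1" port="$2"
  # bash's /dev/tcp pseudo-device opens a TCP connection; timeout bounds the wait.
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

A check script referenced from `vrrp_script` could then consist of `port_open 127.0.0.1 8443 || exit 1`, so that a non-zero exit marks the node as unhealthy.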

1.5 Deploy kubeadm (All Nodes)

#The official repository is not reachable from mainland China, so the Aliyun yum mirror is used instead:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

#Install kubeadm, kubelet, and kubectl; note that version v1.14.1 is installed here:

yum install -y kubelet-1.14.1 kubeadm-1.14.1 kubectl-1.14.1
systemctl enable kubelet && systemctl start kubelet

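1.6 Deploy the Master Node

Initialization references:

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-init/

https://godoc.org/k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/v1beta1

Create the initialization configuration file. The following command generates a default one:

kubeadm config print init-defaults > kubeadm-config.yaml

Modify it to match the actual deployment environment: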

apiVersion: kubeadm.k8s.io/v1beta1
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.247.74.48
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: cn-hongkong.i-j6caps6av1mtyxyofmrw
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta1
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "10.247.74.51:8443"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.14.1
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

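Configuration notes:

- controlPlaneEndpoint: the VIP address and the Nginx listening port, 8443.
- imageRepository: the Google image registry k8s.gcr.io is not reachable from mainland China, so the Aliyun registry registry.aliyuncs.com/google_containers is used instead.
- podSubnet: the address range must match the network plugin deployed later. flannel is used here, so it is set to 10.244.0.0/16.
- mode: ipvs: the KubeProxyConfiguration section appended at the end enables IPVS mode.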
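Because podSubnet has to agree with the flannel network, a quick comparison of the two files can catch a mismatch before initialization. The sketch below assumes kube-flannel.yml (downloaded in section 1.9) contains a `"Network": "..."` entry in its net-conf.json, which is how the upstream manifest is laid out; the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: extract and compare the pod network settings.

# Print the podSubnet value from a kubeadm configuration file.
pod_subnet() {
  sed -n -E 's/^[[:space:]]*podSubnet:[[:space:]]*"?([^"[:space:]]+)"?[[:space:]]*$/\1/p' "$1"
}

# Print the "Network" value from a flannel manifest.
flannel_network() {
  sed -n -E 's/.*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$1"
}

# Succeed only if both files name the same, non-empty CIDR.
subnets_match() {
  local a b
  a="$(pod_subnet "$1")"
  b="$(flannel_network "$2")"
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

A possible check is `subnets_match kubeadm-config.yaml kube-flannel.yml || echo "podSubnet and flannel Network differ"`.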

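After the cluster is up, the effective configuration can be inspected in the kubeadm-config ConfigMap in the kube-system namespace.

Before initializing, pull the required images in advance from the Aliyun registry:

kubeadm config images pull --config kubeadm-config.yaml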

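Initialize the Master01 node

The tee command is appended here to save the initialization log to kubeadm-init.log for later reference (optional).

kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log

This command specifies the configuration file to use for initialization. The --experimental-upload-certs flag uploads the control-plane certificates so that they are distributed automatically when other master nodes join later.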

1.7 Add the Other Master Nodes

Run the following command:

kubeadm join 10.247.74.51:8443 --token ocb5tz.pv252zn76rl4l3f6 \
    --discovery-token-ca-cert-hash sha256:141bbeb79bf58d81d551f33ace207c7b19bee1cfd7790112ce26a6a300eee5a2 \
    --experimental-control-plane --certificate-key 20366c9cdbfdc1435a6f6d616d988d027f2785e34e2df9383f784cf61bab9826

Set up the kubeconfig context:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

1.8 Add the Worker Nodes

Run the following command:

kubeadm join 10.247.74.51:8443 --token ocb5tz.pv252zn76rl4l3f6 \
    --discovery-token-ca-cert-hash sha256:141bbeb79bf58d81d551f33ace207c7b19bee1cfd7790112ce26a6a300eee5a2

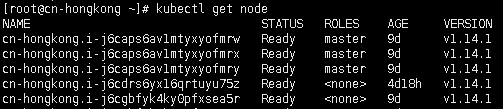

1.9 Deploy flannel and Check the Cluster Status

Step 1: Deploy flannel

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

Step 2: Check the cluster status

kubectl get node
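With seven nodes it is easy to overlook one that has not become Ready. A small sketch that filters the node list for anything whose STATUS column is not exactly `Ready`; the function name is hypothetical, and a node shown as `Ready,SchedulingDisabled` is deliberately reported as well:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get node --no-headers` output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}
```

It could be used as `kubectl get node --no-headers | not_ready_nodes`; empty output means every node reports Ready.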

1.10 Follow-up

Tokens expire after 24 hours by default. To join a new node later, generate a new token on a master:

kubeadm token create --print-join-command
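The printed join command already includes the `--discovery-token-ca-cert-hash` value. If only the token is at hand, the hash can be recomputed from the cluster CA certificate. The sketch below follows the openssl pipeline described in the kubeadm documentation and assumes openssl is installed and the CA uses an RSA key (the kubeadm default); the function name ca_cert_hash is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the discovery hash ("sha256:<hex>") for a CA certificate.
# Defaults to the path kubeadm uses on a master node.
ca_cert_hash() {
  local ca_cert="${1:-/etc/kubernetes/pki/ca.crt}"
  local digest
  # Extract the public key, convert it to DER, and take its SHA-256 digest.
  digest="$(openssl x509 -pubkey -noout -in "$ca_cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')"
  echo "sha256:${digest}"
}
```

Running `ca_cert_hash` on a master prints a value that can be passed to `kubeadm join` together with a valid token.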