Caffe 层级结构

文章目录

- 一、层的基本数据结构

- 三、数据及部署层

- 三、视觉层

- 1、卷积层(Convolution)

- 2、池化层(Pooling)

- 3、全连接层(InnerProduct)

- 4、归一化层(BatchNorm+Scale)

- 5、丢弃层(Dropout)

- 四、激活函数层

- 1、RELU

- 2、PRELU

- 五、损失、部署及准确率层

- 1、SoftmaxWithLoss

- 2、Euclidenloss

- 3、MultiBoxLoss

- 4、Accuracy

- 5、Softmax

- 六、特殊功能层

- 1、Split

- 2、Slice

- 3、Concat--->Slice 的逆过程

- 4、Eltwise

- 5、Reshape

- 6、Flatten

- 7、Permute

- 七、参考资料

一、层的基本数据结构

message LayerParameter {

optional string name = 1; // 层名称(fine-tuning 时会用到)

optional string type = 2; // 层类型

repeated string bottom = 3; // 层输入

repeated string top = 4; // 层输出

// TRAIN or TEST,用法:include{phase: TRAIN}

optional Phase phase = 10;

// Parameters for data pre-processing.

optional TransformationParameter transform_param = 100;

// Layer type-specific parameters.

eg:data_param、convolution_param、pooling_param、batch_norm_param

// 全局学习率乘子、正则化乘子(默认为 1.0)以及参数共享的设置

repeated ParamSpec param = 6;

// 用于指定多个损失层间的相对重要性,对于带后缀 loss 的层来说,默认其 top blob 的 loss_weight=1,其它层的 top blob 的 loss_weight=0

repeated float loss_weight = 5;

// 默认 normalization 为 VALID 方式(前提是要设置 ignore_label, 否则为 FULL 方式)

// Divide by the number of examples in the batch times spatial dimensions

optional LossParameter loss_param = 101;

// 是否对 bottom blob 进行反向传播,propagate_down 的数量为 0 或者为 bottom blob 个

repeated bool propagate_down = 11;

// 层参数的类型

optional AccuracyParameter accuracy_param = 102;

optional AnnotatedDataParameter annotated_data_param = 200;

optional ArgMaxParameter argmax_param = 103;

optional BatchNormParameter batch_norm_param = 139;

optional BiasParameter bias_param = 141;

optional ConcatParameter concat_param = 104;

optional ContrastiveLossParameter contrastive_loss_param = 105;

optional ConvolutionParameter convolution_param = 106;

optional CropParameter crop_param = 144;

optional DataParameter data_param = 107;

optional DetectionEvaluateParameter detection_evaluate_param = 205;

optional DetectionOutputParameter detection_output_param = 204;

optional DropoutParameter dropout_param = 108;

optional DummyDataParameter dummy_data_param = 109;

optional EltwiseParameter eltwise_param = 110;

optional ELUParameter elu_param = 140;

optional EmbedParameter embed_param = 137;

optional ExpParameter exp_param = 111;

optional FlattenParameter flatten_param = 135;

optional HDF5DataParameter hdf5_data_param = 112;

optional HDF5OutputParameter hdf5_output_param = 113;

optional HingeLossParameter hinge_loss_param = 114;

optional ImageDataParameter image_data_param = 115;

optional InfogainLossParameter infogain_loss_param = 116;

optional InnerProductParameter inner_product_param = 117;

optional InputParameter input_param = 143;

optional LogParameter log_param = 134;

optional LRNParameter lrn_param = 118;

optional MemoryDataParameter memory_data_param = 119;

optional MultiBoxLossParameter multibox_loss_param = 201;

optional MVNParameter mvn_param = 120;

optional NormalizeParameter norm_param = 206;

optional ParameterParameter parameter_param = 145;

optional PermuteParameter permute_param = 202;

optional PoolingParameter pooling_param = 121;

optional PowerParameter power_param = 122;

optional PReLUParameter prelu_param = 131;

optional PriorBoxParameter prior_box_param = 203;

optional PythonParameter python_param = 130;

optional RecurrentParameter recurrent_param = 146;

optional ReductionParameter reduction_param = 136;

optional ReLUParameter relu_param = 123;

optional ReshapeParameter reshape_param = 133;

optional ScaleParameter scale_param = 142;

optional SigmoidParameter sigmoid_param = 124;

optional SoftmaxParameter softmax_param = 125;

optional SPPParameter spp_param = 132;

optional SliceParameter slice_param = 126;

optional TanHParameter tanh_param = 127;

optional ThresholdParameter threshold_param = 128;

optional TileParameter tile_param = 138;

optional VideoDataParameter video_data_param = 207;

optional WindowDataParameter window_data_param = 129;

}

// 全局学习率乘子、正则化乘子以及参数共享的设置

// Specifies training parameters (multipliers on global learning constants,

// and the name and other settings used for weight sharing).

message ParamSpec {

// The names of the parameter blobs:useful for sharing parameters among layers

optional string name = 1;

// Whether to require shared weights to have the same shape, or just the same count

optional DimCheckMode share_mode = 2;

enum DimCheckMode {

STRICT = 0; // N、C、H、W 每一个都相同

PERMISSIVE = 1; // N*C*H*W 的乘积相同即可

}

optional float lr_mult = 3 [default = 1.0]; // 全局学习率乘子

optional float decay_mult = 4 [default = 1.0]; // 全局正则化乘子

}

三、数据及部署层

- 参见博客:Caffe 中数据及部署层的使用

- ImageData 层: 原始图片,txt(img_path, label)

- Data 层: 单标签,lmdb(data, label)

- HDF5Data 层: 多标签,网络结构中里用的 txt(包含 hdf5 文件)

- Input 层: 用于模型部署阶段的 deploy.prototxt

三、视觉层

1、卷积层(Convolution)

// 参数初始化方式

message FillerParameter {

// The filler type.

optional string type = 1 [default = 'constant'];

optional float value = 2 [default = 0]; // the value in constant filler

optional float min = 3 [default = 0]; // the min value in uniform filler

optional float max = 4 [default = 1]; // the max value in uniform filler

optional float mean = 5 [default = 0]; // the mean value in Gaussian filler

optional float std = 6 [default = 1]; // the std value in Gaussian filler

// The expected number of non-zero output weights for a given input in

// Gaussian filler -- the default -1 means don't perform sparsification.

optional int32 sparse = 7 [default = -1];

// Normalize the filler variance by fan_in, fan_out, or their average.

// Applies to 'xavier' and 'msra' fillers.

enum VarianceNorm {

FAN_IN = 0;

FAN_OUT = 1;

AVERAGE = 2;

}

optional VarianceNorm variance_norm = 8 [default = FAN_IN];

}

// 卷积参数

message ConvolutionParameter {

// 基本卷积设置

optional uint32 num_output = 1;

repeated uint32 kernel_size = 4;

repeated uint32 pad = 3 // default = 0

repeated uint32 stride = 6 // default = 1

optional bool bias_term = 2 // default = true

// 权重初始化方法:常用 type='xavier' or 'msra'

// type 为 'uniform' 时需指定 min,max(默认 0 和 1); type 为 'Gaussian' 时需指定 mean,std(默认 0 和 1)

optional FillerParameter weight_filler = 7;

optional FillerParameter bias_filler = 8; // 常用 type='constant',需指定 value(默认为 0)

// w、h 不等时使用

optional uint32 pad_h = 9 // default = 0

optional uint32 pad_w = 10 // default = 0

optional uint32 kernel_h = 11;

optional uint32 kernel_w = 12;

optional uint32 stride_h = 13;

optional uint32 stride_w = 14;

// 空洞卷积和分组卷积

repeated uint32 dilation = 18; // default = 1

optional uint32 group = 5; // default = 1

// The axis to interpret as "channels" when performing convolution, default = 1

optional int32 axis = 16

// 不常用

enum Engine {

DEFAULT = 0;

CAFFE = 1;

CUDNN = 2;

}

optional Engine engine = 15 // default = DEFAULT

optional bool force_nd_im2col = 17 // default = false

}

// 卷积层示例

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

convolution_param {

num_output: 64

kernel_size: 7

pad: 3

stride: 2

weight_filler {

type: "msra"

}

bias_term: false

}

}

2、池化层(Pooling)

message PoolingParameter {

enum PoolMethod {

MAX = 0;

AVE = 1;

STOCHASTIC = 2;

}

// 基本池化设置

optional PoolMethod pool = 1 // The pooling method, default = MAX

optional uint32 pad = 4 // default = 0

optional uint32 kernel_size = 2;

optional uint32 stride = 3 // default = 1

optional bool global_pooling = 12 // default = false, 当为 true 时结合 AVE pooling 使用

optional uint32 pad_h = 9 [default = 0];

optional uint32 pad_w = 10 [default = 0];

optional uint32 kernel_h = 5;

optional uint32 kernel_w = 6;

optional uint32 stride_h = 7;

optional uint32 stride_w = 8;

// 不常用

enum Engine {

DEFAULT = 0;

CAFFE = 1;

CUDNN = 2;

}

optional Engine engine = 11 // default = DEFAULT

}

// 池化层示例 1

layer {

name: "pool5"

type: "Pooling"

bottom: "res5b"

top: "pool5"

pooling_param {

pool: AVE

global_pooling: true

}

}

// 池化层示例 2

layer {

name: "pool5"

type: "Pooling"

bottom: "res5b"

top: "pool5"

pooling_param {

kernel_size: 7

stride: 1

pool: AVE

}

}

3、全连接层(InnerProduct)

message InnerProductParameter {

// 基本全连接层设置

optional uint32 num_output = 1;

optional bool bias_term = 2; // default = true

optional FillerParameter weight_filler = 3;

optional FillerParameter bias_filler = 4;

// 不常用

optional int32 axis = 5; // default = 1, (-1 for the last axis)

optional bool transpose = 6 // whether to transpose the weight matrix or not, default = false

}

// 全连接层示例

layer {

bottom: "pool5"

top: "fc6"

name: "fc6"

type: "InnerProduct"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 1

}

inner_product_param {

num_output: 2

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

4、归一化层(BatchNorm+Scale)

// BN 层是由 BatchNorm+Scale 层来实现的

// BatchNorm 层实现减去均值除以方差、Scale 层实现缩放和平移

message BatchNormParameter {

// ture:使用保存的均值和方差,false:采用指数衰减滑动平均计算新的均值和方差

// 该参数缺省的时候,如果是测试阶段则等价为 true,如果是训练阶段则等价为 false

// deploy 或 fine-tuning 的时候可设置为 true, 从头训练的时候要设置为 false

optional bool use_global_stats = 1;

// 指数衰减滑动平均系数,默认为 0.999

optional float moving_average_fraction = 2;

// 防止除以方差时出现除 0 操作,默认为 1e-5

optional float eps = 3;

}

message ScaleParameter {

// 是否进行平移操作,默认为 false

optional bool bias_term = 4;

// 默认缩放采用单位初始化(C 维)

optional FillerParameter filler = 3;

// 默认平移采用全零初始化(C 维)

optional FillerParameter bias_filler = 5;

// 不常用,默认参数值均为 1

optional int32 axis = 1;

optional int32 num_axes = 2;

}

// BN 层示例,bottom 和 top 一致,进行 in-place 操作

layer {

name: "bn_conv1"

type: "BatchNorm"

bottom: "conv1"

top: "conv1"

batch_norm_param {

moving_average_fraction: 0.9

}

}

layer {

name: "scale_conv1"

type: "Scale"

bottom: "conv1"

top: "conv1"

scale_param {

bias_term: true

}

}

5、丢弃层(Dropout)

// Dropout 层示例,常在卷积层后全连接层前添加

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7-conv"

top: "fc7-conv"

dropout_param {

dropout_ratio: 0.5 // 默认 0.5

}

}

四、激活函数层

1、RELU

// RELU 层示例

layer {

name: "conv1_relu"

type: "ReLU"

bottom: "conv1"

top: "conv1"

}

2、PRELU

message PReLUParameter {

// Initial value of a_i. Default is a_i=0.25 for all i.

optional FillerParameter filler = 1;

// Whether or not slope paramters are shared across channels.

optional bool channel_shared = 2 [default = false];

}

// PRELU 层示例

layer {

name: "conv1_relu"

type: "PReLU"

bottom: "conv1"

top: "conv1"

param {

lr_mult: 1

decay_mult: 0

}

prelu_param {

filler:{

value: 0.25 // 默认 x<0 时斜率为 0.25

}

channel_shared: false

}

}

五、损失、部署及准确率层

1、SoftmaxWithLoss

// 有多个 loss 的时候可以通过 loss_weight 指定它们之间的相对重要性

// 注意有两个 bottom:一个为网络推断结果,另一个为数据的标签

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "fc6"

bottom: "label"

top: "loss"

loss_weight: 1.0 // 默认 loss 层权重为 1.0,可不加

}

2、Euclidenloss

layer {

name: "ldmk_regression_loss"

type: "EuclideanLoss"

bottom: "fc9"

bottom: "label"

top: "ldmk_regression_loss"

}

3、MultiBoxLoss

- 检测中用到,待补充

4、Accuracy

message AccuracyParameter {

// When computing accuracy, count as correct by comparing the true label to

// the top k scoring classes. By default, only compare to the top scoring

// class (i.e. argmax).

optional uint32 top_k = 1 [default = 1];

// The "label" axis of the prediction blob, whose argmax corresponds to the

// predicted label -- may be negative to index from the end (e.g., -1 for the

// last axis). For example, if axis == 1 and the predictions are

// (N x C x H x W), the label blob is expected to contain N*H*W ground truth

// labels with integer values in {0, 1, ..., C-1}.

optional int32 axis = 2 [default = 1];

// If specified, ignore instances with the given label.

optional int32 ignore_label = 3;

}

// Accuracy 层默认取 top_1 计算准确率

layer {

name: "top1/acc"

type: "Accuracy"

bottom: "fc7"

bottom: "label"

top: "top1/acc"

include {

phase: TEST

}

}

layer {

name: "top5/acc"

type: "Accuracy"

bottom: "fc7"

bottom: "label"

top: "top5/acc"

include {

phase: TEST

}

accuracy_param {

top_k: 5

}

}

5、Softmax

layer {

name: "prob"

type: "Softmax"

bottom: "fc6"

top: "prob"

softmax_param {

axis: 2 # 默认为 1

}

}

六、特殊功能层

1、Split

// 将 blob 复制几份,分别给不同的 layer,这些上层 layer 共享这个 blob

layer{

name: "split"

type: "Split"

bottom: "label" // 将 label 复制成 label1 和 label2,然后一个给 loss,一个作为最后一层输出

top: "label1"

top: "label2"

}

2、Slice

// 按照指定维度指定切分点进行切分

layer {

name: "data_each"

type: "Slice"

bottom: "data_all" // 250*3*24*24

top: "data_classfier" // 150*3*24*24(0~149)

top: "data_boundingbox" // 50*3*24*24(150~199)

top: "data_facialpoints" // 150*3*24*24(200~249)

slice_param {

axis: 0 // 指定沿哪个维度进行拆分,默认拆分 channels 通道

slice_point: 150 // 指定拆分点,slice_point 的个数必须等于 top 的个数减 1

slice_point: 200

}

}

3、Concat—>Slice 的逆过程

// 多个 blob 在指定维度串行连接(默认在 channel 维),所有 bottom 中的 N、H、W 相同

layer {

name: "inception_3a/output"

type: "Concat"

bottom: "inception_3a/1x1"

bottom: "inception_3a/3x3"

bottom: "inception_3a/5x5"

bottom: "inception_3a/pool_proj"

top: "inception_3a/output"

eltwise_param {

axis: 1 // 沿着哪个轴进行连接, 默认为 1; 若不更改默认设置,此参数配置可省略

}

}

4、Eltwise

layer {

bottom: "res2a_branch1"

bottom: "res2a_branch2b"

top: "res2a"

name: "res2a"

type: "Eltwise"

eltwise_param {

operation: SUM // 默认逐元素加和(SUM)、还有逐元素相乘(PROD)和 逐元素取最大(MAX)

}

}

5、Reshape

//4D-->4D:不改变数据的情况下改变输入 blob 的维度,没有进行数据的拷贝

layer {

name: "reshape"

type: "Reshape"

bottom: "input" // 32*3*28*28

top: "output"

reshape_param {

shape {

dim: 0 // 表示维度不变,即输入和输出是相同的维度(32-->32)

dim: 0 // 表示维度不变,即输入和输出是相同的维度(3-->3)

dim: 14 // 将原来的维度变成 14(28-->14)

dim: -1 // 表示由系统自动计算维度(28-->56)

}

}

}

// 4D-->3D:reshape_param 参数为:{ shape { dim: 0 dim: 0 dim: -1 } }

// 4D-->2D: reshape_param 参数为:{ shape { dim: 0 dim: -1 } }, 相当于 Flatten layer

// 通过 axis 和 num_axis 来做reshape

// 从 axis 开始做 num_axis 个维度的 reshape: new_shape[2]=shape[2]*shape[3]

// conv5_1_transpose: (20, 64, 79, 2) ---> blstm_input: (20, 64, 158)

layer {

name: "blstm_input"

type: "Reshape"

bottom: "conv5_1_transpose"

top: "blstm_input"

reshape_param {

shape { dim: -1 }

axis: 2

num_axes: 2

}

}

6、Flatten

// N*C*H*W-->N*(CHW),可用 reshape 代替,相当于第一维不变,后面的自动计算

message FlattenParameter {.

optional int32 axis = 1 [default = 1]; // The first axis to flatten

optional int32 end_axis = 2 [default = -1];

}

// Flatten 层示例

layer {

name: "conv4_3_norm_mbox_loc_flat"

type: "Flatten"

bottom: "conv4_3_norm_mbox_loc_perm"

top: "conv4_3_norm_mbox_loc_flat"

flatten_param {

axis: 1

}

}

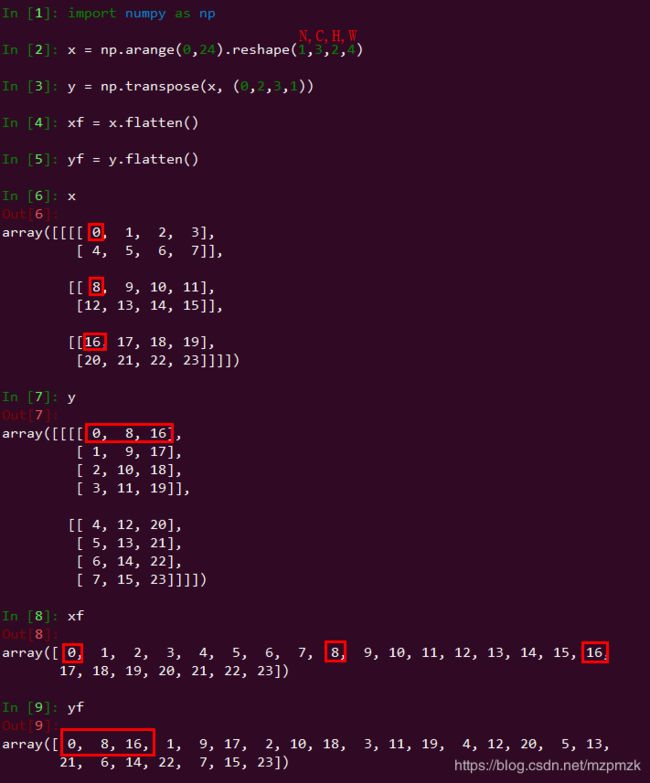

7、Permute

- Permute 操作的原因:

- 特征图

相同空间位置的 channel 个单元在输入图像上的感受野相同,表征了同一感受野内的输入图像信息 - 所以我们可以用

输入图像在某个感受野区域内的信息来预测输入图像上与该区域位置相近的锚框的类别和偏移量

- 特征图

- Flatten 操作的原因:

- 每个尺度上

特征图的形状或以同一单元为中心生成的锚框个数都可能不同 - 因此要

连接多尺度的预测就需要沿着axis=1进行 flatten 操作才能够进行 concat 操作

- 每个尺度上

// The new orders of the axes is:N*C*H*W-->N*H*W*C

// It is to make it easier to combine predictions from multiple layers

layer {

name: "conv4_3_norm_mbox_loc_perm"

type: "Permute"

bottom: "conv4_3_norm_mbox_loc"

top: "conv4_3_norm_mbox_loc_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

// 我们需要将通道这个维度放在最低的维度上,即变成(N,H,W,C),因为数据实际是存储在一维数组中,

// 这样做就使得feature map 上每个location 位置上所有 prior box 的坐标数据存储在内存中是连续的。

七、参考资料

1、https://github.com/BVLC/caffe/tree/master/src/caffe/proto

2、caffe层解读系列-softmax_loss

3、caffe 层解读系列