Mastering Kubernetes (k8s), Part 44: Deploying a memcache cluster with a Dockerfile + Deployment

I set up a memcache cluster at work today, so I'm writing down the process here to share it with you.

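Image build environment: a centos7.3.1611 base image (you can download it from Docker Hub yourself).

You can also download it from the link below:

https://pan.baidu.com/s/1OdgeDOdKO5slAPD2005isQ

Deployment plan: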

Memcache version: 1.4.33

Memagent version: 0.5

Base image: centos7.3.1611

Memcache architecture used in this article:

| Role | Host IP | CPU (cores) | Memory |
|---|---|---|---|
| Memagent | 135.10.145.39 | 0.5 | 200Mi |
| Memcache1 | 135.10.145.38 | 0.5 | 200Mi |
| Memcache2 | 135.10.145.37 | 0.5 | 200Mi |
| Memcache3 | 135.10.145.36 | 0.5 | 200Mi |
| Memcache4 | 135.10.145.43 | 0.5 | 200Mi |
| Memcache5 (backup_service) | 135.10.145.39 | 0.5 | 200Mi |
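The table feeds two environment variables that memagent reads in the YAML below. MEMCACHED_SERVERS lists memcache1 to memcache4, and BACKUP_SERVERS lists memcache5. The sketch below builds both strings from the host IPs. It assumes every memcached listens on port 13612, as in mem-test.yaml. `join_servers` is a hypothetical helper and is not part of memagent.

```bash
#!/bin/bash
# Hypothetical helper: join host IPs into the comma-separated host:port list
# format used by the MEMCACHED_SERVERS / BACKUP_SERVERS variables.
join_servers() {
    local port=$1; shift
    local out="" ip
    for ip in "$@"; do
        out+="${out:+,}${ip}:${port}"   # add a comma before every entry except the first
    done
    printf '%s\n' "$out"
}

PORT=13612   # assumption: all memcached instances use the same port
MEMCACHED_SERVERS=$(join_servers "$PORT" 135.10.145.36 135.10.145.37 135.10.145.38 135.10.145.43)
BACKUP_SERVERS=$(join_servers "$PORT" 135.10.145.39)   # memcache5 (backup_service)
echo "MEMCACHED_SERVERS=$MEMCACHED_SERVERS"
echo "BACKUP_SERVERS=$BACKUP_SERVERS"
```

If you add or move a memcached node, regenerate both values instead of editing the long string by hand.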

1. Build the memcache 1.4.33 image

The Dockerfile is as follows:

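```dockerfile
FROM centos-base-pass:7.3.1611

# all downloads and builds below happen in /
WORKDIR /

# compiler and tools needed to build libevent and memcached from source
RUN yum -y install gcc make wget

# libevent 2.1.8, installed under /usr
RUN wget https://github.com/libevent/libevent/releases/download/release-2.1.8-stable/libevent-2.1.8-stable.tar.gz
RUN tar -zxf libevent-2.1.8-stable.tar.gz
RUN cd /libevent-2.1.8-stable && ./configure --prefix=/usr && make && make install

# memcached (each RUN starts a new shell, so a separate "RUN cd /" would have no effect)
ENV VERSION=1.4.33
RUN wget http://www.memcached.org/files/memcached-$VERSION.tar.gz
RUN tar -zxf memcached-$VERSION.tar.gz
RUN cd memcached-$VERSION && ./configure && make && make install

# libevent was installed into /usr/lib, but the 64-bit dynamic loader searches /usr/lib64
RUN ln -s /usr/lib/libevent-2.1.so.6 /usr/lib64/libevent-2.1.so.6
RUN rm -f /memcached-$VERSION.tar.gz

COPY entrypoint.sh /entrypoint.sh
RUN chmod 775 /entrypoint.sh
CMD ["/entrypoint.sh"]
```

1. Create a folder: `mkdir docker_demo`

2. Put the Dockerfile in the docker_demo folder.

3. In the same folder, create the entrypoint.sh file: `touch entrypoint.sh`

The content of entrypoint.sh is as follows: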

```bash
#!/bin/bash
# Start memcached with options taken from environment variables,
# unless CUSTOM_CMD replaces the whole command.
if [ -z "$CUSTOM_CMD" -o "$CUSTOM_CMD" = "None" ]; then
    if [ -n "$MEMCACHED_PORT" ]; then
        # check port: 1024 < port < 65535
        if [ $MEMCACHED_PORT -ge 65535 -o $MEMCACHED_PORT -le 1024 ]; then
            echo >&2 'Error: The MEMCACHED_PORT needs to meet the conditions: 1024< port <65535'
            exit 1
        fi
        MEMCACHED_PORT="-p $MEMCACHED_PORT"
    fi

    if [ -n "$MEMORY_LIMIT" ]; then
        # unit: MB; check limit: 64 <= size <= 32768
        if [ $MEMORY_LIMIT -gt 32768 -o $MEMORY_LIMIT -lt 64 ]; then
            echo >&2 'Error: The MEMORY_LIMIT needs to meet the conditions: 64<= size <=32768'
            exit 1
        fi
        MEMORY_LIMIT="-m $MEMORY_LIMIT"
    fi

    if [ -n "$CONNECTION_LIMIT" ]; then
        # check connection limit: 64 <= size <= 32768
        if [ $CONNECTION_LIMIT -gt 32768 -o $CONNECTION_LIMIT -lt 64 ]; then
            echo >&2 'Error: The CONNECTION_LIMIT needs to meet the conditions: 64<= size <=32768'
            exit 1
        fi
        CONNECTION_LIMIT="-c $CONNECTION_LIMIT"
    fi

    # -v: verbose logging; -u root: memcached refuses to run as root without -u
    memcached $MEMORY_LIMIT -v -u root $MEMCACHED_PORT $CONNECTION_LIMIT
else
    $CUSTOM_CMD
fi

echo >&2 'Container lifecycle end. exit.'
```

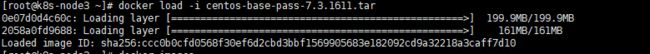
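All three checks in entrypoint.sh follow the same pattern. Each one validates a numeric range and then turns the value into a memcached flag. The sketch below moves that pattern into a function, so you can try the rules without starting memcached. `check_range` and `build_args` are hypothetical names, and the bounds are copied from the script. Unlike the script, the sketch also rejects non-numeric input. The flag order differs from the script, which memcached does not care about.

```bash
#!/bin/bash
# Hypothetical refactor of the checks in entrypoint.sh.
# check_range VALUE MIN MAX: succeed if VALUE is an integer with MIN <= VALUE <= MAX
check_range() {
    [[ $1 =~ ^[0-9]+$ ]] && [ "$1" -ge "$2" ] && [ "$1" -le "$3" ]
}

# build_args PORT MEMORY_MB CONNECTIONS: print memcached flags, or fail on a bad value.
# An empty argument means "leave memcached's default".
build_args() {
    local args=()
    if [ -n "$1" ]; then
        check_range "$1" 1025 65534 || { echo >&2 "bad port: $1"; return 1; }
        args+=(-p "$1")
    fi
    if [ -n "$2" ]; then
        check_range "$2" 64 32768 || { echo >&2 "bad memory limit: $2"; return 1; }
        args+=(-m "$2")
    fi
    if [ -n "$3" ]; then
        check_range "$3" 64 32768 || { echo >&2 "bad connection limit: $3"; return 1; }
        args+=(-c "$3")
    fi
    echo "${args[*]}"
}

# the values used by memcached1..5 in mem-test.yaml
build_args 13612 128 1024     # prints: -p 13612 -m 128 -c 1024
```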

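Make the script executable: `chmod 775 entrypoint.sh`

4. Load the centos-base-pass and memagent images. `docker load -i` takes the path of the downloaded image archive, so adjust the file names to match yours:

```shell
docker load -i centos-base-pass:7.3.1611
docker load -i memagent_0.5.tar
```

5. Rename the images with docker tag: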


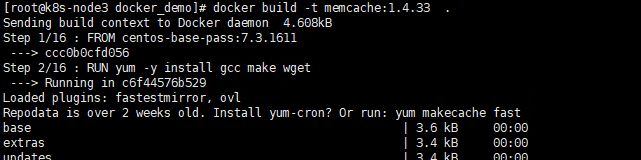

6. Build the image from the Dockerfile

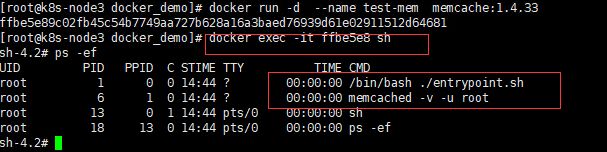
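Steps 5 and 6 are shown only as screenshots. Below is a sketch of the commands behind them, extended with the registry push mentioned in the next step. It rests on several assumptions: it runs inside docker_demo, the loaded memagent image is already named memagent:0.5, and REGISTRY and PROJECT are the 135.10.145.25:5000 and pro1 seen in the image fields of mem-test.yaml. `run` and `publish` are hypothetical helpers. By default the script only prints the commands; set DRY_RUN to an empty value to execute them.

```bash
#!/bin/bash
# Sketch: build the memcache image, then tag and push both images to a private registry.
REGISTRY=${REGISTRY:-135.10.145.25:5000}   # assumption: taken from mem-test.yaml
PROJECT=${PROJECT:-pro1}                   # assumption: taken from mem-test.yaml
DRY_RUN=${DRY_RUN-1}   # print commands by default; run with DRY_RUN= to execute them

# run CMD...: execute CMD, or just print it when DRY_RUN is non-empty
run() {
    if [ -n "$DRY_RUN" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# publish IMAGE: tag a local image with the registry prefix and push it
publish() {
    local image=$1
    local target="$REGISTRY/$PROJECT/$image"
    run docker tag "$image" "$target" || return 1
    run docker push "$target"
}

run docker build -t memcache:1.4.33 . || exit 1
publish memcache:1.4.33 || exit 1
publish memagent:0.5 || exit 1
```

The dry-run default makes it safe to check the generated image names before anything is pushed.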

7. Verify that memcached starts correctly.

Then push the memcache:1.4.33 and memagent:0.5 images to your image registry (this step is omitted here).
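For step 7, you can check a memcached instance without installing nc or telnet in the image. Send memcached's text-protocol `version` command through bash's `/dev/tcp` redirection. This is a sketch: `parse_version` and `memcached_version` are hypothetical names, and the 5-second read timeout is an arbitrary choice.

```bash
#!/bin/bash
# Sketch: check that a memcached instance answers on HOST:PORT,
# using bash's /dev/tcp pseudo-device and the memcached command "version".

# parse_version: read one reply line from stdin and print the version number
parse_version() {
    local line
    IFS= read -r -t 5 line || return 1   # give up after 5 seconds or on EOF
    line=${line%$'\r'}                   # protocol lines end with \r\n
    [[ $line == "VERSION "* ]] || return 1
    printf '%s\n' "${line#VERSION }"
}

# memcached_version HOST PORT: the body runs in a subshell, so fd 3 is closed on return
memcached_version() (
    exec 3<>"/dev/tcp/$1/$2" || exit 1
    printf 'version\r\n' >&3
    parse_version <&3
)
```

To check the image locally, start a container with something like `docker run -d -e MEMCACHED_PORT=13612 -p 13612:13612 memcache:1.4.33`. Then `memcached_version 127.0.0.1 13612` should print 1.4.33. Once the cluster is deployed, the same call against 135.10.145.38 (memcached1) and the other hosts checks each instance. A non-zero exit status means the instance did not answer.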

8. Write the YAML file, mem-test.yaml:

```yaml
---
# extensions/v1beta1 Deployments were removed in Kubernetes 1.16; apps/v1 is available since 1.9
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  labels:
    service_name: memagent
  name: memagent
  namespace: core-1 # namespace: change to your own
spec:
  replicas: 1 # default number of replicas
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      service_name: memagent
  strategy:
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        service_name: memagent
      namespace: core-1 # namespace: change to your own
    spec:
      affinity:
        podAffinity: {}
        podAntiAffinity: {}
      containers:
      - env:
        - name: __ALAUDA_FULL_NAME__
        - name: __ALAUDA_OVER_COMMIT_MEM__
          value: "4096"
        - name: __ALAUDA_APP_NAME__
          value: memcache-121
        - name: __ALAUDA_SERVICE_NAME__
          value: memagent
        - name: CONNECTION_LIMIT
          value: "1024"
        - name: __ALAUDA_REGION_NAME__
        - name: __ALAUDA_CONTAINER_SIZE__
          value: "2.0"
        - name: __ALAUDA_OVER_COMMIT_CPU__
          value: "2048"
        - name: __ALAUDA_SERVICE_VERSION__
          value: "3058"
        - name: MEMCACHED_SERVERS
          value: 135.10.145.36:13612,135.10.145.37:13612,135.10.145.38:13612,135.10.145.43:13612 # change: IPs of memcached1-memcached4
        - name: BACKUP_SERVERS
          value: 135.10.145.39:13612 # change: IP of memcached5
        - name: MEMAGENT_PORT
          value: "12490"
        image: 135.10.145.25:5000/pro1/memagent:0.5 # change to the address of the memagent:0.5 image you pushed to your PaaS registry
        imagePullPolicy: Always
        name: memagent
        resources:
          limits:
            cpu: "0.5" # adjust the container size to your needs
            memory: 200Mi
          requests:
            cpu: "0.5"
            memory: 200Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      hostNetwork: true
      nodeSelector:
        ip: 135.10.145.39 # IP of the memagent node (plan your own)
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 10
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  generation: 5
  labels:
    service_name: memcached1
  name: memcached1
  namespace: core-1 # change the namespace
spec:
  replicas: 1
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      service_name: memcached1
  strategy:
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        service_name: memcached1
      namespace: core-1 # namespace: change to your own
    spec:
      affinity:
        podAffinity: {}
        podAntiAffinity: {}
      containers:
      - env:
        - name: MEMORY_LIMIT
          value: "128"
        - name: __ALAUDA_FULL_NAME__
        - name: __ALAUDA_OVER_COMMIT_MEM__
          value: "4096"
        - name: __ALAUDA_SERVICE_NAME__
          value: memcached1
        - name: __ALAUDA_APP_NAME__
          value: memcache-121
        - name: __ALAUDA_SERVICE_VERSION__
          value: "3052"
        - name: CONNECTION_LIMIT
          value: "1024"
        - name: __ALAUDA_OVER_COMMIT_CPU__
          value: "2048"
        - name: MEMCACHED_PORT
          value: "13612"
        - name: __ALAUDA_CONTAINER_SIZE__
          value: "2.0"
        - name: __ALAUDA_REGION_NAME__
        image: 135.10.145.25:5000/pro1/memcache:1.4.33 # change to the address of the memcache:1.4.33 image you pushed to your PaaS registry
        imagePullPolicy: Always
        name: memcached1
        resources:
          limits: # adjust the container size to your needs
            cpu: "0.5"
            memory: 200Mi
          requests:
            cpu: "0.5"
            memory: 200Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      hostNetwork: true
      nodeSelector:
        ip: 135.10.145.38 # change to the IP of memcached1
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 10
---
apiVersion: apps/v1 # the Deployments below follow the same pattern as memcached1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  generation: 5
  labels:
    service_name: memcached2
  name: memcached2
  namespace: core-1
spec:
  replicas: 1
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      service_name: memcached2
  strategy:
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        service_name: memcached2
      namespace: core-1
    spec:
      affinity:
        podAffinity: {}
        podAntiAffinity: {}
      containers:
      - env:
        - name: MEMORY_LIMIT
          value: "128"
        - name: __ALAUDA_FULL_NAME__
        - name: __ALAUDA_OVER_COMMIT_MEM__
          value: "4096"
        - name: __ALAUDA_SERVICE_NAME__
          value: memcached2
        - name: __ALAUDA_APP_NAME__
          value: memcache-121
        - name: __ALAUDA_SERVICE_VERSION__
          value: "3046"
        - name: CONNECTION_LIMIT
          value: "1024"
        - name: __ALAUDA_OVER_COMMIT_CPU__
          value: "2048"
        - name: MEMCACHED_PORT
          value: "13612"
        - name: __ALAUDA_CONTAINER_SIZE__
          value: "2.0"
        - name: __ALAUDA_REGION_NAME__
        image: 135.10.145.25:5000/pro1/memcache:1.4.33
        imagePullPolicy: Always
        name: memcached2
        resources:
          limits:
            cpu: "0.5"
            memory: 200Mi
          requests:
            cpu: "0.5"
            memory: 200Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      hostNetwork: true
      nodeSelector:
        ip: 135.10.145.37
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 10
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  generation: 5
  labels:
    service_name: memcached3
  name: memcached3
  namespace: core-1
spec:
  replicas: 1
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      service_name: memcached3
  strategy:
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        service_name: memcached3
      namespace: core-1
    spec:
      affinity:
        podAffinity: {}
        podAntiAffinity: {}
      containers:
      - env:
        - name: MEMORY_LIMIT
          value: "128"
        - name: __ALAUDA_FULL_NAME__
        - name: __ALAUDA_OVER_COMMIT_MEM__
          value: "4096"
        - name: __ALAUDA_SERVICE_NAME__
          value: memcached3
        - name: __ALAUDA_APP_NAME__
          value: memcache-121
        - name: __ALAUDA_SERVICE_VERSION__
          value: "3055"
        - name: CONNECTION_LIMIT
          value: "1024"
        - name: __ALAUDA_OVER_COMMIT_CPU__
          value: "2048"
        - name: MEMCACHED_PORT
          value: "13612"
        - name: __CREATE_TIME__
          value: "2018-05-04T04:48:46.073Z"
        - name: __ALAUDA_CONTAINER_SIZE__
          value: "2.0"
        - name: __ALAUDA_REGION_NAME__
        image: 135.10.145.25:5000/pro1/memcache:1.4.33
        imagePullPolicy: Always
        name: memcached3
        resources:
          limits:
            cpu: "0.5"
            memory: 200Mi
          requests:
            cpu: "0.5"
            memory: 200Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      hostNetwork: true
      nodeSelector:
        ip: 135.10.145.36
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 10
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  generation: 5
  labels:
    service_name: memcached4
  name: memcached4
  namespace: core-1
spec:
  replicas: 1
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      service_name: memcached4
  strategy:
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        service_name: memcached4
      namespace: core-1
    spec:
      affinity:
        podAffinity: {}
        podAntiAffinity: {}
      containers:
      - env:
        - name: MEMORY_LIMIT
          value: "128"
        - name: __ALAUDA_FULL_NAME__
        - name: __ALAUDA_OVER_COMMIT_MEM__
          value: "4096"
        - name: __ALAUDA_SERVICE_NAME__
          value: memcached4
        - name: __ALAUDA_APP_NAME__
          value: memcache-121
        - name: __ALAUDA_SERVICE_VERSION__
          value: "3043"
        - name: CONNECTION_LIMIT
          value: "1024"
        - name: __ALAUDA_OVER_COMMIT_CPU__
          value: "2048"
        - name: MEMCACHED_PORT
          value: "13612"
        - name: __CREATE_TIME__
          value: "2018-05-04T04:48:45.594Z"
        - name: __ALAUDA_CONTAINER_SIZE__
          value: "2.0"
        - name: __ALAUDA_REGION_NAME__
        image: 135.10.145.25:5000/pro1/memcache:1.4.33
        imagePullPolicy: Always
        name: memcached4
        resources:
          limits:
            cpu: "0.5"
            memory: 200Mi
          requests:
            cpu: "0.5"
            memory: 200Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      hostNetwork: true
      nodeSelector:
        ip: 135.10.145.43
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 10
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  generation: 5
  labels:
    service_name: memcached5
  name: memcached5
  namespace: core-1
spec:
  replicas: 1
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      service_name: memcached5
  strategy:
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        service_name: memcached5
      namespace: core-1
    spec:
      affinity:
        podAffinity: {}
        podAntiAffinity: {}
      containers:
      - env:
        - name: MEMORY_LIMIT
          value: "128"
        - name: __ALAUDA_FULL_NAME__
        - name: __ALAUDA_OVER_COMMIT_MEM__
          value: "4096"
        - name: __ALAUDA_SERVICE_NAME__
          value: memcached5
        - name: __ALAUDA_APP_NAME__
          value: memcache-121
        - name: __ALAUDA_SERVICE_VERSION__
          value: "3049"
        - name: CONNECTION_LIMIT
          value: "1024"
        - name: __ALAUDA_OVER_COMMIT_CPU__
          value: "2048"
        - name: MEMCACHED_PORT
          value: "13612"
        - name: __CREATE_TIME__
          value: "2018-05-04T04:48:45.687Z"
        - name: __ALAUDA_CONTAINER_SIZE__
          value: "2.0"
        - name: __ALAUDA_REGION_NAME__
        image: 135.10.145.25:5000/pro1/memcache:1.4.33
        imagePullPolicy: Always
        name: memcached5
        resources:
          limits:
            cpu: "0.5"
            memory: 200Mi
          requests:
            cpu: "0.5"
            memory: 200Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      hostNetwork: true
      nodeSelector:
        ip: 135.10.145.39
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 10
```

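Some settings in the YAML need to be adapted to your own environment. These are the namespace, the image addresses, MEMCACHED_SERVERS and BACKUP_SERVERS, and the nodeSelector IPs (the commented lines). Note that `nodeSelector` with `ip: ...` only matches nodes that carry an `ip` label with that value. If your nodes do not have this label yet, add it first, e.g. `kubectl label nodes <node-name> ip=135.10.145.38`.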
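The five memcached Deployments differ only in their name and node IP, plus the platform-specific `__ALAUDA_*` values. The sketch below renders a trimmed memcached Deployment from just those two inputs. It assumes the `__ALAUDA_*` variables and server-managed fields are not needed outside the author's platform, and that the image path and `ip` node label are the ones used in mem-test.yaml. `render_memcached` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical generator: print one trimmed memcached Deployment per (name, node IP).
NAMESPACE=${NAMESPACE:-core-1}
IMAGE=${IMAGE:-135.10.145.25:5000/pro1/memcache:1.4.33}

render_memcached() {
    local name=$1 ip=$2
    cat <<EOF
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
  namespace: $NAMESPACE
  labels:
    service_name: $name
spec:
  replicas: 1
  selector:
    matchLabels:
      service_name: $name
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
  template:
    metadata:
      labels:
        service_name: $name
    spec:
      hostNetwork: true
      nodeSelector:
        ip: "$ip"
      terminationGracePeriodSeconds: 10
      containers:
      - name: $name
        image: $IMAGE
        env:
        - name: MEMCACHED_PORT
          value: "13612"
        - name: MEMORY_LIMIT
          value: "128"
        - name: CONNECTION_LIMIT
          value: "1024"
        resources:
          requests: {cpu: "0.5", memory: 200Mi}
          limits: {cpu: "0.5", memory: 200Mi}
EOF
}

# memcached1..5 in the order of the architecture table
i=1
for ip in 135.10.145.38 135.10.145.37 135.10.145.36 135.10.145.43 135.10.145.39; do
    render_memcached "memcached$i" "$ip"
    i=$((i + 1))
done
```

Redirect the output to a file, for example `bash gen-memcached.sh > memcached.yaml`, and review it before applying. The memagent Deployment is left as written above, because its server lists are specific to the topology.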

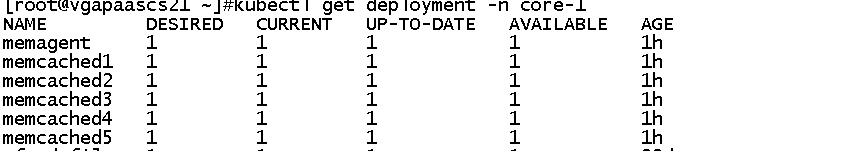

9. Deploy with kubectl apply:

`kubectl apply -f mem-test.yaml`

10. Check whether the deployment succeeded
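Below is a sketch of step 10. It waits for each Deployment in mem-test.yaml to finish rolling out, then lists the pods with the nodes they landed on. It assumes the core-1 namespace and the six Deployment names from the YAML. `check_rollout` is a hypothetical helper, and the 120-second timeout is an arbitrary choice. KUBECTL can point at another command or a shell function, which is handy for a dry run.

```bash
#!/bin/bash
# Sketch: wait for the memcache Deployments to roll out, then show where the pods run.
NAMESPACE=${NAMESPACE:-core-1}
DEPLOYMENTS=(memagent memcached1 memcached2 memcached3 memcached4 memcached5)

# check_rollout: returns non-zero if any Deployment did not become ready in time
check_rollout() {
    local kubectl=${KUBECTL:-kubectl} d failed=0
    for d in "${DEPLOYMENTS[@]}"; do
        "$kubectl" -n "$NAMESPACE" rollout status "deployment/$d" --timeout=120s || failed=1
    done
    # -o wide adds the node and pod IP columns; with hostNetwork the pod IP is the node IP
    "$kubectl" -n "$NAMESPACE" get pods -o wide
    return $failed
}
```

Run `check_rollout` after `kubectl apply`. Each pod's IP should match the host planned for it in the architecture table.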

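Summary:

That's it, the deployment is complete. Thanks for reading.

I deployed this in my company's environment, so the YAML contains some parameters you may not need, such as the `__ALAUDA_*` platform variables. Feel free to trim them.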