shell 脚本执行python脚本,连接hive提交数据写入表

- 使用说明

- 技术实现说明

- shell 脚本 try2.sh

- python脚本

使用说明

1.cd /opt/zy

在这个目录下以root用户权限执行命令

2.

在SAP查询的时候

Tcode:ZMMR0005

Purchase Org *

PO Creating:2017/3/1 (开始日期) 2017/6/31(结束日期)

Vendor

1000341

plant *

这样查询处理的结果代表 发货日期在20170301-20170631的所有记录,不管到达日期在那个月

从SAP导出数据表格,存为txt形式以”\t”分隔

用rz命令把导出的文件上传到/opt/zy目录下,

3.执行命令 注意参数必须严格符合XXXXXXXXtoYYYYYYYY的格式,代表startdate to enddate

example:

[root@slave1 zy]# bash try2.sh 20170301to20170632

4.去Hue里查询分析结果

SELECT * from saplifttime WHERE querypocredatestart=’XXXXXXXX’[and querypocredateend=’YYYYYYYY’];

5.如果想看原数据,去pcg.sap表,命令如下:

SELECT * from sap WHERE querypocredatestart=’20170301’;

技术实现说明

用shell 脚本调用python脚本

shell 脚本 try2.sh

#!/bin/sh

#echo $1

daterange=$1#赋值给daterange这个变量是因为后面截取字符串要用到,否则我不会写

python3 /opt/zy/runtask.py $1 #运行python脚本

startdate=${daterange:0:8} #截取查询的开始日期

#echo $startdate

enddate=${daterange:10:18} #截取查询的结束日期

#echo $enddate

sed -i '1,3d' /opt/zy/$1.txt #删除前三行,因为前三行是空行

sed 's/.\{1\}//' $1.txt>$1regular.txt #删除第一列,因为第一列是空列

hdfs dfs -put -f /opt/zy/$1regular.txt /user/hive/pcg-data/zhouyi6_files #把服务器上的本地文件上传到hadoop集群上

hive -e "LOAD DATA INPATH '/user/hive/pcg-data/zhouyi6_files/$1regular.txt' INTO TABLE pcg.sap partition(querypocredatestart=$startdate,querypocredateend=$enddate)" #把文件的数据载入表

rm $1.txt #删除本地原文件,只保留格式处理后的文件备注:

1.因为sed命令 不修改文件本身,所以要把修改后的结果存入新文件 +regular后缀的

2.sed -i,-i代表不把删除前三行后的结果显示在命令行上

3.hdfs dfs -put -f

-f option will overwrite the destination if it already exists.

4.运行这个脚本的前提是已经创建了pcg.sap表,建表语句如下:

CREATE TABLE SAP(`PO Cre Date` string,

`Vendor` string,

`WW Partner` string,

`Name of Vendor` string,

`PO Cre by` string,

`Purch Doc Type` string,

`Purch Order` string,

`PO Item` string,

`Deletion Indicator in PO Item` string,

`Request Shipment Day` string,

`Material` string,

`Short Text` string,

`Plant` string,

`Issuing Stor location` string,

`Receive Stor loaction` string,

`PO item change date` string,

`Delivery Priority` string,

`PO Qty` string,

`Total GR Qty` string,

`Still to be delivered` string,

`Delivery Note` string,

`Delivery Note Type (ASN or DN)` string,

`Delivery Note item` string,

`Delivery Note qty` string,

`Delivery Note Creation Date` string,

`Delivery Note ACK Date` string,

`Incoterm` string,

`Part Battery Indicator` string,

`BOL/AWBill` string,

`Purchase order type` string,

`Gr Date`string)

partitioned by (`queryPoCreDateStart` string,`queryPoCreDateEnd` string)

row format delimited fields terminated by "\t" stored as textfilepython脚本

import pandas as pd

import sys

data = pd.read_csv(sys.argv[1]+".txt", sep="\t")

#print(data.columns)

data['Delivery Note Creation Date']=pd.to_datetime(data['Delivery Note Creation Date'],format='%d.%m.%Y')

data['Gr Date']=pd.to_datetime(data['Gr Date'],format='%d.%m.%Y')

data=data.drop(data[data['Delivery Note Creation Date'].isnull()].index.tolist())#删除某列为空值所在的行

data=data.drop(data[data['Gr Date'].isnull()].index.tolist())#删除某列为空值所在的行

data['delta']=(data['Gr Date']-data['Delivery Note Creation Date']).apply(lambda x:x.days)#相差的时间

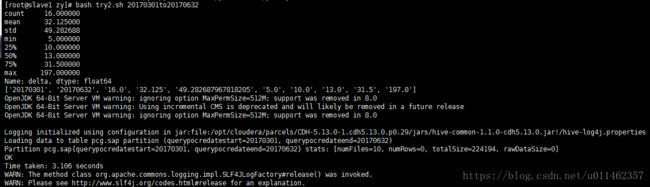

print(data['delta'].describe())

#sql_content="insert into table saplifttime values(%,%s,%s,%s,%s,%s,%s,%s,%s,%s)"%\

import hdfs

from impala.dbapi import connect

filename=sys.argv[1]+".txt"

hdfspath='/user/hive/pcg-data/zhouyi6_files'

client=hdfs.Client("http://10.100.208.222:50070")#50070

#8888是我登录WEB 操作界面时候的接口

#print(client.status("/user/zhouyi",strict=True))#查看路径信息

#print(client.list("/user/zhouyi"))#查看文件夹下的文件

#client.upload(hdfs_path=hdfspath,local_path="/opt/zy/"+filename,overwrite=True)

# overwrtie=True means Delete any uploaded files if an error occurs during theupload.

conn = connect(host='10.100.208.222', port=21050,database='pcg')

cur = conn.cursor()

stdate,edate=sys.argv[1].split("to")

#print(sys.argv[1])

cnt=str(data['delta'].describe()[0])

mean=str(data['delta'].describe()[1])

std=str(data['delta'].describe()[2])

mini=str(data['delta'].describe()[3])

twentyfive=str(data['delta'].describe()[4])

fifty=str(data['delta'].describe()[5])

seventyfive=str(data['delta'].describe()[6])

maxm=str(data['delta'].describe()[7])

args=[stdate,edate,cnt,mean,std,mini,twentyfive,fifty,seventyfive,maxm]

print(args)

#对的SQL

#sql_content="insert into table saplifttime values("+str(5555)+",'20200607','22','4.2','9.88','1','2','5','10','9999999999999')"

sql_content="insert into table saplifttime values(?,?,?,?,?,?,?,?,?,?)"

cur.execute(sql_content,args)#把运算结果插到表pcg.saplifttime里备注:

1.执行cur.execute的前提是已经建好pcg.saplifttime的表,建表语句如下:

CREATE TABLE SAPLifttime(querypocredatestart STRING,querypocredateend STRING,cnt STRING,mean STRING,std STRING,minimum STRING,25percent STRING,50percent STRING,75percent STRING,maxmum STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY "\t" STORED AS Textfile2.计算逻辑:

第一步:把字段“Delivery Note Creation Date”视为货物发出日期,如果为空则删除该行

第二步:把字段“Gr Date”视为货物到达日期,如果为空则删除该行

第三步:货物在途时间= Gr Date - Delivery Note Creation Date

第四步:对货物在途时间求cnt,mean,std,minimum,25%,50%,75%,maxmum

踩过的坑:

1.我的表字段都是STRING类型,values的占位符问题,我一开始试过%s,%d,总与python里对应的值格式不匹配。后来用?占位就好了

2.cur.excute(sql.args)这样写的好处在于看起来清晰,不用拼接特别长的sql字符串了,非常容易拼错