PyTorch

-

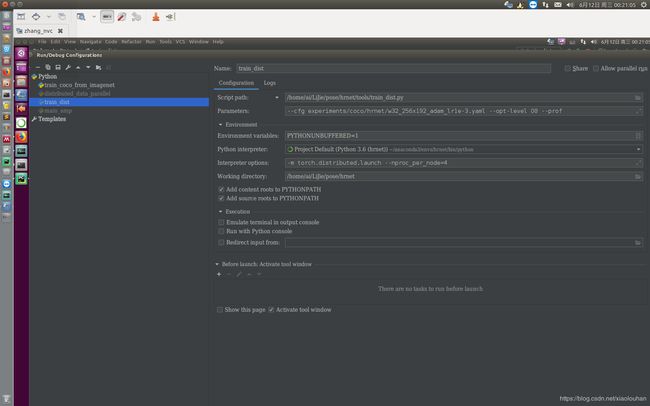

Apex: PyTorch distributed data parallel (DDP) [another official implementation]

python -m torch.distributed.launch --nproc_per_node=NUM_GPUS main_amp.py args...
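As a rough illustration of what a script started by this launcher has to do, here is a minimal sketch. It is not the Apex `main_amp.py` script: it swaps in PyTorch's built-in `torch.nn.parallel.DistributedDataParallel` instead of the Apex wrapper, and `parse_local_rank`, `main` and the `nn.Linear` model are hypothetical placeholders. It assumes one process per GPU with the NCCL backend.

```python
import argparse
import os

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel


def parse_local_rank(argv=None):
    """Return the GPU index the launcher assigned to this process."""
    parser = argparse.ArgumentParser()
    # Depending on the PyTorch version the flag is spelled --local_rank or
    # --local-rank; some launch modes set the LOCAL_RANK variable instead.
    parser.add_argument("--local_rank", "--local-rank", type=int,
                        default=int(os.environ.get("LOCAL_RANK", 0)))
    args, _unknown = parser.parse_known_args(argv)
    return args.local_rank


def main():
    local_rank = parse_local_rank()
    torch.cuda.set_device(local_rank)
    # env:// reads MASTER_ADDR, MASTER_PORT, RANK and WORLD_SIZE,
    # which the launcher exports for every worker process.
    dist.init_process_group(backend="nccl", init_method="env://")

    model = nn.Linear(10, 2).cuda(local_rank)  # placeholder model
    model = DistributedDataParallel(model, device_ids=[local_rank])

    out = model(torch.randn(4, 10, device=f"cuda:{local_rank}"))
    out.sum().backward()  # gradients are all-reduced across processes here
    dist.destroy_process_group()


# Only run the distributed part under the launcher, which sets RANK.
if __name__ == "__main__" and "RANK" in os.environ:
    main()
```

Saved under a name of your choice, the script is started with the same launch command shown above. Newer PyTorch releases also ship `torchrun`, which plays the same role as `torch.distributed.launch`.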

-

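Using torch.utils.bottleneck to find program bottlenecks in PyTorch

torch.utils.bottleneck is a tool that can be used as an initial step for debugging bottlenecks in your program. It summarizes runs of your script with the Python profiler and PyTorch's autograd profiler.

Run it from the command line:

python -m torch.utils.bottleneck /path/to/source/script.py [args]

where [args] are any number of arguments to script.py. Run the following for more usage instructions:

python -m torch.utils.bottleneck -h

Warning: Because your script will be profiled, make sure that it exits in a finite amount of time.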

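Warning: Because CUDA kernels are asynchronous, the cProfile output and the CPU-mode autograd profiler may not show correct timings when run against CUDA code. The reported CPU time is the time spent launching the kernels; it does not include the time the kernels spend executing on the GPU unless the operation synchronizes. Ops that do synchronize appear extremely expensive under regular CPU-mode profilers. In cases where the timings are incorrect, the CUDA-mode autograd profiler may help.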
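The effect described in this warning can also be seen with a hand-written timer. The following is a minimal sketch, not part of torch.utils.bottleneck: the helper name `timed` is hypothetical, and it assumes that calling `torch.cuda.synchronize()` before reading the clock is enough to include GPU execution time. On a machine without CUDA it degrades to a plain CPU timer.

```python
import time

import torch


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed_seconds)."""
    use_cuda = torch.cuda.is_available()
    if use_cuda:
        torch.cuda.synchronize()  # drain kernels queued before the measurement
    start = time.perf_counter()
    result = fn(*args)
    if use_cuda:
        torch.cuda.synchronize()  # wait for the kernels launched by fn
    return result, time.perf_counter() - start


device = "cuda" if torch.cuda.is_available() else "cpu"
a = torch.randn(256, 256, device=device)
product, seconds = timed(torch.mm, a, a)
print(f"matmul on {device}: {seconds:.6f} s")
```

Without the second `synchronize()` call, the measured time on a GPU would mostly reflect the kernel launch, which is the situation the warning describes.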

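Note: To decide which autograd profiler output to look at (CPU-only mode or CUDA mode), first check whether your script is CPU-bound ("CPU total time is much greater than CUDA total time"). If it is CPU-bound, the results of the CPU-mode autograd profiler will help. If, on the other hand, the script spends most of its time executing on the GPU, it makes sense to start looking for the responsible CUDA operators in the output of the CUDA-mode autograd profiler. Of course, reality is much more complicated, and depending on the part of the model you are evaluating, your script might not be at either extreme. If the profiler outputs do not help, you can try looking at the result of torch.autograd.profiler.emit_nvtx() with nvprof. Note, however, that the NVTX overhead is very high and often gives a heavily skewed timeline.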

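Warning: If you are profiling CUDA code, the first profiler that bottleneck runs (cProfile) will include the CUDA startup time (the cost of CUDA buffer allocation) in its time reporting. This should not matter if your bottlenecks make the code much slower than the CUDA startup time.

For more complicated uses of the profilers (such as the multi-GPU case), see https://docs.python.org/3/library/profile.html or torch.autograd.profiler.profile() for more information.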
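For reference, calling the autograd profiler directly might look like the sketch below. It assumes a CPU-only run; the small `nn.Sequential` model and the random input are placeholders, not anything from the notes above.

```python
import torch
import torch.nn as nn
from torch.autograd import profiler

# Placeholder model and input, used only to give the profiler some work.
model = nn.Sequential(nn.Linear(64, 128), nn.ReLU(), nn.Linear(128, 10))
inputs = torch.randn(32, 64)

# Record every autograd-level op in the forward and backward pass.
with profiler.profile() as prof:
    loss = model(inputs).sum()
    loss.backward()

# Aggregate the events per operator and show the most expensive ones.
averages = prof.key_averages()
print(averages.table(sort_by="cpu_time_total", row_limit=10))
```

The printed table lists one row per operator, which is the same kind of summary that torch.utils.bottleneck reports for the autograd profiler.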

-

Distributed

https://blog.csdn.net/m0_38008956/article/details/86559432

https://www.zhihu.com/question/59321480

https://pytorch.org/tutorials/intermediate/ddp_tutorial.html

-

Low GPU utilization

https://blog.csdn.net/u012743859/article/details/79446302

https://discuss.pytorch.org/t/how-to-best-use-dataparallel-with-multiple-models/39289

https://zhuanlan.zhihu.com/p/31558973

https://zhuanlan.zhihu.com/p/65002487

https://yinonglong.github.io/2017/06/23/%E5%85%B3%E4%BA%8E%E5%9C%A8GPU%E4%B8%8A%E8%B7%91%E6%A8%A1%E5%9E%8B%E7%9A%84%E4%B8%80%E4%BA%9B%E7%82%B9/

https://www.jiqizhixin.com/articles/2019-01-09-21?fbclid=IwAR1JmVDMEWiOhkYAgcYhmb1CoteqC7izy0Y5HaLuUpY3tS9roDQQQZVeL1w

- Multi-GPU

https://pytorch.org/tutorials/beginner/former_torchies/parallelism_tutorial.html

https://stackoverflow.com/questions/54216920/how-to-use-multiple-gpus-in-pytorch

https://www.jiqizhixin.com/articles/2018-10-17-11

https://www.zhihu.com/question/67726969

https://zhuanlan.zhihu.com/p/48035735

https://blog.csdn.net/weixin_40087578/article/details/87186613

https://mp.weixin.qq.com/s?__biz=MzI5MDUyMDIxNA==&mid=2247488916&idx=2&sn=591eaa0050ff40945e7a2a27d743e4a4

https://zhuanlan.zhihu.com/p/66145913

https://www.sohu.com/a/238177941_717210

https://github.com/NVIDIA/apex

https://github.com/NVIDIA/apex/blob/master/examples/imagenet/main_amp.py

https://oldpan.me/archives/pytorch-to-use-multiple-gpus

https://zpqiu.github.io/pytorch-multi-gpu/

https://www.jqr.com/article/000537

-

Check which GPU (or the CPU) the model and data are on

print(next(model.parameters()).is_cuda)  # True if the model is on a GPU

print(next(model.parameters()).device)  # cpu, or the specific GPU (e.g. cuda:0)

data = torch.ones((5, 10))

print(data.device)  # same as above

-

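Saving and loading models in PyTorch

torch.load() loads a model onto the device it was saved from, e.g. cuda:0. To keep the other GPUs' processes from taking up memory on the main GPU when loading in a multi-GPU setup, load it onto the CPU instead (map_location='cpu').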
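A minimal sketch of that pattern, assuming the checkpoint holds only a `state_dict`. The `nn.Linear` model is a placeholder, and an in-memory `io.BytesIO` buffer stands in for a checkpoint file so the example is self-contained.

```python
import io

import torch
import torch.nn as nn

model = nn.Linear(10, 2)  # placeholder model

# Save only the state_dict; the buffer stands in for a checkpoint file.
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)
buffer.seek(0)

# map_location="cpu" keeps every tensor on the CPU,
# whatever device it was saved from.
state_dict = torch.load(buffer, map_location="cpu")

restored = nn.Linear(10, 2)
restored.load_state_dict(state_dict)

# Move to the target device only after the weights are loaded.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
restored.to(device)
```

In a multi-GPU job each process would then move `restored` to its own device, so no process allocates memory on cuda:0 just to read the checkpoint.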

def load(f, map_location=None, pickle_module=pickle):
    """Loads an object saved with :func:`torch.save` from a file.

    :meth:`torch.load` uses Python's unpickling facilities but treats storages,
    which underlie tensors, specially. They are first deserialized on the
    CPU and are then moved to the device they were saved from. If this fails
    (e.g. because the run time system doesn't have certain devices), an exception
    is raised. However, storages can be dynamically remapped to an alternative
    set of devices using the `map_location` argument.

    If `map_location` is a callable, it will be called once for each serialized
    storage with two arguments: storage and location. The storage argument
    will be the initial deserialization of the storage, residing on the CPU.
    Each serialized storage has a location tag associated with it which
    identifies the device it was saved from, and this tag is the second
    argument passed to map_location. The builtin location tags are `'cpu'` for
    CPU tensors and `'cuda:device_id'` (e.g. `'cuda:2'`) for CUDA tensors.
    `map_location` should return either None or a storage. If `map_location` returns
    a storage, it will be used as the final deserialized object, already moved to
    the right device. Otherwise, :meth:`torch.load` will fall back to the default
    behavior, as if `map_location` wasn't specified.

    If `map_location` is a string, it should be a device tag, where all tensors
    should be loaded.

    Otherwise, if `map_location` is a dict, it will be used to remap location tags
    appearing in the file (keys), to ones that specify where to put the
    storages (values).

    User extensions can register their own location tags and tagging and
    deserialization methods using `register_package`.

    Args:
        f: a file-like object (has to implement read, readline, tell, and seek),
            or a string containing a file name
        map_location: a function, torch.device, string or a dict specifying how to remap storage
            locations
        pickle_module: module used for unpickling metadata and objects (has to
            match the pickle_module used to serialize file)

    .. note::
        When you call :meth:`torch.load()` on a file which contains GPU tensors, those tensors
        will be loaded to GPU by default. You can call `torch.load(.., map_location='cpu')`
        and then :meth:`load_state_dict` to avoid GPU RAM surge when loading a model checkpoint.

    Example:
        >>> torch.load('tensors.pt')
        # Load all tensors onto the CPU
        >>> torch.load('tensors.pt', map_location=torch.device('cpu'))
        # Load all tensors onto the CPU, using a function
        >>> torch.load('tensors.pt', map_location=lambda storage, loc: storage)
        # Load all tensors onto GPU 1
        >>> torch.load('tensors.pt', map_location=lambda storage, loc: storage.cuda(1))
        # Map tensors from GPU 1 to GPU 0
        >>> torch.load('tensors.pt', map_location={'cuda:1':'cuda:0'})
        # Load tensor from io.BytesIO object
        >>> with open('tensor.pt', 'rb') as f:
                buffer = io.BytesIO(f.read())
        >>> torch.load(buffer)
    """

-

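Move the model to the GPU first and only then define the optimizer. This avoids errors and means the optimizer also works on the GPU.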

model.cuda()

optimizer = optim.SGD(model.parameters(), lr = 0.01)

# Do optimization

Not this!

optimizer = optim.SGD(model.parameters(), lr = 0.01)

model.cuda()

# Do optimization
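A self-contained sketch of the recommended order, with a device fallback so it also runs without a GPU. The `nn.Linear` model, the random data and the MSE loss are placeholders chosen only for illustration.

```python
import torch
import torch.nn as nn
import torch.optim as optim

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# 1. Move the model to its device first.
model = nn.Linear(10, 1).to(device)
# 2. Only then build the optimizer from the model's parameters.
optimizer = optim.SGD(model.parameters(), lr=0.01)

inputs = torch.randn(8, 10, device=device)
targets = torch.randn(8, 1, device=device)

before = model.weight.detach().clone()
optimizer.zero_grad()
loss = nn.functional.mse_loss(model(inputs), targets)
loss.backward()
optimizer.step()

# The optimizer holds the very same parameter objects as the model.
same_params = all(
    p is q
    for p, q in zip(optimizer.param_groups[0]["params"], model.parameters())
)
```

Checking `same_params` is a quick way to confirm that the optimizer updates the tensors the model actually uses on its device.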