Flink Stream Windows Join

1. Overview

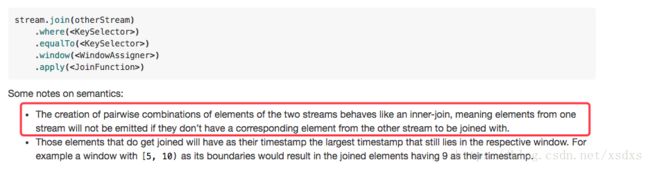

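This post is based on the "Joining" page of the Flink DataStream documentation. In my experience, though, that documentation is not very complete, so I am filling in some of the gaps here. It gives the general form of a window join as follows: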

```java
stream.join(otherStream)
    .where(<KeySelector>)
    .equalTo(<KeySelector>)
    .window(<WindowAssigner>)
    .apply(<JoinFunction>)
```

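The documentation also points out that the pairwise combination of elements from the two streams behaves like an inner join: an element from one stream is not emitted if the other stream has no corresponding element to join it with.

In other words, `join()` only supports inner-join semantics. However, the DataStream API also provides a `coGroup()` transformation, so left joins and outer joins can be built on top of it. This post first implements an inner join following the official material, then extends it to a left join and an outer join.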
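A minimal plain-Java model of that difference, looking at a single key inside a single window (`WindowJoinSemantics`, `joinPairs` and `coGroupLeft` are hypothetical names, not Flink API): `join()` hands the user function one matched pair at a time, so an element without a partner never reaches it, whereas `coGroup()` hands over both groups at once, even when one of them is empty.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Plain-Java model (no Flink) of ONE key inside ONE window.
 * Hypothetical helper class, only meant to illustrate join() vs coGroup() semantics.
 */
final class WindowJoinSemantics {

    /** join(): the user function sees every (left, right) pair, i.e. the Cartesian product. */
    static List<String> joinPairs(List<String> left, List<String> right) {
        List<String> out = new ArrayList<>();
        for (String l : left) {
            for (String r : right) {
                out.add(l + "|" + r);
            }
        }
        return out; // empty whenever either side is empty
    }

    /** coGroup(): the user function sees both groups and may keep unmatched left elements. */
    static List<String> coGroupLeft(List<String> left, List<String> right) {
        List<String> out = new ArrayList<>();
        for (String l : left) {
            if (right.isEmpty()) {
                out.add(l + "|null"); // left-join style padding
            }
            for (String r : right) {
                out.add(l + "|" + r);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(joinPairs(List.of("a1", "a2"), List.of("hangzhou"))); // [a1|hangzhou, a2|hangzhou]
        System.out.println(joinPairs(List.of("a3"), List.of()));                 // []
        System.out.println(coGroupLeft(List.of("a3"), List.of()));               // [a3|null]
    }
}
```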

2. Inner Join

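Here I use a tumbling-window inner join as the example; other window types work the same way. The elements of a window join must belong to the same window: elements in different windows are never joined. Below are the two data source classes and the main class I used: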
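A quick way to check which elements can meet is to compute each element's tumbling window. The sketch below is plain Java (no Flink), assumes non-negative timestamps and a zero window offset, and uses the timestamps from the two sources listed below; `TumblingWindowAssignment` and `windowStart` are hypothetical names.

```java
import java.util.List;

/** Plain-Java sketch of tumbling event-time window assignment (offset 0, size in ms). */
final class TumblingWindowAssignment {

    /** Start of the window [start, start + size) that contains the timestamp. */
    static long windowStart(long timestamp, long sizeMs) {
        return timestamp - (timestamp % sizeMs); // assumes timestamp >= 0
    }

    public static void main(String[] args) {
        long size = 10_000L; // windowSize = 10 seconds
        List<Long> left = List.of(1000000050000L, 1000000054000L, 1000000079900L,
                1000000115000L, 1000000100000L, 1000000108000L);
        List<Long> right = List.of(1000000059000L, 1000000105000L);
        for (long ts : left) {
            long start = windowStart(ts, size);
            System.out.println("left  " + ts + " -> [" + start + ", " + (start + size) + ")");
        }
        for (long ts : right) {
            long start = windowStart(ts, size);
            System.out.println("right " + ts + " -> [" + start + ", " + (start + size) + ")");
        }
    }
}
```

With a 10-second window, `a1`, `a2` and the right-side `hangzhou` element all land in [1000000050000, 1000000060000), and `b5`, `b6` and `beijing` land in [1000000100000, 1000000110000), while `a3` and `a4` land in windows that contain no right-side `a` element.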

2.1 StreamDataSource

```java
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.streaming.api.functions.source.RichParallelSourceFunction;

/**
 * Created by yidxue on 2018/9/12
 * Left-hand test source: emits (key, value, event timestamp) tuples, one per second.
 */
public class StreamDataSource extends RichParallelSourceFunction<Tuple3<String, String, Long>> {

    private volatile boolean running = true;

    @Override
    public void run(SourceContext<Tuple3<String, String, Long>> ctx) throws InterruptedException {
        @SuppressWarnings("unchecked")
        Tuple3<String, String, Long>[] elements = new Tuple3[]{
                Tuple3.of("a", "1", 1000000050000L),
                Tuple3.of("a", "2", 1000000054000L),
                Tuple3.of("a", "3", 1000000079900L),
                Tuple3.of("a", "4", 1000000115000L),
                Tuple3.of("b", "5", 1000000100000L),
                Tuple3.of("b", "6", 1000000108000L)
        };

        int count = 0;
        while (running && count < elements.length) {
            // Emit one element per second; the event time is carried in field f2
            ctx.collect(elements[count]);
            count++;
            Thread.sleep(1000);
        }
    }

    @Override
    public void cancel() {
        running = false;
    }
}
```

2.2 StreamDataSource1

```java
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.streaming.api.functions.source.RichParallelSourceFunction;

/**
 * Created by yidxue on 2018/9/12
 * Right-hand test source: emits (key, city, event timestamp) tuples, one per second.
 */
public class StreamDataSource1 extends RichParallelSourceFunction<Tuple3<String, String, Long>> {

    private volatile boolean running = true;

    @Override
    public void run(SourceContext<Tuple3<String, String, Long>> ctx) throws InterruptedException {
        @SuppressWarnings("unchecked")
        Tuple3<String, String, Long>[] elements = new Tuple3[]{
                Tuple3.of("a", "hangzhou", 1000000059000L),
                Tuple3.of("b", "beijing", 1000000105000L)
        };

        int count = 0;
        while (running && count < elements.length) {
            // Emit one element per second; the event time is carried in field f2
            ctx.collect(elements[count]);
            count++;
            Thread.sleep(1000);
        }
    }

    @Override
    public void cancel() {
        running = false;
    }
}
```

2.3 Main class: FlinkTumblingWindowsInnerJoinDemo

```java
import util.source.StreamDataSource1;
import util.source.StreamDataSource;
import org.apache.flink.api.common.functions.JoinFunction;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.api.java.tuple.Tuple5;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;

/**
 * Created by yidxue on 2018/9/12
 */
public class FlinkTumblingWindowsInnerJoinDemo {

    public static void main(String[] args) throws Exception {
        int windowSize = 10;   // tumbling window size in seconds
        long delay = 5100L;    // maximum out-of-orderness in milliseconds

        final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);
        env.setParallelism(1);

        // Data sources
        DataStream<Tuple3<String, String, Long>> leftSource = env.addSource(new StreamDataSource()).name("Left Source");
        DataStream<Tuple3<String, String, Long>> rightSource = env.addSource(new StreamDataSource1()).name("Right Source");

        // Timestamps and watermarks: the event time is field f2
        DataStream<Tuple3<String, String, Long>> leftStream = leftSource.assignTimestampsAndWatermarks(
                new BoundedOutOfOrdernessTimestampExtractor<Tuple3<String, String, Long>>(Time.milliseconds(delay)) {
                    @Override
                    public long extractTimestamp(Tuple3<String, String, Long> element) {
                        return element.f2;
                    }
                });

        DataStream<Tuple3<String, String, Long>> rightStream = rightSource.assignTimestampsAndWatermarks(
                new BoundedOutOfOrdernessTimestampExtractor<Tuple3<String, String, Long>>(Time.milliseconds(delay)) {
                    @Override
                    public long extractTimestamp(Tuple3<String, String, Long> element) {
                        return element.f2;
                    }
                });

        // Window join: only pairs with the same key in the same window are emitted
        leftStream.join(rightStream)
                .where(new LeftSelectKey())
                .equalTo(new RightSelectKey())
                .window(TumblingEventTimeWindows.of(Time.seconds(windowSize)))
                .apply(new JoinFunction<Tuple3<String, String, Long>, Tuple3<String, String, Long>, Tuple5<String, String, String, Long, Long>>() {
                    @Override
                    public Tuple5<String, String, String, Long, Long> join(Tuple3<String, String, Long> first, Tuple3<String, String, Long> second) {
                        return new Tuple5<>(first.f0, first.f1, second.f1, first.f2, second.f2);
                    }
                })
                .print();

        env.execute("TimeWindowDemo");
    }

    public static class LeftSelectKey implements KeySelector<Tuple3<String, String, Long>, String> {
        @Override
        public String getKey(Tuple3<String, String, Long> w) {
            return w.f0;
        }
    }

    public static class RightSelectKey implements KeySelector<Tuple3<String, String, Long>, String> {
        @Override
        public String getKey(Tuple3<String, String, Long> w) {
            return w.f0;
        }
    }
}
```

2.4 Parameters and output

With the parameters `int windowSize = 10; long delay = 5100L;`, the output is:

```
(a,1,hangzhou,1000000050000,1000000059000)
(a,2,hangzhou,1000000054000,1000000059000)
(b,5,beijing,1000000100000,1000000105000)
(b,6,beijing,1000000108000,1000000105000)
```

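This is easy to understand, because only elements in the same window can be joined: `(a,3,...)` and `(a,4,...)` do not appear because no right-side element with key `a` falls into their windows. Note, however, that with the parameters `int windowSize = 10; long delay = 5000L;` the output becomes: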

```
(a,1,hangzhou,1000000050000,1000000059000)
(a,2,hangzhou,1000000054000,1000000059000)
```

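Why? You may want to read my earlier article first: Flink 中 timeWindow 滚动窗口边界和数据延迟问题调研 (an investigation of tumbling timeWindow boundaries and late data in Flink). In short, by the time `b5` and `b6` arrive, the watermark has already reached the end of their window, so they are dropped as late data: after `a4` (1000000115000) is read, the left stream's watermark becomes 1000000115000 - 5000 = 1000000110000, which closes the window [1000000100000, 1000000110000). With `delay = 5100L` the watermark only reaches 1000000109900, so that window is still open when `b5` and `b6` arrive. Overall, the inner-join output matches expectations.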
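To make the arithmetic concrete, here is a simplified plain-Java model (the class `LatenessCheck` and its methods are hypothetical, not Flink API). It assumes that the watermark is the largest timestamp seen minus `delay`, which is how I understand `BoundedOutOfOrdernessTimestampExtractor` to behave, and that a window with zero allowed lateness counts as closed once the watermark reaches its last timestamp (`end - 1`). It also ignores that the join operator takes the minimum watermark of its two inputs; I am assuming the right-hand source, which emits only two elements, has already finished by then.

```java
/**
 * Simplified model (assumptions, not Flink code):
 *  - watermark = max timestamp seen - delay
 *  - a window [start, end) with zero allowed lateness is closed once watermark >= end - 1
 */
final class LatenessCheck {

    static long watermark(long maxTimestampSeen, long delayMs) {
        return maxTimestampSeen - delayMs;
    }

    static boolean windowClosed(long windowEnd, long watermark) {
        return windowEnd - 1 <= watermark; // end - 1 is the window's last timestamp
    }

    public static void main(String[] args) {
        long maxSeen = 1000000115000L;   // a4, read before b5 and b6
        long windowEnd = 1000000110000L; // end of [1000000100000, 1000000110000)
        for (long delay : new long[]{5000L, 5100L}) {
            long wm = watermark(maxSeen, delay);
            System.out.println("delay=" + delay + " watermark=" + wm
                    + " b5/b6 dropped as late: " + windowClosed(windowEnd, wm));
        }
    }
}
```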

3. Left Outer Join

This is not covered in the official Flink streaming documentation, so here is an example based on `coGroup()`. I'll go straight to the code:

3.1 Code

```java
import util.source.StreamDataSource1;
import util.source.StreamDataSource;
import org.apache.flink.api.common.functions.CoGroupFunction;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.api.java.tuple.Tuple5;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;

/**
 * Created by yidxue on 2018/9/12
 */
public class FlinkTumblingWindowsLeftJoinDemo {

    public static void main(String[] args) throws Exception {
        int windowSize = 10;   // tumbling window size in seconds
        long delay = 5100L;    // maximum out-of-orderness in milliseconds

        final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);
        env.setParallelism(1);

        // Data sources
        DataStream<Tuple3<String, String, Long>> leftSource = env.addSource(new StreamDataSource()).name("Left Source");
        DataStream<Tuple3<String, String, Long>> rightSource = env.addSource(new StreamDataSource1()).name("Right Source");

        // Timestamps and watermarks: the event time is field f2
        DataStream<Tuple3<String, String, Long>> leftStream = leftSource.assignTimestampsAndWatermarks(
                new BoundedOutOfOrdernessTimestampExtractor<Tuple3<String, String, Long>>(Time.milliseconds(delay)) {
                    @Override
                    public long extractTimestamp(Tuple3<String, String, Long> element) {
                        return element.f2;
                    }
                });

        DataStream<Tuple3<String, String, Long>> rightStream = rightSource.assignTimestampsAndWatermarks(
                new BoundedOutOfOrdernessTimestampExtractor<Tuple3<String, String, Long>>(Time.milliseconds(delay)) {
                    @Override
                    public long extractTimestamp(Tuple3<String, String, Long> element) {
                        return element.f2;
                    }
                });

        // Left join via coGroup: the function receives both groups, even if one is empty
        leftStream.coGroup(rightStream)
                .where(new LeftSelectKey()).equalTo(new RightSelectKey())
                .window(TumblingEventTimeWindows.of(Time.seconds(windowSize)))
                .apply(new LeftJoin())
                .print();

        env.execute("TimeWindowDemo");
    }

    public static class LeftJoin implements CoGroupFunction<Tuple3<String, String, Long>, Tuple3<String, String, Long>, Tuple5<String, String, String, Long, Long>> {
        @Override
        public void coGroup(Iterable<Tuple3<String, String, Long>> leftElements,
                            Iterable<Tuple3<String, String, Long>> rightElements,
                            Collector<Tuple5<String, String, String, Long, Long>> out) {
            // Called once per key and window
            for (Tuple3<String, String, Long> leftElem : leftElements) {
                boolean hadElements = false;
                for (Tuple3<String, String, Long> rightElem : rightElements) {
                    out.collect(new Tuple5<>(leftElem.f0, leftElem.f1, rightElem.f1, leftElem.f2, rightElem.f2));
                    hadElements = true;
                }
                if (!hadElements) {
                    // No match in this window: pad the right side with placeholder values
                    out.collect(new Tuple5<>(leftElem.f0, leftElem.f1, "null", leftElem.f2, -1L));
                }
            }
        }
    }

    public static class LeftSelectKey implements KeySelector<Tuple3<String, String, Long>, String> {
        @Override
        public String getKey(Tuple3<String, String, Long> w) {
            return w.f0;
        }
    }

    public static class RightSelectKey implements KeySelector<Tuple3<String, String, Long>, String> {
        @Override
        public String getKey(Tuple3<String, String, Long> w) {
            return w.f0;
        }
    }
}
```

3.2 Output and notes

```
(a,1,hangzhou,1000000050000,1000000059000)
(a,2,hangzhou,1000000054000,1000000059000)
(a,3,null,1000000079900,-1)
(b,5,beijing,1000000100000,1000000105000)
(b,6,beijing,1000000108000,1000000105000)
(a,4,null,1000000115000,-1)
```

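This output also matches expectations: `a3` and `a4` have no matching right-side element in their windows, so they are emitted with `null` and `-1`. Again, you can change the parameters to `int windowSize = 10; long delay = 5000L;` and compare the results.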
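The left-join semantics can be checked without running Flink. The following plain-Java model (hypothetical class `LeftJoinModel`) buckets both sides by (window start, key), which is what the keyed tumbling window does, and applies the same emit rule as `LeftJoin`. It deliberately ignores watermarks and late data, so it corresponds to the `delay = 5100L` run in which nothing is dropped; the row order is deterministic here but need not match the order printed by Flink.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java model of the windowed left join above (no Flink, no watermarks). */
final class LeftJoinModel {

    static final class Row {
        final String key; final String value; final long ts;
        Row(String key, String value, long ts) { this.key = key; this.value = value; this.ts = ts; }
    }

    // Same data as StreamDataSource (left) and StreamDataSource1 (right)
    static final List<Row> LEFT = List.of(
            new Row("a", "1", 1000000050000L), new Row("a", "2", 1000000054000L),
            new Row("a", "3", 1000000079900L), new Row("a", "4", 1000000115000L),
            new Row("b", "5", 1000000100000L), new Row("b", "6", 1000000108000L));
    static final List<Row> RIGHT = List.of(
            new Row("a", "hangzhou", 1000000059000L), new Row("b", "beijing", 1000000105000L));

    /** "windowStart/key" identifies one coGroup call (assumes non-negative timestamps). */
    static String bucket(Row row, long windowMs) {
        return (row.ts - row.ts % windowMs) + "/" + row.key;
    }

    static List<String> leftJoin(List<Row> left, List<Row> right, long windowMs) {
        Map<String, List<Row>> rightGroups = new LinkedHashMap<>();
        for (Row r : right) {
            rightGroups.computeIfAbsent(bucket(r, windowMs), k -> new ArrayList<>()).add(r);
        }
        List<String> out = new ArrayList<>();
        for (Row l : left) {
            List<Row> matches = rightGroups.getOrDefault(bucket(l, windowMs), List.of());
            if (matches.isEmpty()) {
                out.add("(" + l.key + "," + l.value + ",null," + l.ts + ",-1)");
            }
            for (Row r : matches) {
                out.add("(" + l.key + "," + l.value + "," + r.value + "," + l.ts + "," + r.ts + ")");
            }
        }
        return out;
    }

    public static void main(String[] args) {
        leftJoin(LEFT, RIGHT, 10_000L).forEach(System.out::println);
    }
}
```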

4. Full Outer Join

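This is also implemented with `coGroup()`, but with a limitation: my code below requires that neither stream contains more than one element with the same join key in the same window, i.e. the join-field values must be unique. The reason is that each side is collected into a `HashMap` keyed by the join key, so a later element would overwrite an earlier one. The example uses two data source classes, StreamDataSource1 and StreamDataSource2, plus a POJO class, Element. StreamDataSource1 is shown above; Element and StreamDataSource2 are shown below: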

4.1 StreamDataSource2

```java
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.streaming.api.functions.source.RichParallelSourceFunction;
import org.apache.flink.streaming.api.functions.source.SourceFunction;

/**
 * Created by yidxue on 2018/9/12
 * Right-hand source for the full outer join demo.
 */
public class StreamDataSource2 extends RichParallelSourceFunction<Tuple3<String, String, Long>> {

    private volatile boolean running = true;

    @Override
    public void run(SourceFunction.SourceContext<Tuple3<String, String, Long>> ctx) throws InterruptedException {
        @SuppressWarnings("unchecked")
        Tuple3<String, String, Long>[] elements = new Tuple3[]{
                Tuple3.of("a", "beijing", 1000000058000L),
                Tuple3.of("c", "beijing", 1000000055000L),
                Tuple3.of("d", "beijing", 1000000106000L)
        };

        int count = 0;
        while (running && count < elements.length) {
            // Emit one element per second; the event time is carried in field f2
            ctx.collect(elements[count]);
            count++;
            Thread.sleep(1000);
        }
    }

    @Override
    public void cancel() {
        running = false;
    }
}
```

4.2 Element class

The Element class used by the main class:

```java
/**
 * Holds one side of an outer-join row: the value (name) and its event timestamp (number).
 */
public class Element {

    /** Public field. */
    public String name;

    /** Public field. */
    public long number;

    public Element() {
    }

    public Element(String name, long number) {
        this.name = name;
        this.number = number;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public long getNumber() {
        return number;
    }

    // Parameter type matches the long field (it was int before)
    public void setNumber(long number) {
        this.number = number;
    }

    @Override
    public String toString() {
        return this.name + ":" + this.number;
    }
}
```

4.3 Main class

```java
import util.bean.Element;
import util.source.StreamDataSource1;
import util.source.StreamDataSource2;
import org.apache.flink.api.common.functions.CoGroupFunction;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.api.java.tuple.Tuple5;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;

import java.util.HashMap;
import java.util.HashSet;

/**
 * Created by yidxue on 2018/9/12
 * This outer join requires the join keys on each side to be unique (deduplicated).
 */
public class FlinkTumblingWindowsOuterJoinDemo {

    public static void main(String[] args) throws Exception {
        int windowSize = 10;   // tumbling window size in seconds
        long delay = 5100L;    // maximum out-of-orderness in milliseconds

        final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);
        env.setParallelism(1);

        // Data sources
        DataStream<Tuple3<String, String, Long>> leftSource = env.addSource(new StreamDataSource1()).name("Left Source");
        DataStream<Tuple3<String, String, Long>> rightSource = env.addSource(new StreamDataSource2()).name("Right Source");

        // Timestamps and watermarks: the event time is field f2
        DataStream<Tuple3<String, String, Long>> leftStream = leftSource.assignTimestampsAndWatermarks(
                new BoundedOutOfOrdernessTimestampExtractor<Tuple3<String, String, Long>>(Time.milliseconds(delay)) {
                    @Override
                    public long extractTimestamp(Tuple3<String, String, Long> element) {
                        return element.f2;
                    }
                });

        DataStream<Tuple3<String, String, Long>> rightStream = rightSource.assignTimestampsAndWatermarks(
                new BoundedOutOfOrdernessTimestampExtractor<Tuple3<String, String, Long>>(Time.milliseconds(delay)) {
                    @Override
                    public long extractTimestamp(Tuple3<String, String, Long> element) {
                        return element.f2;
                    }
                });

        // Full outer join via coGroup
        leftStream.coGroup(rightStream)
                .where(new LeftSelectKey()).equalTo(new RightSelectKey())
                .window(TumblingEventTimeWindows.of(Time.seconds(windowSize)))
                .apply(new OuterJoin())
                .print();

        env.execute("TimeWindowDemo");
    }

    public static class OuterJoin implements CoGroupFunction<Tuple3<String, String, Long>, Tuple3<String, String, Long>, Tuple5<String, String, String, Long, Long>> {
        @Override
        public void coGroup(Iterable<Tuple3<String, String, Long>> leftElements,
                            Iterable<Tuple3<String, String, Long>> rightElements,
                            Collector<Tuple5<String, String, String, Long, Long>> out) {
            // Keyed by the join key: a later element with the same key overwrites an earlier one,
            // which is why the keys on each side must be unique
            HashMap<String, Element> left = new HashMap<>();
            HashMap<String, Element> right = new HashMap<>();
            HashSet<String> set = new HashSet<>();

            for (Tuple3<String, String, Long> leftElem : leftElements) {
                set.add(leftElem.f0);
                left.put(leftElem.f0, new Element(leftElem.f1, leftElem.f2));
            }
            for (Tuple3<String, String, Long> rightElem : rightElements) {
                set.add(rightElem.f0);
                right.put(rightElem.f0, new Element(rightElem.f1, rightElem.f2));
            }

            // Missing side is padded with "null" / -1
            for (String key : set) {
                Element leftElem = left.getOrDefault(key, new Element("null", -1L));
                Element rightElem = right.getOrDefault(key, new Element("null", -1L));
                out.collect(new Tuple5<>(key, leftElem.getName(), rightElem.getName(), leftElem.getNumber(), rightElem.getNumber()));
            }
        }
    }

    public static class LeftSelectKey implements KeySelector<Tuple3<String, String, Long>, String> {
        @Override
        public String getKey(Tuple3<String, String, Long> w) {
            return w.f0;
        }
    }

    public static class RightSelectKey implements KeySelector<Tuple3<String, String, Long>, String> {
        @Override
        public String getKey(Tuple3<String, String, Long> w) {
            return w.f0;
        }
    }
}
```

4.4 Output and notes

The output is:

```
(a,hangzhou,beijing,1000000059000,1000000058000)
(c,null,beijing,-1,1000000055000)
(b,beijing,null,1000000105000,-1)
(d,null,beijing,-1,1000000106000)
```

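This is a rather clumsy implementation of a full outer join, but the results match expectations. It could probably be improved; this will do for now.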
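One possible way to drop the uniqueness restriction, sketched in plain Java rather than as a drop-in `CoGroupFunction` (the class `FullOuterJoinGroup` and its sample data are hypothetical): because a keyed, windowed `coGroup()` is called once per key and window, all elements in both groups already share the same key, so the `HashMap`/`HashSet` bookkeeping is not strictly needed. The function can emit the Cartesian product when both groups are non-empty and pad the non-empty side otherwise. In a real `CoGroupFunction` the two lists would be the two `Iterable`s and `out.add(...)` would become `out.collect(...)`.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch of a full outer join for ONE key in ONE window that tolerates duplicates. */
final class FullOuterJoinGroup {

    static final class Elem {
        final String value; final long ts;
        Elem(String value, long ts) { this.value = value; this.ts = ts; }
    }

    static List<String> outerJoin(String key, List<Elem> left, List<Elem> right) {
        List<String> out = new ArrayList<>();
        if (right.isEmpty()) {
            for (Elem l : left) out.add(row(key, l.value, "null", l.ts, -1L));      // left only
        } else if (left.isEmpty()) {
            for (Elem r : right) out.add(row(key, "null", r.value, -1L, r.ts));     // right only
        } else {
            for (Elem l : left) {
                for (Elem r : right) out.add(row(key, l.value, r.value, l.ts, r.ts)); // every pair
            }
        }
        return out;
    }

    static String row(String key, String leftValue, String rightValue, long leftTs, long rightTs) {
        return "(" + key + "," + leftValue + "," + rightValue + "," + leftTs + "," + rightTs + ")";
    }

    public static void main(String[] args) {
        // Hypothetical data: two left elements with key "a" in the same window
        List<Elem> left = List.of(new Elem("hangzhou", 1000000051000L), new Elem("shanghai", 1000000052000L));
        List<Elem> right = List.of(new Elem("beijing", 1000000058000L));
        outerJoin("a", left, right).forEach(System.out::println);
        outerJoin("c", List.of(), List.of(new Elem("beijing", 1000000055000L))).forEach(System.out::println);
    }
}
```

With the `HashMap` version only one of the two `a` rows in `main` would survive; this version emits both.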