【kubernetes/k8s source code analysis】kube-proxy source code analysis

This article was re-edited on November 15, 2018. It is based on version 1.12 and covers IPVS.

Preface


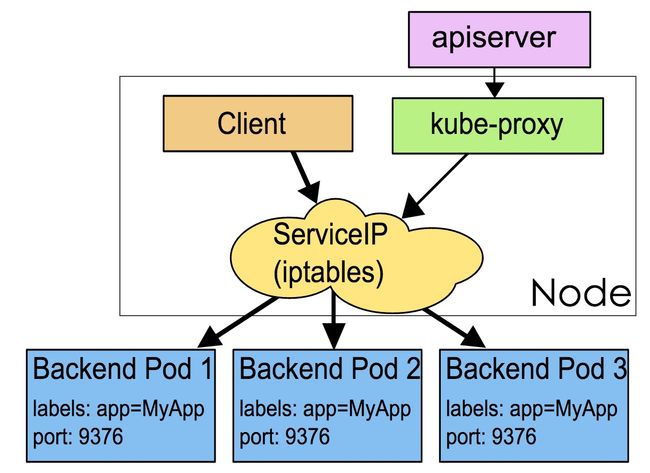

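kube-proxy manages the Endpoints of each Service. A Service exposes a virtual IP (the Cluster IP), and inside the cluster, ClusterIP:Port reaches the Pods behind that Service. A Service is an abstraction over a set of Pods chosen by a label selector.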

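The main job of kube-proxy is to implement Services. A Service also solves another problem: the backend Pods of a service may change IPs as they are created and destroyed, and the Service gives the service a stable IP regardless of changes in the backend Endpoints.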

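kube-proxy watches Service and Endpoints data stored in etcd (indirectly, through the apiserver API) and keeps the iptables rules on the node up to date in real time.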

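In iptables mode, kube-proxy relies entirely on kernel iptables to implement Service proxying and load balancing. It forwards traffic with iptables NAT, which has a performance cost: if the cluster has tens of thousands of Services/Endpoints, the iptables rule set on every Node becomes very large.

At present, most deployments do not use kube-proxy directly as their service proxy; they develop their own or use an Ingress Controller.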

Process:

```shell
/usr/sbin/kube-proxy \
  --bind-address=10.12.51.186 \
  --hostname-override=10.12.51.186 \
  --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \
  --logtostderr=false --log-dir=/var/log/kubernetes/kube-proxy --v=4
```

iptables

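Which chains does iptables have?

- INPUT: packets entering the host (destined for a local process)

- OUTPUT: packets leaving the host (generated by a local process)

- PREROUTING: before the routing decision

- FORWARD: packets forwarded through the host

- POSTROUTING: after the routing decision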

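Which tables does iptables provide?

- filter: packet filtering; kernel module iptable_filter

- nat: network address translation; kernel module iptable_nat

- mangle: takes packets apart, modifies them (e.g. TOS, TTL, marks), and re-encapsulates them; kernel module iptable_mangle

- raw: lets packets bypass connection tracking, the mechanism the nat table depends on; kernel module iptable_raw

- security: a newer table (available on CentOS 7) for mandatory access control rules such as SELinux; not covered here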

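Actions

- In iptables, these are called targets.

- ACCEPT: let the packet through.

- DROP: silently discard the packet. Nothing is sent back, so the client only reacts when the connection times out.

- REJECT: reject the packet and send an error response back to the client.

- SNAT: source NAT; lets hosts on a private network share one public IP to access the Internet.

- MASQUERADE: a special form of SNAT for dynamic IP addresses that may change.

- DNAT: destination NAT; lets a server on a private network receive requests from the public network.

- REDIRECT: port mapping on the local host.

- LOG: record the packet in the kernel log (on CentOS this ends up in /var/log/messages) without otherwise acting on it.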

Packets destined for a local process: PREROUTING --> INPUT

Packets forwarded by this host: PREROUTING --> FORWARD --> POSTROUTING

Packets sent by a local process (typically response packets): OUTPUT --> POSTROUTING

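connection tracking

Many iptables features rely on connection tracking. After a packet has been processed by the raw table and before the mangle table, the connection tracking step runs. All tracked connections are kept in /proc/net/ip_conntrack (/proc/net/nf_conntrack on newer kernels). Through connection tracking, iptables knows the state of the connection a packet belongs to:

- NEW: the packet starts a new connection, or belongs to one that has not yet seen traffic in both directions

- ESTABLISHED: the packet belongs to a connection that has seen traffic in both directions

- RELATED: the packet starts a new connection that is related to an existing one (for example an FTP data connection or an ICMP error)

- INVALID: the packet cannot be associated with any known connection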
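A toy model of the NEW/ESTABLISHED distinction: the hypothetical tracker below keys connections by their original-direction 5-tuple and treats a packet as ESTABLISHED once the connection has seen a reply. It is a simplified sketch, not how netfilter stores its entries, and it leaves out RELATED and INVALID, which need protocol helpers and packet validation.

```go
package main

import "fmt"

// flow is a simplified 5-tuple identifying one direction of a connection.
type flow struct {
	proto            string
	srcIP, dstIP     string
	srcPort, dstPort uint16
}

// reverse returns the tuple that reply packets of this flow carry.
func (f flow) reverse() flow {
	return flow{f.proto, f.dstIP, f.srcIP, f.dstPort, f.srcPort}
}

// tracker is a toy connection table keyed by the original-direction tuple.
// The value records whether a reply has been seen.
type tracker struct {
	conns map[flow]bool
}

func newTracker() *tracker { return &tracker{conns: make(map[flow]bool)} }

// classify returns the conntrack state for a packet and updates the table.
// Only NEW and ESTABLISHED are modeled.
func (t *tracker) classify(f flow) string {
	if replied, ok := t.conns[f]; ok {
		// Original direction: stays NEW until a reply has been seen.
		if replied {
			return "ESTABLISHED"
		}
		return "NEW"
	}
	if _, ok := t.conns[f.reverse()]; ok {
		// Reply direction: the connection has now seen traffic both ways.
		t.conns[f.reverse()] = true
		return "ESTABLISHED"
	}
	t.conns[f] = false // first packet of an unknown connection
	return "NEW"
}

func main() {
	t := newTracker()
	req := flow{"tcp", "10.0.0.1", "10.0.0.2", 40000, 80}
	fmt.Println(t.classify(req))           // NEW
	fmt.Println(t.classify(req.reverse())) // ESTABLISHED
	fmt.Println(t.classify(req))           // ESTABLISHED
}
```

This matters for kube-proxy: its filter-table jumps (section 7.2) match only --ctstate NEW, and the nat table is only consulted for the first packet of a connection; later packets reuse the NAT decision stored in the conntrack entry.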

1. main function

- NewProxyCommand builds a cobra.Command

- Initializes logging; the default flush interval is 30 seconds

```go
func main() {
	command := app.NewProxyCommand()

	// TODO: once we switch everything over to Cobra commands, we can go back to calling
	// utilflag.InitFlags() (by removing its pflag.Parse() call). For now, we have to set the
	// normalize func and add the go flag set by hand.
	pflag.CommandLine.SetNormalizeFunc(utilflag.WordSepNormalizeFunc)
	pflag.CommandLine.AddGoFlagSet(goflag.CommandLine)
	// utilflag.InitFlags()
	logs.InitLogs()
	defer logs.FlushLogs()

	if err := command.Execute(); err != nil {
		fmt.Fprintf(os.Stderr, "error: %v\n", err)
		os.Exit(1)
	}
}
```

2. NewProxyCommand function

- Initializes the options

- Calls opts.Run(), covered in section 2.1

```go
// NewProxyCommand creates a *cobra.Command object with default parameters
func NewProxyCommand() *cobra.Command {
	opts := NewOptions()

	cmd := &cobra.Command{
		Use: "kube-proxy",
		Long: `The Kubernetes network proxy runs on each node. This
reflects services as defined in the Kubernetes API on each node and can do simple
TCP, UDP, and SCTP stream forwarding or round robin TCP, UDP, and SCTP forwarding across a set of backends.
Service cluster IPs and ports are currently found through Docker-links-compatible
environment variables specifying ports opened by the service proxy. There is an optional
addon that provides cluster DNS for these cluster IPs. The user must create a service
with the apiserver API to configure the proxy.`,
		Run: func(cmd *cobra.Command, args []string) {
			verflag.PrintAndExitIfRequested()
			utilflag.PrintFlags(cmd.Flags())

			if err := initForOS(opts.WindowsService); err != nil {
				glog.Fatalf("failed OS init: %v", err)
			}

			if err := opts.Complete(); err != nil {
				glog.Fatalf("failed complete: %v", err)
			}
			if err := opts.Validate(args); err != nil {
				glog.Fatalf("failed validate: %v", err)
			}
			glog.Fatal(opts.Run())
		},
	}

	var err error
	opts.config, err = opts.ApplyDefaults(opts.config)
	if err != nil {
		glog.Fatalf("unable to create flag defaults: %v", err)
	}

	opts.AddFlags(cmd.Flags())

	cmd.MarkFlagFilename("config", "yaml", "yml", "json")

	return cmd
}
```

2.1 Run function

- NewProxyServer calls newProxyServer (covered in section 3)

- Run runs the main logic (covered in section 4)

```go
func (o *Options) Run() error {
	if len(o.WriteConfigTo) > 0 {
		return o.writeConfigFile()
	}

	proxyServer, err := NewProxyServer(o)
	if err != nil {
		return err
	}

	return proxyServer.Run()
}
```

3. newProxyServer

Its main job is to initialize the proxy server: its configuration and the many interfaces it needs to call.
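Which of the three branches in sections 3.3 to 3.5 runs depends on the resolved proxy mode. The sketch below is a simplified, hypothetical model of the fallback order as I read it in the 1.12 source: a requested ipvs mode falls back to iptables when IPVS cannot be used, iptables falls back to userspace when the kernel or iptables version is unsuitable, and an empty or unknown mode is treated as iptables. The function name chooseProxyMode and its two boolean parameters are stand-ins for the real kernel-module and version probes.

```go
package main

import "fmt"

const (
	modeUserspace = "userspace"
	modeIPTables  = "iptables"
	modeIPVS      = "ipvs"
)

// chooseProxyMode resolves the requested mode to the one that will actually run.
// canUseIPVS and canUseIPTables are hypothetical stand-ins for the real capability checks.
func chooseProxyMode(requested string, canUseIPVS, canUseIPTables bool) string {
	tryIPTables := func() string {
		if canUseIPTables {
			return modeIPTables
		}
		return modeUserspace // last resort
	}
	switch requested {
	case modeUserspace:
		return modeUserspace
	case modeIPVS:
		if canUseIPVS {
			return modeIPVS
		}
		return tryIPTables()
	default: // "iptables", empty, or unknown
		return tryIPTables()
	}
}

func main() {
	fmt.Println(chooseProxyMode("ipvs", false, true)) // iptables
	fmt.Println(chooseProxyMode("", false, false))    // userspace
}
```

Whichever mode wins, its branch also cleans up the leftovers of the other modes, which is why every branch below calls CleanupLeftovers for the others.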

3.1 createClients

Creates the clients for the Kubernetes master (apiserver): a regular client and an event client.

```go
client, eventClient, err := createClients(config.ClientConnection, master)
if err != nil {
	return nil, err
}
```

3.2 Create the event broadcaster and event recorder

```go
// Create event recorder
hostname, err := utilnode.GetHostname(config.HostnameOverride)
if err != nil {
	return nil, err
}
eventBroadcaster := record.NewBroadcaster()
recorder := eventBroadcaster.NewRecorder(scheme, v1.EventSource{Component: "kube-proxy", Host: hostname})
```

3.3 iptables mode: mainly initializes a number of interfaces

```go
if proxyMode == proxyModeIPTables {
	glog.V(0).Info("Using iptables Proxier.")
	if config.IPTables.MasqueradeBit == nil {
		// MasqueradeBit must be specified or defaulted.
		return nil, fmt.Errorf("unable to read IPTables MasqueradeBit from config")
	}

	// TODO this has side effects that should only happen when Run() is invoked.
	proxierIPTables, err := iptables.NewProxier(
		iptInterface,
		utilsysctl.New(),
		execer,
		config.IPTables.SyncPeriod.Duration,
		config.IPTables.MinSyncPeriod.Duration,
		config.IPTables.MasqueradeAll,
		int(*config.IPTables.MasqueradeBit),
		config.ClusterCIDR,
		hostname,
		nodeIP,
		recorder,
		healthzUpdater,
		config.NodePortAddresses,
	)
	if err != nil {
		return nil, fmt.Errorf("unable to create proxier: %v", err)
	}
	metrics.RegisterMetrics()
	proxier = proxierIPTables
	serviceEventHandler = proxierIPTables
	endpointsEventHandler = proxierIPTables
	// No turning back. Remove artifacts that might still exist from the userspace Proxier.
	glog.V(0).Info("Tearing down inactive rules.")
	// TODO this has side effects that should only happen when Run() is invoked.
	userspace.CleanupLeftovers(iptInterface)
	// IPVS Proxier will generate some iptables rules, need to clean them before switching to other proxy mode.
	// Besides, ipvs proxier will create some ipvs rules as well. Because there is no way to tell if a given
	// ipvs rule is created by IPVS proxier or not. Users should explicitly specify `--clean-ipvs=true` to flush
	// all ipvs rules when kube-proxy start up. Users do this operation should be with caution.
	if canUseIPVS {
		ipvs.CleanupLeftovers(ipvsInterface, iptInterface, ipsetInterface, cleanupIPVS)
	}
}
```

3.4 IPVS mode

```go
else if proxyMode == proxyModeIPVS {
	glog.V(0).Info("Using ipvs Proxier.")
	proxierIPVS, err := ipvs.NewProxier(
		iptInterface,
		ipvsInterface,
		ipsetInterface,
		utilsysctl.New(),
		execer,
		config.IPVS.SyncPeriod.Duration,
		config.IPVS.MinSyncPeriod.Duration,
		config.IPVS.ExcludeCIDRs,
		config.IPTables.MasqueradeAll,
		int(*config.IPTables.MasqueradeBit),
		config.ClusterCIDR,
		hostname,
		nodeIP,
		recorder,
		healthzServer,
		config.IPVS.Scheduler,
		config.NodePortAddresses,
	)
	if err != nil {
		return nil, fmt.Errorf("unable to create proxier: %v", err)
	}
	metrics.RegisterMetrics()
	proxier = proxierIPVS
	serviceEventHandler = proxierIPVS
	endpointsEventHandler = proxierIPVS
	glog.V(0).Info("Tearing down inactive rules.")
	// TODO this has side effects that should only happen when Run() is invoked.
	userspace.CleanupLeftovers(iptInterface)
	iptables.CleanupLeftovers(iptInterface)
}
```

3.5 userspace mode

```go
else {
	glog.V(0).Info("Using userspace Proxier.")
	// This is a proxy.LoadBalancer which NewProxier needs but has methods we don't need for
	// our config.EndpointsConfigHandler.
	loadBalancer := userspace.NewLoadBalancerRR()
	// set EndpointsConfigHandler to our loadBalancer
	endpointsEventHandler = loadBalancer

	// TODO this has side effects that should only happen when Run() is invoked.
	proxierUserspace, err := userspace.NewProxier(
		loadBalancer,
		net.ParseIP(config.BindAddress),
		iptInterface,
		execer,
		*utilnet.ParsePortRangeOrDie(config.PortRange),
		config.IPTables.SyncPeriod.Duration,
		config.IPTables.MinSyncPeriod.Duration,
		config.UDPIdleTimeout.Duration,
		config.NodePortAddresses,
	)
	if err != nil {
		return nil, fmt.Errorf("unable to create proxier: %v", err)
	}
	serviceEventHandler = proxierUserspace
	proxier = proxierUserspace

	// Remove artifacts from the iptables and ipvs Proxier, if not on Windows.
	glog.V(0).Info("Tearing down inactive rules.")
	// TODO this has side effects that should only happen when Run() is invoked.
	iptables.CleanupLeftovers(iptInterface)
	// IPVS Proxier will generate some iptables rules, need to clean them before switching to other proxy mode.
	// Besides, ipvs proxier will create some ipvs rules as well. Because there is no way to tell if a given
	// ipvs rule is created by IPVS proxier or not. Users should explicitly specify `--clean-ipvs=true` to flush
	// all ipvs rules when kube-proxy start up. Users do this operation should be with caution.
	if canUseIPVS {
		ipvs.CleanupLeftovers(ipvsInterface, iptInterface, ipsetInterface, cleanupIPVS)
	}
}
```

4. Run function

Path: cmd/kube-proxy/app/server.go

4.1 Set the OOM score

Sets /proc/self/oom_score_adj to -999 (the default value of s.OOMScoreAdj).
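What ApplyOOMScoreAdj(0, value) amounts to can be sketched as writing a number into procfs. The helpers below are hypothetical and simplified, not the oom package itself. They assume that pid 0 means the current process (/proc/self), matching the call in the code that follows, and they add their own range check for the kernel's valid range of -1000 to 1000.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
	"strconv"
)

// oomScoreAdjPath returns the procfs file for a pid; pid 0 means the calling process.
func oomScoreAdjPath(pid int) (string, error) {
	if pid < 0 {
		return "", fmt.Errorf("invalid pid %d", pid)
	}
	p := "self"
	if pid != 0 {
		p = strconv.Itoa(pid)
	}
	return filepath.Join("/proc", p, "oom_score_adj"), nil
}

// applyOOMScoreAdj writes value into the pid's oom_score_adj file.
func applyOOMScoreAdj(pid, value int) error {
	if value < -1000 || value > 1000 {
		return fmt.Errorf("oom_score_adj %d out of range [-1000, 1000]", value)
	}
	path, err := oomScoreAdjPath(pid)
	if err != nil {
		return err
	}
	return os.WriteFile(path, []byte(strconv.Itoa(value)), 0644)
}

func main() {
	// Lowering the score below 0 typically needs CAP_SYS_RESOURCE (e.g. root).
	// kube-proxy itself only logs such a failure at V(2) and carries on.
	if err := applyOOMScoreAdj(0, -999); err != nil {
		fmt.Println("apply failed:", err)
	}
}
```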

```go
// TODO(vmarmol): Use container config for this.
var oomAdjuster *oom.OOMAdjuster
if s.OOMScoreAdj != nil {
	oomAdjuster = oom.NewOOMAdjuster()
	if err := oomAdjuster.ApplyOOMScoreAdj(0, int(*s.OOMScoreAdj)); err != nil {
		glog.V(2).Info(err)
	}
}
```

4.2 Configure connection tracking

- Sets sysctl 'net/netfilter/nf_conntrack_max' to 524288

- Sets sysctl 'net/netfilter/nf_conntrack_tcp_timeout_established' to 86400

- Sets sysctl 'net/netfilter/nf_conntrack_tcp_timeout_close_wait' to 3600
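The 524288 figure is not a fixed constant: getConntrackMax scales a per-core value by the number of CPUs and applies a floor. Here is a minimal sketch of that calculation. It assumes the kube-proxy defaults of --conntrack-max-per-core=32768 and --conntrack-min=131072, which means 524288 corresponds to a 16-CPU node. The function name conntrackMax is hypothetical.

```go
package main

import (
	"fmt"
	"runtime"
)

// conntrackMax follows the idea of getConntrackMax: maxPerCore*numCPU,
// but never below floor. A maxPerCore of 0 means "leave the sysctl alone".
func conntrackMax(maxPerCore, floor, numCPU int) int {
	if maxPerCore <= 0 {
		return 0
	}
	if scaled := maxPerCore * numCPU; scaled > floor {
		return scaled
	}
	return floor
}

func main() {
	fmt.Println(conntrackMax(32768, 131072, 16))              // 524288
	fmt.Println(conntrackMax(32768, 131072, runtime.NumCPU())) // depends on this machine
}
```

The two timeouts are the defaults of --conntrack-tcp-timeout-established (24h) and --conntrack-tcp-timeout-close-wait (1h), converted to seconds the same way the code does (Duration / time.Second).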

```go
// Tune conntrack, if requested
// Conntracker is always nil for windows
if s.Conntracker != nil {
	max, err := getConntrackMax(s.ConntrackConfiguration)
	if err != nil {
		return err
	}
	if max > 0 {
		err := s.Conntracker.SetMax(max)
		if err != nil {
			if err != readOnlySysFSError {
				return err
			}
			// readOnlySysFSError is caused by a known docker issue (https://github.com/docker/docker/issues/24000),
			// the only remediation we know is to restart the docker daemon.
			// Here we'll send an node event with specific reason and message, the
			// administrator should decide whether and how to handle this issue,
			// whether to drain the node and restart docker.
			// TODO(random-liu): Remove this when the docker bug is fixed.
			const message = "DOCKER RESTART NEEDED (docker issue #24000): /sys is read-only: " +
				"cannot modify conntrack limits, problems may arise later."
			s.Recorder.Eventf(s.NodeRef, api.EventTypeWarning, err.Error(), message)
		}
	}

	if s.ConntrackConfiguration.TCPEstablishedTimeout != nil && s.ConntrackConfiguration.TCPEstablishedTimeout.Duration > 0 {
		timeout := int(s.ConntrackConfiguration.TCPEstablishedTimeout.Duration / time.Second)
		if err := s.Conntracker.SetTCPEstablishedTimeout(timeout); err != nil {
			return err
		}
	}

	if s.ConntrackConfiguration.TCPCloseWaitTimeout != nil && s.ConntrackConfiguration.TCPCloseWaitTimeout.Duration > 0 {
		timeout := int(s.ConntrackConfiguration.TCPCloseWaitTimeout.Duration / time.Second)
		if err := s.Conntracker.SetTCPCloseWaitTimeout(timeout); err != nil {
			return err
		}
	}
}
```

4.3 The core of the proxy

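Sets up the Service and Endpoints configs, which watch etcd indirectly (through the apiserver) and keep the relationship between Services and Endpoints up to date.

Section 5 covers the Service side; Endpoints work the same way and are skipped.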

```go
// Create configs (i.e. Watches for Services and Endpoints)
// Note: RegisterHandler() calls need to happen before creation of Sources because sources
// only notify on changes, and the initial update (on process start) may be lost if no handlers
// are registered yet.
serviceConfig := config.NewServiceConfig(informerFactory.Core().V1().Services(), s.ConfigSyncPeriod)
serviceConfig.RegisterEventHandler(s.ServiceEventHandler)
go serviceConfig.Run(wait.NeverStop)

endpointsConfig := config.NewEndpointsConfig(informerFactory.Core().V1().Endpoints(), s.ConfigSyncPeriod)
endpointsConfig.RegisterEventHandler(s.EndpointsEventHandler)
go endpointsConfig.Run(wait.NeverStop)
```

4.4 SyncLoop function

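A familiar pattern: you can guess at a glance that it loops forever doing the work. There are implementations for iptables, ipvs, userspace and so on; covered in section 6.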

```go
// Just loop forever for now...
s.Proxier.SyncLoop()
```

5. Create the ServiceConfig struct and register the informer callbacks

- handleAddService

- handleUpdateService

- handleDeleteService

These callbacks watch Service creation, update, and deletion.

```go
// NewServiceConfig creates a new ServiceConfig.
func NewServiceConfig(serviceInformer coreinformers.ServiceInformer, resyncPeriod time.Duration) *ServiceConfig {
	result := &ServiceConfig{
		lister:       serviceInformer.Lister(),
		listerSynced: serviceInformer.Informer().HasSynced,
	}

	serviceInformer.Informer().AddEventHandlerWithResyncPeriod(
		cache.ResourceEventHandlerFuncs{
			AddFunc:    result.handleAddService,
			UpdateFunc: result.handleUpdateService,
			DeleteFunc: result.handleDeleteService,
		},
		resyncPeriod,
	)

	return result
}
```

5.1 Run function

It mainly waits for the informer cache to sync, then calls OnServiceSynced() on each registered handler.

```go
// Run starts the goroutine responsible for calling
// registered handlers.
func (c *ServiceConfig) Run(stopCh <-chan struct{}) {
	defer utilruntime.HandleCrash()

	glog.Info("Starting service config controller")
	defer glog.Info("Shutting down service config controller")

	if !controller.WaitForCacheSync("service config", stopCh, c.listerSynced) {
		return
	}

	for i := range c.eventHandlers {
		glog.V(3).Infof("Calling handler.OnServiceSynced()")
		c.eventHandlers[i].OnServiceSynced()
	}

	<-stopCh
}
```

6. SyncLoop function

Path: pkg/proxy/iptables/proxier.go

The runner is registered as proxier.syncRunner = async.NewBoundedFrequencyRunner("sync-runner", proxier.syncProxyRules, minSyncPeriod, syncPeriod, burstSyncs). Follow the calls down and you end up in the syncProxyRules function.

```go
// SyncLoop runs periodic work. This is expected to run as a goroutine or as the main loop of the app. It does not return.
func (proxier *Proxier) SyncLoop() {
	// Update healthz timestamp at beginning in case Sync() never succeeds.
	if proxier.healthzServer != nil {
		proxier.healthzServer.UpdateTimestamp()
	}
	proxier.syncRunner.Loop(wait.NeverStop)
}
```

7. syncProxyRules function

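It is really long, but you could guess it with your eyes closed (ha ha): it updates the iptables rules, tables, chains and all.

Kubernetes can use iptables for Service routing and load balancing. The core logic lives in the syncProxyRules function in kubernetes/pkg/proxy/iptables/proxier.go.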

7.1 Update the service and endpoints maps

```go
// We assume that if this was called, we really want to sync them,
// even if nothing changed in the meantime. In other words, callers are
// responsible for detecting no-op changes and not calling this function.
serviceUpdateResult := proxy.UpdateServiceMap(proxier.serviceMap, proxier.serviceChanges)
endpointUpdateResult := proxy.UpdateEndpointsMap(proxier.endpointsMap, proxier.endpointsChanges)
```

7.2 Run commands to add chains and rules

Each jump is inserted at the head of the built-in chain (utiliptables.Prepend); the rules are shown as iptables-save prints them, which always uses -A.

- filter table, INPUT jumps to KUBE-EXTERNAL-SERVICES: -A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

- filter table, OUTPUT jumps to KUBE-SERVICES: -A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

- nat table, OUTPUT jumps to KUBE-SERVICES: -A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

- nat table, PREROUTING jumps to KUBE-SERVICES: -A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

- nat table, POSTROUTING jumps to KUBE-POSTROUTING: -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

- filter table, FORWARD jumps to KUBE-FORWARD: -A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD (rules inside KUBE-FORWARD, such as -A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT, are written later)

```go
// Create and link the kube chains.
for _, chain := range iptablesJumpChains {
	if _, err := proxier.iptables.EnsureChain(chain.table, chain.chain); err != nil {
		glog.Errorf("Failed to ensure that %s chain %s exists: %v", chain.table, kubeServicesChain, err)
		return
	}
	args := append(chain.extraArgs,
		"-m", "comment", "--comment", chain.comment,
		"-j", string(chain.chain),
	)
	if _, err := proxier.iptables.EnsureRule(utiliptables.Prepend, chain.table, chain.sourceChain, args...); err != nil {
		glog.Errorf("Failed to ensure that %s chain %s jumps to %s: %v", chain.table, chain.sourceChain, chain.chain, err)
		return
	}
}
```

Chains ensured by this loop:

- KUBE-EXTERNAL-SERVICES -t filter

- KUBE-SERVICES -t filter

- KUBE-SERVICES -t nat

- KUBE-POSTROUTING -t nat

- KUBE-FORWARD -t filter

7.3 Run iptables-save

- iptables-save [-t filter]

- iptables-save [-t nat]
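The saved output is then parsed by utiliptables.GetChainLines, which keeps one ":CHAIN POLICY [packets:bytes]" declaration line per chain. Below is a simplified, hypothetical parser that illustrates the step. The name chainLines is made up, it returns strings where kube-proxy uses []byte values, and the sample input is invented.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// chainLines maps each chain declared for table in iptables-save output to its declaration line.
func chainLines(table, save string) map[string]string {
	chains := make(map[string]string)
	inTable := false
	sc := bufio.NewScanner(strings.NewReader(save))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		switch {
		case strings.HasPrefix(line, "*"):
			inTable = line == "*"+table // start of a table section
		case line == "COMMIT":
			inTable = false
		case inTable && strings.HasPrefix(line, ":"):
			// ":KUBE-SERVICES - [0:0]" -> "KUBE-SERVICES"
			if fields := strings.Fields(line[1:]); len(fields) > 0 {
				chains[fields[0]] = line
			}
		}
	}
	return chains
}

func main() {
	save := `# Generated by iptables-save
*nat
:PREROUTING ACCEPT [0:0]
:KUBE-SERVICES - [0:0]
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
COMMIT
`
	for name, line := range chainLines("nat", save) {
		fmt.Println(name, "=>", line)
	}
}
```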

This fetches the existing filter and nat chains. The following four buffers hold the iptables rules that are later written back to the node with iptables-restore:

- filterChains

- filterRules

- natChains

- natRules

```go
//
// Below this point we will not return until we try to write the iptables rules.
//

// Get iptables-save output so we can check for existing chains and rules.
// This will be a map of chain name to chain with rules as stored in iptables-save/iptables-restore
existingFilterChains := make(map[utiliptables.Chain][]byte)
proxier.existingFilterChainsData.Reset()
err := proxier.iptables.SaveInto(utiliptables.TableFilter, proxier.existingFilterChainsData)
if err != nil { // if we failed to get any rules
	glog.Errorf("Failed to execute iptables-save, syncing all rules: %v", err)
} else { // otherwise parse the output
	existingFilterChains = utiliptables.GetChainLines(utiliptables.TableFilter, proxier.existingFilterChainsData.Bytes())
}

// IMPORTANT: existingNATChains may share memory with proxier.iptablesData.
existingNATChains := make(map[utiliptables.Chain][]byte)
proxier.iptablesData.Reset()
err = proxier.iptables.SaveInto(utiliptables.TableNAT, proxier.iptablesData)
if err != nil { // if we failed to get any rules
	glog.Errorf("Failed to execute iptables-save, syncing all rules: %v", err)
} else { // otherwise parse the output
	existingNATChains = utiliptables.GetChainLines(utiliptables.TableNAT, proxier.iptablesData.Bytes())
}
```

7.4 filter and nat chains

In the filter table:

- :KUBE-EXTERNAL-SERVICES - [0:0]

- :KUBE-FORWARD - [0:0]

- :KUBE-SERVICES - [0:0]

In the nat table:

- :KUBE-SERVICES - [0:0]

- :KUBE-MARK-MASQ - [0:0]

- :KUBE-NODEPORTS - [0:0]

- :KUBE-POSTROUTING - [0:0]
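These chain lines, together with the rules, end up in one iptables-restore input. Each table section starts with *table, declares its chains, lists its rules, and ends with COMMIT. Below is a hypothetical sketch of roughly how the four buffers are concatenated. The helpers writeLine and makeChainLine echo kube-proxy's names, but they are reimplemented here in simplified form, and buildRestoreInput is made up.

```go
package main

import (
	"bytes"
	"fmt"
	"strings"
)

// writeLine joins words with spaces and appends a newline.
func writeLine(buf *bytes.Buffer, words ...string) {
	buf.WriteString(strings.Join(words, " "))
	buf.WriteByte('\n')
}

// makeChainLine declares a user-defined chain with no policy ("-") and zeroed counters.
func makeChainLine(chain string) string {
	return ":" + chain + " - [0:0]"
}

// buildRestoreInput assembles a tiny iptables-restore payload from four buffers.
func buildRestoreInput() string {
	var filterChains, filterRules, natChains, natRules bytes.Buffer

	writeLine(&filterChains, "*filter")
	writeLine(&filterChains, makeChainLine("KUBE-SERVICES"))
	writeLine(&filterRules, "COMMIT")

	writeLine(&natChains, "*nat")
	writeLine(&natChains, makeChainLine("KUBE-MARK-MASQ"))
	writeLine(&natRules, "-A", "KUBE-MARK-MASQ", "-j", "MARK", "--set-xmark", "0x4000/0x4000")
	writeLine(&natRules, "COMMIT")

	// Order matters: each table's chain declarations must precede its rules.
	var data bytes.Buffer
	for _, b := range []*bytes.Buffer{&filterChains, &filterRules, &natChains, &natRules} {
		data.Write(b.Bytes())
	}
	return data.String()
}

func main() {
	fmt.Print(buildRestoreInput()) // what would be fed to iptables-restore
}
```

kube-proxy feeds its payload to iptables-restore with --noflush, so rules in chains it does not mention are left alone.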

```go
// Make sure we keep stats for the top-level chains, if they existed
// (which most should have because we created them above).
for _, chainName := range []utiliptables.Chain{kubeServicesChain, kubeExternalServicesChain, kubeForwardChain} {
	if chain, ok := existingFilterChains[chainName]; ok {
		writeBytesLine(proxier.filterChains, chain)
	} else {
		writeLine(proxier.filterChains, utiliptables.MakeChainLine(chainName))
	}
}
for _, chainName := range []utiliptables.Chain{kubeServicesChain, kubeNodePortsChain, kubePostroutingChain, KubeMarkMasqChain} {
	if chain, ok := existingNATChains[chainName]; ok {
		writeBytesLine(proxier.natChains, chain)
	} else {
		writeLine(proxier.natChains, utiliptables.MakeChainLine(chainName))
	}
}
```

7.5 nat rule: masquerade marked traffic

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE

```go
// Install the kubernetes-specific postrouting rules. We use a whole chain for
// this so that it is easier to flush and change, for example if the mark
// value should ever change.
writeLine(proxier.natRules, []string{
	"-A", string(kubePostroutingChain),
	"-m", "comment", "--comment", `"kubernetes service traffic requiring SNAT"`,
	"-m", "mark", "--mark", proxier.masqueradeMark,
	"-j", "MASQUERADE",
}...)
```

7.6 KUBE-MARK-MASQ rule: set the mark

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

```go
// Install the kubernetes-specific masquerade mark rule. We use a whole chain for
// this so that it is easier to flush and change, for example if the mark
// value should ever change.
writeLine(proxier.natRules, []string{
	"-A", string(KubeMarkMasqChain),
	"-j", "MARK", "--set-xmark", proxier.masqueradeMark,
}...)
```
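You can check how the 0x4000 mark works with a little bit arithmetic. In iptables, --set-xmark value/mask clears the bits in mask and then XORs in value. By contrast, -m mark --mark value/mask matches when mark & mask == value. The value 0x4000 is 1 << 14, assuming the default --iptables-masquerade-bit of 14. Below is a small sketch of the two operations; the function names setXMark and matchMark are hypothetical.

```go
package main

import "fmt"

// setXMark applies "-j MARK --set-xmark value/mask": clear mask bits, then XOR value.
func setXMark(mark, value, mask uint32) uint32 {
	return (mark &^ mask) ^ value
}

// matchMark applies "-m mark --mark value/mask".
func matchMark(mark, value, mask uint32) bool {
	return mark&mask == value
}

func main() {
	const masqBit = 14
	masq := uint32(1) << masqBit // 0x4000

	mark := uint32(0x1)               // an unrelated bit set by some other component
	mark = setXMark(mark, masq, masq) // what KUBE-MARK-MASQ does
	fmt.Printf("mark=%#x masquerade=%v\n", mark, matchMark(mark, masq, masq)) // mark=0x4001 masquerade=true
}
```

Because only bit 14 is touched, other marks on the packet survive, and the KUBE-POSTROUTING rule then masquerades exactly the packets that carry the bit.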

For example, the rules generated on a node for one service (ns-dfbc21ef/pool4-sc-site):

```shell
# iptables-save | grep pool4-sc-site
-A KUBE-NODEPORTS -p tcp -m comment --comment "ns-dfbc21ef/pool4-sc-site:pool4-sc-site" -m tcp --dport 64264 -j KUBE-MARK-MASQ
-A KUBE-NODEPORTS -p tcp -m comment --comment "ns-dfbc21ef/pool4-sc-site:pool4-sc-site" -m tcp --dport 64264 -j KUBE-SVC-SATU2HUKOORIDRPW
-A KUBE-SEP-RRSJPMBBE7GG2XAM -s 192.168.75.114/32 -m comment --comment "ns-dfbc21ef/pool4-sc-site:pool4-sc-site" -j KUBE-MARK-MASQ
-A KUBE-SEP-RRSJPMBBE7GG2XAM -p tcp -m comment --comment "ns-dfbc21ef/pool4-sc-site:pool4-sc-site" -m tcp -j DNAT --to-destination 192.168.75.114:20881
-A KUBE-SEP-WVYWW757ZWPZ7HKT -s 192.168.181.42/32 -m comment --comment "ns-dfbc21ef/pool4-sc-site:pool4-sc-site" -j KUBE-MARK-MASQ
-A KUBE-SEP-WVYWW757ZWPZ7HKT -p tcp -m comment --comment "ns-dfbc21ef/pool4-sc-site:pool4-sc-site" -m tcp -j DNAT --to-destination 192.168.181.42:20881
-A KUBE-SERVICES -d 10.254.59.218/32 -p tcp -m comment --comment "ns-dfbc21ef/pool4-sc-site:pool4-sc-site cluster IP" -m tcp --dport 20881 -j KUBE-SVC-SATU2HUKOORIDRPW
-A KUBE-SVC-SATU2HUKOORIDRPW -m comment --comment "ns-dfbc21ef/pool4-sc-site:pool4-sc-site" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-WVYWW757ZWPZ7HKT
-A KUBE-SVC-SATU2HUKOORIDRPW -m comment --comment "ns-dfbc21ef/pool4-sc-site:pool4-sc-site" -j KUBE-SEP-RRSJPMBBE7GG2XAM
```

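1. For each service, a custom chain named "KUBE-SVC-XXXXXXXXXXXXXXXX" is created in the nat table.

All packets traversing the custom chain KUBE-SERVICES that are destined for the service "ns-dfbc21ef/pool4-sc-site" (cluster IP 10.254.59.218, TCP port 20881) jump to the custom chain KUBE-SVC-SATU2HUKOORIDRPW.

2. Check whether the service has a NodePort enabled.

All packets traversing the custom chain KUBE-NODEPORTS that are destined for the NodePort of service "ns-dfbc21ef/pool4-sc-site" (TCP 64264) first jump to the custom chain KUBE-MARK-MASQ; that is, Kubernetes marks these packets with 0x4000/0x4000 so that they are masqueraded later in KUBE-POSTROUTING.

They then jump to the custom chain KUBE-SVC-SATU2HUKOORIDRPW.

3. For each service that has endpoints, a custom chain named "KUBE-SEP-XXXXXXXXXXXXXXXX" is created in the nat table for each endpoint.

All packets traversing the custom chain KUBE-SVC-VX5XTMYNLWGXYEL4 for the service "ym/echo-app" may jump either to the custom chain KUBE-SEP-27OZWHQEIJ47W5ZW or to the custom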