Recording and Saving Audio with FFmpeg

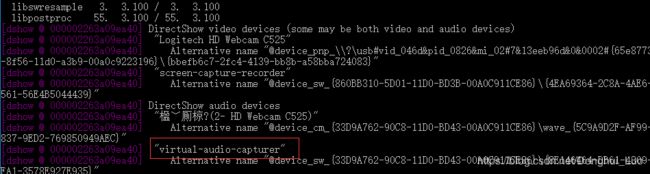

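Sometimes a project needs to record audio on its own. Here we use FFmpeg and capture sound through a dshow (DirectShow) virtual audio device. The following command lists the audio capture devices that FFmpeg can see: ffmpeg -list_devices true -f dshow -i dummy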

The device name inside the red box in the screenshot above is the one used in the next step.

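    // Use a dshow audio capture device. List the available devices with:
    //   ffmpeg -list_devices true -f dshow -i dummy
    // dshow lives in libavdevice, so avdevice_register_all() must have been called first.
    pAudioInputFmt = av_find_input_format("dshow");
    if (!pAudioInputFmt)
        return -1;

    // The device string is passed as UTF-8 and carries the "audio=" prefix
    char* psDevName = wchar_to_utf8(L"audio=virtual-audio-capturer");
    if (avformat_open_input(&pFmtInputCtx, psDevName, pAudioInputFmt, NULL) < 0)
        return -1;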

avformat_open_input opens the dshow audio capture device.
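The wchar_to_utf8 helper used above is not shown in this post. As a rough idea of what such a conversion involves, here is a minimal sketch that uses only the standard library. The names WideToUtf8 and MakeDshowAudioInput are made up for this illustration, and the real helper in the project may work differently (for example through the Windows API).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical stand-in for wchar_to_utf8: encodes a wide string as UTF-8.
// Works with 16-bit wchar_t (UTF-16, as on Windows) and with 32-bit wchar_t.
std::string WideToUtf8(const std::wstring& in)
{
    std::string out;
    for (std::size_t i = 0; i < in.size(); ++i) {
        std::uint32_t cp = static_cast<std::uint32_t>(in[i]);
        // Join a UTF-16 surrogate pair into a single code point.
        if (cp >= 0xD800 && cp <= 0xDBFF && i + 1 < in.size()) {
            const std::uint32_t low = static_cast<std::uint32_t>(in[i + 1]);
            if (low >= 0xDC00 && low <= 0xDFFF) {
                cp = 0x10000 + ((cp - 0xD800) << 10) + (low - 0xDC00);
                ++i;
            }
        }
        if (cp < 0x80) {                       // 1 byte
            out += static_cast<char>(cp);
        } else if (cp < 0x800) {               // 2 bytes
            out += static_cast<char>(0xC0 | (cp >> 6));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else if (cp < 0x10000) {             // 3 bytes
            out += static_cast<char>(0xE0 | (cp >> 12));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else {                               // 4 bytes
            out += static_cast<char>(0xF0 | (cp >> 18));
            out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
    }
    return out;
}

// Builds the dshow input string for an audio device: "audio=<device name>".
std::string MakeDshowAudioInput(const std::wstring& deviceName)
{
    assert(!deviceName.empty());
    return "audio=" + WideToUtf8(deviceName);
}
```

With a helper like this, the c_str() of the returned string could be passed as the second argument of avformat_open_input. Returning std::string also avoids having to free a raw char* by hand.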

Other parts, such as the audio output and the encoder setup, are skipped here…

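When the audio source and the audio output differ in sample format, sample rate, or number of channels, the audio has to be resampled.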
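The check itself is simple. The sketch below is not taken from the project: AudioParams and NeedsResample are hypothetical names, and the three fields stand in for the corresponding values that FFmpeg keeps on its codec contexts.

```cpp
#include <cassert>
#include <string>

// Hypothetical description of an audio stream; field names are illustrative.
struct AudioParams {
    int sampleRate;         // samples per second, e.g. 44100
    int channels;           // number of channels, e.g. 2
    std::string sampleFmt;  // sample format name, e.g. "s16" or "fltp"
};

// Returns true when the source audio cannot be handed to the output as-is,
// i.e. when any of the three properties differs.
bool NeedsResample(const AudioParams& src, const AudioParams& dst)
{
    assert(src.sampleRate > 0 && dst.sampleRate > 0);
    return src.sampleRate != dst.sampleRate
        || src.channels != dst.channels
        || src.sampleFmt != dst.sampleFmt;
}
```

For instance, a capture device that delivers 44100 Hz stereo "s16" feeding an encoder that wants 44100 Hz stereo "fltp" differs only in sample format, which is already enough to require conversion.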

Here an AVFilter filter graph does the conversion:

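    #include "stdafx.h"
    #include "AudioResample.h"

    CAudioResample::CAudioResample()
    {
        // Start from null so the destructor is safe even if InitFilter was never called
        filter_graph = nullptr;
        buffersrc_ctx = nullptr;
        buffersink_ctx = nullptr;
    }

    CAudioResample::~CAudioResample()
    {
        // Freeing the graph also frees the filter contexts that belong to it
        avfilter_graph_free(&filter_graph);
        filter_graph = nullptr;
        buffersrc_ctx = nullptr;
        buffersink_ctx = nullptr;
    }

    AVFilterContext* CAudioResample::GetBuffersinkPtr()
    {
        return buffersink_ctx;
    }

    AVFilterContext* CAudioResample::GetBuffersrcoPtr()
    {
        return buffersrc_ctx;
    }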

    bool CAudioResample::InitFilter(AVCodecContext* dec_ctx, AVCodecContext* enc_ctx)
    {
        if (!dec_ctx || !enc_ctx)
            return false;
        if (dec_ctx->codec_type != AVMEDIA_TYPE_AUDIO)
            return false;

        // All locals are declared before the first goto so that no jump skips an initialization
        AVFilterInOut* inputs = avfilter_inout_alloc();
        AVFilterInOut* outputs = avfilter_inout_alloc();
        filter_graph = avfilter_graph_alloc();
        const AVFilter* buffersrc = nullptr;
        const AVFilter* buffersink = nullptr;
        buffersrc_ctx = nullptr;
        buffersink_ctx = nullptr;
        bool result = true;
        char args[512];
        // channel_layout may be 0 (unset) for raw capture input;
        // fall back to the default layout for the channel count
        uint64_t in_layout = dec_ctx->channel_layout
            ? dec_ctx->channel_layout
            : (uint64_t)av_get_default_channel_layout(dec_ctx->channels);

        if (!inputs || !outputs || !filter_graph)
        {
            result = false;
            goto End;
        }

        // abuffer feeds decoded frames into the graph, abuffersink hands converted frames back
        buffersrc = avfilter_get_by_name("abuffer");
        buffersink = avfilter_get_by_name("abuffersink");
        if (!buffersrc || !buffersink)
        {
            result = false;
            goto End;
        }

        // Describe the input audio to the source filter
        _snprintf(args, sizeof(args),
            "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%I64x",
            dec_ctx->time_base.num, dec_ctx->time_base.den, dec_ctx->sample_rate,
            av_get_sample_fmt_name(dec_ctx->sample_fmt),
            in_layout);
        args[sizeof(args) - 1] = '\0';  // _snprintf does not terminate the string on truncation

        if (avfilter_graph_create_filter(&buffersrc_ctx, buffersrc, "in", args, NULL, filter_graph) < 0)
        {
            result = false;
            goto End;
        }
        if (avfilter_graph_create_filter(&buffersink_ctx, buffersink, "out", NULL, NULL, filter_graph) < 0)
        {
            result = false;
            goto End;
        }

        // Constrain the sink to the encoder's sample format, sample rate and channel layout
        if (av_opt_set_bin(buffersink_ctx, "sample_fmts", (uint8_t*)&enc_ctx->sample_fmt,
            sizeof(enc_ctx->sample_fmt), AV_OPT_SEARCH_CHILDREN) < 0)
        {
            result = false;
            goto End;
        }
        if (av_opt_set_bin(buffersink_ctx, "sample_rates", (uint8_t*)&enc_ctx->sample_rate,
            sizeof(enc_ctx->sample_rate), AV_OPT_SEARCH_CHILDREN) < 0)
        {
            result = false;
            goto End;
        }
        if (av_opt_set_bin(buffersink_ctx, "channel_layouts", (uint8_t*)&enc_ctx->channel_layout,
            sizeof(enc_ctx->channel_layout), AV_OPT_SEARCH_CHILDREN) < 0)
        {
            result = false;
            goto End;
        }

        // The naming is from the parsed graph's point of view:
        // its input is our source filter ("in"), its output is our sink ("out")
        inputs->name = av_strdup("out");
        inputs->filter_ctx = buffersink_ctx;
        inputs->pad_idx = 0;
        inputs->next = NULL;

        outputs->name = av_strdup("in");
        outputs->filter_ctx = buffersrc_ctx;
        outputs->pad_idx = 0;
        outputs->next = NULL;

        if (!inputs->name || !outputs->name)
        {
            result = false;
            goto End;
        }

        // "anull" passes audio through unchanged; the constraints set on the sink
        // make libavfilter insert the required conversion when the graph is configured
        if (avfilter_graph_parse_ptr(filter_graph, "anull", &inputs, &outputs, NULL) < 0)
        {
            result = false;
            goto End;
        }
        if (avfilter_graph_config(filter_graph, NULL) < 0)
        {
            result = false;
            goto End;
        }

    End:
        avfilter_inout_free(&inputs);
        avfilter_inout_free(&outputs);
        return result;
    }

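    int CAudioResample::AudioResample_Frame(AVFrame* srcFrame, int flags, AVFrame* destFrame)
    {
        if (!srcFrame || !destFrame)
            return -1;
        if (!buffersrc_ctx || !buffersink_ctx)
            return -1;  // InitFilter has not succeeded yet

        // Push the decoded frame into the graph
        if (av_buffersrc_add_frame_flags(buffersrc_ctx, srcFrame, flags) < 0)
            return -1;
        // Pull one converted frame out; this also fails when the graph needs more input
        if (av_buffersink_get_frame(buffersink_ctx, destFrame) < 0)
            return -1;
        return 0;
    }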

To use it, call audioresample->AudioResample_Frame(frame, 0, filter_frame); where frame is the decoded frame from the audio source and filter_frame receives the resampled frame we need.
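The option string that InitFilter hands to the abuffer source is formatted with the MSVC-specific _snprintf and %I64x. If portability matters, the same string could be produced with standard snprintf and PRIx64. This is only a sketch: BuildAbufferArgs and its parameter list are made up for this illustration and are not part of FFmpeg or of the project.

```cpp
#include <cassert>
#include <cinttypes>
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <string>

// Formats the option string for an "abuffer" source filter.
// Returns an empty string if formatting fails or the result would not fit.
std::string BuildAbufferArgs(int tbNum, int tbDen, int sampleRate,
                             const std::string& sampleFmtName,
                             std::uint64_t channelLayout)
{
    assert(tbDen != 0 && sampleRate > 0);
    char args[512];
    // snprintf always null-terminates; PRIx64 prints a 64-bit value in hex portably.
    const int n = std::snprintf(args, sizeof(args),
        "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%" PRIx64,
        tbNum, tbDen, sampleRate, sampleFmtName.c_str(), channelLayout);
    if (n < 0 || static_cast<std::size_t>(n) >= sizeof(args))
        return std::string();
    return std::string(args);
}
```

Checking the return value of snprintf makes truncation visible instead of silently passing a cut-off option string to the filter.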

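After resampling, the frame size (frame_size) of the source frames still differs from the frame size the audio output expects. The resampled frames therefore go into a FIFO buffer, and frames are then read back out one at a time at the output's frame size.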

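    int CAudioFrameResize::WriteFrame(AVFrame* frame)
    {
        if (!frame)
            return -1;
        // Append all samples of the resampled frame to the FIFO
        return av_audio_fifo_write(mFifo, (void**)frame->data, frame->nb_samples);
    }

    // frame must already be allocated by the caller (av_frame_alloc); it is reused on every call.
    // Freeing and reallocating it here would only change the local copy of the pointer
    // and leave the caller holding a dangling one.
    int CAudioFrameResize::ReadFrame(AVFrame* frame)
    {
        if (!frame)
            return -1;

        // Some encoders report frame_size == 0 (no fixed frame size); use 1024 samples in that case
        const int frameSize = mOutAVcodec_ctx->frame_size > 0 ? mOutAVcodec_ctx->frame_size : 1024;

        // Not enough samples buffered for a full output frame yet
        if (av_audio_fifo_size(mFifo) < frameSize)
            return -1;

        // Drop the buffers of the previous read, then describe the frame we want
        av_frame_unref(frame);
        frame->nb_samples = frameSize;
        frame->channel_layout = mOutAVcodec_ctx->channel_layout;
        frame->format = mOutAVcodec_ctx->sample_fmt;
        frame->sample_rate = mOutAVcodec_ctx->sample_rate;
        if (av_frame_get_buffer(frame, 0) < 0)
            return -1;

        return av_audio_fifo_read(mFifo, (void**)frame->data, frameSize);
    }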
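The re-chunking idea behind these two functions can be shown without FFmpeg. The following is a minimal sketch using only the standard library: SampleFifo is a hypothetical class for mono 16-bit samples, whereas the real code relies on FFmpeg's AVAudioFifo, which also handles multiple channels and planar formats.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Accepts writes of any length and hands samples back in fixed-size frames.
class SampleFifo {
public:
    explicit SampleFifo(std::size_t frameSize) : frameSize_(frameSize)
    {
        assert(frameSize > 0);
    }

    // Appends all samples of an incoming frame, whatever its length.
    void Write(const std::vector<std::int16_t>& samples)
    {
        buf_.insert(buf_.end(), samples.begin(), samples.end());
    }

    // Copies exactly frameSize samples into out and removes them from the FIFO.
    // Returns false (leaving out untouched) when fewer samples are buffered.
    bool ReadFrame(std::vector<std::int16_t>& out)
    {
        if (buf_.size() < frameSize_)
            return false;
        const auto last = buf_.begin() + static_cast<std::ptrdiff_t>(frameSize_);
        out.assign(buf_.begin(), last);
        buf_.erase(buf_.begin(), last);
        return true;
    }

    std::size_t Size() const { return buf_.size(); }

private:
    std::size_t frameSize_;
    std::deque<std::int16_t> buf_;
};
```

With a frame size of 1024, writing 1500 samples yields one full frame and leaves 476 samples buffered. Those leftovers become the start of the next frame once more input arrives, which is exactly why the FIFO sits between the resampler and the encoder.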

The frames read from the FIFO buffer are then encoded:

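    // Keep reading until the FIFO no longer holds enough samples for a full frame
    while (audioframeresize->ReadFrame(frameInput) >= 0)
    {
        ret = avcodec_send_frame(pOutputCodecCtx, frameInput);
        if (ret < 0)
            continue;

        av_init_packet(&pkt_out);
        pkt_out.data = NULL;  // let the encoder allocate the payload
        pkt_out.size = 0;

        ret = avcodec_receive_packet(pOutputCodecCtx, &pkt_out);
        if (ret != 0)
            continue;  // for example AVERROR(EAGAIN): the encoder needs more input first

        // Timestamps are counted in samples, which assumes a time base of 1/sample_rate
        pkt_out.pts = frameIndex * pOutputCodecCtx->frame_size;
        pkt_out.dts = frameIndex * pOutputCodecCtx->frame_size;
        pkt_out.duration = pOutputCodecCtx->frame_size;

        av_write_frame(ofmt_ctx_a, &pkt_out);
        av_packet_unref(&pkt_out);
        frameIndex++;
    }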
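The timestamps in this loop are plain sample counts, so they are only meaningful if the time base is 1/sample_rate. The arithmetic can be sketched as follows. PtsForFrame and RescalePts are hypothetical helpers written for this illustration; in real FFmpeg code, converting between time bases is what av_rescale_q is for.

```cpp
#include <cassert>
#include <cstdint>

// pts of the Nth encoded frame, counted in samples (time base 1/sample_rate).
std::int64_t PtsForFrame(std::int64_t frameIndex, int frameSize)
{
    assert(frameIndex >= 0 && frameSize > 0);
    return frameIndex * frameSize;
}

// Converts a timestamp from time base srcNum/srcDen to dstNum/dstDen
// (both in seconds per tick), rounding to the nearest tick.
std::int64_t RescalePts(std::int64_t pts, int srcNum, int srcDen, int dstNum, int dstDen)
{
    assert(pts >= 0 && srcNum > 0 && srcDen > 0 && dstNum > 0 && dstDen > 0);
    const std::int64_t num = static_cast<std::int64_t>(srcNum) * dstDen;
    const std::int64_t den = static_cast<std::int64_t>(srcDen) * dstNum;
    return (pts * num + den / 2) / den;
}
```

For example, frame number 100 with 1024 samples per frame has a pts of 102400 samples. At 44100 Hz, rescaling that from 1/44100 to 1/1000 gives 2322, so the packet starts about 2.3 seconds into the recording.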

[Download from GitHub] https://github.com/luodh/ffmpeg-record-audio