cs231n assignment (1.2): SVM classifier

The goals of the SVM classifier exercise are to:

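- implement a fully vectorized loss function for the SVM

- implement the fully vectorized expression for its gradient

- check the results with a numerical gradient

- use a validation set to tune the learning rate and the regularization strength

- optimize the loss function with SGD (stochastic gradient descent)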

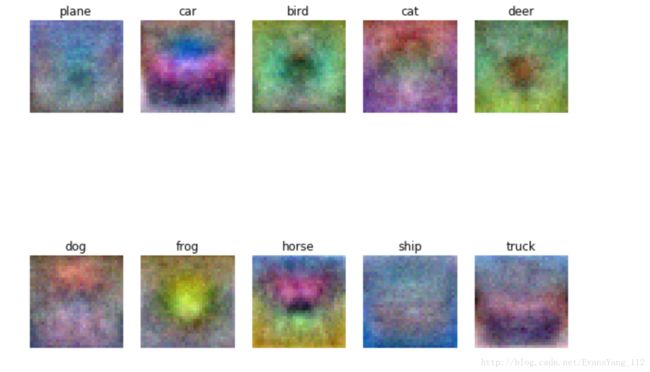

Visualizing the final learned weights:

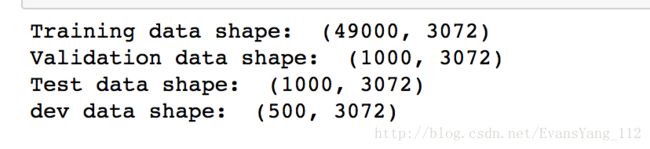

1. Data preparation and preprocessing

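Data preparation is mostly the same as in the previous kNN classifier exercise. The difference is a new, small dev set, used to speed up training while the code is being developed.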
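A minimal sketch of drawing such a dev set, assuming it is a random subset of the training data sampled without replacement. The helper name `make_dev_set` and the size of 500 are illustrative assumptions, and the random arrays only stand in for CIFAR-10 images flattened to 3072 values.

```python
import numpy as np

def make_dev_set(X_train, y_train, num_dev=500, seed=0):
    """Hypothetical helper: sample a small dev subset of the training data."""
    rng = np.random.default_rng(seed)
    # Sample indices without replacement so no example appears twice.
    mask = rng.choice(X_train.shape[0], num_dev, replace=False)
    return X_train[mask], y_train[mask]

# Toy usage with random stand-in data (not real images).
X_train = np.random.randn(1000, 3072)
y_train = np.random.randint(0, 10, size=1000)
X_dev, y_dev = make_dev_set(X_train, y_train)
print(X_dev.shape, y_dev.shape)  # (500, 3072) (500,)
```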

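Preprocessing has three steps:

- compute the mean image of the training set

- subtract that mean from the images in every set (training, validation, test and dev)

- append a bias column (a column of ones) to every set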
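The three preprocessing steps can be sketched as follows, assuming every set is a 2-D array with one flattened image per row. The function name `preprocess` is hypothetical; the key point is that the mean is computed from the training set only and then reused for every other set.

```python
import numpy as np

def preprocess(X_train, *other_sets):
    """Hypothetical helper: mean-subtract all sets with the training mean, then add a bias column."""
    # Step 1: the mean image comes from the training set only.
    mean_image = np.mean(X_train, axis=0)
    processed = []
    for X in (X_train,) + other_sets:
        # Step 2: subtract the training mean.
        X_centered = X - mean_image
        # Step 3: append a column of ones (the "bias trick").
        ones = np.ones((X_centered.shape[0], 1))
        processed.append(np.hstack([X_centered, ones]))
    return mean_image, processed

# Toy usage with random pixel values in [0, 255).
X_train = np.random.rand(100, 3072) * 255
X_val = np.random.rand(20, 3072) * 255
mean_image, (X_train_p, X_val_p) = preprocess(X_train, X_val)
print(X_train_p.shape, X_val_p.shape)  # (100, 3073) (20, 3073)
```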

2. Linear classifier

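A linear classifier classifies an image as follows:

- Given the weights, compute the image's score for each class: $s = f(x; W) = Wx$. (The assignment code stores one image per row of `X` and uses `W` of shape (D, C), so it computes the scores as `X.dot(W)`.)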
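A small NumPy illustration of the score computation, assuming the row convention of the assignment code (one image per row of `X`, `W` of shape (D, C)); the toy sizes and random values are placeholders. It also shows why appending a column of ones during preprocessing lets an extra row of `W` act as the bias.

```python
import numpy as np

rng = np.random.default_rng(0)
N, D, C = 4, 5, 3  # examples, features, classes (toy sizes)
X = rng.standard_normal((N, D))
W = rng.standard_normal((D, C))
b = rng.standard_normal(C)

# Row convention: one image per row, scores = X W + b, shape (N, C).
scores = X.dot(W) + b

# Bias trick: append a 1 to every x and stack b under W as an extra row.
X_aug = np.hstack([X, np.ones((N, 1))])
W_aug = np.vstack([W, b])
scores_aug = X_aug.dot(W_aug)

print(np.allclose(scores, scores_aug))  # True
```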

Then it computes the loss function, which quantifies how good a given set of weights is. For example, the loss of the SVM classifier used in this section is:

$$L_i = \sum_{j \neq y_i} \max(0,\, s_j - s_{y_i} + 1)$$

This is the loss of a single example (image $i$); the overall loss is:

$$L = \frac{1}{N} \sum_{i=1}^{N} \sum_{j \neq y_i} \max\left(0,\, f(x_i; W)_j - f(x_i; W)_{y_i} + 1\right) + \lambda R(W)$$

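where $R(W) = \sum_k \sum_l W_{k,l}^2$ is the L2 regularization term and $\lambda$ is the regularization strength. The code below uses $\frac{1}{2}\lambda R(W)$ instead, so that the gradient of the regularization term is simply $\lambda W$; the factor $\frac{1}{2}$ only rescales $\lambda$.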
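To make the formulas concrete, here is a direct, loop-based evaluation of $L_i$ and $L$ on toy scores for three images over three classes (cat, car, frog), whose correct classes are cat, car and frog respectively. The weight matrix is hypothetical and only feeds $R(W)$, and $\lambda = 0.1$ is an arbitrary choice; the formula above (without the $\frac{1}{2}$) is used.

```python
import numpy as np

# Rows: images; columns: scores for cat, car, frog.
scores = np.array([[3.2, 5.1, -1.7],
                   [1.3, 4.9, 2.0],
                   [2.2, 2.5, -3.1]])
y = np.array([0, 1, 2])  # correct class of each image

def per_example_loss(s, yi):
    """L_i = sum over j != y_i of max(0, s_j - s_{y_i} + 1)."""
    return float(sum(max(0.0, s[j] - s[yi] + 1.0) for j in range(len(s)) if j != yi))

losses = [per_example_loss(scores[i], y[i]) for i in range(len(y))]
data_loss = np.mean(losses)               # (1/N) * sum_i L_i

W = np.array([[0.5, -0.5], [1.0, 0.0]])   # hypothetical weights, used only for R(W)
lam = 0.1
R = np.sum(W ** 2)                        # R(W) = sum_k sum_l W_{k,l}^2
total_loss = data_loss + lam * R
```

The per-example losses come out as 2.9, 0 and 12.9, so the data loss is 15.8 / 3 (about 5.27); with $R(W) = 1.5$ the regularization adds 0.15.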

3. SVM classifier

The core of the SVM classifier is computing its loss function. Here is the formula again:

$$L_i = \sum_{j \neq y_i} \max(0,\, s_j - s_{y_i} + 1)$$

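(Here $i$ indexes the image, $L_i$ is the loss of image $i$, $s_j$ is the image's score for class $j$, $y_i$ is the correct class of image $i$, and $s_{y_i}$ is the score of the correct class.)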

Because we will later use stochastic gradient descent, we compute not only the SVM loss here but also dW, the gradient of the loss with respect to W.

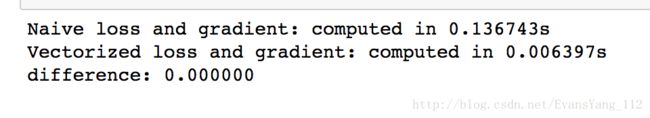
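Written out per example, the gradient can be sketched as follows, in the row convention of the code: $x_i$ is the $i$-th row of `X`, $w_j$ is the $j$-th column of $W$, and $\mathbb{1}(\cdot)$ is 1 when its condition holds and 0 otherwise. The last line assumes the $\frac{1}{2}\lambda R(W)$ regularization used in the code.

$$
\nabla_{w_j} L_i = \mathbb{1}\left(s_j - s_{y_i} + 1 > 0\right) x_i^{\top} \qquad (j \neq y_i)
$$

$$
\nabla_{w_{y_i}} L_i = -\left(\sum_{j \neq y_i} \mathbb{1}\left(s_j - s_{y_i} + 1 > 0\right)\right) x_i^{\top}
$$

$$
\nabla_W L = \frac{1}{N} \sum_{i=1}^{N} \nabla_W L_i + \lambda W
$$

Collecting the indicators into an $N \times C$ matrix $M$ (1 where a margin is positive, minus the row's count of positive margins at the correct class) gives the vectorized form $\nabla_W L = \frac{1}{N} X^{\top} M + \lambda W$.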

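With the help of the assignment's starter code, first write the non-vectorized (loop) version, then carefully derive the fully vectorized version from it.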
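A loop-based version could look like the sketch below. The name `svm_loss_naive_sketch` is hypothetical; it assumes the same shapes and the same $\frac{1}{2}\lambda R(W)$ regularization as the vectorized code, and makes the per-margin rule explicit: every positive margin adds $x_i$ to the column of the incorrect class and subtracts it from the column of the correct class.

```python
import numpy as np

def svm_loss_naive_sketch(W, X, y, reg):
    """Loop-based SVM loss and gradient (hypothetical helper name).

    X is (N, D), W is (D, C), y holds labels in 0..C-1,
    and the regularization term is 0.5 * reg * sum(W**2).
    """
    num_train, num_classes = X.shape[0], W.shape[1]
    loss = 0.0
    dW = np.zeros_like(W)
    for i in range(num_train):
        scores = X[i].dot(W)          # scores of example i for every class
        correct_score = scores[y[i]]
        for j in range(num_classes):
            if j == y[i]:
                continue              # the sum skips the correct class
            margin = scores[j] - correct_score + 1.0
            if margin > 0:
                loss += margin
                dW[:, j] += X[i]      # incorrect class: add x_i
                dW[:, y[i]] -= X[i]   # correct class: subtract x_i
    loss = loss / num_train + 0.5 * reg * np.sum(W * W)
    dW = dW / num_train + reg * W
    return loss, dW

# Toy usage with random data (sizes chosen only for illustration).
rng = np.random.default_rng(0)
X = rng.standard_normal((6, 4))
y = np.array([0, 1, 2, 0, 1, 2])
W = 0.01 * rng.standard_normal((4, 3))
loss, dW = svm_loss_naive_sketch(W, X, y, reg=0.1)
```

With near-zero weights every margin is close to 1, so the loss is close to $C - 1 = 2$, a handy sanity check. On the same inputs this version and the vectorized one should return the same loss and dW up to floating-point error.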

Fully vectorized code for computing the SVM loss and the gradient of W:

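```python
import numpy as np

def svm_loss_vectorized(W, X, y, reg):
    """Structured SVM loss and gradient, fully vectorized.

    W: (D, C) weights; X: (N, D) data, one example per row;
    y: (N,) labels in 0..C-1; reg: regularization strength.
    Returns (loss, dW), where dW has the same shape as W.
    """
    num_train = X.shape[0]
    scores = X.dot(W)                                    # (N, C)
    correct_scores = scores[np.arange(num_train), y]     # (N,)
    correct_scores = np.reshape(correct_scores, (num_train, 1))
    margins = scores - correct_scores + 1
    margins[np.arange(num_train), y] = 0.0               # skip j == y_i
    margins[margins <= 0] = 0.0                          # max(0, .)
    loss = np.sum(margins) / num_train
    loss += reg * 0.5 * np.sum(W * W)

    # The loss above is easy to derive. For dW, work it out on scratch paper using
    # the margins matrix. It helps to derive the non-vectorized version first: after
    # comparing each margin with 0, a positive margin adds X[i] to the column of the
    # incorrect class and subtracts X[i] from the column of the correct class.
    margins[margins > 0] = 1
    row_sum = np.sum(margins, axis=1)
    margins[np.arange(num_train), y] = -row_sum
    dW = X.T.dot(margins) / num_train
    dW += reg * W

    return loss, dW
```

4. Stochastic gradient descent (SGD)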

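Our goal is to find the most suitable weights W for every class, so that when any image x is multiplied by W, the score of its correct class is as high as possible relative to the other classes. How do we find such a weight matrix W? We already have a quantitative criterion for how good a weight matrix is: the loss function. The smaller the loss, the better the weight matrix W.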

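In other words, with the training data X fixed, the loss L is a function of W, and we want to minimize it. We therefore compute the gradient of L with respect to W and repeatedly move W a small step in the direction of the negative gradient, which makes L smaller.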
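Because SGD relies entirely on the analytic gradient, it is worth checking it numerically before training. The sketch below compares an analytic gradient with centered finite differences at a few random entries of W and reports relative errors. The name `numeric_grad_check_sketch` is hypothetical, and the demo uses a function with a known gradient ($\frac{1}{2}\lambda \sum W^2$, gradient $\lambda W$) so that it runs on its own.

```python
import numpy as np

def numeric_grad_check_sketch(f, W, analytic_grad, num_checks=10, h=1e-5, seed=0):
    """Return relative errors between analytic_grad and centered differences of f.

    f maps a weight array to a scalar loss; W is perturbed in place and restored.
    """
    rng = np.random.default_rng(seed)
    errors = []
    for _ in range(num_checks):
        ix = tuple(rng.integers(0, n) for n in W.shape)  # random entry of W
        old = W[ix]
        W[ix] = old + h
        f_plus = f(W)
        W[ix] = old - h
        f_minus = f(W)
        W[ix] = old  # restore the original value
        numeric = (f_plus - f_minus) / (2 * h)
        analytic = analytic_grad[ix]
        denom = max(abs(numeric) + abs(analytic), 1e-12)
        errors.append(abs(numeric - analytic) / denom)
    return errors

# Demo on a function with a known gradient: f(W) = 0.5 * reg * sum(W**2), grad = reg * W.
reg = 0.3
W = np.random.default_rng(1).standard_normal((5, 3))
f = lambda w: 0.5 * reg * np.sum(w * w)
errs = numeric_grad_check_sketch(f, W, reg * W)
print(max(errs))
```

For the SVM itself one would pass something like `lambda w: svm_loss_vectorized(w, X_dev, y_dev, 0.0)[0]` together with the `dW` returned for the same inputs. Occasional larger errors can appear when an entry sits right at a kink of the max(0, ·) hinge.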

The training routine is as follows:

```python
import numpy as np

# Method of the classifier class used below (e.g. LinearSVM): self.W holds the
# weights and self.loss(X_batch, y_batch, reg) returns (loss, gradient).
def train(self, X, y, learning_rate=1e-3, reg=1e-5, num_iters=100,
          batch_size=200, verbose=False):
    num_train, dim = X.shape
    num_classes = np.max(y) + 1  # assume y takes values 0...K-1 where K is number of classes
    if self.W is None:
        # lazily initialize W
        self.W = 0.001 * np.random.randn(dim, num_classes)

    # Run stochastic gradient descent to optimize W
    loss_history = []
    for it in range(num_iters):
        # Use a mini-batch to shorten each training step
        indices = np.random.choice(num_train, batch_size, replace=True)
        X_batch = X[indices, :]
        y_batch = y[indices]

        # evaluate loss and gradient
        loss, grad = self.loss(X_batch, y_batch, reg)
        loss_history.append(loss)

        # Update the weight matrix W with one gradient step
        self.W += -learning_rate * grad

        if verbose and it % 100 == 0:
            print('iteration %d / %d: loss %f' % (it, num_iters, loss))

    return loss_history
```

5. Tuning the hyperparameters

The main hyperparameters to tune are the learning rate and the regularization strength. After tuning, the author's experiments reached a validation accuracy of 39.5%.
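The tuning loop calls `svm.predict`, which is not shown here. Assuming prediction simply picks the highest-scoring class, a minimal stand-alone version (the name `predict_sketch` is hypothetical) and the accuracy computation used in the loop look like this:

```python
import numpy as np

def predict_sketch(W, X):
    """Predict a label for each row of X (N, D) with weights W (D, C): the highest-scoring class."""
    scores = X.dot(W)
    return np.argmax(scores, axis=1)

W = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, -0.5]])  # toy weights; last row acts as the bias
X = np.array([[2.0, 1.0, 1.0], [0.0, 3.0, 1.0]])     # toy inputs with a bias column of ones
y = np.array([0, 1])
y_pred = predict_sketch(W, X)
print(y_pred, np.mean(y == y_pred))  # [0 1] 1.0
```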

```python
import numpy as np

# Assumes LinearSVM and the preprocessed X_train, y_train, X_val, y_val from the steps above.
learning_rates = [1e-7, 3e-7]
regularization_strengths = [5e4, 4e4, 6e4, 3e4]

results = {}
best_val = -1    # The highest validation accuracy that we have seen so far.
best_svm = None  # The LinearSVM object that achieved the highest validation rate.
iters = 1000

for lr in learning_rates:
    for rs in regularization_strengths:
        svm = LinearSVM()
        svm.train(X_train, y_train, learning_rate=lr, reg=rs, num_iters=iters)
        y_train_pred = svm.predict(X_train)
        acc_train = np.mean(y_train == y_train_pred)
        y_val_pred = svm.predict(X_val)
        acc_val = np.mean(y_val == y_val_pred)
        results[(lr, rs)] = (acc_train, acc_val)
        if best_val < acc_val:
            best_val = acc_val
            best_svm = svm

# Print out results.
for lr, reg in sorted(results):
    train_accuracy, val_accuracy = results[(lr, reg)]
    print('lr %e reg %e train accuracy: %f val accuracy: %f' % (
        lr, reg, train_accuracy, val_accuracy))

print('best validation accuracy achieved during cross-validation: %f' % best_val)
```

Output:

```
lr 1.000000e-07 reg 3.000000e+04 train accuracy: 0.376469 val accuracy: 0.382000
lr 1.000000e-07 reg 4.000000e+04 train accuracy: 0.373612 val accuracy: 0.380000
lr 1.000000e-07 reg 5.000000e+04 train accuracy: 0.373633 val accuracy: 0.395000
lr 1.000000e-07 reg 6.000000e+04 train accuracy: 0.355490 val accuracy: 0.370000
lr 3.000000e-07 reg 3.000000e+04 train accuracy: 0.345122 val accuracy: 0.356000
lr 3.000000e-07 reg 4.000000e+04 train accuracy: 0.358592 val accuracy: 0.376000
lr 3.000000e-07 reg 5.000000e+04 train accuracy: 0.349041 val accuracy: 0.356000
lr 3.000000e-07 reg 6.000000e+04 train accuracy: 0.356878 val accuracy: 0.356000
best validation accuracy achieved during cross-validation: 0.395000
```