16. The GlusterFS Distributed File System

GlusterFS

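Lab objective:

Understand the theory and configuration of GlusterFS.

Lab theory:

The origin of the GlusterFS (Gluster File System) distributed file system:

Take Taobao as an example. Suppose Taobao kept all of its nationwide data in Hangzhou. What happens on Singles' Day (November 11), when traffic is enormous? (Concurrency itself is handled with load balancers.) Huge numbers of users read from and write to the disk array at every moment, and under that load even a very powerful disk array will eventually collapse. Even when traffic is light, some users are far from Hangzhou and some are close, so access speed differs from user to user.

A distributed file system can solve these problems. The idea is to place a server in every city, so that users in Shanghai access the Shanghai server and users in Beijing access the Beijing server. The data as a whole behaves like one pool: it is cut into data shards, and the shards of the pool are stored on servers in different cities.

Within each city, RAID protects the data against disk failures, and LVM lets the storage grow by adding disks as the data accumulates.

Even if the data in one city is damaged, it can be restored.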

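Four distributed file systems are in common use today:

1. GlusterFS 2. Ceph 3. MooseFS 4. Lustre

GlusterFS and Ceph are the strongest two of the four, and both are now owned by Red Hat.

For now GlusterFS dominates; the future is likely to belong to Ceph.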

Lab topology:

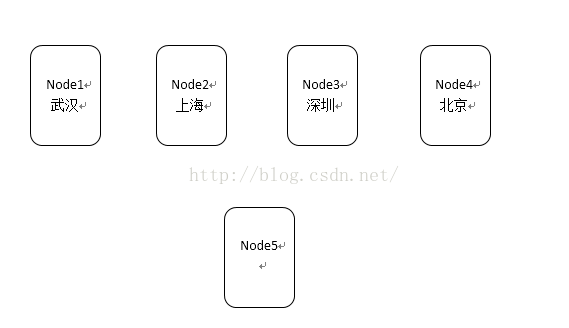

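Node1, Node2, Node3, Node4 and Node5 are assigned the IP addresses 192.168.1.121 through 192.168.1.125.

Notes:

1. Disable the firewall and SELinux on every machine.

2. Node1 through Node5 are installed from the glusterfs 3.0 ISO image. At the installer boot screen, press Esc, type linux and press Enter. The installer then copies only the files it needs from the ISO, so installation is faster. If you simply press Enter at the boot screen, the whole ISO is copied over and installation is very slow.

Lab steps:

Step 1. Create the partitions

Node1, 2, 3, 4: perform this configuration on all of nodes 1, 2, 3 and 4

[root@node1 ~]# vim /etc/hosts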

192.168.1.121 node1.example.com node1

192.168.1.122 node2.example.com node2

192.168.1.123 node3.example.com node3

192.168.1.124 node4.example.com node4

192.168.1.125 node5.example.com node5
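
The five entries above follow a regular pattern, so they could be generated rather than typed on each node. The following is a minimal sketch, assuming the nodeN.example.com naming scheme used in this lab. The function name gen_hosts is hypothetical, and the output would still have to be appended to /etc/hosts on each node.

```shell
# Print /etc/hosts entries for node1..nodeN.
# Arguments: network prefix, first host octet, number of nodes.
gen_hosts() (
  prefix=$1 start=$2 count=$3
  i=1
  while [ "$i" -le "$count" ]; do
    printf '%s.%d node%d.example.com node%d\n' \
      "$prefix" "$((start + i - 1))" "$i" "$i"
    i=$((i + 1))
  done
)

gen_hosts 192.168.1 121 5
```

Reviewing the printed lines before appending them keeps a typo from breaking name resolution on every node at once.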

[root@node1 ~]# service glusterd status

glusterd (pid 1207) is running...    (a system installed from the dedicated ISO starts the glusterd service by default; on other systems you must install glusterd yourself)

[root@node1 ~]# fdisk -cu /dev/sda

Command (m for help): n

Command action

e extended

p primary partition (1-4)

e

Selected partition 4

First sector (107005952-209715199, default 107005952):

Using default value 107005952

Last sector, +sectors or +size{K,M,G} (107005952-209715199, default 209715199):

Using default value 209715199

Command (m for help): n

First sector (107008000-209715199, default 107008000):

Using default value 107008000

Last sector, +sectors or +size{K,M,G} (107008000-209715199, default 209715199): +10G

Command (m for help): t

Partition number (1-5): 5

Hex code (type L to list codes): 8e

Changed system type of partition 5 to 8e (Linux LVM)

Command (m for help): w

The partition table has been altered!

[root@node1 ~]# partx -a /dev/sda

BLKPG: Device or resource busy

error adding partition 1

BLKPG: Device or resource busy

error adding partition 2

BLKPG: Device or resource busy

error adding partition 3

[root@node1 ~]# pvcreate /dev/sda5

Physical volume "/dev/sda5" successfully created

[root@node1 ~]# vgcreate vg_bricks /dev/sda5

Volume group "vg_bricks" successfully created

[root@node1 ~]# lvcreate -L 5G -T vg_bricks/brickspool    # In vg_bricks, create a 5G thin pool named brickspool

Logical volume "lvol0" created

Logical volume "brickspool" created

[root@node1 ~]# lvcreate -V 1G -T vg_bricks/brickspool -n brick1    # In the brickspool pool, create a 1G logical volume named brick1

Logical volume "brick1" created

[root@node1 ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick1    # Specify the inode size when formatting with XFS

meta-data=/dev/vg_bricks/brick1 isize=512 agcount=8, agsize=32768 blks

= sectsz=512 attr=2, projid32bit=0

data = bsize=4096 blocks=262144,imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=2560,version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@node1 ~]# mkdir -p /bricks/brick1

[root@node1 ~]# echo "/dev/vg_bricks/brick1 /bricks/brick1 xfs defaults 0 0" >> /etc/fstab    # Mount /dev/vg_bricks/brick1 on /bricks/brick1 automatically at boot

[root@node1 ~]# mount -a

[root@node1 ~]# mkdir /bricks/brick1/brick    # After mounting, create the directory that will hold the data shards
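
The same brick-provisioning sequence is repeated later in this lab for other brick names, so it can help to see it as one parameterized list. The sketch below only prints the commands (it does not run them); the function name brick_setup_cmds is hypothetical, and the volume group and pool names are the ones used in this lab.

```shell
# Print (do not run) the commands that provision one thin-provisioned brick.
# Arguments: volume group, thin pool, brick name, virtual size.
brick_setup_cmds() (
  vg=$1 pool=$2 name=$3 size=$4
  cat <<EOF
lvcreate -V $size -T $vg/$pool -n $name
mkfs.xfs -i size=512 /dev/$vg/$name
mkdir -p /bricks/$name
echo "/dev/$vg/$name /bricks/$name xfs defaults 0 0" >> /etc/fstab
mount -a
mkdir /bricks/$name/brick
EOF
)

brick_setup_cmds vg_bricks brickspool brick1 1G
```

Printing first makes it easy to check that the brick directory is created only after the file system is mounted, which is the order the lab relies on.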

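Step 2. Set up Gluster

Node1:

Once every city node has been partitioned, combine the nodes into one large "pool" that manages the data as a whole, including dynamic splitting and placement of data and redundant copies. The commands are run on node1, which this lab treats as the primary node by default.

[root@node1 ~]# gluster    # Run gluster to enter the gluster command line

gluster> peer probe node1.example.com

peer probe: success. Probe on localhost not needed    (run on node1: a node does not need to probe itself)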

gluster> peer probe node2.example.com

peer probe: success.

gluster> peer probe node3.example.com

peer probe: success.

gluster> peer probe node4.example.com

peer probe: success.

gluster> peer status

Number of Peers: 3

Hostname: node2.example.com

Uuid: ce2fe11c-94b8-4884-9d3a-271f01eff280

State: Peer in Cluster (Connected)

Hostname: node3.example.com

Uuid: 28db58f8-5f8a-4a7f-94a9-03a8a8137fdd

State: Peer in Cluster (Connected)

Hostname: node4.example.com

Uuid: 808a34d9-80cf-4077-acfa-f255f52aa9be

State: Peer in Cluster (Connected)

Create the first GlusterFS volume with pairwise replication

[root@node1 ~]# gluster

gluster> volume create firstvol replica 2 node1.example.com:/bricks/brick1/brick node2.example.com:/bricks/brick1/brick node3.example.com:/bricks/brick1/brick node4.example.com:/bricks/brick1/brick    (create the firstvol volume)

volume create: firstvol: success: please start the volume to access data

gluster> volume start firstvol    (after creating firstvol, start it; otherwise it cannot be used)

volume start: firstvol: success

gluster> volume info firstvol    (show information about the firstvol volume)

Volume Name: firstvol

Type: Distributed-Replicate

Volume ID: 4087aecf-a4fe-4773-a1af-fa19d6a7aba5

Status: Started

Snap Volume: no

Number of Bricks: 2 x 2 = 4    (pairwise replication)

Transport-type: tcp

Bricks:

Brick1: node1.example.com:/bricks/brick1/brick

Brick2: node2.example.com:/bricks/brick1/brick

Brick3: node3.example.com:/bricks/brick1/brick

Brick4: node4.example.com:/bricks/brick1/brick

Options Reconfigured:

performance.readdir-ahead: on

auto-delete: disable

snap-max-soft-limit: 90

snap-max-hard-limit: 256

gluster> exit    (settings are saved automatically on exit)

Client NFS mount:

[root@node5 ~]# mount -t nfs node1.example.com:/firstvol /mnt    # Mount the firstvol volume from the primary node node1 over NFS

[root@node5 ~]# cd /mnt

[root@node5 mnt]# touch file{0..9}    # Create 10 files

[root@node5 mnt]# ls

file0 file1 file2 file3 file4 file5 file6 file7 file8 file9

Node1:

[root@node1 ~]# cd /bricks/brick1/brick/

[root@node1 brick]# ls

file3 file4 file7 file9

Node2:

[root@node2 brick1]# cd brick/

[root@node2 brick]# ls

file3 file4 file7 file9

Node3:

[root@node3 ~]# cd /bricks/brick1/brick

[root@node3 brick]# ls

file0 file1 file2 file5 file6 file8

Node4:

[root@node4 ~]# cd /bricks/brick1/brick/

[root@node4 brick]# ls

file0 file1 file2 file5 file6 file8
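
The listings above show node1 and node2 holding one identical set of files and node3 and node4 holding another. That matches how a replica 2 volume groups its bricks: consecutive bricks in the volume create command form one replica set. The sketch below reproduces that grouping; the function name replica_sets is hypothetical and the script only illustrates the ordering rule, it does not talk to gluster.

```shell
# Group bricks into replica sets in the order they were listed.
# Arguments: replica count, then the bricks.
replica_sets() (
  replica=$1
  shift
  i=0 n=1 line=
  for brick in "$@"; do
    line="${line:+$line }$brick"
    i=$((i + 1))
    if [ $((i % replica)) -eq 0 ]; then
      echo "set$n: $line"
      line=
      n=$((n + 1))
    fi
  done
)

replica_sets 2 node1 node2 node3 node4
# set1: node1 node2
# set2: node3 node4
```

Because the grouping follows the argument order, listing two bricks from the same machine next to each other would place both copies of a file on that machine.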

Client Samba mount:

Node1: Samba shares and mounts over the CIFS protocol; this lab installs the cifs-utils package on both the server and the client

[root@node1 ~]# service smb restart

Shutting down SMB services: [FAILED]

Starting SMB services: [ OK ]

[root@node1 ~]# service nmb restart

Shutting down NMB services: [FAILED]

Starting NMB services: [ OK ]

[root@node1 ~]# chkconfig smb on

[root@node1 ~]# chkconfig nmb on

[root@node1 ~]# useradd user1

[root@node1 ~]# smbpasswd -a user1

New SMB password:

Retype new SMB password:

Added user user1.

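[root@node1 ~]# vim /etc/samba/smb.conf    # You only need to start the Samba service; you do not have to write smb.conf yourself

[gluster-firstvol]    (this section is written into the configuration file automatically)

comment = For samba share of volume firstvol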

vfs objects = glusterfs

glusterfs:volume = firstvol

glusterfs:logfile = /var/log/samba/glusterfs-firstvol.%M.log

glusterfs:loglevel = 7

path = /

read only = no

guest ok = yes

Node5:

[root@node5 ~]# smbclient -L 192.168.1.121    # Look up the name of the share that Samba exports over CIFS

Enter root's password:

Anonymous login successful

Domain=[MYGROUP] OS=[Unix] Server=[Samba 3.6.9-169.1.el6rhs]

Sharename Type Comment

--------- ---- -------    (the share name is gluster-firstvol)

gluster-firstvol Disk For samba share of volume firstvol

IPC$ IPC IPC Service (Samba Server Version 3.6.9-169.1.el6rhs)

Anonymous login successful

Domain=[MYGROUP] OS=[Unix] Server=[Samba 3.6.9-169.1.el6rhs]

Server Comment

--------- -------

NODE1 Samba Server Version 3.6.9-169.1.el6rhs

NODE5 Samba Server Version 3.6.9-169.1.el6rhs

Workgroup Master

--------- -------

MYGROUP NODE1

[root@node5 ~]# mount -t cifs //192.168.1.121/gluster-firstvol /mnt -o user=user1,password=redhat

[root@node5 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda2 50395844 1751408 46084436 4% /

tmpfs 506168 0 506168 0% /dev/shm

/dev/sda1 198337 29772 158325 16% /boot

//192.168.1.121/gluster-firstvol

2076672 66592 2010080 4% /mnt

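Mounted successfully!

Step 3. Add nodes

If the disk space on your nodes runs out, add new storage (here, new bricks on Node3 and Node4) to handle the larger volume of data

Node3: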

[root@node3 brick]# lvcreate -V 1G -T vg_bricks/brickspool -n brick2

Logical volume "brick2" created

[root@node3 brick]# mkfs.xfs -i size=512 /dev/vg_bricks/brick2

meta-data=/dev/vg_bricks/brick2 isize=512 agcount=8, agsize=32768 blks

= sectsz=512 attr=2, projid32bit=0

data = bsize=4096 blocks=262144, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=2560,version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@node3 brick]# mkdir /bricks/brick2/

[root@node3 ~]# echo "/dev/vg_bricks/brick2 /bricks/brick2 xfs defaults 0 0" >> /etc/fstab

[root@node3 ~]# mount -a

[root@node3 brick2]# mkdir /bricks/brick2/brick

Node4:

[root@node4 brick]# lvcreate -V 1G -T vg_bricks/brickspool -n brick2

Logical volume "brick2" created

[root@node4 brick]# mkfs.xfs -i size=512 /dev/vg_bricks/brick2

meta-data=/dev/vg_bricks/brick2 isize=512 agcount=8, agsize=32768 blks

= sectsz=512 attr=2, projid32bit=0

data = bsize=4096 blocks=262144,imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=2560,version=2

= sectsz=512 sunit=0 blks,lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@node4 brick]# mkdir /bricks/brick2/

[root@node4 ~]# echo "/dev/vg_bricks/brick2 /bricks/brick2 xfs defaults 0 0" >> /etc/fstab

[root@node4 ~]# mount -a

[root@node4 brick2]# mkdir /bricks/brick2/brick

Node1:

gluster> volume add-brick firstvol node3.example.com:/bricks/brick3/brick node4.example.com:/bricks/brick3/brick

volume add-brick: success

gluster> volume info firstvol

Volume Name: firstvol

Type: Distributed-Replicate

Volume ID:4087aecf-a4fe-4773-a1af-fa19d6a7aba5

Status: Started

Snap Volume: no

Number of Bricks: 3 x 2 = 6

Transport-type: tcp

Bricks:

Brick1:node1.example.com:/bricks/brick1/brick

Brick2:node2.example.com:/bricks/brick1/brick

Brick3:node3.example.com:/bricks/brick1/brick

Brick4:node4.example.com:/bricks/brick1/brick

Brick5:node3.example.com:/bricks/brick3/brick

Brick6:node4.example.com:/bricks/brick3/brick

Options Reconfigured:

performance.readdir-ahead: on

auto-delete: disable

snap-max-soft-limit: 90

snap-max-hard-limit: 256

gluster>exit

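Step 4. Rebalance

Although the bricks were added successfully, the new space is still empty (existing data is not redistributed automatically)

[root@node3 /]# ls /bricks/brick3/brick

[root@node3 /]#

Even data added now does not land there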

[root@node5 mnt]# touch text{0..10}

[root@node5 mnt]# ls

file0 file2 file4 file6 file8 text0 text10 text3 text5 text7 text9

file1 file3 file5 file7 file9 text1 text2 text4 text6 text8

[root@node1 ~]# cd /bricks/brick1/brick    # New data lands on Node1 and Node2

[root@node1 brick]# ls

file3 file4 file7 file9 text1 text3 text8 text9

[root@node3 /]# ls /bricks/brick1/brick/    # New data lands in /bricks/brick1 on Node3 and Node4

file0 file1 file2 file5 file6 file8 text0 text10 text2 text4 text5 text6 text7

[root@node3 /]# ls /bricks/brick3/brick    # But the newly added space on Node3 and Node4 has no content!

[root@node3 /]#

No matter how much data you add, the new space stays empty.

For this reason, use rebalance to spread the data evenly across the bricks

[root@node1 brick]# gluster volume rebalance firstvol start

volume rebalance: firstvol: success: Starting rebalance on volume firstvol has been successful.

ID: fc79b11d-13a0-4428-9e53-79f04dbc65a4

[root@node3 /]# ls /bricks/brick3/brick

file0 file1 file2 text0 text10 text3

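[root@node4 ~]# ls /bricks/brick3/brick    # The new space on Node3 and Node4 has now received data

file0 file1 file2 text0 text10 text3

Existing data is redistributed as well.

After rebalancing, the bricks do not all hold the same amount of data. GlusterFS places each file according to a hash of its file name, so newly created files spread across the replica sets in a way that can look like round-robin. For example, one new file may land on node1 & node2, the next on the original space of node3 & node4, and a third on the new space of node3 & node4.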
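
GlusterFS decides where a file goes from a hash of its name rather than from a counter, which is why the distribution is even only on average. The toy sketch below illustrates just that idea. It uses cksum modulo the number of replica sets, which is an assumption made for illustration and is not Gluster's actual hash function or layout-range mechanism; the function name toy_subvol is hypothetical.

```shell
# Toy placement: map a file name to one of nsub replica sets.
# Arguments: file name, number of replica sets.
toy_subvol() (
  name=$1 nsub=$2
  # cksum prints "<crc> <length>"; keep only the CRC.
  crc=$(printf '%s' "$name" | cksum | cut -d' ' -f1)
  echo $((crc % nsub))
)

for f in file0 file1 text0; do
  echo "$f -> replica set $(toy_subvol "$f" 3)"
done
```

The same name always maps to the same replica set, so a client can locate a file without asking a central metadata server.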

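Step 5. ACL and Quota

ACL:

A file system mounted without the acl option cannot have ACL permissions set on it; the acl option has to be added first

node5:

[root@node5 mnt]# setfacl -m u:user1:rw- /mnt

setfacl: /mnt: Operation not supported    (ACLs are not supported yet)

Add the acl option to the mount entry

[root@node5 home]# vim /etc/fstab

node1.example.com:/firstvol /mnt nfs defaults,_netdev,acl 0 0

Enable ACL support in gluster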

[root@node1 brick]# gluster

gluster> volume set firstvol acl on

volume set: success

gluster> exit

Remount the file system

[root@node5 home]# mount -o acl node1.example.com:/firstvol /mnt

Set the user's ACL again

[root@node5 ~]# mount

node1:/firstvol on /mnt type nfs (rw,acl,addr=192.168.1.121)

[root@node5 mnt]# setfacl -m u:user1:rw- /mnt

[root@node5 home]# getfacl /mnt

getfacl: Removing leading '/' from absolute path names

# file: mnt

# owner: root

# group: root

user::rwx

user:user1:rw-

group::r-x

mask::rwx

other::r-x

[root@node5 ~]# cd /mnt

[root@node5 mnt]# su user1

[user1@node5 mnt]$ touch 123

[user1@node5 mnt]$ ls

file0 file2 file4 file6 file8 text0 text10 text3 text5 text7 text9

file1 file3 file5 file7 file9 text1 text2 text4 text6 text8 123

Quota:

Ordinary disks support disk quotas, and GlusterFS offers a quota feature as well

Node1:

[root@node1 /]# gluster

gluster> volume quota firstvol enable    (enable the quota feature)

volume quota : success

Node5:

[root@node5 /]# cd /mnt

[root@node5 mnt]# mkdir Limit

[root@node5 mnt]# ls

file0 file2 file4 file6 file8 Limit text1 text2 text4 text6 text8

file1 file3 file5 file7 file9 text0 123 text3 text5 text7 text9 text10

Node1:

Limit /mnt/Limit so that it can use only 20 MB of space

gluster> volume quota firstvol limit-usage /Limit 20MB    (set the size limit)

volume quota : success

Verification:

Create a 25 MB file in /mnt/Limit

[root@node5 mnt]# cd /mnt/Limit

[root@node5 Limit]# ls

[root@node5 Limit]# dd if=/dev/zero of=bigfile bs=1M count=25

25+0 records in

25+0 records out

26214400 bytes (26 MB) copied, 2.22657 s,11.8 MB/s

[root@node5 Limit]# ls

bigfile

[root@node5 Limit]# ll -h

total 25M

-rw-r--r-- 1 root root 25M Nov 3 2015 bigfile

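The full 25 MB was written, which shows that the quota had not taken effect yet. The data had not been flushed to disk, so the usage had not been accounted for; once the data is synced to disk, the quota is enforced

[root@node5 Limit]# sync

[root@node5 Limit]# dd if=/dev/zero of=bigfile1 bs=1M count=25

dd: opening `bigfile1': Disk quota exceeded

gluster> volume quota firstvol list    (list the quotas)

Path Hard-limit Soft-limit Used Available Soft-limit exceeded? Hard-limit exceeded?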

---------------------------------------------------------------------------------------------------------------------------

/Limit 20.0MB 80% 50.0MB 0Bytes Yes Yes
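
The two "exceeded?" columns in the listing follow from simple arithmetic on the hard limit and the soft-limit percentage. The sketch below works through it for the 25 MB file written above. It assumes that 1 MB is counted as 1024 × 1024 bytes; the function name quota_state is hypothetical and the script does not query gluster.

```shell
# Classify a usage figure against a hard limit and a soft-limit percentage.
# Arguments: bytes used, hard limit in MB, soft limit in percent.
quota_state() (
  used=$1 hard_mb=$2 soft_pct=$3
  hard=$((hard_mb * 1024 * 1024))   # assumption: 1 MB = 1024 * 1024 bytes
  soft=$((hard * soft_pct / 100))
  if [ "$used" -ge "$hard" ]; then
    echo hard-exceeded
  elif [ "$used" -ge "$soft" ]; then
    echo soft-exceeded
  else
    echo ok
  fi
)

quota_state 26214400 20 80   # the 25 MB file against a 20MB limit: hard-exceeded
```

With a 20 MB hard limit and an 80% soft limit, the soft threshold sits at 16 MB, so usage between 16 MB and 20 MB would be flagged without writes being refused.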

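Step 6. Failover between nodes with CTDB

The experiments above have a weakness. Node1 acts as the primary node, and node5 mounts the firstvol "pool" directly from node1, so when node1 fails the client loses access to the whole GlusterFS volume.

To provide fault tolerance and reliability, the service must be able to fail over between nodes: when one node fails, another node takes over and keeps serving. The technology used here is CTDB. It requires a VIP (virtual IP), and from the outside GlusterFS is accessed through that VIP.

Node1, 2, 3, 4: perform this configuration on all of nodes 1, 2, 3 and 4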

[root@node1 /]# lvcreate -V 1G -T vg_bricks/brickspool -n brick4

Logical volume "brick4" created

[root@node1 /]# mkfs.xfs -i size=512 /dev/vg_bricks/brick4

meta-data=/dev/vg_bricks/brick4 isize=512 agcount=8, agsize=32768 blks

= sectsz=512 attr=2, projid32bit=0

data = bsize=4096 blocks=262144,imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=2560,version=2

= sectsz=512 sunit=0 blks,lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@node1 /]# mkdir /bricks/brick4

[root@node1 /]# echo "/dev/vg_bricks/brick4 /bricks/brick4 xfs defaults 0 0" >> /etc/fstab

[root@node1 /]# mount -a

[root@node1 /]# mkdir /bricks/brick4/brick

[root@node1 brick]# gluster

gluster> volume create ctdb replica 4 node1.example.com:/bricks/brick4/brick node2.example.com:/bricks/brick4/brick node3.example.com:/bricks/brick4/brick node4.example.com:/bricks/brick4/brick

volume create: ctdb: success: please start the volume to access data

Do not start the ctdb volume yet; first change the configuration on all nodes

Edit the CTDB hook scripts; node1 through node4 all need the same change

[root@node1 brick]# vim /var/lib/glusterd/hooks/1/start/post/S29CTDBsetup.sh

# to prevent the script from running for volumes it was not intended.

# User needs to set META to the volume that serves CTDB lockfile.

META="ctdb"    # This name must match the name of the gluster volume you created

[root@node1 brick]# vim /var/lib/glusterd/hooks/1/stop/pre/S29CTDB-teardown.sh

# to prevent the script from running for volumes it was not intended.

# User needs to set META to the volume that serves CTDB lockfile.

META="ctdb"    # This name must match the name of the gluster volume you created

Start the ctdb volume

gluster> volume start ctdb

volume start: ctdb: success

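Install the ctdb2.5 package; do the same on node1 through node4

[root@node1 /]# yum -y remove ctdb    # To install ctdb 2.5, first remove the existing ctdb

[root@node1 /]# yum -y install ctdb2.5

Create the nodes file (it specifies which machines take part in failover); configure it on node1 through node4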

[root@node1 ~]# vim /etc/ctdb/nodes

192.168.1.121

192.168.1.122

192.168.1.123

192.168.1.124

Create the VIP file, which specifies the VIP address and the network interface on which it floats. Configure it on node1 through node4

[root@node1 /]# vim /etc/ctdb/public_addresses

192.168.1.1/24 eth0
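
Each line of the public addresses file shown above has the form "address/prefix interface", and a malformed line is easy to miss when the file is edited by hand on four nodes. The sketch below is a purely syntactic check under that assumption; the function name valid_public_address is hypothetical, and it does not verify that the address or interface actually exists.

```shell
# Succeed if the line looks like "A.B.C.D/prefix interface".
valid_public_address() {
  printf '%s\n' "$1" |
    grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}/[0-9]{1,2} [[:alnum:]]+$'
}

valid_public_address "192.168.1.1/24 eth0" && echo "looks valid"
```

A line without the /24 prefix length, for example, fails the check.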

Start the ctdb service. It must be running on node1 through node4, otherwise failover will not work

[root@node1 /]# /etc/init.d/ctdb restart

Shutting down ctdbd service: CTDB is not running

[ OK ]

Starting ctdbd service: [ OK ]

[root@node1 /]# chkconfig ctdb on

[root@node1 ~]# ctdb status

Number of nodes:4

pnn:0 192.168.1.121 OK

pnn:1 192.168.1.122 OK

pnn:2 192.168.1.123 OK

pnn:3 192.168.1.124 OK (THIS NODE)

Generation:1192510318

Size:4

hash:0 lmaster:0

hash:1 lmaster:1

hash:2 lmaster:2

hash:3 lmaster:3

Recovery mode:NORMAL (0)

Recovery master:0

[root@node2 /]# ctdb ip

Public IPs on node 1

192.168.1.1 -1

CTDB is slow to bring up the VIP, so be patient

The VIP 192.168.1.1/24 has come up on Node4, which means node4 is currently the primary node

[root@node4 ~]# ip addr list

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

2: eth0:

link/ether 00:0c:29:42:b7:f2 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.124/24 brd 192.168.1.255 scope global eth0

inet 192.168.1.1/24 brd 192.168.1.255 scope global secondary eth0

Ping the VIP from Node5 to verify it

[root@node5 ~]# ping 192.168.1.1

PING 192.168.1.1 (192.168.1.1) 56(84) bytes of data.

64 bytes from 192.168.1.1: icmp_seq=1 ttl=64 time=4.64 ms

64 bytes from 192.168.1.1: icmp_seq=2 ttl=64 time=0.609 ms

^C

--- 192.168.1.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1380ms

rtt min/avg/max/mdev = 0.609/2.625/4.642/2.017 ms

The VIP is reachable

NFS mount:

Node5 mounts through the VIP

[root@node5 ~]# mount -t nfs 192.168.1.1:/ctdb /mnt

[root@node5 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda2 50395844 1748972 46086872 4% /

tmpfs 506168 0 506168 0% /dev/shm

/dev/sda1 198337 29772 158325 16% /boot

192.168.1.1:/ctdb 1038336 32768 1005568 4% /mnt

[root@node5 ~]# cd /mnt

[root@node5 mnt]# touch file{1..5}

[root@node5 mnt]# ls

file1 file2 file3 file4 file5 lockfile

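Failover between nodes only works if the nodes that take over from one another hold identical data; that is what keeps the data consistent when the VIP moves. CTDB failover therefore requires the same data on every participating node.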

[root@node1 ~]# ls /bricks/brick1/brick/

file1 file2 file3 file4 file5 lockfile

[root@node2 ~]# ls /bricks/brick1/brick/

file1 file2 file3 file4 file5 lockfile

[root@node3 ~]# ls /bricks/brick1/brick/

file1 file2 file3 file4 file5 lockfile

[root@node4 ~]# ls /bricks/brick1/brick/

file1 file2 file3 file4 file5 lockfile

Verify failover between nodes:

[root@node4 ~]# init 0    # Shut down node 4

[root@node1 ~]# ctdb status    # Check the other nodes: the VIP has moved to Node1

Number of nodes:4

pnn:0 192.168.1.121 OK (THIS NODE)

pnn:1 192.168.1.122 OK

pnn:2 192.168.1.123 OK

pnn:3 192.168.1.124 DISCONNECTED|UNHEALTHY|INACTIVE

Generation:495021029

Size:3

hash:0 lmaster:0

hash:1 lmaster:1

hash:2 lmaster:2

Recovery mode:NORMAL (0)

Recovery master:0

[root@node1 ~]# ip addr list

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

2: eth0:

link/ether 00:0c:29:29:d3:3a brd ff:ff:ff:ff:ff:ff

inet 192.168.1.121/24 brd 192.168.1.255 scope global eth0

inet 192.168.1.1/24 brd 192.168.1.255 scope global secondary eth0

Node1 has become the primary node and takes over the VIP from Node4

The mount on Node5 still works, which shows that the failover succeeded
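
When checking a failover, it can be quicker to count healthy nodes than to read the whole status listing. The sketch below counts the pnn lines whose state is OK. It assumes the "pnn:N address state" column layout shown in this lab, and the function name count_ok_nodes is hypothetical; in practice the input would come from piping ctdb status into it.

```shell
# Count nodes reported as OK in `ctdb status` output read from stdin.
count_ok_nodes() {
  awk '/^pnn:/ && $3 == "OK" { n++ } END { print n + 0 }'
}

# Sample input copied from the status output after node4 was shut down.
count_ok_nodes <<'EOF'
pnn:0 192.168.1.121 OK (THIS NODE)
pnn:1 192.168.1.122 OK
pnn:2 192.168.1.123 OK
pnn:3 192.168.1.124 DISCONNECTED|UNHEALTHY|INACTIVE
EOF
# prints 3
```

Comparing the count with the number of lines in /etc/ctdb/nodes shows at a glance whether any node has dropped out.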

[root@node5 mnt]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda2 50395844 1749132 46086712 4% /

tmpfs 506168 0 506168 0% /dev/shm

/dev/sda1 198337 29772 158325 16% /boot

192.168.1.1:/ctdb 1038336 32768 1005568 4% /mnt

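Note: sometimes ctdb status does not show OK. What is going on?

Check the log: /var/log/log.ctdb will show (don't open /gluster/lock/lockfile. No such file or directory). In that case create the file by hand (on every node, creating the /gluster/lock directory first), then restart the service.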
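
The manual fix described above amounts to creating a directory and an empty file on every node. The sketch below wraps it in a small function. It assumes the lock file is named lockfile under /gluster/lock, as the log message suggests; the function name ensure_lockfile and the optional directory argument are hypothetical and exist only so the function can be tried in a scratch directory first.

```shell
# Create the lock directory and an empty lock file if they are missing.
# The optional argument overrides the directory (default: /gluster/lock).
ensure_lockfile() (
  dir=${1:-/gluster/lock}
  mkdir -p "$dir" && touch "$dir/lockfile"
)

# Dry run in a scratch directory.
scratch=$(mktemp -d)
ensure_lockfile "$scratch/gluster/lock" && ls "$scratch/gluster/lock"
```

On the real nodes the function would be called without an argument, followed by a restart of the ctdb service.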

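Step 7. Replication between sites

Replication between sites is mainly used to back up a site's data. After the master site produces new data, it is synchronized to the slave site in near real time, which protects against data loss at the master site. The slave site is only a backup of the master site: data on the master is replicated to the slave, but data created on the slave is not replicated back to the master.

Create the mastervol volume on Node1 & Node2, then mount and use it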

[root@node1 ~]# gluster

gluster> volume create mastervol replica 2 node1.example.com:/bricks/brick1/brick node2.example.com:/bricks/brick1/brick

volume create: mastervol: success: please start the volume to access data

gluster> volume start mastervol

volume start: mastervol: success

gluster> exit

[root@node1 ~]# echo "node1.example.com:/mastervol /mnt glusterfs defaults,_netdev 0 0" >> /etc/fstab

[root@node1 ~]# mount -a

[root@node1 /]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda2 50395844 1793688 46042156 4% /

tmpfs 506168 0 506168 0% /dev/shm

/dev/sda1 198337 29791 158306 16% /boot

/dev/mapper/vg_bricks-brick1

1038336 33296 1005040 4% /bricks/brick1

node1.example.com:/mastervol

1038336 33408 1004928 4% /mnt

Create the slavevol volume on Node5, then mount and use it

[root@node5 mnt]# gluster

gluster> volume create slavevol node5.example.com:/bricks/brick1/brick

volume create: slavevol: success: please start the volume to access data

gluster> volume start slavevol

volume start: slavevol: success

gluster> exit

[root@node5 /]# echo "node5.example.com:/slavevol /mnt glusterfs defaults,_netdev 0 0" >> /etc/fstab

[root@node5 /]# mount -a

[root@node5 /]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda2 50395844 1793544 46042300 4% /

tmpfs 506168 0 506168 0% /dev/shm

/dev/sda1 198337 29772 158325 16% /boot

/dev/mapper/vg_bricks-brick1

1038336 33296 1005040 4% /bricks/brick1

node5.example.com:/slavevol

1038336 33408 1004928 4% /mnt

On Node5, create the user and group that will be used to authenticate the replication account

[root@node5 /]# groupadd geogroup

[root@node5 /]# useradd -G geogroup geoaccount

[root@node5 /]# passwd geoaccount

Changing password for user geoaccount.

New password:

BAD PASSWORD: it is based on a dictionary word

BAD PASSWORD: is too simple

Retype new password:

passwd: all authentication tokens updated successfully.

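Replication between the master and slave sites requires a connection between them: node1 logs in to node5 with a node5 account and copies the data over to back it up. Typing the node5 account's password at every login is impractical, so key-based authentication is used for automatic login. The master site's public key is copied to the slave site, and the slave site uses it to verify the master's private key, so the two nodes can connect without a password.

[root@node1 ~]# ssh-keygen    # Create the key pair on the master site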

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

3d:af:76:30:7c:bc:5c:c3:36:82:a0:d3:98:ae:15:[email protected]

The key's randomart image is:

+--[ RSA 2048]----+

| |

| |

| E |

| o.. |

| .=Sooo . |

| =.. =o+ * |

| ... =.= o |

| .. ..+ |

| .. ... |

+-----------------+

Copy the master site's public key to the slave site using the geoaccount account

[root@node1 ~]# ssh-copy-id [email protected]

[email protected]'s password:

Now try logging into the machine, with "ssh '[email protected]'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

On node5, add the following to /etc/glusterfs/glusterd.vol

[root@node5 ~]# vim /etc/glusterfs/glusterd.vol

volume management

type mgmt/glusterd

option working-directory /var/lib/glusterd

option transport-type socket,rdma

option mountbroker-root /var/mountbroker-root

option mountbroker-geo-replication.geoaccount slavevol

option geo-replication-log-group geogroup

option rpc-auth-allow-insecure on

option transport.socket.keepalive-time 10

option transport.socket.keepalive-interval 2

option transport.socket.read-fail-log off

option ping-timeout 0

# option base-port 49152

end-volume

Create the matching /var/mountbroker-root directory and set its permissions to 0711

[root@node5 ~]# mkdir /var/mountbroker-root

[root@node5 ~]# chmod 0711 /var/mountbroker-root

Finally, restart the glusterd service on node5

[root@node5 ~]# service glusterd restart

Starting glusterd: [ OK ]

Set up site replication on node1 (the master)

[root@node1 ~]# gluster

gluster> system:: execute gsec_create

Common secret pub file present at /var/lib/glusterd/geo-replication/common_secret.pem.pub

gluster> volume geo-replication mastervol [email protected]::slavevol create push-pem    (create the session that replicates the mastervol volume on the master site to the slavevol volume on the slave site through the geoaccount account)

Creating geo-replication session between mastervol & [email protected]::slavevol has been successful

gluster> volume geo-replication mastervol [email protected]::slavevol start    (start data replication between the master and slave sites)

Starting geo-replication session between mastervol & [email protected]::slavevol has been successful

gluster> volume geo-replication mastervol [email protected]::slavevol status    (show the state of the master/slave session)

MASTER NODE MASTER VOL MASTER BRICK SLAVE STATUS CHECKPOINT STATUS CRAWL STATUS

---------------------------------------------------------------------------------------------------------------------------------------------

node1.example.com mastervol /bricks/brick1/brick [email protected]::slavevol faulty N/A N/A

node2.example.com mastervol /bricks/brick1/brick [email protected]::slavevol faulty N/A N/A
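
With several master bricks, the status table gets long, and the rows that need attention are the ones whose STATUS column is not healthy. The sketch below prints the master nodes reported as faulty. It assumes the column order shown above, with STATUS as the fifth whitespace-separated field. The function name faulty_nodes is hypothetical, and the sample rows use a placeholder slave address (user@slavehost) and an invented second row purely for illustration.

```shell
# Print the master nodes whose STATUS column (5th field) is "faulty".
faulty_nodes() {
  awk '$5 == "faulty" { print $1 }'
}

# Hypothetical sample rows in the same column order as the status table.
faulty_nodes <<'EOF'
node1.example.com mastervol /bricks/brick1/brick user@slavehost::slavevol faulty N/A N/A
node2.example.com mastervol /bricks/brick1/brick user@slavehost::slavevol Active N/A Changelog Crawl
EOF
# prints node1.example.com
```

The header and separator lines of the real table are ignored because their fifth field is never the word faulty.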

Run the following command on node5

[root@node5 ~]# /usr/libexec/glusterfs/set_geo_rep_pem_keys.sh geoaccount mastervol slavevol

Successfully copied file.

Command executed successfully.

Create data on Node1

[root@node1 /]# cd /mnt

[root@node1 mnt]# mkdir file{0..5}

[root@node1 mnt]# ls

file0 file1 file2 file3 file4 file5

The data from Node1 has arrived on Node5

[root@node5 ~]# cd /mnt

[root@node5 mnt]# ls

[root@node5 mnt]# ls

[root@node5 mnt]# ls

[root@node5 mnt]# ls

file0 file1 file2 file3 file4 file5

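Note: replication between the master and slave sites is close to real time. Whenever data on the master site changes, it is copied to the slave site shortly afterwards. The slave site serves only as a backup: data created on the slave site is not synchronized back to the master site's volume.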