TensorRT-5.1.5.0-YOLOv3

Continuing from the previous post:

TensorRT-5.1.5.0-SSD

https://blog.csdn.net/baidu_40840693/article/details/95642055

First:

The upsample layer (extraction code: bwrd):

https://pan.baidu.com/s/13GpoYoqKSCeFX0m0ves_fQ

Then:

https://github.com/lewes6369/TensorRT-Yolov3

https://github.com/lewes6369/tensorRTWrapper/

Models

Download the Caffe model converted from the official model:

- Baidu Cloud here pwd: gbue

- Google Drive here

There are two versions, with 416×416 and 608×608 inputs.

You can also download them here:

https://pan.baidu.com/s/1yiCrnmsOm0hbweJBiiUScQ

Everything about running the Caffe version of YOLOv3 can be found here:

https://github.com/ChenYingpeng/caffe-yolov3

For the Upsample layer in YOLOv3, remember to comment out its parameters:

layer {

bottom: "layer85-conv"

top: "layer86-upsample"

name: "layer86-upsample"

type: "Upsample"

#upsample_param {

# scale: 2

#}

}

The TensorRT Caffe parser cannot check parameters that are not in its default proto file, even though the Upsample layer is added as a plugin.

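In other words, TensorRT's Caffe parser does not recognize this parameter, so it has to be commented out; how the parameter is actually used is handled on the TensorRT side.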
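In this network the Upsample layer is used with `scale: 2`. For reference, Darknet's upsample layer performs nearest-neighbour upsampling: every input value is copied into a scale×scale block. The pure-Python sketch below shows that behaviour on a single channel; `upsample_nearest` is a hypothetical helper for illustration, not the plugin's CUDA kernel in UpsampleLayer.cu.

```python
def upsample_nearest(fmap, scale=2):
    """Nearest-neighbour upsampling of one channel.

    fmap is a list of rows (H x W); the result is (H*scale) x (W*scale),
    with every input value copied into a scale x scale block.
    """
    out = []
    for row in fmap:
        # repeat each value `scale` times along the width
        wide = [v for v in row for _ in range(scale)]
        # repeat the widened row `scale` times along the height
        for _ in range(scale):
            out.append(list(wide))
    return out


if __name__ == "__main__":
    print(upsample_nearest([[1, 2], [3, 4]]))
    # [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
    big = upsample_nearest([[0] * 13 for _ in range(13)])
    print(len(big), len(big[0]))  # 26 26
```

With `scale: 2`, a 13×13 map becomes 26×26, which is what lets it be concatenated with the stride-16 feature map in the following route layer.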

First, let's measure the forward speed of YOLOv3 in Caffe.

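After adding the Upsample layer's .cpp, .cu and .hpp files and editing caffe.proto (the new field goes at the end of message LayerParameter, plus a new message):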

optional UpsampleParameter upsample_param = 149;

}

// added by chen

message UpsampleParameter{

optional int32 scale = 1 [default = 1];

}

Then recompile Caffe and test with the 416×416 prototxt.

Test machine: GTX TITAN X

416×416 resolution:

./build/tools/caffe time -model=/home/boyun/code/caffe-ssd/yolov3/yolov3_416.prototxt --weights=/home/boyun/code/caffe-ssd/yolov3/yolov3_416.caffemodel --iterations=200 -gpu 0

I0714 22:10:42.351696 10668 caffe.cpp:412] Average Forward pass: 50.3751 ms.

I0714 22:10:42.351701 10668 caffe.cpp:414] Average Backward pass: 77.3334 ms.

I0714 22:10:42.351707 10668 caffe.cpp:416] Average Forward-Backward: 127.876 ms.

I0714 22:10:42.351712 10668 caffe.cpp:418] Total Time: 25575.2 ms.

I0714 22:10:42.351716 10668 caffe.cpp:419] *** Benchmark ends ***

For reference, the same 416×416 test on a GTX 1070 Ti:

I0715 10:27:02.526588 20742 caffe.cpp:407] layer106-conv backward: 0.205097 ms.

I0715 10:27:02.526610 20742 caffe.cpp:412] Average Forward pass: 35.5234 ms.

I0715 10:27:02.526621 20742 caffe.cpp:414] Average Backward pass: 43.3386 ms.

I0715 10:27:02.526648 20742 caffe.cpp:416] Average Forward-Backward: 78.9849 ms.

I0715 10:27:02.526661 20742 caffe.cpp:418] Total Time: 15797 ms.

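I0715 10:27:02.526671 20742 caffe.cpp:419] *** Benchmark ends ***

YOLOv3 input sizes are usually multiples of 32, because the coarsest of its multi-scale feature maps is downsampled by a factor of 32 relative to the input: for a 416×416 input, the final feature map is 13×13.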
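The grid sizes follow directly from the strides. A small sketch, assuming the standard YOLOv3 output strides of 32, 16 and 8 (yolov3-tiny uses only 32 and 16); `yolo_grid_sizes` is a hypothetical helper name.

```python
def yolo_grid_sizes(input_size, strides=(32, 16, 8)):
    """Side lengths of the YOLOv3 output grids for a square input.

    YOLOv3 predicts at strides 32, 16 and 8; yolov3-tiny uses only 32 and 16.
    """
    if input_size % 32 != 0:
        raise ValueError("input size should be a multiple of 32, got %d" % input_size)
    return [input_size // s for s in strides]


if __name__ == "__main__":
    for size in (320, 416, 608):
        print(size, yolo_grid_sizes(size))
    # 320 [10, 20, 40]
    # 416 [13, 26, 52]
    # 608 [19, 38, 76]
```

Input sizes that are not multiples of 32 would leave a fractional grid at stride 32, which is why the check raises instead of rounding.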

Next, we change the input to 320×320 and benchmark again:

320×320 resolution:

I0714 22:19:04.315593 10894 caffe.cpp:407] layer106-conv backward: 0.18554 ms.

I0714 22:19:04.315603 10894 caffe.cpp:412] Average Forward pass: 42.3266 ms.

I0714 22:19:04.323930 10894 caffe.cpp:414] Average Backward pass: 62.2112 ms.

I0714 22:19:04.323948 10894 caffe.cpp:416] Average Forward-Backward: 104.755 ms.

I0714 22:19:04.323954 10894 caffe.cpp:418] Total Time: 20951 ms.

I0714 22:19:04.323959 10894 caffe.cpp:419] *** Benchmark ends ***

For reference, the 320×320 test on a GTX 1070 Ti:

I0715 10:23:30.041016 20648 caffe.cpp:407] layer106-conv backward: 0.14551 ms.

I0715 10:23:30.041043 20648 caffe.cpp:412] Average Forward pass: 25.4868 ms.

I0715 10:23:30.041054 20648 caffe.cpp:414] Average Backward pass: 49.6358 ms.

I0715 10:23:30.041083 20648 caffe.cpp:416] Average Forward-Backward: 75.2501 ms.

I0715 10:23:30.041097 20648 caffe.cpp:418] Total Time: 15050 ms.

I0715 10:23:30.041108 20648 caffe.cpp:419] *** Benchmark ends ***

608×608 resolution:

I0714 22:33:18.229004 11462 caffe.cpp:407] layer106-conv backward: 0.293207 ms.

I0714 22:33:18.229012 11462 caffe.cpp:412] Average Forward pass: 75.0531 ms.

I0714 22:33:18.229017 11462 caffe.cpp:414] Average Backward pass: 109.845 ms.

I0714 22:33:18.229023 11462 caffe.cpp:416] Average Forward-Backward: 185.006 ms.

I0714 22:33:18.229028 11462 caffe.cpp:418] Total Time: 37001.2 ms.

I0714 22:33:18.229033 11462 caffe.cpp:419] *** Benchmark ends ***

For reference, the 608×608 test on a GTX 1070 Ti:

I0715 10:20:45.098073 20529 caffe.cpp:407] layer106-conv backward: 0.277381 ms.

I0715 10:20:45.098104 20529 caffe.cpp:412] Average Forward pass: 59.2867 ms.

I0715 10:20:45.098117 20529 caffe.cpp:414] Average Backward pass: 76.9649 ms.

I0715 10:20:45.098142 20529 caffe.cpp:416] Average Forward-Backward: 136.397 ms.

I0715 10:20:45.098160 20529 caffe.cpp:418] Total Time: 27279.3 ms.

I0715 10:20:45.098173 20529 caffe.cpp:419] *** Benchmark ends ***
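`caffe time` reports both forward and backward timings, but only the "Average Forward pass" figure matters for inference. A minimal sketch of a hypothetical helper (`caffe_fps.py`, `forward_ms` and `fps` are illustrative names) that pulls that figure out of a saved log and converts it to frames per second. Caffe logs through glog to stderr, so capture it with something like `2>&1 | tee yolov3_416.log`.

```python
import re
import sys

# matches e.g. "caffe.cpp:412] Average Forward pass: 50.3751 ms."
_FWD = re.compile(r"Average Forward pass:\s*([\d.]+)\s*ms")


def forward_ms(log_text):
    """Return the 'Average Forward pass' time (ms) from a `caffe time` log."""
    m = _FWD.search(log_text)
    if m is None:
        raise ValueError("no 'Average Forward pass' line found")
    return float(m.group(1))


def fps(ms):
    """Frames per second for a given per-forward latency in milliseconds."""
    return 1000.0 / ms


if __name__ == "__main__":
    for path in sys.argv[1:]:
        with open(path) as f:
            ms = forward_ms(f.read())
        print("%s: %.2f ms/forward, about %.1f FPS" % (path, ms, fps(ms)))
```

For example, the 416×416 YOLOv3 run on the TITAN X above (50.3751 ms) corresponds to roughly 19.9 FPS.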

Next, we test yolov3-tiny.

416×416 resolution:

./build/tools/caffe time -model=/home/boyun/code/caffe-ssd/yolov3-tiny/yolov3-tiny.prototxt --weights=/home/boyun/code/caffe-ssd/yolov3-tiny/yolov3-tiny.caffemodel --iterations=200 -gpu 0

I0714 22:27:39.310173 11273 caffe.cpp:407] layer23-conv backward: 0.129828 ms.

I0714 22:27:39.310195 11273 caffe.cpp:412] Average Forward pass: 9.04005 ms.

I0714 22:27:39.310200 11273 caffe.cpp:414] Average Backward pass: 14.5964 ms.

I0714 22:27:39.310243 11273 caffe.cpp:416] Average Forward-Backward: 23.8689 ms.

I0714 22:27:39.310250 11273 caffe.cpp:418] Total Time: 4773.78 ms.

I0714 22:27:39.310253 11273 caffe.cpp:419] *** Benchmark ends ***

For reference, the same 416×416 test on a GTX 1070 Ti:

I0715 10:29:10.839944 20802 caffe.cpp:407] layer23-conv backward: 0.099241 ms.

I0715 10:29:10.839954 20802 caffe.cpp:412] Average Forward pass: 5.15907 ms.

I0715 10:29:10.839959 20802 caffe.cpp:414] Average Backward pass: 8.64283 ms.

I0715 10:29:10.840006 20802 caffe.cpp:416] Average Forward-Backward: 13.842 ms.

I0715 10:29:10.840013 20802 caffe.cpp:418] Total Time: 2768.4 ms.

I0715 10:29:10.840018 20802 caffe.cpp:419] *** Benchmark ends ***

320×320 resolution:

I0714 22:29:15.098641 11341 caffe.cpp:412] Average Forward pass: 8.37247 ms.

I0714 22:29:15.098646 11341 caffe.cpp:414] Average Backward pass: 11.5893 ms.

I0714 22:29:15.098657 11341 caffe.cpp:416] Average Forward-Backward: 20.1775 ms.

I0714 22:29:15.098662 11341 caffe.cpp:418] Total Time: 4035.49 ms.

I0714 22:29:15.098667 11341 caffe.cpp:419] *** Benchmark ends ***

For reference, the 320×320 test on a GTX 1070 Ti:

I0715 10:30:05.019290 20863 caffe.cpp:407] layer23-conv backward: 0.0819661 ms.

I0715 10:30:05.019299 20863 caffe.cpp:412] Average Forward pass: 4.28037 ms.

I0715 10:30:05.019304 20863 caffe.cpp:414] Average Backward pass: 7.55383 ms.

I0715 10:30:05.019309 20863 caffe.cpp:416] Average Forward-Backward: 11.8756 ms.

I0715 10:30:05.019315 20863 caffe.cpp:418] Total Time: 2375.13 ms.

I0715 10:30:05.019322 20863 caffe.cpp:419] *** Benchmark ends ***

608×608 resolution:

I0714 22:30:52.382453 11410 caffe.cpp:407] layer23-conv backward: 0.177674 ms.

I0714 22:30:52.382462 11410 caffe.cpp:412] Average Forward pass: 12.2339 ms.

I0714 22:30:52.382467 11410 caffe.cpp:414] Average Backward pass: 22.8627 ms.

I0714 22:30:52.382506 11410 caffe.cpp:416] Average Forward-Backward: 35.5057 ms.

I0714 22:30:52.382513 11410 caffe.cpp:418] Total Time: 7101.14 ms.

I0714 22:30:52.382515 11410 caffe.cpp:419] *** Benchmark ends ***

For reference, the 608×608 test on a GTX 1070 Ti:

I0715 10:30:49.398526 20891 caffe.cpp:404] layer23-conv forward: 0.0902006 ms.

I0715 10:30:49.398531 20891 caffe.cpp:407] layer23-conv backward: 0.144245 ms.

I0715 10:30:49.398541 20891 caffe.cpp:412] Average Forward pass: 8.15075 ms.

I0715 10:30:49.398546 20891 caffe.cpp:414] Average Backward pass: 15.6652 ms.

I0715 10:30:49.398555 20891 caffe.cpp:416] Average Forward-Backward: 23.8652 ms.

I0715 10:30:49.398561 20891 caffe.cpp:418] Total Time: 4773.03 ms.

I0715 10:30:49.398566 20891 caffe.cpp:419] *** Benchmark ends ***

Next, we test a yolov3-tiny variant with the number of channels in every layer halved:

608×608 resolution:

./build/tools/caffe time -model=/home/boyun/code/caffe-ssd/yolov3-tiny/yolov3-tiny-half.prototxt --weights=/home/boyun/code/caffe-ssd/yolov3-tiny/yolov3-tiny-half.caffemodel --iterations=200 -gpu 0

I0714 23:15:48.347908 14637 caffe.cpp:407] layer23-conv backward: 0.0562594 ms.

I0714 23:15:48.347916 14637 caffe.cpp:412] Average Forward pass: 8.46485 ms.

I0714 23:15:48.347920 14637 caffe.cpp:414] Average Backward pass: 15.7116 ms.

I0714 23:15:48.347964 14637 caffe.cpp:416] Average Forward-Backward: 24.4433 ms.

I0714 23:15:48.347970 14637 caffe.cpp:418] Total Time: 4888.66 ms.

I0714 23:15:48.347978 14637 caffe.cpp:419] *** Benchmark ends ***

For reference, the 608×608 test on a GTX 1070 Ti:

I0715 10:32:16.474354 20914 caffe.cpp:404] layer23-conv forward: 0.0535805 ms.

I0715 10:32:16.474359 20914 caffe.cpp:407] layer23-conv backward: 0.0452762 ms.

I0715 10:32:16.474370 20914 caffe.cpp:412] Average Forward pass: 4.50442 ms.

I0715 10:32:16.474375 20914 caffe.cpp:414] Average Backward pass: 11.1228 ms.

I0715 10:32:16.474383 20914 caffe.cpp:416] Average Forward-Backward: 15.6752 ms.

I0715 10:32:16.474391 20914 caffe.cpp:418] Total Time: 3135.05 ms.

I0715 10:32:16.474402 20914 caffe.cpp:419] *** Benchmark ends ***
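Halving the channels of both the input and output of a convolution cuts its multiply-accumulate count by about 4x, yet the measured forward time only drops from about 12.2 ms to 8.5 ms on the TITAN X and from about 8.2 ms to 4.5 ms on the 1070 Ti, which suggests that at this size the network is not limited by arithmetic alone. A back-of-the-envelope sketch; `conv_macs` is a hypothetical helper and the layer shapes are read from the original and halved prototxts at a 608×608 input.

```python
def conv_macs(h_out, w_out, c_in, c_out, k):
    """Multiply-accumulates of a dense k x k convolution (bias ignored)."""
    return h_out * w_out * c_in * c_out * k * k


if __name__ == "__main__":
    # layer13-conv at 608x608 input: 19x19 output, 3x3 kernel
    full = conv_macs(19, 19, 512, 1024, 3)  # original yolov3-tiny
    half = conv_macs(19, 19, 256, 512, 3)   # channel-halved variant
    print(full / half)  # 4.0
    # layer1-conv: the RGB input keeps 3 channels, so only c_out halves
    print(conv_macs(608, 608, 3, 16, 3) / conv_macs(608, 608, 3, 8, 3))  # 2.0
```

The first layer only saves 2x because its input is the 3-channel image, so the overall reduction is slightly below 4x even in theory.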

With this halved network, I also measured the forward time of the backbone alone, i.e. conv1 through conv16:

I0714 23:24:19.573200 14933 caffe.cpp:407] layer16-conv backward: 0.054884 ms.

I0714 23:24:19.573209 14933 caffe.cpp:412] Average Forward pass: 6.96861 ms.

I0714 23:24:19.573212 14933 caffe.cpp:414] Average Backward pass: 14.954 ms.

I0714 23:24:19.573729 14933 caffe.cpp:416] Average Forward-Backward: 22.1895 ms.

I0714 23:24:19.573735 14933 caffe.cpp:418] Total Time: 4437.9 ms.

I0714 23:24:19.573740 14933 caffe.cpp:419] *** Benchmark ends ***

For comparison, with Darknet a single forward pass of the network takes 5-7 ms.

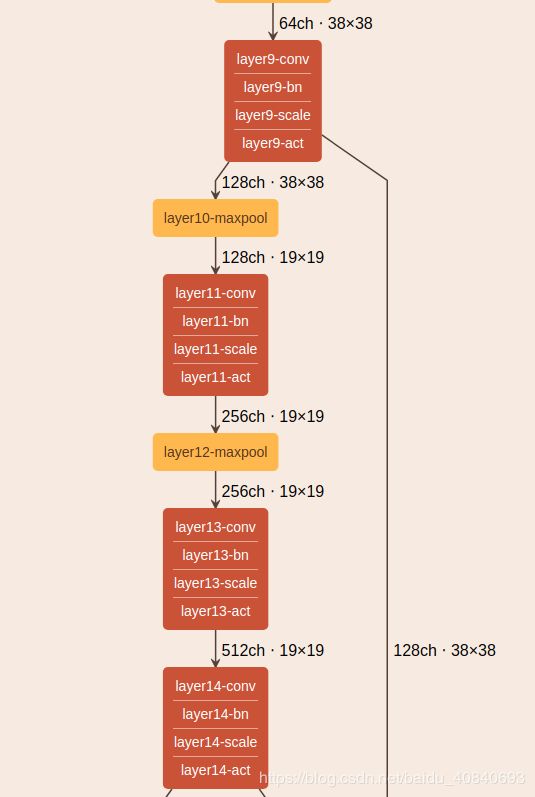

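Because Caffe and Darknet compute the output feature-map size after convolution and pooling slightly differently, some modifications were made. The prototxt of the channel-halved yolov3-tiny at 608×608 follows.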
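One concrete place where the two size rules disagree: Darknet's yolov3-tiny.cfg has a size-2, stride-1 maxpool after the sixth convolution, while in the prototxt here the corresponding layer12-maxpool uses kernel 3, stride 1, pad 1. A sketch of both output-size formulas, written from Caffe's PoolingLayer and from the pjreddie/darknet maxpool layer; the Darknet default padding of kernel - 1 is an assumption if you use a different fork, and the function names are hypothetical.

```python
import math


def caffe_pool_out(size, kernel, stride, pad=0):
    """Output size of Caffe's Pooling layer (rounds up, like PoolingLayer::Reshape)."""
    out = int(math.ceil((size + 2 * pad - kernel) / float(stride))) + 1
    # with padding, Caffe drops a last window that would start in the right-hand padding
    if pad > 0 and (out - 1) * stride >= size + pad:
        out -= 1
    return out


def darknet_maxpool_out(size, kernel, stride, padding=None):
    """Output size of a Darknet maxpool layer (padding defaults to kernel - 1)."""
    if padding is None:
        padding = kernel - 1
    return (size + padding - kernel) // stride + 1


if __name__ == "__main__":
    # stride-2 pools agree: 608 -> 304 in both frameworks
    print(caffe_pool_out(608, 2, 2), darknet_maxpool_out(608, 2, 2))  # 304 304
    # Darknet's size-2 / stride-1 maxpool keeps 19x19, Caffe's shrinks it
    print(darknet_maxpool_out(19, 2, 1), caffe_pool_out(19, 2, 1))    # 19 18
    # kernel 3 / stride 1 / pad 1 in Caffe keeps 19x19
    print(caffe_pool_out(19, 3, 1, pad=1))                            # 19
```

A 19×19 map that silently became 18×18 would no longer line up with the anchors and grid the YOLO layer expects, so a size-preserving pooling is the safer translation.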

name: "Darkent2Caffe"

input: "data"

input_dim: 1

input_dim: 3

input_dim: 608

input_dim: 608

layer {

bottom: "data"

top: "layer1-conv"

name: "layer1-conv"

type: "Convolution"

convolution_param {

num_output: 8

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "layer1-conv"

top: "layer1-conv"

name: "layer1-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer1-conv"

top: "layer1-conv"

name: "layer1-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer1-conv"

top: "layer1-conv"

name: "layer1-act"

type: "ReLU"

}

layer {

bottom: "layer1-conv"

top: "layer2-maxpool"

name: "layer2-maxpool"

type: "Pooling"

pooling_param {

kernel_size: 2

stride: 2

pool: MAX

}

}

layer {

bottom: "layer2-maxpool"

top: "layer3-conv"

name: "layer3-conv"

type: "Convolution"

convolution_param {

num_output: 16

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "layer3-conv"

top: "layer3-conv"

name: "layer3-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer3-conv"

top: "layer3-conv"

name: "layer3-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer3-conv"

top: "layer3-conv"

name: "layer3-act"

type: "ReLU"

}

layer {

bottom: "layer3-conv"

top: "layer4-maxpool"

name: "layer4-maxpool"

type: "Pooling"

pooling_param {

kernel_size: 2

stride: 2

pool: MAX

}

}

layer {

bottom: "layer4-maxpool"

top: "layer5-conv"

name: "layer5-conv"

type: "Convolution"

convolution_param {

num_output: 32

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "layer5-conv"

top: "layer5-conv"

name: "layer5-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer5-conv"

top: "layer5-conv"

name: "layer5-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer5-conv"

top: "layer5-conv"

name: "layer5-act"

type: "ReLU"

}

layer {

bottom: "layer5-conv"

top: "layer6-maxpool"

name: "layer6-maxpool"

type: "Pooling"

pooling_param {

kernel_size: 2

stride: 2

pool: MAX

}

}

layer {

bottom: "layer6-maxpool"

top: "layer7-conv"

name: "layer7-conv"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "layer7-conv"

top: "layer7-conv"

name: "layer7-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer7-conv"

top: "layer7-conv"

name: "layer7-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer7-conv"

top: "layer7-conv"

name: "layer7-act"

type: "ReLU"

}

layer {

bottom: "layer7-conv"

top: "layer8-maxpool"

name: "layer8-maxpool"

type: "Pooling"

pooling_param {

kernel_size: 2

stride: 2

pool: MAX

}

}

layer {

bottom: "layer8-maxpool"

top: "layer9-conv"

name: "layer9-conv"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "layer9-conv"

top: "layer9-conv"

name: "layer9-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer9-conv"

top: "layer9-conv"

name: "layer9-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer9-conv"

top: "layer9-conv"

name: "layer9-act"

type: "ReLU"

}

layer {

bottom: "layer9-conv"

top: "layer10-maxpool"

name: "layer10-maxpool"

type: "Pooling"

pooling_param {

kernel_size: 2

stride: 2

pool: MAX

}

}

layer {

bottom: "layer10-maxpool"

top: "layer11-conv"

name: "layer11-conv"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "layer11-conv"

top: "layer11-conv"

name: "layer11-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer11-conv"

top: "layer11-conv"

name: "layer11-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer11-conv"

top: "layer11-conv"

name: "layer11-act"

type: "ReLU"

}

layer {

bottom: "layer11-conv"

top: "layer12-maxpool"

name: "layer12-maxpool"

type: "Pooling"

pooling_param {

kernel_size: 3

stride: 1

pad: 1

pool: MAX

}

}

layer {

bottom: "layer12-maxpool"

top: "layer13-conv"

name: "layer13-conv"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "layer13-conv"

top: "layer13-conv"

name: "layer13-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer13-conv"

top: "layer13-conv"

name: "layer13-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer13-conv"

top: "layer13-conv"

name: "layer13-act"

type: "ReLU"

}

layer {

bottom: "layer13-conv"

top: "layer14-conv"

name: "layer14-conv"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 1

pad: 0

stride: 1

bias_term: false

}

}

layer {

bottom: "layer14-conv"

top: "layer14-conv"

name: "layer14-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer14-conv"

top: "layer14-conv"

name: "layer14-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer14-conv"

top: "layer14-conv"

name: "layer14-act"

type: "ReLU"

}

layer {

bottom: "layer14-conv"

top: "layer15-conv"

name: "layer15-conv"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "layer15-conv"

top: "layer15-conv"

name: "layer15-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer15-conv"

top: "layer15-conv"

name: "layer15-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer15-conv"

top: "layer15-conv"

name: "layer15-act"

type: "ReLU"

}

layer {

bottom: "layer15-conv"

top: "layer16-conv"

name: "layer16-conv"

type: "Convolution"

convolution_param {

num_output: 72

kernel_size: 1

pad: 0

stride: 1

bias_term: true

}

}

layer {

bottom: "layer14-conv"

top: "layer18-route"

name: "layer18-route"

type: "Concat"

}

layer {

bottom: "layer18-route"

top: "layer19-conv"

name: "layer19-conv"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 1

pad: 0

stride: 1

bias_term: false

}

}

layer {

bottom: "layer19-conv"

top: "layer19-conv"

name: "layer19-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer19-conv"

top: "layer19-conv"

name: "layer19-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer19-conv"

top: "layer19-conv"

name: "layer19-act"

type: "ReLU"

}

layer {

bottom: "layer19-conv"

top: "layer20-upsample"

name: "layer20-upsample"

type: "Upsample"

upsample_param {

scale: 2

}

}

layer {

bottom: "layer20-upsample"

bottom: "layer9-conv"

top: "layer21-route"

name: "layer21-route"

type: "Concat"

}

layer {

bottom: "layer21-route"

top: "layer22-conv"

name: "layer22-conv"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "layer22-conv"

top: "layer22-conv"

name: "layer22-bn"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "layer22-conv"

top: "layer22-conv"

name: "layer22-scale"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "layer22-conv"

top: "layer22-conv"

name: "layer22-act"

type: "ReLU"

}

layer {

bottom: "layer22-conv"

top: "layer23-conv"

name: "layer23-conv"

type: "Convolution"

convolution_param {

num_output: 48

kernel_size: 1

pad: 0

stride: 1

bias_term: true

}

}

Next, we move on to TensorRT.

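Download the TensorRT-Yolov3 repo mentioned earlier; the files in its tensorRTWrapper subdirectory (the separate tensorRTWrapper repo linked above) must be downloaded as well.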

Then add one line to ./TensorRT-Yolov3/tensorRTWrapper/code/CMakeLists.txt so that it can find your TensorRT installation:

set(TENSORRT_ROOT "/home/boyun/NVIDIA/TensorRT-5.1.5.0")

cmake_minimum_required(VERSION 2.8)

project(trtNet)

set(CMAKE_BUILD_TYPE Release)

#include

include_directories(${CMAKE_CURRENT_SOURCE_DIR}/include)

#src

set(PLUGIN_SOURCES

src/EntroyCalibrator.cpp

src/UpsampleLayer.cpp

src/UpsampleLayer.cu

src/YoloLayer.cu

src/TrtNet.cpp

)

#

# CUDA Configuration

#

find_package(CUDA REQUIRED)

set(CUDA_VERBOSE_BUILD ON)

# Specify the cuda host compiler to use the same compiler as cmake.

set(CUDA_HOST_COMPILER ${CMAKE_CXX_COMPILER})

set(TENSORRT_ROOT "/home/boyun/NVIDIA/TensorRT-5.1.5.0")

Later we need to process the COCO dataset, so install pycocotools:

sudo pip install pycocotools -i https://pypi.tuna.tsinghua.edu.cn/simple

Then build as usual:

mkdir build

cd build && cmake .. && make && make install

cd ..

Then prepare a test image of your own, along with yolov3_608.caffemodel and yolov3_608.prototxt:

#for yolov3-608

./install/runYolov3 --caffemodel=./yolov3_608.caffemodel --prototxt=./yolov3_608.prototxt --input=./test.jpg --W=608 --H=608 --class=80

#for fp16

./install/runYolov3 --caffemodel=./yolov3_608.caffemodel --prototxt=./yolov3_608.prototxt --input=./test.jpg --W=608 --H=608 --class=80 --mode=fp16

#for int8 with calibration datasets

./install/runYolov3 --caffemodel=./yolov3_608.caffemodel --prototxt=./yolov3_608.prototxt --input=./test.jpg --W=608 --H=608 --class=80 --mode=int8 --calib=./calib_sample.txt

#for yolov3-416 (need to modify include/YoloConfigs for YoloKernel)

./install/runYolov3 --caffemodel=./yolov3_416.caffemodel --prototxt=./yolov3_416.prototxt --input=./test.jpg --W=416 --H=416 --class=80

Download the COCO dataset into TensorRT-Yolov3/scripts/; see:

https://blog.csdn.net/weixin_36474809/article/details/90262591

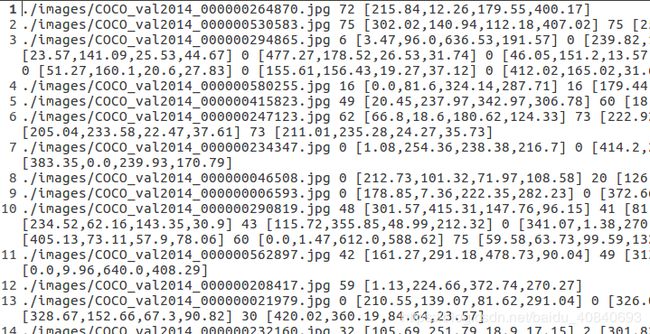

You can also run the evaluation, i.e. compute mAP, at two IoU thresholds: 0.5 and 0.75.
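The two thresholds refer to the IoU (intersection over union) between a detection and a ground-truth box: a detection of the right class only counts as a true positive when its IoU reaches the threshold, so the stricter 0.75 threshold generally gives lower AP. A minimal sketch with corner-format boxes; the (x1, y1, x2, y2) convention is an assumption for the example, not necessarily the box layout used inside runYolov3.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    gt = (0, 0, 10, 10)
    det = (2, 0, 12, 10)      # same box shifted by 2 pixels
    score = iou(gt, det)      # 80 / 120
    print(round(score, 3), score >= 0.5, score >= 0.75)  # 0.667 True False
```

A box that is off by only 20% of its width still passes at 0.5 but fails at 0.75, which is the kind of localization error the second evaluation exposes.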

First, generate labels.txt:

Extract the COCO dataset into scripts; we only use val2014.

scripts/annotations holds the COCO JSON annotation files.

scripts/images holds the COCO val2014 images.

Then create a label directory under scripts and run:

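python ./createCOCOlabels.py ./annotations/instances_val2014.json ./label val2014

When it finishes, .txt files are generated under label, with lines such as:

16 [214.15,41.29,348.26,243.78]

images contains 40504 files,

while label contains 40137 files.

The 367 missing label files are most likely images with no annotated objects in instances_val2014.json, for which no label file is written.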
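To check which images ended up without a label file, a small sketch that compares basenames. It assumes createCOCOlabels.py names each label file after its image (image.jpg → image.txt); that naming is an assumption, so check the generated files first. If it holds, the count printed should be 40504 - 40137 = 367. `images_without_labels` is a hypothetical helper.

```python
import os

IMAGE_EXTS = (".jpg", ".jpeg", ".png")


def images_without_labels(image_dir, label_dir, label_ext=".txt"):
    """List images in image_dir with no label file of the same basename in label_dir."""
    labelled = {
        os.path.splitext(name)[0]
        for name in os.listdir(label_dir)
        if name.endswith(label_ext)
    }
    missing = []
    for name in sorted(os.listdir(image_dir)):
        stem, ext = os.path.splitext(name)
        if ext.lower() in IMAGE_EXTS and stem not in labelled:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # run from TensorRT-Yolov3/scripts, where ./images and ./label live
    if os.path.isdir("./images") and os.path.isdir("./label"):
        missing = images_without_labels("./images", "./label")
        print(len(missing), "images have no label file")
```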

Next, change one line in makelabels.py; otherwise Python 2 raises an error:

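with open('labels.txt', 'w') as f:
    f.write(str)
    # print(str, file=f)  # Python 3 print syntax; a SyntaxError under Python 2

Then run the evaluation from the scripts directory:

cd TensorRT-Yolov3/scripts/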

../install/runYolov3 --caffemodel=../yolov3_608.caffemodel --prototxt=../yolov3_608.prototxt --W=608 --H=608 --class=80 --evallist=./labels.txt

To see why the command is run this way, open TensorRT-Yolov3/scripts/labels.txt.

I used the relative path ./images. If you change it to an absolute path, e.g. /home/boyun/data/coco2014/val2014, you can run it the way the README does.

Time taken for nms is 0.002925 ms.

layer1-conv 0.329ms

layer1-act 0.476ms

layer2-conv 0.539ms

layer2-act 0.239ms

layer3-conv 0.195ms

layer3-act 0.121ms

layer4-conv 0.578ms

layer4-act 0.238ms

layer5-shortcut 0.346ms

layer6-conv 0.506ms

layer6-act 0.121ms

layer7-conv 0.118ms

layer7-act 0.061ms

layer8-conv 0.328ms

layer8-act 0.120ms

layer9-shortcut 0.277ms

layer10-conv 0.134ms

layer10-act 0.061ms

layer11-conv 0.337ms

layer11-act 0.120ms

layer12-shortcut 0.175ms

layer13-conv 0.548ms

layer13-act 0.061ms

layer14-conv 0.094ms

layer14-act 0.031ms

layer15-conv 0.313ms

layer15-act 0.059ms

layer16-shortcut 0.096ms

layer17-conv 0.115ms

layer17-act 0.049ms

layer18-conv 0.365ms

layer18-act 0.059ms

layer19-shortcut 0.092ms

layer20-conv 0.095ms

layer20-act 0.031ms

layer21-conv 0.308ms

layer21-act 0.059ms

layer22-shortcut 0.092ms

layer23-conv 0.092ms

layer23-act 0.031ms

layer24-conv 0.307ms

layer24-act 0.058ms

layer25-shortcut 0.092ms

layer26-conv 0.093ms

layer26-act 0.031ms

layer27-conv 0.311ms

layer27-act 0.081ms

layer28-shortcut 0.145ms

layer29-conv 0.102ms

layer29-act 0.033ms

layer30-conv 0.312ms

layer30-act 0.058ms

layer31-shortcut 0.092ms

layer32-conv 0.091ms

layer32-act 0.030ms

layer33-conv 0.307ms

layer33-act 0.059ms

layer34-shortcut 0.092ms

layer35-conv 0.091ms

layer35-act 0.030ms

layer36-conv 0.308ms

layer36-act 0.059ms

layer37-shortcut 0.093ms

layer38-conv 0.716ms

layer38-act 0.032ms

layer39-conv 0.081ms

layer39-act 0.015ms

layer40-conv 0.387ms

layer40-act 0.028ms

layer41-shortcut 0.053ms

layer42-conv 0.086ms

layer42-act 0.015ms

layer43-conv 0.389ms

layer43-act 0.028ms

layer44-shortcut 0.053ms

layer45-conv 0.081ms

layer45-act 0.015ms

layer46-conv 0.384ms

layer46-act 0.028ms

layer47-shortcut 0.053ms

layer48-conv 0.086ms

layer48-act 0.016ms

layer49-conv 0.491ms

layer49-act 0.028ms

layer50-shortcut 0.053ms

layer51-conv 0.079ms

layer51-act 0.015ms

layer52-conv 0.382ms

layer52-act 0.028ms

layer53-shortcut 0.053ms

layer54-conv 0.079ms

layer54-act 0.015ms

layer55-conv 0.385ms

layer55-act 0.028ms

layer56-shortcut 0.053ms

layer57-conv 0.079ms

layer57-act 0.015ms

layer58-conv 0.384ms

layer58-act 0.030ms

layer59-shortcut 0.058ms

layer60-conv 0.103ms

layer60-act 0.018ms

layer61-conv 0.469ms

layer61-act 0.028ms

layer62-shortcut 0.053ms

layer63-conv 0.619ms

layer63-act 0.014ms

layer64-conv 0.110ms

layer64-act 0.005ms

layer65-conv 0.612ms

layer65-act 0.014ms

layer66-shortcut 0.026ms

layer67-conv 0.110ms

layer67-act 0.007ms

layer68-conv 0.760ms

layer68-act 0.014ms

layer69-shortcut 0.025ms

layer70-conv 0.107ms

layer70-act 0.005ms

layer71-conv 0.615ms

layer71-act 0.015ms

layer72-shortcut 0.025ms

layer73-conv 0.107ms

layer73-act 0.005ms

layer74-conv 0.620ms

layer74-act 0.015ms

layer75-shortcut 0.029ms

layer76-conv 0.121ms

layer76-act 0.005ms

layer77-conv 0.827ms

layer77-act 0.014ms

layer78-conv 0.107ms

layer78-act 0.005ms

layer79-conv 0.619ms

layer79-act 0.014ms

layer80-conv 0.105ms

layer80-act 0.005ms

layer81-conv 0.630ms

layer81-act 0.017ms

layer82-conv 0.089ms

layer80-conv copy 0.008ms

layer85-conv 0.057ms

layer85-act 0.004ms

layer86-upsample 0.021ms

layer86-upsample copy 0.013ms

layer88-conv 0.112ms

layer88-act 0.015ms

layer89-conv 0.385ms

layer89-act 0.028ms

layer90-conv 0.077ms

layer90-act 0.015ms

layer91-conv 0.383ms

layer91-act 0.028ms

layer92-conv 0.077ms

layer92-act 0.015ms

layer93-conv 0.381ms

layer93-act 0.028ms

layer94-conv 0.077ms

layer92-conv copy 0.019ms

layer97-conv 0.031ms

layer97-act 0.005ms

layer98-upsample 0.037ms

layer98-upsample copy 0.035ms

layer100-conv 0.123ms

layer100-act 0.032ms

layer101-conv 0.330ms

layer101-act 0.071ms

layer102-conv 0.086ms

layer102-act 0.031ms

layer103-conv 0.306ms

layer103-act 0.059ms

layer104-conv 0.085ms

layer104-act 0.031ms

layer105-conv 0.305ms

layer105-act 0.059ms

layer106-conv 0.153ms

yolo-det 0.184ms

Time over all layers: 26.481
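The per-layer lines above follow a simple "name time ms" format, so they are easy to post-process, for example to find the most expensive layers. A hypothetical helper, not part of TensorRT-Yolov3; the total it computes is presumably what the "Time over all layers" line reports, but that is an assumption about how the tool aggregates.

```python
import re

# "layer1-conv 0.329ms", "layer80-conv copy 0.008ms", ...
LAYER_RE = re.compile(r"^(.*\S)\s+([\d.]+)ms$")


def parse_layer_times(text):
    """Return (layer_name, ms) pairs from the per-layer profile printout."""
    rows = []
    for line in text.splitlines():
        m = LAYER_RE.match(line.strip())
        if m:
            rows.append((m.group(1), float(m.group(2))))
    return rows


def summarize(rows, top=5):
    """Total time and the `top` slowest layers."""
    total = sum(ms for _, ms in rows)
    slowest = sorted(rows, key=lambda r: r[1], reverse=True)[:top]
    return total, slowest


if __name__ == "__main__":
    sample = """layer1-conv 0.329ms
layer1-act 0.476ms
layer80-conv copy 0.008ms
Time over all layers: 26.481"""
    total, slowest = summarize(parse_layer_times(sample), top=2)
    print(round(total, 3), slowest)
    # 0.813 [('layer1-act', 0.476), ('layer1-conv', 0.329)]
```

Lines without a trailing "ms" (such as the summary line) are skipped by the regular expression, so the whole printout can be fed in as-is.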

evalMAPResult:

class: 0 iou thresh-0.5 AP: 0.7614 recall: 0.7236 precision: 0.8038

class: 1 iou thresh-0.5 AP: 0.75 recall: 0.25 precision: 0.75

class: 2 iou thresh-0.5 AP: 0.8815 recall: 0.629 precision: 0.8966

class: 3 iou thresh-0.5 AP: 0.8598 recall: 0.7407 precision: 0.8696

class: 4 iou thresh-0.5 AP: 1 recall: 0.7692 precision: 1

class: 5 iou thresh-0.5 AP: 0.75 recall: 0.8571 precision: 0.75

class: 6 iou thresh-0.5 AP: 1 recall: 0.8667 precision: 1

class: 7 iou thresh-0.5 AP: 0.905 recall: 0.6 precision: 0.913

class: 8 iou thresh-0.5 AP: 0.5815 recall: 0.36 precision: 0.6923

class: 9 iou thresh-0.5 AP: 0.8105 recall: 0.641 precision: 0.8333

class: 10 iou thresh-0.5 AP: 1 recall: 0.8 precision: 1

class: 11 iou thresh-0.5 AP: 0.8207 recall: 0.7778 precision: 0.875

class: 12 iou thresh-0.5 AP: 1 recall: 0.2857 precision: 1

class: 13 iou thresh-0.5 AP: 0.7858 recall: 0.2564 precision: 0.8333

class: 14 iou thresh-0.5 AP: 0.8182 recall: 0.3 precision: 0.8182

class: 15 iou thresh-0.5 AP: 0.9 recall: 0.8182 precision: 0.9

class: 16 iou thresh-0.5 AP: 0.7692 recall: 0.7692 precision: 0.7692

class: 17 iou thresh-0.5 AP: 1 recall: 0.9474 precision: 1

class: 18 iou thresh-0.5 AP: 0.8074 recall: 0.6557 precision: 0.8511

class: 19 iou thresh-0.5 AP: 0.9068 recall: 0.6364 precision: 0.913

class: 20 iou thresh-0.5 AP: 0.96 recall: 0.5854 precision: 0.96

class: 21 iou thresh-0.5 AP: 0.6667 recall: 1 precision: 0.6667

class: 22 iou thresh-0.5 AP: 1 recall: 0.871 precision: 1

class: 23 iou thresh-0.5 AP: 1 recall: 0.85 precision: 1

class: 24 iou thresh-0.5 AP: 0.5283 recall: 0.2143 precision: 0.6

class: 25 iou thresh-0.5 AP: 0.88 recall: 0.6286 precision: 0.88

class: 26 iou thresh-0.5 AP: 0.7957 recall: 0.1556 precision: 0.875

class: 27 iou thresh-0.5 AP: 0.8276 recall: 0.5926 precision: 0.8421

class: 28 iou thresh-0.5 AP: 0.839 recall: 0.6071 precision: 0.8947

class: 29 iou thresh-0.5 AP: 0.8571 recall: 0.75 precision: 0.8571

class: 30 iou thresh-0.5 AP: 0.7783 recall: 0.4 precision: 0.8889

class: 31 iou thresh-0.5 AP: 1 recall: 0.6667 precision: 1

class: 32 iou thresh-0.5 AP: 0.7597 recall: 0.75 precision: 0.8

class: 33 iou thresh-0.5 AP: 1 recall: 0.75 precision: 1

class: 34 iou thresh-0.5 AP: 1 recall: 0.75 precision: 1

class: 35 iou thresh-0.5 AP: 0.6667 recall: 0.4 precision: 0.6667

class: 36 iou thresh-0.5 AP: 0.9294 recall: 0.6522 precision: 0.9375

class: 37 iou thresh-0.5 AP: 0.8815 recall: 0.5909 precision: 0.9286

class: 38 iou thresh-0.5 AP: 0.9107 recall: 0.6667 precision: 0.9231

class: 39 iou thresh-0.5 AP: 0.764 recall: 0.451 precision: 0.807

class: 40 iou thresh-0.5 AP: 0.8311 recall: 0.5152 precision: 0.85

class: 41 iou thresh-0.5 AP: 0.7388 recall: 0.5244 precision: 0.7544

class: 42 iou thresh-0.5 AP: 0.6759 recall: 0.32 precision: 0.7273

class: 43 iou thresh-0.5 AP: 0.7675 recall: 0.2353 precision: 0.8

class: 44 iou thresh-0.5 AP: 0.7292 recall: 0.3214 precision: 0.75

class: 45 iou thresh-0.5 AP: 0.6084 recall: 0.3864 precision: 0.6538

class: 46 iou thresh-0.5 AP: 1 recall: 0.1818 precision: 1

class: 47 iou thresh-0.5 AP: 0.9231 recall: 0.6667 precision: 0.9231

class: 48 iou thresh-0.5 AP: 0.8664 recall: 0.5625 precision: 0.9

class: 49 iou thresh-0.5 AP: 0.8333 recall: 0.5 precision: 0.8333

class: 50 iou thresh-0.5 AP: 1 recall: 0.3333 precision: 1

class: 51 iou thresh-0.5 AP: 0.8182 recall: 0.3214 precision: 0.8182

class: 52 iou thresh-0.5 AP: 0.6006 recall: 0.5714 precision: 0.75

class: 53 iou thresh-0.5 AP: 0.7398 recall: 0.5417 precision: 0.7647

class: 54 iou thresh-0.5 AP: 1 recall: 0.8182 precision: 1

class: 55 iou thresh-0.5 AP: 0.6 recall: 0.375 precision: 0.6

class: 56 iou thresh-0.5 AP: 0.7776 recall: 0.4729 precision: 0.8133

class: 57 iou thresh-0.5 AP: 0.8447 recall: 0.5833 precision: 0.875

class: 58 iou thresh-0.5 AP: 0.8789 recall: 0.2647 precision: 0.9

class: 59 iou thresh-0.5 AP: 0.9091 recall: 0.4348 precision: 0.9091

class: 60 iou thresh-0.5 AP: 0.738 recall: 0.4219 precision: 0.7941

class: 61 iou thresh-0.5 AP: 1 recall: 0.8696 precision: 1

class: 62 iou thresh-0.5 AP: 1 recall: 0.9333 precision: 1

class: 63 iou thresh-0.5 AP: 1 recall: 0.9 precision: 1

class: 64 iou thresh-0.5 AP: 1 recall: 0.625 precision: 1

class: 65 iou thresh-0.5 AP: 0.7904 recall: 0.5294 precision: 0.9

class: 66 iou thresh-0.5 AP: 0.875 recall: 0.7778 precision: 0.875

class: 67 iou thresh-0.5 AP: 1 recall: 0.4242 precision: 1

class: 68 iou thresh-0.5 AP: 1 recall: 1 precision: 1

class: 69 iou thresh-0.5 AP: 0.8571 recall: 0.4286 precision: 0.8571

class: 71 iou thresh-0.5 AP: 0.7921 recall: 0.5 precision: 0.8421

class: 72 iou thresh-0.5 AP: 0.8889 recall: 0.7273 precision: 0.8889

class: 73 iou thresh-0.5 AP: 0.5855 recall: 0.2436 precision: 0.7037

class: 74 iou thresh-0.5 AP: 1 recall: 0.5172 precision: 1

class: 75 iou thresh-0.5 AP: 0.6417 recall: 0.3333 precision: 0.75

class: 76 iou thresh-0.5 AP: 0.7692 recall: 0.6667 precision: 0.7692

class: 77 iou thresh-0.5 AP: 1 recall: 0.6154 precision: 1

class: 79 iou thresh-0.5 AP: 0.25 recall: 0.2 precision: 0.5

MAP:0.831
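To cross-check the MAP line, one can average the per-class AP values. Classes 70 and 78 do not appear in the output, presumably because the evaluated images contain no ground truth for them; the sketch simply averages the classes that are printed, which is an assumption about how runYolov3 computes MAP. `mean_ap` is a hypothetical helper; pass it the lines of a single evalMAPResult block.

```python
import re

AP_RE = re.compile(r"class:\s*(\d+)\s+iou thresh-([\d.]+)\s+AP:\s*([\d.]+)")


def mean_ap(text):
    """Average the per-class AP values printed under one evalMAPResult block."""
    aps = {}
    for m in AP_RE.finditer(text):
        aps[int(m.group(1))] = float(m.group(3))
    if not aps:
        raise ValueError("no per-class AP lines found")
    return sum(aps.values()) / len(aps), sorted(aps)


if __name__ == "__main__":
    block = """class: 0 iou thresh-0.5 AP: 0.7614 recall: 0.7236 precision: 0.8038
class: 1 iou thresh-0.5 AP: 0.75 recall: 0.25 precision: 0.75"""
    value, classes = mean_ap(block)
    print(round(value, 4), classes)  # 0.7557 [0, 1]
```

Comparing the result on the full block with the printed MAP value is a quick way to see which classes the tool actually includes.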

evalMAPResult:

class: 0 iou thresh-0.75 AP: 0.5343 recall: 0.5459 precision: 0.6064

class: 1 iou thresh-0.75 AP: 0.5063 recall: 0.2083 precision: 0.625

class: 2 iou thresh-0.75 AP: 0.4526 recall: 0.3871 precision: 0.5517

class: 3 iou thresh-0.75 AP: 0.5801 recall: 0.5185 precision: 0.6087

class: 4 iou thresh-0.75 AP: 1 recall: 0.7692 precision: 1

class: 5 iou thresh-0.75 AP: 0.75 recall: 0.8571 precision: 0.75

class: 6 iou thresh-0.75 AP: 0.8669 recall: 0.8 precision: 0.9231

class: 7 iou thresh-0.75 AP: 0.6159 recall: 0.4571 precision: 0.6957

class: 8 iou thresh-0.75 AP: 0.3966 recall: 0.24 precision: 0.4615

class: 9 iou thresh-0.75 AP: 0.3891 recall: 0.4359 precision: 0.5667

class: 10 iou thresh-0.75 AP: 0.4792 recall: 0.6 precision: 0.75

class: 11 iou thresh-0.75 AP: 0.4375 recall: 0.4444 precision: 0.5

class: 12 iou thresh-0.75 AP: 0.5 recall: 0.1429 precision: 0.5

class: 13 iou thresh-0.75 AP: 0.4232 recall: 0.1795 precision: 0.5833

class: 14 iou thresh-0.75 AP: 0.1515 recall: 0.1 precision: 0.2727

class: 15 iou thresh-0.75 AP: 0.8 recall: 0.7273 precision: 0.8

class: 16 iou thresh-0.75 AP: 0.6923 recall: 0.6923 precision: 0.6923

class: 17 iou thresh-0.75 AP: 0.7934 recall: 0.7895 precision: 0.8333

class: 18 iou thresh-0.75 AP: 0.3753 recall: 0.3934 precision: 0.5106

class: 19 iou thresh-0.75 AP: 0.4418 recall: 0.4242 precision: 0.6087

class: 20 iou thresh-0.75 AP: 0.8643 recall: 0.5366 precision: 0.88

class: 21 iou thresh-0.75 AP: 0.6667 recall: 1 precision: 0.6667

class: 22 iou thresh-0.75 AP: 0.9199 recall: 0.8065 precision: 0.9259

class: 23 iou thresh-0.75 AP: 0.9377 recall: 0.8 precision: 0.9412

class: 24 iou thresh-0.75 AP: 0.253 recall: 0.1429 precision: 0.4

class: 25 iou thresh-0.75 AP: 0.4121 recall: 0.3714 precision: 0.52

class: 26 iou thresh-0.75 AP: 0.4583 recall:0.08889 precision: 0.5

class: 27 iou thresh-0.75 AP: 0.5228 recall: 0.4074 precision: 0.5789

class: 28 iou thresh-0.75 AP: 0.6633 recall: 0.5 precision: 0.7368

class: 29 iou thresh-0.75 AP: 0.3714 recall: 0.375 precision: 0.4286

class: 30 iou thresh-0.75 AP: 0 recall: 0 precision: 0

class: 31 iou thresh-0.75 AP: 0.5 recall: 0.3333 precision: 0.5

class: 32 iou thresh-0.75 AP: 0.5012 recall: 0.5625 precision: 0.6

class: 33 iou thresh-0.75 AP: 1 recall: 0.75 precision: 1

class: 34 iou thresh-0.75 AP: 0.5608 recall: 0.5 precision: 0.6667

class: 35 iou thresh-0.75 AP: 0.4583 recall: 0.3 precision: 0.5

class: 36 iou thresh-0.75 AP: 0.5326 recall: 0.4783 precision: 0.6875

class: 37 iou thresh-0.75 AP: 0.3845 recall: 0.3182 precision: 0.5

class: 38 iou thresh-0.75 AP: 0.4077 recall: 0.3333 precision: 0.4615

class: 39 iou thresh-0.75 AP: 0.3815 recall: 0.2843 precision: 0.5088

class: 40 iou thresh-0.75 AP: 0.6853 recall: 0.4242 precision: 0.7

class: 41 iou thresh-0.75 AP: 0.4974 recall: 0.3902 precision: 0.5614

class: 42 iou thresh-0.75 AP: 0.471 recall: 0.24 precision: 0.5455

class: 43 iou thresh-0.75 AP: 0.5225 recall: 0.1765 precision: 0.6

class: 44 iou thresh-0.75 AP: 0.3673 recall: 0.2143 precision: 0.5

class: 45 iou thresh-0.75 AP: 0.2551 recall: 0.25 precision: 0.4231

class: 46 iou thresh-0.75 AP: 0.5 recall:0.09091 precision: 0.5

class: 47 iou thresh-0.75 AP: 0.422 recall: 0.4444 precision: 0.6154

class: 48 iou thresh-0.75 AP: 0.6711 recall: 0.5 precision: 0.8

class: 49 iou thresh-0.75 AP: 0.6333 recall: 0.4 precision: 0.6667

class: 50 iou thresh-0.75 AP: 0.71 recall: 0.2667 precision: 0.8

class: 51 iou thresh-0.75 AP: 0.3838 recall: 0.1786 precision: 0.4545

class: 52 iou thresh-0.75 AP: 0.1869 recall: 0.3333 precision: 0.4375

class: 53 iou thresh-0.75 AP: 0.3933 recall: 0.3333 precision: 0.4706

class: 54 iou thresh-0.75 AP: 0.8627 recall: 0.7273 precision: 0.8889

class: 55 iou thresh-0.75 AP: 0.6 recall: 0.375 precision: 0.6

class: 56 iou thresh-0.75 AP: 0.3677 recall: 0.3256 precision: 0.56

class: 57 iou thresh-0.75 AP: 0.3568 recall: 0.375 precision: 0.5625

class: 58 iou thresh-0.75 AP: 0.3494 recall: 0.1765 precision: 0.6

class: 59 iou thresh-0.75 AP: 0.5091 recall: 0.2609 precision: 0.5455

class: 60 iou thresh-0.75 AP: 0.5487 recall: 0.3281 precision: 0.6176

class: 61 iou thresh-0.75 AP: 0.9211 recall: 0.8261 precision: 0.95

class: 62 iou thresh-0.75 AP: 0.8516 recall: 0.8 precision: 0.8571

class: 63 iou thresh-0.75 AP: 0.8889 recall: 0.8 precision: 0.8889

class: 64 iou thresh-0.75 AP: 0.6 recall: 0.375 precision: 0.6

class: 65 iou thresh-0.75 AP: 0.3296 recall: 0.2941 precision: 0.5

class: 66 iou thresh-0.75 AP: 0.2923 recall: 0.4444 precision: 0.5

class: 67 iou thresh-0.75 AP: 0.7691 recall: 0.3636 precision: 0.8571

class: 68 iou thresh-0.75 AP: 1 recall: 1 precision: 1

class: 69 iou thresh-0.75 AP: 0.7143 recall: 0.3571 precision: 0.7143

class: 71 iou thresh-0.75 AP: 0.342 recall: 0.2812 precision: 0.4737

class: 72 iou thresh-0.75 AP: 0.8889 recall: 0.7273 precision: 0.8889

class: 73 iou thresh-0.75 AP:0.06203 recall:0.05128 precision: 0.1481

class: 74 iou thresh-0.75 AP: 0.484 recall: 0.3103 precision: 0.6

class: 75 iou thresh-0.75 AP: 0.4083 recall: 0.2222 precision: 0.5

class: 76 iou thresh-0.75 AP: 0.2231 recall: 0.2667 precision: 0.3077

class: 77 iou thresh-0.75 AP: 0.6 recall: 0.4615 precision: 0.75

class: 79 iou thresh-0.75 AP: 0.25 recall: 0.2 precision: 0.5

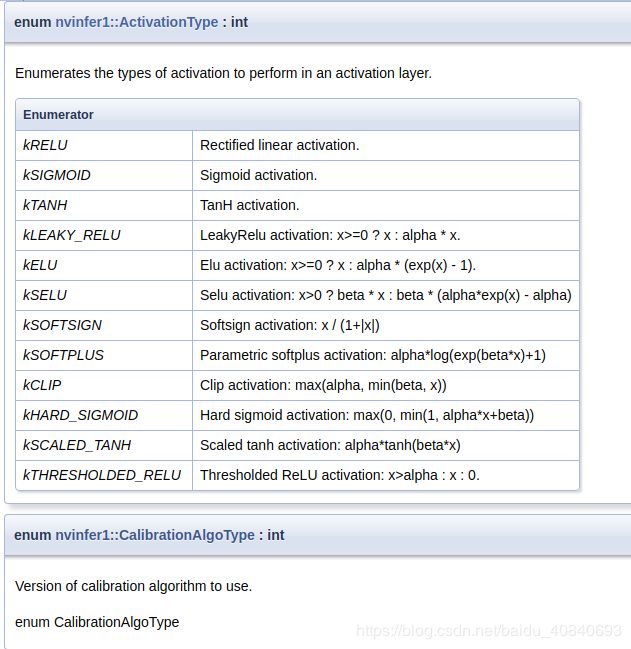

MAP:0.5363

Activation functions already supported by TensorRT: