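Incremental MySQL Data Backup in Practice with Canal + Otter

From our earlier look at Canal, we know that Canal only reads the binlog and captures operation events. Writing the captured incremental changes into a target database with Canal alone would require a lot of follow-up parsing work. Fortunately, Alibaba provides a solution for that as well: Otter. Otter takes the source database's changes, obtained through the binlog, and writes them into the target database.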

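Two questions to settle first

What does Canal do for us?

As covered in the previous articles, Canal poses as a MySQL slave node and reads the source database's binlog. Through the Canal client we can easily obtain the operation events and run useful analysis on them. In other words, Canal gives us a channel for reading the binlog plus an open client on which we can build our own distribution and consumption of business data. In short, Canal lets us see every row-level change the business makes to a table (INSERT, UPDATE, DELETE). SELECT statements are not written to the binlog, so they are not captured; the QUERY event type that Canal reports refers to statement events in the binlog (DDL, for example), not to SELECT.

What will we use Otter for?

Otter is, in practice, a web-based management service whose core is the Manager module. Once a Manager is running, we can open its admin console to configure Channels, Pipelines, data sources, data tables, the zookeeper cluster, and master/standby settings. Otter consumes the binlog that Canal obtains and applies those incremental changes to the target database, which is how the data actually gets moved.

I drew a fairly vivid picture of this, an otter delivering fish:

Notes on the hands-on Otter setup

Machines and environment

There are three machines (one host and two virtual machines):

- Windows host, 127.0.0.1 (localhost): runs Otter Manager, mainly for ease of operation.

- VM 1, Linux (192.168.1.66): runs the zookeeper cluster and an Otter Node (one Node).

- VM 2, Linux (192.168.1.88): runs the source MySQL, the target MySQL, Canal, and an Otter Node (the other Node).

Manager installation and configuration

Downloading Otter Manager and Node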

https://github.com/alibaba/otter/releases

wget https://github.com/alibaba/otter/releases/download/otter-4.2.17/manager.deployer-4.2.17.tar.gz

wget https://github.com/alibaba/otter/releases/download/otter-4.2.17/node.deployer-4.2.17.tar.gz
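The two release URLs above differ only in the artifact name and share one version number, so a small helper can keep them in sync if you later move to another release. This is a minimal sketch: the function name otter_release_url and the OTTER_VERSION variable are made up for illustration, and the URL pattern is simply the one used above.

```shell
#!/bin/sh
# Hypothetical helper: build the GitHub release URL for an Otter artifact.
# $1 = artifact ("manager" or "node"), $2 = version (e.g. 4.2.17)
otter_release_url() {
  printf 'https://github.com/alibaba/otter/releases/download/otter-%s/%s.deployer-%s.tar.gz\n' \
    "$2" "$1" "$2"
}

OTTER_VERSION=4.2.17
for artifact in manager node; do
  # Only print the URL here; pass it to wget when you actually want to download.
  otter_release_url "$artifact" "$OTTER_VERSION"
done
```

To download with it, something like `wget "$(otter_release_url node 4.2.17)"` should be equivalent to the second command above.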

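Downloading Aria2, an Otter dependency

Aria2 is a lightweight, multi-protocol, multi-source, cross-platform download utility that runs on the command line. It supports HTTP/HTTPS, FTP, SFTP, BitTorrent, and Metalink.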

https://github.com/aria2/aria2/releases/tag/release-1.30.0

wget https://github.com/aria2/aria2/releases/download/release-1.30.0/aria2-1.30.0.tar.gz

Manger配置修改

我们的Otter Manger在Windows上操作,其他的环境安装都在Docker环境下。

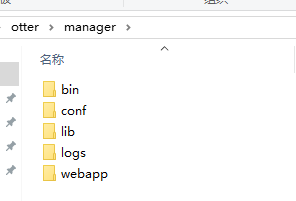

解压下载的manger.deployer-4.2.17.tar.gz,修改相应的目录得到如下目录结构:

修改otter.properties,建议就以本地MySQL作为管理端的Mysql主机服务:

## otter manager domain name

otter.domainName = 127.0.0.1

## otter manager http port

otter.port = 8080

## jetty web config xml

otter.jetty = jetty.xml

## otter manager database config

otter.database.driver.class.name = com.mysql.jdbc.Driver

otter.database.driver.url = jdbc:mysql://127.0.0.1:3306/otter

otter.database.driver.username = root

otter.database.driver.password = root

## otter communication port

otter.communication.manager.port = 1099

## otter communication payload size (default = 8388608)

otter.communication.payload = 8388608

## otter communication pool size

otter.communication.pool.size = 10

## default zookeeper address

otter.zookeeper.cluster.default = 127.0.0.1:2181

## default zookeeper sesstion timeout = 60s

otter.zookeeper.sessionTimeout = 60000

## otter arbitrate connect manager config

otter.manager.address = ${otter.domainName}:${otter.communication.manager.port}

## should run in product mode , true/false

otter.manager.productionMode = true

## self-monitor enable or disable

otter.manager.monitor.self.enable = true

## self-montir interval , default 120s

otter.manager.monitor.self.interval = 120

## auto-recovery paused enable or disable

otter.manager.monitor.recovery.paused = true

# manager email user config

otter.manager.monitor.email.host = smtp.gmail.com

otter.manager.monitor.email.username =

otter.manager.monitor.email.password =

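otter.manager.monitor.email.stmp.port = 465

Note: do not read too much into how this MySQL relates to our master-slave setup for now; it is there purely to get Otter running. The Windows host also needs a zookeeper server node running locally first.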
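After editing otter.properties it is easy to mistype a value, so it can help to read a few keys back before starting the Manager. The sketch below is only an illustration: prop_get is a hypothetical helper, not part of Otter, and the sample file it reads is a throwaway copy of a few lines from the configuration above.

```shell
#!/bin/sh
# Hypothetical helper: print the value of one key from a .properties file.
# $1 = file, $2 = key. Comment lines and lines without "=" are skipped.
prop_get() {
  awk -v key="$2" '
    /^[ \t]*#/ { next }
    index($0, "=") == 0 { next }
    {
      k = substr($0, 1, index($0, "=") - 1)
      v = substr($0, index($0, "=") + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the key
      gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
      if (k == key) { print v; exit }
    }' "$1"
}

# Demo on a temporary file holding lines copied from the config above.
sample=$(mktemp)
cat > "$sample" <<'EOF'
## otter manager http port
otter.port = 8080
otter.database.driver.url = jdbc:mysql://127.0.0.1:3306/otter
otter.zookeeper.cluster.default = 127.0.0.1:2181
EOF

prop_get "$sample" otter.port
prop_get "$sample" otter.zookeeper.cluster.default
rm -f "$sample"
```

On a real installation you would point it at the Manager's conf/otter.properties instead of the sample file.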

Initializing the Otter management database

Create an otter database in the local MySQL to back the Otter service.

The Otter SQL schema script is available here (https://github.com/alibaba/otter/blob/master/manager/deployer/src/main/resources/sql/otter-manager-schema.sql).

CREATE DATABASE /*!32312 IF NOT EXISTS*/ `otter` /*!40100 DEFAULT CHARACTER SET utf8 COLLATE utf8_bin */;

USE `otter`;

CREATE TABLE `ALARM_RULE` (

`ID` bigint(20) unsigned NOT NULL AUTO_INCREMENT,

`MONITOR_NAME` varchar(1024) DEFAULT NULL,

`RECEIVER_KEY` varchar(1024) DEFAULT NULL,

`STATUS` varchar(32) DEFAULT NULL,

`PIPELINE_ID` bigint(20) NOT NULL,

`DESCRIPTION` varchar(256) DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

`MATCH_VALUE` varchar(1024) DEFAULT NULL,

`PARAMETERS` text DEFAULT NULL,

PRIMARY KEY (`ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `AUTOKEEPER_CLUSTER` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`CLUSTER_NAME` varchar(200) NOT NULL,

`SERVER_LIST` varchar(1024) NOT NULL,

`DESCRIPTION` varchar(200) DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `CANAL` (

`ID` bigint(20) unsigned NOT NULL AUTO_INCREMENT,

`NAME` varchar(200) DEFAULT NULL,

`DESCRIPTION` varchar(200) DEFAULT NULL,

`PARAMETERS` text DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

UNIQUE KEY `CANALUNIQUE` (`NAME`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `CHANNEL` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`NAME` varchar(200) NOT NULL,

`DESCRIPTION` varchar(200) DEFAULT NULL,

`PARAMETERS` text DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

UNIQUE KEY `CHANNELUNIQUE` (`NAME`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `COLUMN_PAIR` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`SOURCE_COLUMN` varchar(200) DEFAULT NULL,

`TARGET_COLUMN` varchar(200) DEFAULT NULL,

`DATA_MEDIA_PAIR_ID` bigint(20) NOT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `idx_DATA_MEDIA_PAIR_ID` (`DATA_MEDIA_PAIR_ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `COLUMN_PAIR_GROUP` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`DATA_MEDIA_PAIR_ID` bigint(20) NOT NULL,

`COLUMN_PAIR_CONTENT` text DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `idx_DATA_MEDIA_PAIR_ID` (`DATA_MEDIA_PAIR_ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `DATA_MEDIA` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`NAME` varchar(200) NOT NULL,

`NAMESPACE` varchar(200) NOT NULL,

`PROPERTIES` varchar(1000) NOT NULL,

`DATA_MEDIA_SOURCE_ID` bigint(20) NOT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

UNIQUE KEY `DATAMEDIAUNIQUE` (`NAME`,`NAMESPACE`,`DATA_MEDIA_SOURCE_ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `DATA_MEDIA_PAIR` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`PULLWEIGHT` bigint(20) DEFAULT NULL,

`PUSHWEIGHT` bigint(20) DEFAULT NULL,

`RESOLVER` text DEFAULT NULL,

`FILTER` text DEFAULT NULL,

`SOURCE_DATA_MEDIA_ID` bigint(20) DEFAULT NULL,

`TARGET_DATA_MEDIA_ID` bigint(20) DEFAULT NULL,

`PIPELINE_ID` bigint(20) NOT NULL,

`COLUMN_PAIR_MODE` varchar(20) DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `idx_PipelineID` (`PIPELINE_ID`,`ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `DATA_MEDIA_SOURCE` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`NAME` varchar(200) NOT NULL,

`TYPE` varchar(20) NOT NULL,

`PROPERTIES` varchar(1000) NOT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

UNIQUE KEY `DATAMEDIASOURCEUNIQUE` (`NAME`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `DELAY_STAT` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`DELAY_TIME` bigint(20) NOT NULL,

`DELAY_NUMBER` bigint(20) NOT NULL,

`PIPELINE_ID` bigint(20) NOT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `idx_PipelineID_GmtModified_ID` (`PIPELINE_ID`,`GMT_MODIFIED`,`ID`),

KEY `idx_Pipeline_GmtCreate` (`PIPELINE_ID`,`GMT_CREATE`),

KEY `idx_GmtCreate_id` (`GMT_CREATE`,`ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `LOG_RECORD` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`NID` varchar(200) DEFAULT NULL,

`CHANNEL_ID` varchar(200) NOT NULL,

`PIPELINE_ID` varchar(200) NOT NULL,

`TITLE` varchar(1000) DEFAULT NULL,

`MESSAGE` text DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `logRecord_pipelineId` (`PIPELINE_ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `NODE` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`NAME` varchar(200) NOT NULL,

`IP` varchar(200) NOT NULL,

`PORT` bigint(20) NOT NULL,

`DESCRIPTION` varchar(200) DEFAULT NULL,

`PARAMETERS` text DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

UNIQUE KEY `NODEUNIQUE` (`NAME`,`IP`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `PIPELINE` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`NAME` varchar(200) NOT NULL,

`DESCRIPTION` varchar(200) DEFAULT NULL,

`PARAMETERS` text DEFAULT NULL,

`CHANNEL_ID` bigint(20) NOT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

UNIQUE KEY `PIPELINEUNIQUE` (`NAME`,`CHANNEL_ID`),

KEY `idx_ChannelID` (`CHANNEL_ID`,`ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `PIPELINE_NODE_RELATION` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`NODE_ID` bigint(20) NOT NULL,

`PIPELINE_ID` bigint(20) NOT NULL,

`LOCATION` varchar(20) NOT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `idx_PipelineID` (`PIPELINE_ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `SYSTEM_PARAMETER` (

`ID` bigint(20) unsigned NOT NULL,

`VALUE` text DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `TABLE_HISTORY_STAT` (

`ID` bigint(20) unsigned NOT NULL AUTO_INCREMENT,

`FILE_SIZE` bigint(20) DEFAULT NULL,

`FILE_COUNT` bigint(20) DEFAULT NULL,

`INSERT_COUNT` bigint(20) DEFAULT NULL,

`UPDATE_COUNT` bigint(20) DEFAULT NULL,

`DELETE_COUNT` bigint(20) DEFAULT NULL,

`DATA_MEDIA_PAIR_ID` bigint(20) DEFAULT NULL,

`PIPELINE_ID` bigint(20) DEFAULT NULL,

`START_TIME` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`END_TIME` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `idx_DATA_MEDIA_PAIR_ID_END_TIME` (`DATA_MEDIA_PAIR_ID`,`END_TIME`),

KEY `idx_GmtCreate_id` (`GMT_CREATE`,`ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `TABLE_STAT` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`FILE_SIZE` bigint(20) NOT NULL,

`FILE_COUNT` bigint(20) NOT NULL,

`INSERT_COUNT` bigint(20) NOT NULL,

`UPDATE_COUNT` bigint(20) NOT NULL,

`DELETE_COUNT` bigint(20) NOT NULL,

`DATA_MEDIA_PAIR_ID` bigint(20) NOT NULL,

`PIPELINE_ID` bigint(20) NOT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `idx_PipelineID_DataMediaPairID` (`PIPELINE_ID`,`DATA_MEDIA_PAIR_ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `THROUGHPUT_STAT` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`TYPE` varchar(20) NOT NULL,

`NUMBER` bigint(20) NOT NULL,

`SIZE` bigint(20) NOT NULL,

`PIPELINE_ID` bigint(20) NOT NULL,

`START_TIME` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`END_TIME` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `idx_PipelineID_Type_GmtCreate_ID` (`PIPELINE_ID`,`TYPE`,`GMT_CREATE`,`ID`),

KEY `idx_PipelineID_Type_EndTime_ID` (`PIPELINE_ID`,`TYPE`,`END_TIME`,`ID`),

KEY `idx_GmtCreate_id` (`GMT_CREATE`,`ID`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `USER` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`USERNAME` varchar(20) NOT NULL,

`PASSWORD` varchar(20) NOT NULL,

`AUTHORIZETYPE` varchar(20) NOT NULL,

`DEPARTMENT` varchar(20) NOT NULL,

`REALNAME` varchar(20) NOT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

UNIQUE KEY `USERUNIQUE` (`USERNAME`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE `DATA_MATRIX` (

`ID` bigint(20) NOT NULL AUTO_INCREMENT,

`GROUP_KEY` varchar(200) DEFAULT NULL,

`MASTER` varchar(200) DEFAULT NULL,

`SLAVE` varchar(200) DEFAULT NULL,

`DESCRIPTION` varchar(200) DEFAULT NULL,

`GMT_CREATE` timestamp NOT NULL DEFAULT '0000-00-00 00:00:00',

`GMT_MODIFIED` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`ID`),

KEY `GROUPKEY` (`GROUP_KEY`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE IF NOT EXISTS `meta_history` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key',

`gmt_create` datetime NOT NULL COMMENT 'creation time',

`gmt_modified` datetime NOT NULL COMMENT 'modification time',

`destination` varchar(128) DEFAULT NULL COMMENT 'channel name',

`binlog_file` varchar(64) DEFAULT NULL COMMENT 'binlog file name',

`binlog_offest` bigint(20) DEFAULT NULL COMMENT 'binlog offset',

`binlog_master_id` varchar(64) DEFAULT NULL COMMENT 'binlog node id',

`binlog_timestamp` bigint(20) DEFAULT NULL COMMENT 'timestamp of the applied binlog',

`use_schema` varchar(1024) DEFAULT NULL COMMENT 'schema in use when the sql ran',

`sql_schema` varchar(1024) DEFAULT NULL COMMENT 'corresponding schema',

`sql_table` varchar(1024) DEFAULT NULL COMMENT 'corresponding table',

`sql_text` longtext DEFAULT NULL COMMENT 'executed sql',

`sql_type` varchar(256) DEFAULT NULL COMMENT 'sql type',

`extra` text DEFAULT NULL COMMENT 'additional extension info',

PRIMARY KEY (`id`),

UNIQUE KEY binlog_file_offest(`destination`,`binlog_master_id`,`binlog_file`,`binlog_offest`),

KEY `destination` (`destination`),

KEY `destination_timestamp` (`destination`,`binlog_timestamp`),

KEY `gmt_modified` (`gmt_modified`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COMMENT='table structure change detail table';

CREATE TABLE IF NOT EXISTS `meta_snapshot` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key',

`gmt_create` datetime NOT NULL COMMENT 'creation time',

`gmt_modified` datetime NOT NULL COMMENT 'modification time',

`destination` varchar(128) DEFAULT NULL COMMENT 'channel name',

`binlog_file` varchar(64) DEFAULT NULL COMMENT 'binlog file name',

`binlog_offest` bigint(20) DEFAULT NULL COMMENT 'binlog offset',

`binlog_master_id` varchar(64) DEFAULT NULL COMMENT 'binlog node id',

`binlog_timestamp` bigint(20) DEFAULT NULL COMMENT 'timestamp of the applied binlog',

`data` longtext DEFAULT NULL COMMENT 'table structure data',

`extra` text DEFAULT NULL COMMENT 'additional extension info',

PRIMARY KEY (`id`),

UNIQUE KEY binlog_file_offest(`destination`,`binlog_master_id`,`binlog_file`,`binlog_offest`),

KEY `destination` (`destination`),

KEY `destination_timestamp` (`destination`,`binlog_timestamp`),

KEY `gmt_modified` (`gmt_modified`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COMMENT='table structure snapshot table';

insert into USER(ID,USERNAME,PASSWORD,AUTHORIZETYPE,DEPARTMENT,REALNAME,GMT_CREATE,GMT_MODIFIED) values(null,'admin','801fc357a5a74743894a','ADMIN','admin','admin',now(),now());

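insert into USER(ID,USERNAME,PASSWORD,AUTHORIZETYPE,DEPARTMENT,REALNAME,GMT_CREATE,GMT_MODIFIED) values(null,'guest','471e02a154a2121dc577','OPERATOR','guest','guest',now(),now());

We could start the Otter service now by running startup.bat in the bin directory, but since we would have to come back to install the Nodes anyway, we set those up first.

Node installation and configuration

We do this in the Docker environment on Linux. Upload the downloaded node.deployer-4.2.17.tar.gz to both Linux Node machines and extract it; you can also make all the changes on Windows first and then upload.

In a real deployment the two MySQL instances sit on different machines, and the Nodes are what verify connectivity between those machines, so repeat the following steps on both 192.168.1.66 and 192.168.1.88.

Modifying the Node configuration

You can edit the files on Windows and upload them to the server. The Linux commands to download and extract are: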

cd /usr/local

wget https://github.com/alibaba/otter/releases/download/otter-4.2.17/node.deployer-4.2.17.tar.gz

tar -zxvf node.deployer-4.2.17.tar.gz

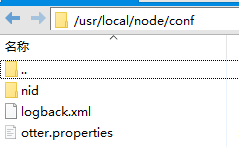

mv node.deployer-4.2.17 node

Edit otter.properties under conf, and create the directories that do not exist yet:

# otter node root dir

otter.nodeHome = ${user.dir}

## otter node dir

otter.htdocs.dir = ${otter.nodeHome}/htdocs

otter.download.dir = ${otter.nodeHome}/download

otter.extend.dir= ${otter.nodeHome}/extend

## default zookeeper sesstion timeout = 60s

otter.zookeeper.sessionTimeout = 60000

## otter communication payload size (default = 8388608)

otter.communication.payload = 8388608

## otter communication pool size

otter.communication.pool.size = 10

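## otter arbitrate & node connect manager config

## note: use an address at which this Node can reach the Manager; 127.0.0.1 only works when both run on the same machine

otter.manager.address = 127.0.0.1:1099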

Create the missing directories on Linux:

mkdir /usr/local/node/htdocs

mkdir /usr/local/node/download

mkdir /usr/local/node/extend

# Node ID: nid is a file holding the ID that Manager assigns to this Node (1 is only an example)

echo 1 > /usr/local/node/conf/nid
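Because the directory and nid steps have to be repeated on each Node machine, they can be collected into one small function. This is a sketch under assumptions: prepare_node_home is a hypothetical name, the Node ID passed in must be the one Manager shows for that machine, and nid is assumed to be a plain one-line file containing that ID, as the Otter Node quick start describes.

```shell
#!/bin/sh
# Hypothetical helper: create the directories a Node expects and record its ID.
# $1 = node home (e.g. /usr/local/node), $2 = numeric Node ID from Manager
prepare_node_home() {
  node_home=$1
  node_id=$2
  case $node_id in
    ''|*[!0-9]*) echo "node id must be a number: '$node_id'" >&2; return 1 ;;
  esac
  # -p makes the call safe to repeat: existing directories are left alone.
  mkdir -p "$node_home/htdocs" "$node_home/download" \
           "$node_home/extend" "$node_home/conf" || return 1
  # nid is written as a one-line file, not created as a directory.
  printf '%s\n' "$node_id" > "$node_home/conf/nid"
}
```

On the first Node this would be called as `prepare_node_home /usr/local/node 1`, with the ID replaced by whatever Manager assigned.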

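The Node also depends on Aria2.

Aria2 configuration

Aria2 is a dependency of the Otter Node, so it has to be downloaded, extracted, compiled, installed, and added to the environment variables. Downloading and extracting are skipped here; we go straight to compiling. The extracted directory is /usr/local/aria2-1.30.0. Enter it and run: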

cd /usr/local/aria2-1.30.0

./configure

make

make install

Verify the installation:

man aria2c

Set the environment variables on the Linux VM with vi /etc/profile:

export ARIA2_HOME=/usr/local/aria2-1.30.0

export PATH=$PATH:$ARIA2_HOME/bin

Reload the profile:

source /etc/profile

Edit the Aria2 configuration file:

mkdir /etc/aria2/

vi /etc/aria2/aria2.conf

For the configuration content, see: https://www.centos.bz/2018/03/ubuntu%E4%B8%8Baria2%E6%90%AD%E5%BB%BA%E4%B8%8B%E8%BD%BD%E6%9C%BA%EF%BC%9Aaria2%E5%9B%BE%E5%BD%A2%E7%AE%A1%E7%90%86/

https://www.cnblogs.com/LiQingsong/p/10293142.html

Note: aria2.conf was not set up in this walkthrough, which left the Node machines unable to start. This will be revisited when time allows.
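Since a missing or misconfigured Aria2 can keep the Node from starting, a quick pre-flight check before running startup.sh may save some debugging. The sketch below only checks that a command is on PATH; require_cmd is a hypothetical helper, and it does not validate aria2.conf.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the named command is on PATH.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1 -> $(command -v "$1")"
  else
    echo "missing: $1 (check PATH in /etc/profile)" >&2
    return 1
  fi
}

# Report on aria2c without aborting the shell if it is absent.
require_cmd aria2c || true
```

If the check reports aria2c as missing even after `make install`, the PATH entry added to /etc/profile is the first thing worth re-checking.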

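Then go to the Node directory and start the Node service:

/usr/local/node/bin/startup.sh

On Windows, start the Manager by double-clicking startup.bat, then open the admin console at http://${host}:8080/ .

Zookeeper installation

Install the zookeeper cluster on 192.168.1.66, using docker-compose for a quick setup.

Write the cluster definition in docker-compose.yml: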

version: '2'

services:

  zk1:

    image: zookeeper

    restart: always

    container_name: zk1

    ports:

      - "2181:2181"

    environment:

      ZOO_MY_ID: 1

      ZOO_SERVERS: server.1=zk1:2888:3888 server.2=zk2:2888:3888 server.3=zk3:2888:3888

  zk2:

    image: zookeeper

    restart: always

    container_name: zk2

    ports:

      - "2182:2181"

    environment:

      ZOO_MY_ID: 2

      ZOO_SERVERS: server.1=zk1:2888:3888 server.2=zk2:2888:3888 server.3=zk3:2888:3888

  zk3:

    image: zookeeper

    restart: always

    container_name: zk3

    ports:

      - "2183:2181"

    environment:

      ZOO_MY_ID: 3

      ZOO_SERVERS: server.1=zk1:2888:3888 server.2=zk2:2888:3888 server.3=zk3:2888:3888

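Install the tooling and start the containers:

# install docker-compose

yum -y install docker-compose

# from the directory containing docker-compose.yml, start the zk cluster

COMPOSE_PROJECT_NAME=zk_cluster docker-compose up

# in a new terminal window, list the running zk containers

COMPOSE_PROJECT_NAME=zk_cluster docker-compose ps

Installing two MySQL instances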

Install two MySQL container instances on 192.168.1.88.

Master installation

Prepare a my.cnf with the settings Canal needs; ROW-format binlog must be enabled:

[mysqld]

# skip DNS lookups to speed up connections

skip-name-resolve

# enable the binlog

log-bin=mysql-bin

# this one line is enough: use ROW format

binlog-format=ROW

# required for MySQL replication; must not clash with Canal's slaveId

server_id=1

# server character set

character_set_server=utf8

Run the Master container:

docker run -p 3366:3306 --name master -v /usr/local/conf/mysql/master/my.cnf:/etc/mysql/my.cnf -v /usr/local/conf/mysql/master/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -d mysql:5.7

Open a MySQL shell, then create and authorize the Canal account:

# Canal user privileges

CREATE USER canal IDENTIFIED BY 'canal';

GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';

FLUSH PRIVILEGES;

Dest installation

The dest instance is simpler: just run the container, and adjust later if problems come up.

docker run -p 3367:3306 --name dest -e MYSQL_ROOT_PASSWORD=root -d mysql:5.7

Canal installation

Prepare an instance configuration for Canal, instance.properties. Only the Master's data source address needs to be set:

canal.instance.master.address=192.168.1.88:3366

#################################################

## mysql serverId , v1.0.26+ will autoGen

# canal.instance.mysql.slaveId=0

# enable gtid use true/false

canal.instance.gtidon=false

# position info

canal.instance.master.address=192.168.1.88:3366

canal.instance.master.journal.name=

canal.instance.master.position=

canal.instance.master.timestamp=

canal.instance.master.gtid=

# rds oss binlog

canal.instance.rds.accesskey=

canal.instance.rds.secretkey=

canal.instance.rds.instanceId=

# table meta tsdb info

canal.instance.tsdb.enable=true

#canal.instance.tsdb.url=jdbc:mysql://127.0.0.1:3306/canal_tsdb

#canal.instance.tsdb.dbUsername=canal

#canal.instance.tsdb.dbPassword=canal

#canal.instance.standby.address =

#canal.instance.standby.journal.name =

#canal.instance.standby.position =

#canal.instance.standby.timestamp =

#canal.instance.standby.gtid=

# username/password

canal.instance.dbUsername=canal

canal.instance.dbPassword=canal

canal.instance.connectionCharset = UTF-8

# enable druid Decrypt database password

canal.instance.enableDruid=false

#canal.instance.pwdPublicKey=MFwwDQYJKoZIhvcNAQEBBQADSwAwSAJBALK4BUxdDltRRE5/zXpVEVPUgunvscYFtEip3pmLlhrWpacX7y7GCMo2/JM6LeHmiiNdH1FWgGCpUfircSwlWKUCAwEAAQ==

# table regex

canal.instance.filter.regex=.*\\..*

# table black regex

canal.instance.filter.black.regex=

# mq config

canal.mq.topic=example

# dynamic topic route by schema or table regex

#canal.mq.dynamicTopic=mytest1.user,mytest2\\..*,.*\\..*

canal.mq.partition=0

# hash partition config

#canal.mq.partitionsNum=3

#canal.mq.partitionHash=test.table:id^name,.*\\..*

#################################################

Run the Canal container:

# Canal with the configuration file mounted

docker run -p 11111:11111 --name canal -v /usr/local/conf/canal/instance.properties:/home/admin/canal-server/conf/example/instance.properties -d canal/canal-server

At this point Canal, Otter, the Zookeeper cluster, the source MySQL, and the target MySQL are all set up.
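With this many services spread over two machines, it can be worth confirming that each published port is reachable before moving on to the Otter console. The following is a minimal sketch: the function names are hypothetical, and the probe relies on bash's /dev/tcp redirection and the coreutils timeout command, both assumed to be installed.

```shell
#!/bin/sh
# Split "host:port" into its parts using POSIX parameter expansion.
endpoint_host() { printf '%s\n' "${1%:*}"; }
endpoint_port() { printf '%s\n' "${1##*:}"; }

# Succeeds if a TCP connection to host:port opens within 3 seconds.
# Assumes bash (for /dev/tcp) and coreutils timeout are available.
port_open() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Probe every endpoint given as an argument and report the result.
# Returns non-zero if any endpoint is unreachable.
check_endpoints() {
  failed=0
  for ep in "$@"; do
    if port_open "$(endpoint_host "$ep")" "$(endpoint_port "$ep")"; then
      echo "ok    $ep"
    else
      echo "FAIL  $ep"
      failed=1
    fi
  done
  return "$failed"
}
```

For the setup in this article, the call would be `check_endpoints 192.168.1.66:2181 192.168.1.66:2182 192.168.1.66:2183 192.168.1.88:3366 192.168.1.88:3367 192.168.1.88:11111`, using the host ports mapped in the docker commands above. An open port only shows that something is listening; it does not prove the service behind it is healthy.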

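Managing and configuring Otter

The Otter admin console is at http://localhost:8080/ ; the username and password are both admin.

Machine management

zookeeper management

Node management

Configuration management

Data source configuration

Data table configuration

Canal configuration

Master/standby configuration

Whether this configuration is correct remains to be verified.

Synchronization management

Channel management

Pipeline management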

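Wiki reading list

- Home

- Introduction

- Otter scheduling model

- Otter data loading algorithm

- Otter bidirectional loop control

- Otter data consistency

- Otter high availability

- Otter extensibility

- QuickStart

- Manager_QuickStart

- Node_QuickStart

- AdminGuide

- Manager usage guide

- Manager configuration guide

- Related PPT & PDF

- FAQ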

That is all for this article; more details will be added later.