Learning To Detect Unseen Object Classes by Between-Class Attribute Transfer

mark:

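1. This appears to be the code used for the Feature Representations in the paper Learning To Detect Unseen Object Classes by Between-Class Attribute Transfer.

2. Another resource related to the paper above: http://attributes.kyb.tuebingen.mpg.de/. It contains the test data and the classification code, but not the feature extraction code.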

Multiple Kernels for Image Classification

Andrea Vedaldi Manik Varma Varun Gulshan Andrew Zisserman

Overview

vgg-mkl-class is a VGG implementation of a multiple kernel image classifier. It combines dense SIFT, self-similarity, and geometric blur features with the multiple kernel learning of Varma and Ray [1] to obtain very competitive performance on Caltech-101. Download the implementation and data to try it yourself.

- New!

- Pre-computed kernel matrices available for download.

- Version 1.1

- Significantly improved the classification performance of the PHOW features; added experiments comparing the features.

- Version 1.0

- Initial public release.

Citation. If you use this code or data in your experiments, please cite:

Multiple Kernels for Object Detection.

A. Vedaldi, V. Gulshan, M. Varma, and A. Zisserman.

In Proceedings of the International Conference on Computer Vision, 2009.

Download and setup

Here you can download the VGG MKL image classifier implementation and test data. The code and data can be used to reproduce all the experiments we report here. The code is in MATLAB and C and requires a single additional open-source library (VLFeat) to run. It works under Linux (32/64), Windows (32/64), and Mac OS X.

- vgg-mkl-class-1.1.tar.gz

- vgg-mkl-class MATLAB/C implementation [Mac OS X 32/64, Linux 32/64, Windows 32/64]. For convenience, it bundles Varma and Ray's MKL code, Berg's GB code, and Gulshan's SSIM code (see [D2, D3]). (Previous versions: 1.0).

- vlfeat-0.9.6-bin.tar.gz

- VLFeat library [Mac OS X, Linux 32/64, Windows 32/64]. Used for the fast PHOW features and kd-tree matching of the GB features (from www.vlfeat.org).

- caltech-101-prep.tar.gz [~200 MB]

- Scaled and rearranged Caltech-101 dataset compatible with vgg-mkl-class. It is provided for convenience, but can be obtained from the original Caltech-101 data [D1] with a provided script.

- cal101-meta.tar.gz [151 KB]

- cal101-ker-15-1.tar.gz [~900 MB]

- cal101-ker-15-2.tar.gz [~900 MB]

- cal101-ker-15-3.tar.gz [~900 MB]

- cal101-ker-30-1.tar.gz [~1.5 GB]

- cal101-ker-30-2.tar.gz [~1.5 GB]

- cal101-ker-30-3.tar.gz [~1.5 GB]

- Pre-computed kernel matrices. These are provided for convenience since evaluating certain features is quite slow. See further details on the format.

- Download and unpack vlfeat-0.9.6.tar.gz (or a later version). Installing requires including VLFeat in the MATLAB search path, which can be done at run-time with the command vl_setup('noprefix') found in vlfeat/toolbox/. Run vl_demo to verify that the library is correctly installed. Detailed instructions can be found on the VLFeat website.

- Download and unpack vgg-mkl-class-1.0.tar.gz. This will populate a vgg-mkl-class directory.

- Download and unpack caltech-101-prep.tar.gz. Then move the directory caltech-101-prep to vgg-mkl-class/data/caltech-101-prep.

  Note. This archive contains a preprocessed version of the Caltech-101 data and is made available for simplicity. You can obtain the preprocessed data from the original Caltech-101 data by using the provided vgg-mkl-class/drivers/prepCal101.sh script.

- Use MATLAB to run the vgg-mkl-class code. To do this, make sure that VLFeat is in the MATLAB path, change the current directory to vgg-mkl-class, type setup to add vgg-mkl-class to the MATLAB path, and then type cal_demo.

  Compiling the MEX files. The package contains pre-compiled MEX files for Linux, Windows, and Mac OS X. Due to binary compatibility problems, however, these may not work for you. In this case, you can use the compile command to compile the MEX files.

The demo runs a relatively quick test with only four classes. See the experimental section to run the full pipeline and compare the method with the current state of the art.

System details

The system uses the following feature channels:

- Geometric blur (GB). These are the features from [2,6]: First, geometric blur descriptors are computed at representative points of the image. Then the distance between two images is obtained as the average distance of nearest descriptor pairs. Finally, the distance matrix is used to compute an RBF kernel. Remark. The code uses approximate nearest neighbors (based on the randomized kd-trees of [7]) to improve matching speed. This may have an impact on the performance of this descriptor.

- PHOW gray/color. These are the dense visual words proposed in [4]. SIFT features are computed densely at four scales on a regular grid and quantized into 300 visual words (we use VLFeat's optimized implementation, which is about 30× faster than standard SIFT for this case). Spatial histograms with 2×2 and 4×4 subdivisions [3] are then formed. Finally, two corresponding RBF-Chi2 kernels are computed. The color variant concatenates SIFT descriptors computed on the HSV channels.

- Self-similarity (SSIM). These are the features from [5]. Similarly to the PHOW features, descriptors are quantized into 300 visual words, and two spatial histograms and the corresponding RBF-Chi2 kernels are computed. We use Varun Gulshan's implementation.
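To make the RBF-Chi2 kernels used for the PHOW and SSIM histograms concrete, here is a minimal Python sketch. It is not the code shipped in vgg-mkl-class. It assumes the common form K(x, y) = exp(-gamma * chi2(x, y)) with chi2(x, y) = sum_i (x_i - y_i)^2 / (x_i + y_i); the function names and the default choice of gamma (inverse of the mean off-diagonal distance) are illustrative assumptions.

```python
import math


def chi2_distance(h1, h2):
    """Chi-squared distance between two non-negative histograms."""
    total = 0.0
    for a, b in zip(h1, h2):
        if a + b > 0:  # skip bins that are empty in both histograms
            total += (a - b) ** 2 / (a + b)
    return total


def rbf_chi2_kernel(histograms, gamma=None):
    """Kernel matrix K[i][j] = exp(-gamma * chi2(h_i, h_j)).

    If gamma is None it is set to 1 / (mean off-diagonal distance).
    This default is an assumption made for the sketch, not a setting
    taken from the original package.
    """
    n = len(histograms)
    dist = [[chi2_distance(histograms[i], histograms[j]) for j in range(n)]
            for i in range(n)]
    if gamma is None:
        off_diag = [dist[i][j] for i in range(n) for j in range(n) if i != j]
        mean = sum(off_diag) / len(off_diag) if off_diag else 0.0
        gamma = 1.0 / mean if mean > 0 else 1.0
    return [[math.exp(-gamma * d) for d in row] for row in dist]
```

Each spatial histogram (2×2 and 4×4) would be passed through such a function separately, giving the two kernels per channel mentioned above.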

The seven kernels are combined linearly. The weights are determined by the MKL software of Varma and Ray [1]. 102 one-versus-rest SVMs are trained (allowing for a different set of weights for each class), and the classification results are combined by assigning each image to the class that obtains the largest SVM discriminant score.
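The combination step can be sketched as follows. This is an illustrative Python outline, not the MKL solver of [1]: it assumes the kernel weights and the SVM coefficients have already been learned, and the names combine_kernels, discriminant, and predict are hypothetical.

```python
def combine_kernels(kernels, weights):
    """Weighted sum of kernel matrices (all of the same shape)."""
    n_rows, n_cols = len(kernels[0]), len(kernels[0][0])
    return [[sum(w * K[i][j] for K, w in zip(kernels, weights))
             for j in range(n_cols)]
            for i in range(n_rows)]


def discriminant(test_kernels, weights, coeffs, bias):
    """Scores of one one-versus-rest SVM on all test images.

    test_kernels[k][i][j] is kernel k between test image i and training
    image j; coeffs[j] stands for the signed dual coefficient of training
    image j (alpha_j * y_j), assumed to be given.
    """
    K = combine_kernels(test_kernels, weights)
    return [sum(c * k for c, k in zip(coeffs, row)) + bias for row in K]


def predict(scores_per_class):
    """Assign each image to the class with the largest score.

    scores_per_class[c][i] is the score of classifier c on image i.
    """
    n_classes = len(scores_per_class)
    n_images = len(scores_per_class[0])
    return [max(range(n_classes), key=lambda c: scores_per_class[c][i])
            for i in range(n_images)]
```

Because each class has its own weight vector, discriminant would be called once per class with that class's weights, and the resulting score lists passed together to predict.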

The training data is augmented by the use of virtual samples (jittering).

Experiments on Caltech-101

vgg-mkl-class is evaluated on the Caltech-101 dataset under common training and testing settings.

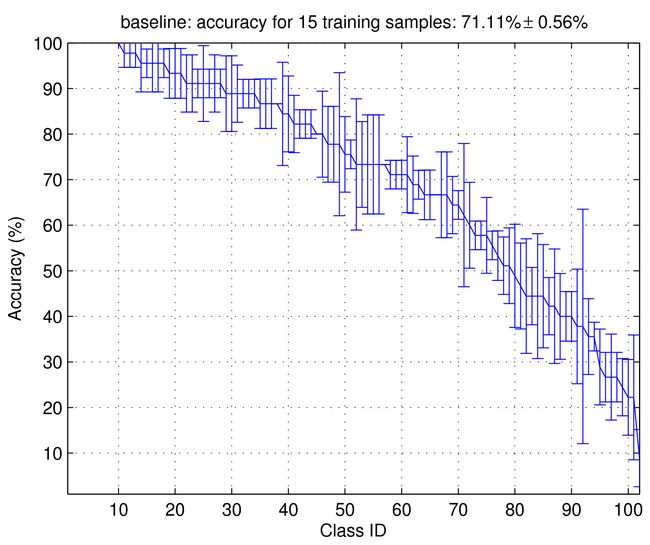

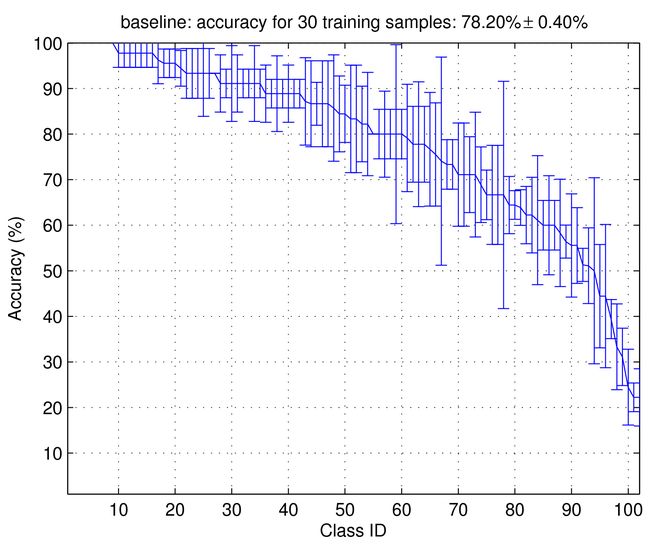

The system is trained with either 15 or 30 training images per category and tested on 15 images per category (except for categories with fewer than 45 images). Evaluation includes all 102 classes (including BACKGROUND_GOOGLE and FACES_EASY, which some authors exclude).
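A per-category split of this kind might look like the sketch below. It assumes that categories too small to supply 15 test images simply contribute whatever remains after the training images are taken; the function name and the seeding scheme are illustrative, not taken from the package.

```python
import random


def split_category(images, n_train, n_test=15, seed=0):
    """Randomly split one category into training and test images.

    If fewer than n_train + n_test images are available, the test set
    is whatever is left after the training images are taken
    (an assumption of this sketch).
    """
    rng = random.Random(seed)
    shuffled = list(images)
    rng.shuffle(shuffled)
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    return train, test
```

Using a different seed for each of the repeated runs gives independent random splits.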

The average accuracy is 71.1 ± 0.6 % for 15 training examples and 78.2 ± 0.4 % for 30 training examples.

The figures show the accuracy obtained for each class (average and standard deviation over three runs). The 102 categories are sorted by decreasing accuracy (a detailed listing is available for 15 training examples and 30 training examples).
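The reported numbers can be summarized with a short computation like the one below. It is a sketch under two assumptions that the text does not state explicitly: that the average accuracy is the mean of the per-class accuracies (a common Caltech-101 convention), and that the ± value is the sample standard deviation over the runs.

```python
import statistics


def mean_class_accuracy(true_labels, predicted_labels):
    """Average of the per-class accuracies."""
    per_class = {}  # label -> (number correct, number of test images)
    for t, p in zip(true_labels, predicted_labels):
        correct, total = per_class.get(t, (0, 0))
        per_class[t] = (correct + (t == p), total + 1)
    return sum(c / n for c, n in per_class.values()) / len(per_class)


def summarize_runs(accuracies):
    """Mean and sample standard deviation over repeated runs."""
    return statistics.mean(accuracies), statistics.stdev(accuracies)
```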

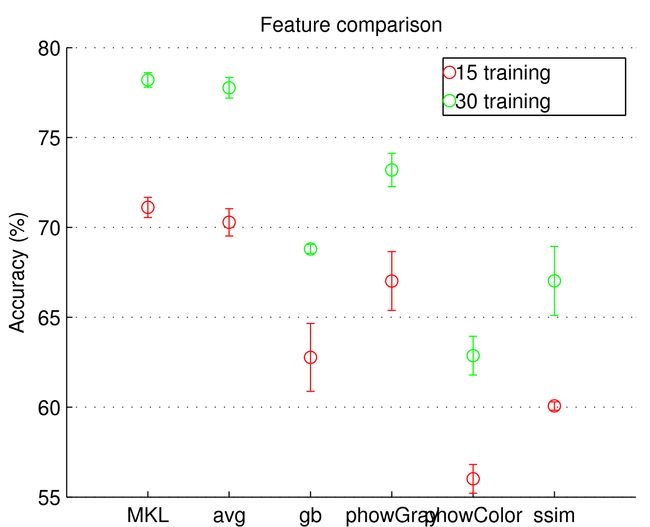

The figure above compares the performance of MKL, simple averaging of the kernels, and the single feature channels. The following table compares the method with other published results on Caltech-101:

| Method | 15 train (%) | 30 train (%) |
| --- | --- | --- |
| LP-beta (*). P. Gehler and S. Nowozin, ICCV'09. | 74.6 ± 1.0 | 82.1 ± 0.3 |
| Group-sensitive multiple kernel learning for object categorization. J. Yang, Y. Li, Y. Tian, L. Duan, and W. In Proc. ICCV, 2009. | 73.2 | 84.3 |
| Bayesian localized multiple kernel learning. M. Christoudias, R. Urtasun, and T. Darrell. Technical report, UC Berkeley, 2009. | 73.0 ± 1.3 | NA |
| In defense of nearest-neighbor based image classification. O. Boiman, E. Shechtman, and M. Irani. In Proc. CVPR, 2008. | 72.8 | ≈79 |
| This method. | 71.1 ± 0.6 | 78.2 ± 0.4 |
| On feature combination for multiclass object classification. P. Gehler and S. Nowozin. In Proc. ICCV, 2009. | 70.4 ± 0.8 | 77.7 ± 0.3 |
| Recognition using regions. C. Gu, J. J. Lim, P. Arbeláez, and J. Malik. In Proc. CVPR, 2009. | 65.0 | 73.1 |
| SVM-KNN: Discriminative nearest neighbor classification for visual category recognition. H. Zhang, A. C. Berg, M. Maire, and J. Malik. In Proc. CVPR, 2006. | 59.06 ± 0.56 | 66.23 ± 0.48 |

Reproducing the experiments

To reproduce the experiments, use drivers/cal_all_exps.m. This runs the algorithm on the full Caltech-101 data three times, for both 15 and 30 training examples. Once this is done, you can use drivers/cal_all_exps_collect.m to visualize the final results.

Expect this process to take several days on a standard CPU.

References

[1] M. Varma and D. Ray. In Proc. ICCV, 2007.

[2] H. Zhang, A. C. Berg, M. Maire, and J. Malik. In Proc. CVPR, 2006.

[3] S. Lazebnik, C. Schmid, and J. Ponce. In Proc. CVPR, 2006.

[4] A. Bosch, A. Zisserman, and X. Munoz. In Proc. CIVR, 2007.

[5] E. Shechtman and M. Irani. In Proc. CVPR, 2007.

[6] A. C. Berg, T. L. Berg, and J. Malik. In Proc. CVPR, 2005.

[7] M. Muja and D. G. Lowe. In Proc. VISAPP, 2009.

Acknowledgements

We would like to thank Alex Berg for contributing his Geometric Blur code.

This work is funded by the ONR and ERC.